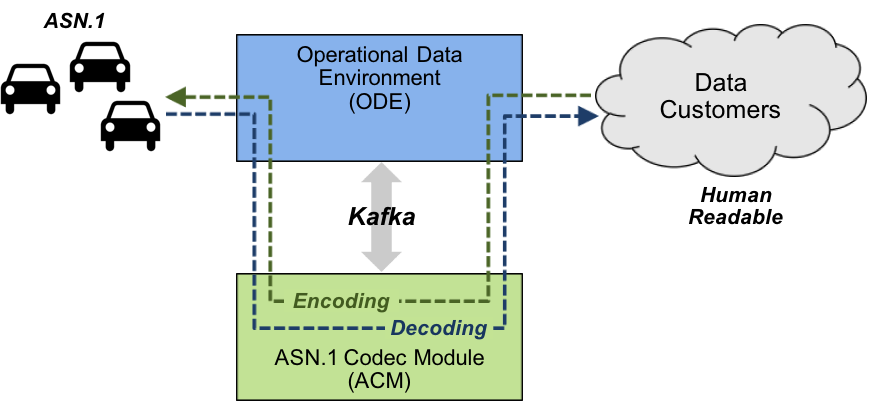

The ASN.1 Codec Module (ACM) processes Kafka data streams that present ASN.1 data wrapped in ODE Metadata. It can perform one of two functions depending on how it is started:

- Decode: This function is used to process messages from the connected vehicle environment to ODE subscribers. Specifically, the ACM extracts binary data from consumed messages (ODE Metadata Messages) and decodes the binary ASN.1 data into a structure that is subsequently encoded into an alternative format more suitable for ODE subscribers (currently XML using XER).

- Encode: This function is used to process messages from the ODE to the connected vehicle environment. Specifically, the ACM extracts human-readable data from ODE Metadata and decodes it into a structure that is subsequently encoded into ASN.1 binary data.

README.md

- Release Notes

- Getting Involved

- Documentation

- Generating C Files from ASN.1 Definitions

- Confluent Cloud Integration

Other Documents

The current version and release history of the asn1_codec: asn1_codec Release Notes

This project is sponsored by the U.S. Department of Transportation and supports Operational Data Environment data type conversions. Here are some ways you can start getting involved in this project:

- Pull the code and check it out! The ASN.1 Codec project uses the Pull Request Model.

- GitHub has instructions for setting up an account and getting started with repositories.

- If you would like to improve this code base or the documentation, fork the project and submit a pull request.

- If you find a problem with the code or the documentation, please submit an issue.

This project uses the Pull Request Model. This involves the following project components:

- The usdot-jpo-ode organization project's master branch.

- A personal GitHub account.

- A fork of a project release tag or master branch in your personal GitHub account.

A high-level overview of our model and these components is as follows. All work will be submitted via pull requests. Developers will work on branches on their personal machines (local clients), push these branches to their personal GitHub repos, and issue a pull request to the organization asn1_codec project. One of the project's main developers must review the pull request and merge it or, if there are issues, discuss them with the submitter. This ensures that the developers have a better understanding of the code base and that problems are caught before they enter master. The following process should be followed:

- If you do not have one yet, create a personal (or organization) account on GitHub (assume your account name is <your-github-account-name>).

- Log into your personal (or organization) account.

- Fork asn1_codec into your personal GitHub account.

- On your computer (local client), clone the master branch from your GitHub account:

$ git clone https://github.com/<your-github-account-name>/asn1_codec.git

This documentation is in the README.md file. Additional information can be found using the links in the Table of Contents. All stakeholders are invited to provide input to these documents. Stakeholders should direct all input on this document to the JPO Product Owner at DOT, FHWA, or JPO.

Code documentation can be generated using Doxygen by following the commands below:

$ sudo apt install doxygen

$ cd <install root>/asn1_codec

$ doxygen

The documentation is in HTML and is written to the <install root>/asn1_codec/docs/html directory. Open index.html in a browser.

Check here for instructions on how to generate C files from ASN.1 definitions: ASN.1 C File Generation

This should only be necessary if the ASN.1 definitions change. The generated files are already included in the repository.

Rather than using a local Kafka instance, the ACM can connect to a Kafka instance hosted by Confluent Cloud, authenticating via SASL.

- The DOCKER_HOST_IP environment variable is used to communicate with the bootstrap server that the instance of Kafka is running on.

- The KAFKA_TYPE environment variable specifies what type of Kafka connection will be attempted and is used to check whether Confluent should be used.

- The CONFLUENT_KEY and CONFLUENT_SECRET environment variables are used to authenticate with the bootstrap server.

- DOCKER_HOST_IP must be set to the bootstrap server address (excluding the port).

- KAFKA_TYPE must be set to "CONFLUENT".

- CONFLUENT_KEY must be set to the API key being used for Confluent Cloud.

- CONFLUENT_SECRET must be set to the API secret being used for Confluent Cloud.

A docker-compose file (docker-compose-confluent-cloud.yml) is provided that passes the above environment variables into the container it creates. This file does not spin up a local Kafka instance, since none is required.
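Before starting the container, it can help to confirm that the four variables are set as described above. The following is a minimal sketch, not part of the ACM: the function name `check_confluent_env` and its messages are hypothetical, and the port check assumes the bootstrap address is a hostname or IPv4 address.

```shell
# Hypothetical preflight check for the Confluent Cloud settings.
# Returns 0 when all four variables look usable, 1 otherwise.
check_confluent_env() {
    acm_env_status=0
    for acm_env_name in DOCKER_HOST_IP KAFKA_TYPE CONFLUENT_KEY CONFLUENT_SECRET; do
        # Look up the value of the variable whose name is in $acm_env_name.
        eval "acm_env_value=\${$acm_env_name:-}"
        if [ -z "$acm_env_value" ]; then
            echo "missing: $acm_env_name" >&2
            acm_env_status=1
        fi
    done
    # KAFKA_TYPE must be exactly CONFLUENT for this setup.
    if [ "${KAFKA_TYPE:-}" != "CONFLUENT" ]; then
        echo "KAFKA_TYPE must be set to CONFLUENT" >&2
        acm_env_status=1
    fi
    # The bootstrap server address is given without a port.
    case "${DOCKER_HOST_IP:-}" in
        *:*)
            echo "DOCKER_HOST_IP must not include a port" >&2
            acm_env_status=1
            ;;
    esac
    return "$acm_env_status"
}

# Example use (after exporting the four variables):
#   check_confluent_env && docker-compose -f docker-compose-confluent-cloud.yml up
```

Running the check first makes a misconfigured variable show up as a clear message rather than as a connection failure inside the container.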

This has only been tested with Confluent Cloud, but in principle any SASL-authenticated Kafka broker should be reachable using this method.
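To illustrate what a SASL-authenticated connection involves on the client side, the sketch below prints librdkafka-style client properties built from the same environment variables. It is an illustration under assumptions, not the ACM's actual configuration: the helper name `print_sasl_properties` is hypothetical, and the default port 9092, the `SASL_SSL` protocol, and the `PLAIN` mechanism are assumed values that a given broker may not use.

```shell
# Hypothetical helper: print client properties for a SASL-authenticated broker.
# Optional first argument: broker port (assumed default 9092).
print_sasl_properties() {
    sasl_port="${1:-9092}"
    # ${VAR:?message} aborts with the message if the variable is unset or empty.
    printf 'bootstrap.servers=%s:%s\n' "${DOCKER_HOST_IP:?DOCKER_HOST_IP is not set}" "$sasl_port"
    printf 'security.protocol=SASL_SSL\n'
    printf 'sasl.mechanisms=PLAIN\n'
    printf 'sasl.username=%s\n' "${CONFLUENT_KEY:?CONFLUENT_KEY is not set}"
    printf 'sasl.password=%s\n' "${CONFLUENT_SECRET:?CONFLUENT_SECRET is not set}"
}

# Example use:
#   print_sasl_properties > client.properties
```

For a broker other than Confluent Cloud, the port and SASL mechanism would need to match that broker's setup.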