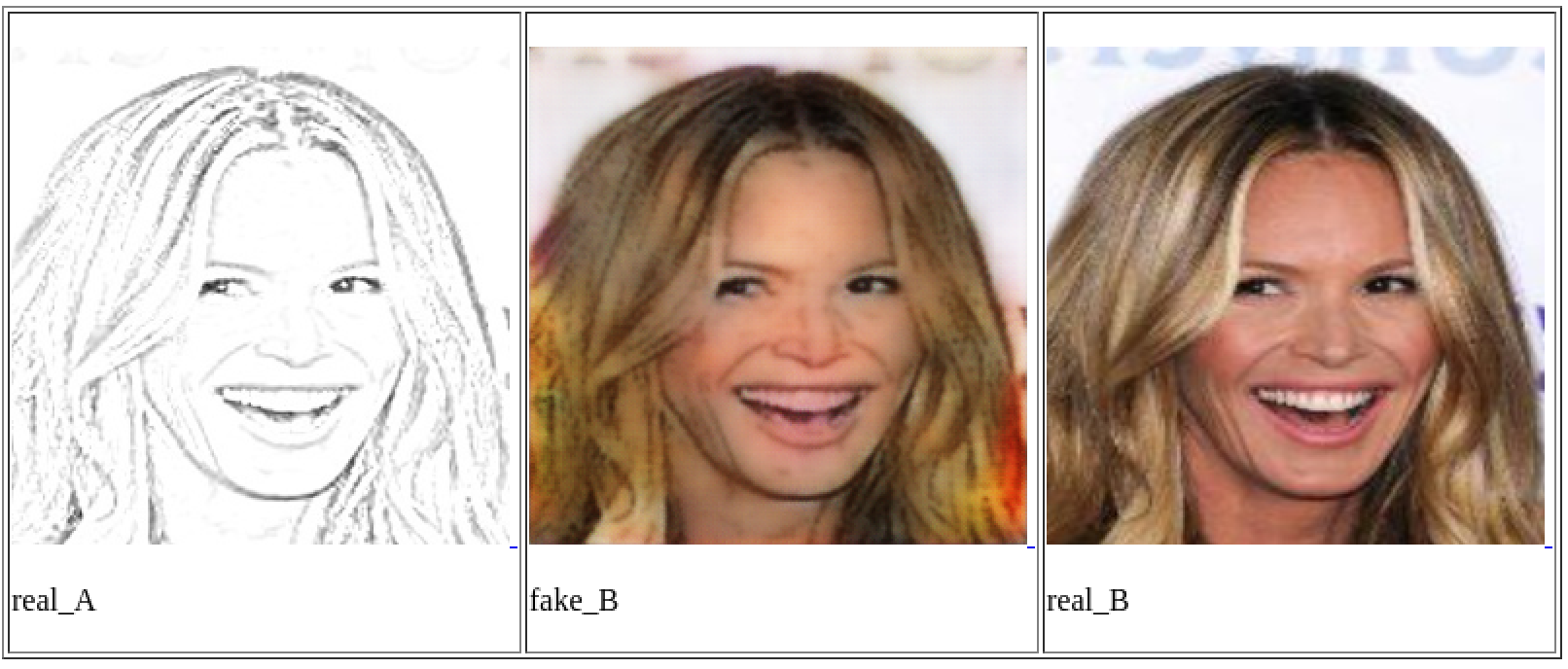

The goal of this project is to use Machine Learning to transform police sketches into realistic images.

The overall motivation is to help the police better identify and catch the bad guys faster.

- Create a folder in `sketch2pix/dataset`, i.e. `faces-edge1`
- Within that folder create 3 folders: `edge`, `face`, `face2edge`
- Within each of those 3 folders create 2 folders: `test`, `train`
- Put your output images in the `face` folder and split them however you want into the `test` and `train` folders. It's recommended you train on 70% of your images and test on the other 30%
- Use the `sketch.sh` script to generate the edge versions (aka inputs) of your `face` images
  - in `sketch2pix/dataset` run `./sketch.sh --image-path /path/to/image_folder --face-path /path/to/face_folder --sketch-path /path/to/sketch_folder`
  - `--sketch-path` should be the `train` or `test` folder in `edge`
- Use the `combine.sh` script to generate the combination images needed for `pix2pix` to train and test
  - in `sketch2pix/dataset` run `./combine.sh --path faces-edge1`
  - This command will output to the `face2edge` folder
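The folder layout and the 70/30 split described above can also be scripted. The following is a minimal sketch and not part of the repository: the function names `make_layout` and `split_faces`, the fixed random seed, and the choice to copy files rather than move them are all assumptions made for illustration.

```python
import random
import shutil
from pathlib import Path

SUBSETS = ("edge", "face", "face2edge")
SPLITS = ("test", "train")


def make_layout(dataset_dir):
    """Create <dataset_dir>/{edge,face,face2edge}/{test,train}."""
    root = Path(dataset_dir)
    for subset in SUBSETS:
        for split in SPLITS:
            (root / subset / split).mkdir(parents=True, exist_ok=True)
    return root


def split_faces(image_dir, dataset_dir, train_fraction=0.7, seed=0):
    """Copy images into face/train and face/test (70/30 by default).

    Returns the number of images placed in (train, test).
    """
    root = make_layout(dataset_dir)
    images = sorted(p for p in Path(image_dir).iterdir() if p.is_file())
    # Fixed seed so the split is reproducible (an assumption, not a requirement).
    random.Random(seed).shuffle(images)
    n_train = round(len(images) * train_fraction)
    for i, src in enumerate(images):
        split = "train" if i < n_train else "test"
        shutil.copy2(src, root / "face" / split / src.name)
    return n_train, len(images) - n_train
```

For example, `split_faces("/path/to/image_folder", "sketch2pix/dataset/faces-edge1")` would fill `face/train` and `face/test`, after which `sketch.sh` and `combine.sh` can be run as described.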

Run

> python sketch2pix/dataset/PencilSketch/gen_sketch_and_gen_resized_face.py /path/to/image_folder /path/to/face_folder /path/to/sketch_folder

In sketch2pix

Run

./train.sh --data-root ../dataset/celebfaces/face2edge --name edge2face_generation --direction BtoA --torch /root/torch/install/bin/th

Required parameters:

--data-root: the face2edge folder of your dataset

--name : name of experiment

--direction : BtoA or AtoB

--torch : path to th bin

As the model trains, checkpoints will be stored in:

sketch2pix/pix2pix/checkpoints/{--name}

where --name is the value passed into the scripts from above.
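If you launch training from a Python script, the command line and the checkpoint location can be assembled from the same parameters. This is a rough sketch under assumptions: `train_command` and `checkpoint_dir` are hypothetical helper names, and the only validation performed is that `--direction` is one of the two documented values.

```python
import shlex
from pathlib import Path

VALID_DIRECTIONS = ("AtoB", "BtoA")


def train_command(data_root, name, direction, torch_bin):
    """Build the argument list for ./train.sh from the required parameters."""
    if direction not in VALID_DIRECTIONS:
        raise ValueError(f"--direction must be one of {VALID_DIRECTIONS}, got {direction!r}")
    return [
        "./train.sh",
        "--data-root", str(data_root),
        "--name", name,
        "--direction", direction,
        "--torch", str(torch_bin),
    ]


def checkpoint_dir(name, repo_root="sketch2pix"):
    """Directory where checkpoints for experiment `name` are stored."""
    return Path(repo_root) / "pix2pix" / "checkpoints" / name


cmd = train_command(
    "../dataset/celebfaces/face2edge",
    "edge2face_generation",
    "BtoA",
    "/root/torch/install/bin/th",
)
print(shlex.join(cmd))  # the command as you would type it in a shell
print(checkpoint_dir("edge2face_generation"))
```

The list returned by `train_command` could then be passed to `subprocess.run(cmd, cwd="sketch2pix", check=True)`, assuming the script is run from the `sketch2pix` folder as described above.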

In sketch2pix

Run

./test.sh --data-root ../dataset/celebfaces/face2edge --name edge2face_generation --direction BtoA --torch /root/torch/install/bin/th [--custom_image_dir my_named_gen]

Required parameters:

--data-root: the face2edge folder of your dataset

--name : should be the same as what was used in the train script

--direction : BtoA or AtoB

--torch : path to th bin

Optional parameters:

--custom_image_dir : name of the directory you want results outputted to. Default uses the model name if not supplied.

After testing, your results are stored in

sketch2pix/pix2pix/results/{--name}/latest_net_G_test/index.html

where --name is the value passed into the scripts from above.

Open that html file in a browser to examine the results of your test.
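Locating and opening the results page can be automated with the standard library. The sketch below is illustrative only: `results_index` and `open_results` are hypothetical helper names, and the path is built from the default location given above. If you passed `--custom_image_dir`, the results folder may be named differently, which this sketch does not handle.

```python
import webbrowser
from pathlib import Path


def results_index(name, repo_root="sketch2pix"):
    """Path to the results page for experiment `name` (default location)."""
    return (
        Path(repo_root) / "pix2pix" / "results" / name
        / "latest_net_G_test" / "index.html"
    )


def open_results(name, repo_root="sketch2pix"):
    """Open the results page in the default browser, if it exists."""
    index = results_index(name, repo_root).resolve()
    if not index.is_file():
        raise FileNotFoundError(f"No results page at {index}; run test.sh first")
    # as_uri() needs an absolute path, which resolve() provides.
    return webbrowser.open(index.as_uri())
```

Calling `open_results("edge2face_generation")` from the folder that contains `sketch2pix` would open the page for the example experiment used above.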

On how to improve it

- Realistic sketch generation that matches pencil sketches [done]

- More training data [done]

- Using a face segmenter to separate the face from the background, so the input photo is just a face on a white background. This way, the generated photos will also have a white background, and the sketches will not include the background either.

- Using separate models for men and women, and separate models for different hair colours. All achievable by running through internal FaceSDK

- Large-scale CelebFaces Attributes

- Labeled Faces In The Wild

- CUHK Face Sketch Database

- CUHK Face Sketch FERET Database

- XM2VTS data set

- SCface - Surveillance Cameras Face Database

- Face Recognition Databases

- adiencedb - homepage

- User1m - Script development and Pix2Pix model training / testing, Web App

- koul - Seed idea

- wtam, tikyau, getCloudy - Help with data generation

MIT