Each folder contains a short, standalone main.py (~100 lines of Tensorflow) that implements a neural-network-based model for Named Entity Recognition (NER) using tf.estimator and tf.data.

These implementations are simple, efficient, and state-of-the-art, in the sense that they do at least as well as the results reported in the papers. The best model achieves an average F1 score of 91.21. To my knowledge, existing implementations available on the web are convoluted, outdated and not always accurate (including my previous work). This repo is an attempt to fix this, in the hope that it will enable people to test and validate new ideas quickly.

The script lstm_crf/main.py can also be seen as a simple introduction to Tensorflow high-level APIs tf.estimator and tf.data applied to Natural Language Processing.

You need python3 -- If you haven't switched yet, do it.

You need to install tf_metrics (multi-class precision, recall and f1 metrics for Tensorflow).

git clone https://github.com/guillaumegenthial/tf_metrics.git

cd tf_metrics

pip install .

Follow the data/example.

- For

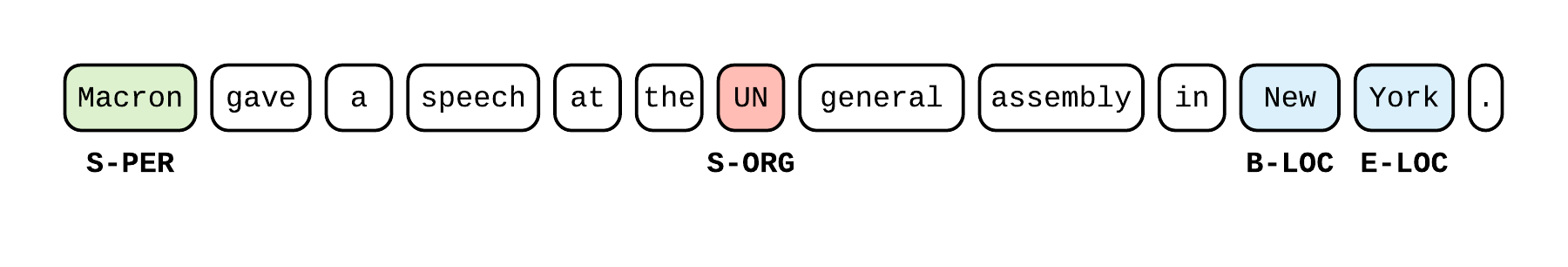

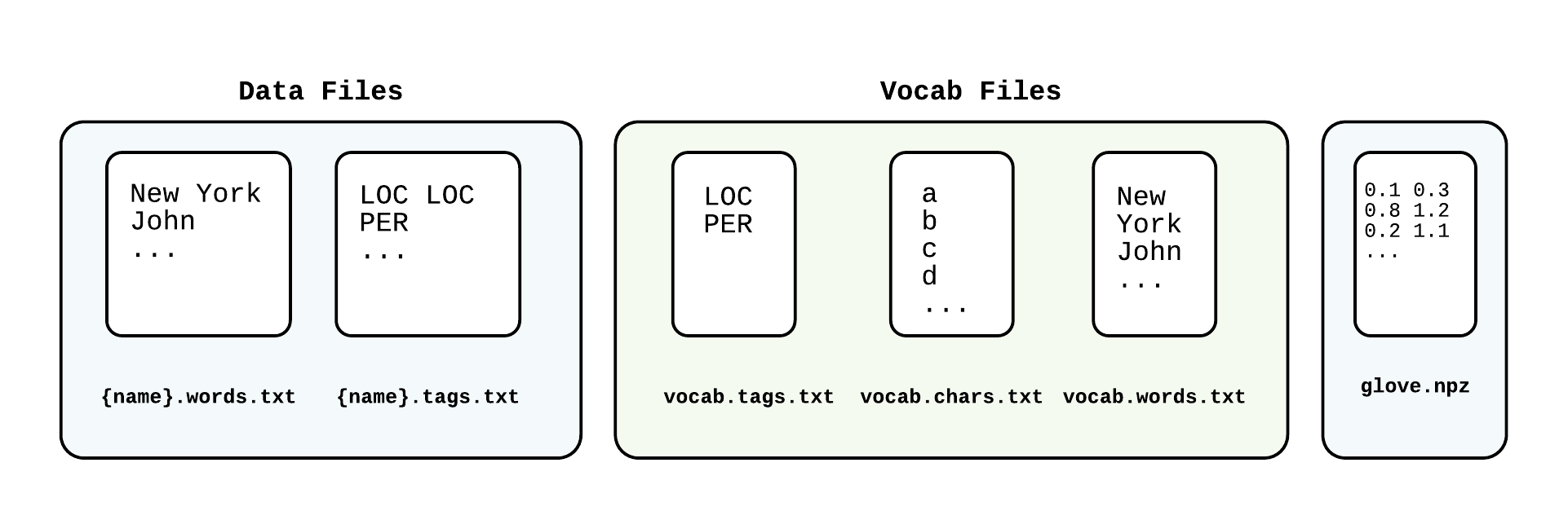

namein{train, testa, testb}, create files{name}.words.txtand{name}.tags.txtthat contain one sentence per line, each word / tag separated by space. I recommend using theIOBEStagging scheme. - Create files

vocab.words.txt,vocab.tags.txtandvocab.chars.txtthat contain one token per line. - Create a

glove.npzfile containing one arrayembeddingsof shape(size_vocab_words, 300)using GloVe 840B vectors andnp.savez_compressed.
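If your tags are not yet in IOBES, a conversion along the following lines may help when writing the {name}.tags.txt files. This is only a sketch and not part of the repo: the function name iob_to_iobes is hypothetical, and it assumes the input is IOB2 (every entity starts with a B- tag), which may not match your raw data.

```python
def iob_to_iobes(tags):
    """Convert one sentence of IOB2 tags (B-X, I-X, O) to IOBES tags.

    Assumption: the input is well-formed IOB2, i.e. every entity
    starts with a B- tag.
    """
    iobes = []
    for i, tag in enumerate(tags):
        if tag == "O":
            iobes.append(tag)
            continue
        prefix, label = tag.split("-", 1)
        next_tag = tags[i + 1] if i + 1 < len(tags) else "O"
        # The entity continues only if the next tag is I- with the same label.
        continues = next_tag == "I-" + label
        if prefix == "B":
            # Single-token entities become S-, others keep B-.
            iobes.append(("B-" if continues else "S-") + label)
        elif prefix == "I":
            # The last token of a multi-token entity becomes E-.
            iobes.append(("I-" if continues else "E-") + label)
        else:
            raise ValueError("Unexpected tag: " + tag)
    return iobes


# Illustrative sentence (made up for this example), one word / tag per token.
words = "John lives in New York".split()
tags = ["B-PER", "O", "O", "B-LOC", "I-LOC"]
print(" ".join(words))               # line for {name}.words.txt
print(" ".join(iob_to_iobes(tags)))  # line for {name}.tags.txt
```

Each printed line has the same number of space-separated tokens, which is what the one-sentence-per-line format expects.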

An example of scripts to build the vocab and the glove.npz files from the {name}.words.txt and {name}.tags.txt files is provided in data/example. See

If you just want to get started, once you have created your {name}.words.txt and {name}.tags.txt files, simply do

cd data/example

make download-glove

make build

(These commands will build the example dataset)

Note that the example dataset is here for debugging purposes only and won't be of much use for training an actual model.

Once you've produced all the required data files, simply pick one of the main.py scripts. Then, modify the DATADIR variable at the top of main.py.

To train, evaluate and write predictions to file, run

cd models/lstm_crf

python main.py

(These commands will train a bi-LSTM + CRF on the example dataset if you haven't changed DATADIR in the main.py.)

These models took inspiration from the following papers

- Bidirectional LSTM-CRF Models for Sequence Tagging by Huang, Xu and Yu

- Neural Architectures for Named Entity Recognition by Lample et al.

- End-to-end Sequence Labeling via Bi-directional LSTM-CNNs-CRF by Ma and Hovy

You can also read this blog post.

Word-vectors are not retrained to avoid any undesirable shift (explanation in these CS224N notes).

The models are tested on the CoNLL2003 shared task.

Training times are indicative only. They were obtained on a 2016 13-inch MacBook Pro (3.3 GHz Intel Core i7).

For each model, we run 5 experiments

- Train on train only
- Early stopping on testa
- Select best of 5 on the performance on testa (token-level F1)
- Report F1 score mean and standard deviation (entity-level F1 from the official conlleval script)
- Select best on testb for reference (but shouldn't be used for comparison as this is just overfitting on the final test set)
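The protocol above can be sketched as a small aggregation step over the 5 runs. This is not the script used for the tables below: the function name summarize, the dict layout, and the scores are placeholders, and the choice of the population standard deviation (statistics.pstdev) is an assumption, since the source does not say which estimator was used.

```python
from statistics import mean, pstdev


def summarize(runs):
    """Aggregate several runs of one model.

    runs: list of dicts with a 'testa' score (used for selection)
    and a 'testb' score (used for reporting), one dict per run.
    """
    # Model selection only looks at the development set (testa).
    selected = max(runs, key=lambda run: run["testa"])
    testb_scores = [run["testb"] for run in runs]
    return {
        "best_testb": selected["testb"],       # "best" row
        "mean_testb": mean(testb_scores),      # "mean ± std" row
        "std_testb": pstdev(testb_scores),     # assumption: population std
        "abs_best_testb": max(testb_scores),   # "abs. best" row, reference only
    }


# Placeholder scores, NOT results from this repo.
runs = [
    {"testa": 93.0, "testb": 90.0},
    {"testa": 94.0, "testb": 91.0},
    {"testa": 93.5, "testb": 92.0},
]
summary = summarize(runs)
print(summary)
```

In this toy input the run selected on testa is not the one with the highest testb score, which is why the "abs. best" number should only be read as a reference point.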

In addition, we run 5 other experiments, keeping an Exponential Moving Average (EMA) of the weights (used for evaluation) and report the best F1, mean / std.
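For readers unfamiliar with the technique, an EMA of the weights keeps a "shadow" copy of each parameter that is updated after every training step and used in place of the raw weights at evaluation time. The sketch below shows the update rule in plain Python with scalar weights; it is not the code used in main.py, and the class name WeightEMA and the decay value are assumptions made for illustration.

```python
class WeightEMA:
    """Keeps an exponential moving average (shadow copy) of named weights."""

    def __init__(self, weights, decay=0.999):
        # decay=0.999 is an illustrative default, not a value from the repo.
        if not 0.0 <= decay < 1.0:
            raise ValueError("decay must be in [0, 1)")
        self.decay = decay
        # Start the shadow copies from the initial weights.
        self.shadow = dict(weights)

    def update(self, weights):
        """Call after each training step with the current weights."""
        for name, value in weights.items():
            self.shadow[name] = (
                self.decay * self.shadow[name] + (1.0 - self.decay) * value
            )


# Toy usage with a single scalar weight and a small decay.
ema = WeightEMA({"w": 0.0}, decay=0.5)
for step_value in (1.0, 1.0):
    ema.update({"w": step_value})
print(ema.shadow["w"])  # averaged value, used for evaluation instead of "w"
```

A decay closer to 1 makes the shadow weights change more slowly, which smooths out the noise of individual training steps.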

As you can see, there's no clear statistical evidence of which of the 2 character-based models is the best. EMA seems to help most of the time. Also, considering the complexity of the models and the relatively small gap in performance (0.6 F1), using the lstm_crf model is probably a safe bet for most of the concrete applications.

Architecture

- GloVe 840B vectors

- Bi-LSTM

- CRF

Related Paper Bidirectional LSTM-CRF Models for Sequence Tagging by Huang, Xu and Yu

Training time ~ 20 min

| | train | testa | testb | Paper, testb |
|---|---|---|---|---|
| best | 98.45 | 93.81 | 90.61 | 90.10 |
| best (EMA) | 98.82 | 94.06 | 90.43 | |
| mean ± std | 98.85 ± 0.22 | 93.68 ± 0.12 | 90.42 ± 0.10 | |
| mean ± std (EMA) | 98.71 ± 0.47 | 93.81 ± 0.24 | 90.50 ± 0.21 | |
| abs. best | | | 90.61 | |
| abs. best (EMA) | | | 90.75 | |

Architecture

- GloVe 840B vectors

- Chars embeddings

- Chars bi-LSTM

- Bi-LSTM

- CRF

Related Paper Neural Architectures for Named Entity Recognition by Lample et al.

Training time ~ 35 min

| | train | testa | testb | Paper, testb |
|---|---|---|---|---|
| best | 98.81 | 94.36 | 91.02 | 90.94 |
| best (EMA) | 98.73 | 94.50 | 91.14 | |
| mean ± std | 98.83 ± 0.27 | 94.02 ± 0.26 | 91.01 ± 0.16 | |
| mean ± std (EMA) | 98.51 ± 0.25 | 94.20 ± 0.28 | 91.21 ± 0.05 | |
| abs. best | | | 91.22 | |
| abs. best (EMA) | | | 91.28 | |

Architecture

- GloVe 840B vectors

- Chars embeddings

- Chars 1d convolution and max-pooling

- Bi-LSTM

- CRF

Related Paper End-to-end Sequence Labeling via Bi-directional LSTM-CNNs-CRF by Ma and Hovy

Training time ~ 35 min

| | train | testa | testb | Paper, testb |
|---|---|---|---|---|
| best | 99.16 | 94.53 | 91.18 | 91.21 |
| best (EMA) | 99.44 | 94.50 | 91.17 | |
| mean ± std | 98.86 ± 0.30 | 94.10 ± 0.26 | 91.20 ± 0.15 | |
| mean ± std (EMA) | 98.67 ± 0.39 | 94.29 ± 0.17 | 91.13 ± 0.11 | |
| abs. best | | | 91.42 | |
| abs. best (EMA) | | | 91.22 | |