```bash
# Clone the repository
git clone git@github.com:aboros98/synth.git
cd synth

# (Optional) Create a virtual environment
python3 -m venv venv
source venv/bin/activate  # On Windows use `venv\Scripts\activate`

# Install the requirements
pip install -r requirements.txt
```

```bash
# Set the API key for the engine(s) you use:
# OPENAI_API_KEY, TOGETHER_API_KEY, ANTHROPIC_API_KEY or MISTRAL_API_KEY
export OPENAI_API_KEY=<your_api_key>
```

```yaml
# Example configuration using the OpenAI engine:
strong_model:
  engine: openai
  model: gpt-4
  seed: 42
  generation_config:
    max_tokens: 4096
    temperature: 0.7
    top_p: 0.9

target_model:
  engine: openai
  model: gpt-3.5-turbo
  seed: 42
  generation_config:
    max_tokens: 4096
    temperature: 0.7
    top_p: 0.9

judge_model:
  engine: openai
  model: gpt-4o
  seed: 42
  generation_config:
    max_tokens: 512
    temperature: 0.0
    top_p: 1.0

pipeline:
  n_instructions: 2
  n_rubrics: 4
  n_iterations: 4
  margin_threshold: 0.5
  output_path: <path_to_output>
  dataset_path: <local_path_or_huggingface_dataset>
```

```bash
python main.py -c <path_to_config_file> -p <number_of_parallel_processes>
```
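A missing API key or a typo in an engine name is easy to catch before launching `main.py`. The sketch below is a hypothetical pre-flight check, not part of the repository: it takes the configuration as a plain dict (such as the one PyYAML's `yaml.safe_load` would return for the file above), checks that the model sections exist and that each engine's API key is set, and checks that `margin_threshold` is numeric. The function name `check_config` is illustrative, `judge_model` is treated as optional because the judge step is optional, and the engine names other than `openai` (`together`, `anthropic`, `mistral`) are assumptions inferred from the environment variable names.

```python
import os
from typing import List, Mapping, Optional

# Hypothetical engine-name -> API-key-variable mapping. Only "openai" appears
# in the example config; "together", "anthropic" and "mistral" are guesses.
ENGINE_KEYS = {
    "openai": "OPENAI_API_KEY",
    "together": "TOGETHER_API_KEY",
    "anthropic": "ANTHROPIC_API_KEY",
    "mistral": "MISTRAL_API_KEY",
}
REQUIRED_SECTIONS = ("strong_model", "target_model")
OPTIONAL_SECTIONS = ("judge_model",)  # the final judge ranking step is optional


def check_config(config: dict, env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return human-readable problems found in a parsed config (empty if none)."""
    env = os.environ if env is None else env
    problems: List[str] = []
    for section in REQUIRED_SECTIONS + OPTIONAL_SECTIONS:
        model_cfg = config.get(section)
        if model_cfg is None:
            # Only complain about sections the pipeline cannot run without.
            if section in REQUIRED_SECTIONS:
                problems.append(f"missing section: {section}")
            continue
        engine = model_cfg.get("engine")
        key = ENGINE_KEYS.get(engine)
        if key is None:
            problems.append(f"{section}: unknown engine {engine!r}")
        elif not env.get(key):
            problems.append(f"{section}: {key} is not set")
    threshold = config.get("pipeline", {}).get("margin_threshold")
    if not isinstance(threshold, (int, float)):
        problems.append("pipeline.margin_threshold must be a number")
    return problems


if __name__ == "__main__":
    example = {
        "strong_model": {"engine": "openai", "model": "gpt-4"},
        "target_model": {"engine": "openai", "model": "gpt-3.5-turbo"},
        "judge_model": {"engine": "openai", "model": "gpt-4o"},
        "pipeline": {"margin_threshold": 0.5},
    }
    for problem in check_config(example):
        print(problem)
```

Passing `env` explicitly keeps the check testable without touching the real environment; by default it reads `os.environ`.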

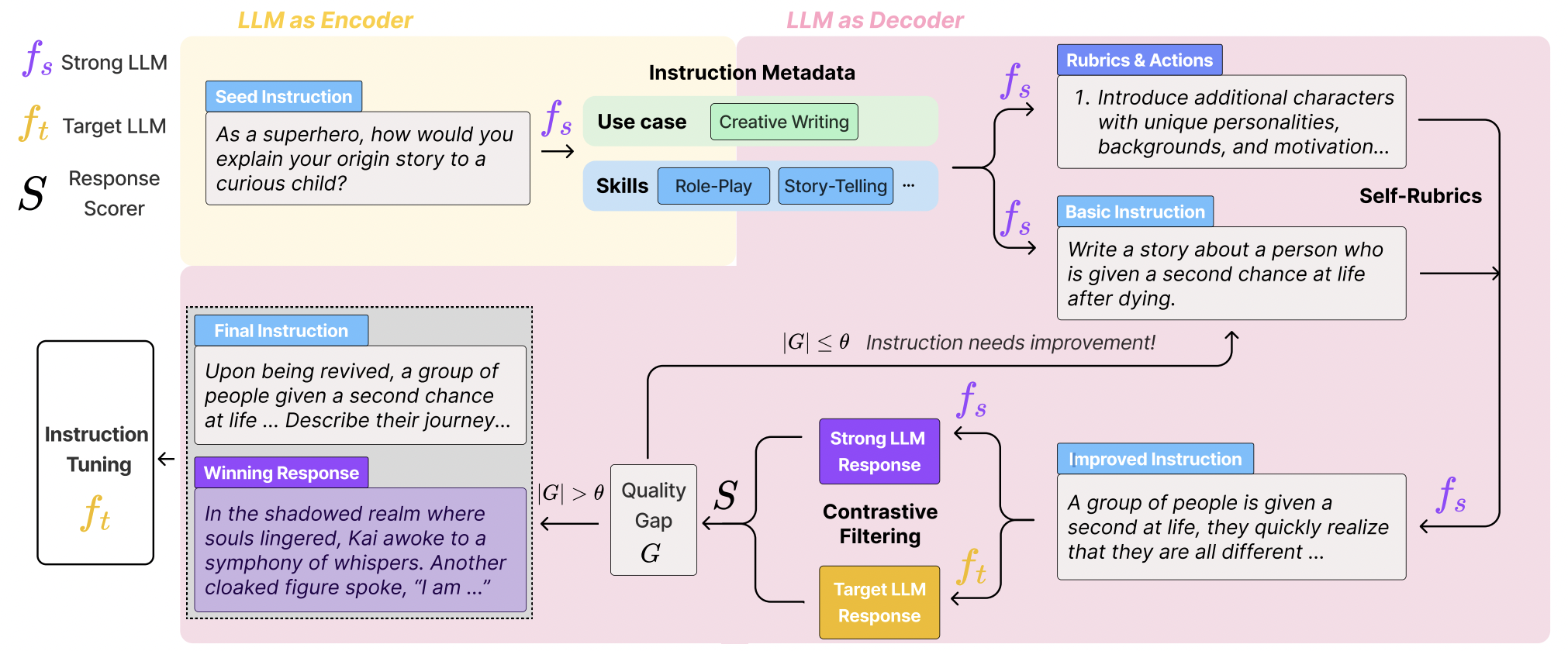

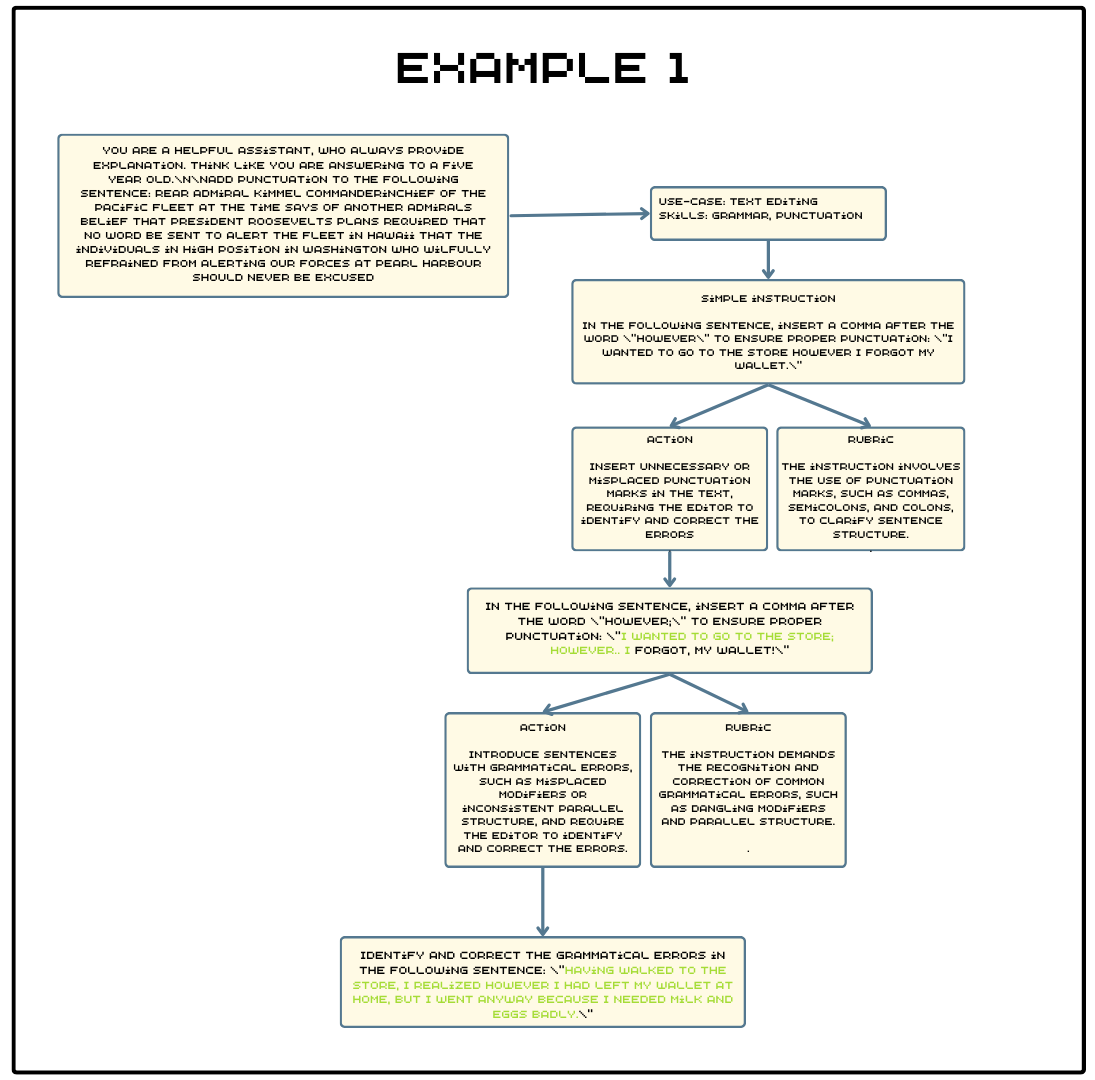

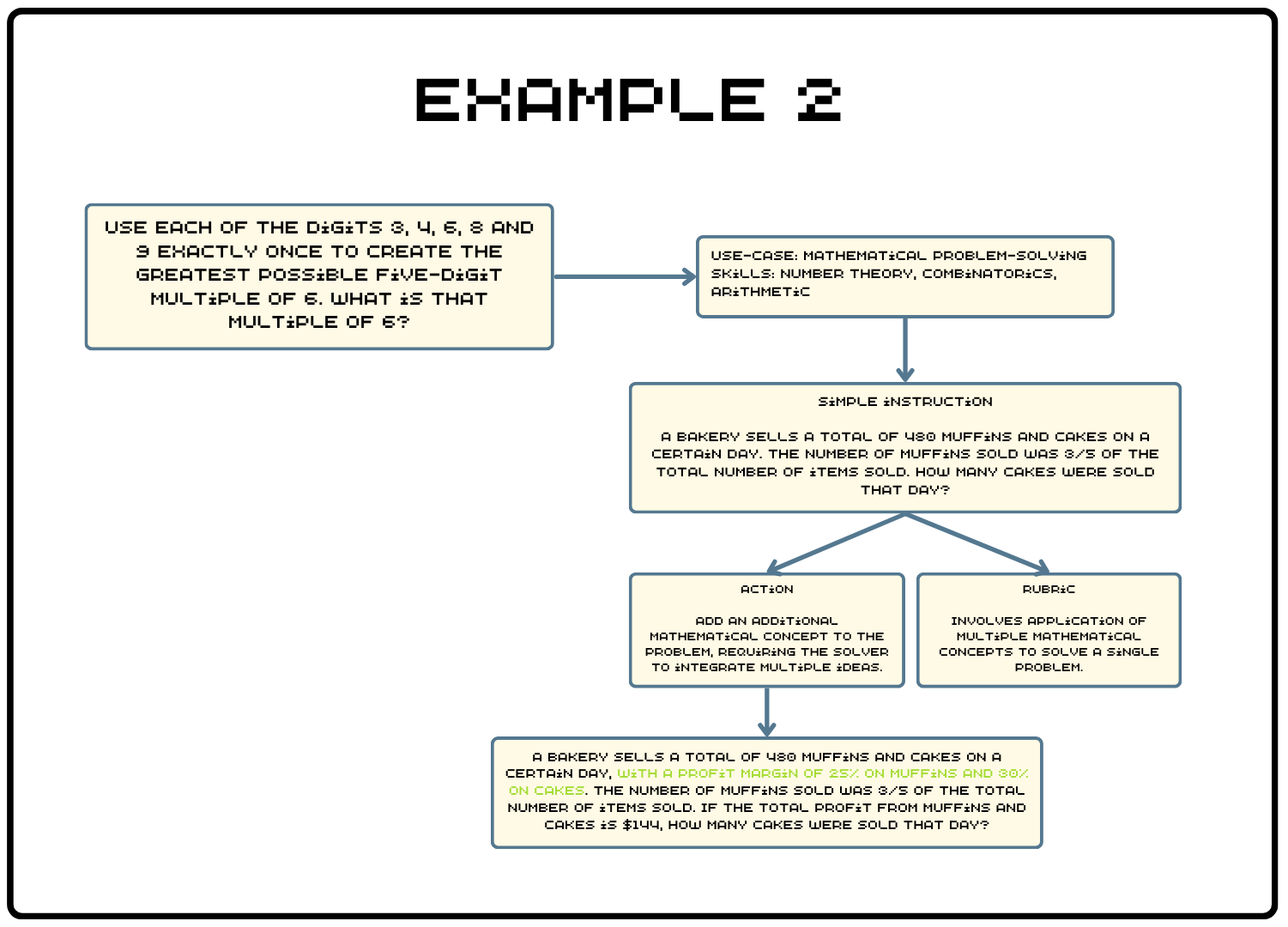

1. **Instruction encoding**
   - Encodes the seed instruction into skills and use-cases.
   - Model used: Strong LLM
2. **Simple instruction generation**
   - Generates a new instruction from the skills and use-cases, without access to the original instruction.
   - Model used: Strong LLM
3. **Rubric and action generation**
   - Generates `n_rubrics` rubrics and their corresponding actions. The actions are used to enhance the instruction.
   - Model used: Strong LLM
4. **Instruction improvement**
   - Enhances the instruction according to a randomly selected rubric and action.
   - Model used: Strong LLM
5. **Answer generation**
   - Generates answers to the generated instructions.
   - Models used: Strong and Target LLMs
6. **Instruction-answer pair ranking**
   - Ranks the instruction-answer pairs, scoring the responses from both LLMs.
   - Model used: Strong LLM
7. **Contrastive filtering**
   - Filters instructions based on the margin between the strong and target answer scores, sending those below the threshold (`margin_threshold`) back to Step 3 for further improvement.
   - Model used: Strong LLM
8. **Final generated instructions ranking** (optional)
   - Ranks the final generated instructions using a judge model. The Judge LLM assigns a score between 0 and 5.
   - Model used: Judge LLM
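Steps 3 to 7 form a loop that repeats until an instruction passes the contrastive filter. The sketch below illustrates that control flow under several assumptions and is not the repository's code: every LLM call is replaced by a hypothetical stand-in callable (`make_rubric_actions`, `improve`, `answer_strong`, `answer_target`, `score`), the margin is taken to be the strong answer's score minus the target answer's score, each round improves the previous round's instruction, and the loop gives up after `n_iterations` rounds. `margin_threshold` and `n_iterations` mirror the `pipeline` config keys of the same name; `refine` and `Candidate` are illustrative names.

```python
import random
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

RubricAction = Tuple[str, str]  # (rubric, action)


@dataclass
class Candidate:
    instruction: str
    history: List[dict] = field(default_factory=list)


def refine(
    instruction: str,
    make_rubric_actions: Callable[[str], List[RubricAction]],  # Step 3 stand-in
    improve: Callable[[str, str, str], str],  # Step 4 stand-in
    answer_strong: Callable[[str], str],  # Step 5 stand-in, strong model
    answer_target: Callable[[str], str],  # Step 5 stand-in, target model
    score: Callable[[str, str], float],  # Step 6 stand-in
    margin_threshold: float = 0.5,
    n_iterations: int = 4,
    seed: int = 42,
) -> Optional[Candidate]:
    """Improve `instruction` until the strong answer outscores the target
    answer by at least `margin_threshold`; return None if that never
    happens within `n_iterations` rounds."""
    rng = random.Random(seed)
    cand = Candidate(instruction)
    for step in range(n_iterations):
        # Step 3: rubrics and actions for the current instruction.
        pairs = make_rubric_actions(cand.instruction)
        # Step 4: improve with one randomly selected rubric/action pair.
        rubric, action = rng.choice(pairs)
        cand.instruction = improve(cand.instruction, rubric, action)
        # Steps 5 and 6: answer with both models and score each answer.
        strong_score = score(cand.instruction, answer_strong(cand.instruction))
        target_score = score(cand.instruction, answer_target(cand.instruction))
        # Step 7: contrastive filtering on the score margin.
        margin = strong_score - target_score
        cand.history.append(
            {"step": step, "rubric": rubric, "action": action, "margin": margin}
        )
        if margin >= margin_threshold:
            return cand
    return None  # never passed the filter


if __name__ == "__main__":
    # Toy stand-ins: the target model scores lower as constraints pile up.
    result = refine(
        "Write a haiku about rain.",
        make_rubric_actions=lambda s: [("Complexity", "add a constraint")],
        improve=lambda s, r, a: f"{s} [{a}]",
        answer_strong=lambda s: "strong",
        answer_target=lambda s: s,
        score=lambda s, ans: 1.0 if ans == "strong" else 1.0 - 0.25 * s.count("["),
    )
    # Prints the instruction after the round in which the margin reached 0.5.
    print(result.instruction if result else "skipped")
```

Keeping instructions with a large margin favors prompts on which the target model still lags behind the strong model; instructions with a small margin are the ones sent around the loop again.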

`generated_dataset.json` contains the final generated dataset:

```json
{
  "instruction": "The generated instruction",
  "answer": "The generated answer for the instruction",
  "model": "The model used to generate the answer",
  "contrastive_score": "The contrastive score of the instruction-answer pair",
  "judge_instruction_score": "The score given by the Judge LLM",
  "judge_reason": "The reason for the score given by the Judge LLM",
  "judge_model_name": "The Judge LLM model used to score the instruction-answer pair",
  "topic": "The topic of the instruction",
  "subtopic": "The subtopic of the instruction"
}
```

`processed_data.json` contains all the data processed by the pipeline:
```json
{
  "instruction_index": "The index of the instruction",
  "seed_instruction": "The seed instruction from the dataset",
  "task": "The task of the instruction",
  "skills": "The skills extracted from the instruction",
  "rubrics": "The rubrics generated for the instruction",
  "actions": "The actions generated for the instruction",
  "simple_instructions": "The simple instructions generated from the skills and task",
  "strong_model": "The Strong LLM model",
  "target_model": "The Target LLM model",
  "improved_instructions": {
    "improvement_step": "The step of the improvement",
    "original_instruction": "The simple instruction",
    "rubric": "The sampled rubric used for the improvement",
    "action": "The sampled action used for the improvement",
    "improved_instruction": "The improved instruction",
    "strong_answer": "The answer generated by the Strong LLM",
    "target_answer": "The answer generated by the Target LLM",
    "strong_score": "The strong answer score from the contrastive filtering",
    "target_score": "The target answer score from the contrastive filtering",
    "improvement_history": "All the history of the improvements"
  }
}
```

`skipped_data.json` contains the indices of the instructions skipped by the pipeline:
```json
[
  "The index of the skipped instructions"
]
```

| Generation Engine | Supported |
|---|---|
| OpenAI | ✅ |
| Mistral | ✅ |
| Anthropic | ✅ |
| TogetherAI | ✅ |
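Returning to the output files: the sketch below shows one way to pull the highest-rated examples out of `generated_dataset.json`. It assumes, without confirmation from the repository, that the file is a JSON list of objects with the fields listed for that file and that `judge_instruction_score` holds a number (or numeric string) on the 0 to 5 scale whenever the optional judge step ran. `top_entries` and its `min_score` cut-off are hypothetical names.

```python
import json
import tempfile
from pathlib import Path
from typing import List, Optional, Union


def _judge_score(entry: dict) -> Optional[float]:
    """Return the judge score as a float, or None if it is missing/non-numeric."""
    try:
        return float(entry.get("judge_instruction_score"))
    except (TypeError, ValueError):
        return None


def top_entries(path: Union[str, Path], min_score: float = 4.0) -> List[dict]:
    """Load generated_dataset.json (assumed to be a JSON list) and return the
    entries with a judge score >= min_score, highest score first.
    Entries without a usable judge score are dropped."""
    entries = json.loads(Path(path).read_text(encoding="utf-8"))
    scored = [e for e in entries if (_judge_score(e) or -1.0) >= min_score]
    return sorted(scored, key=_judge_score, reverse=True)


if __name__ == "__main__":
    # Write a tiny sample file with made-up entries, then filter it.
    sample = [
        {"instruction": "A", "judge_instruction_score": 4.5},
        {"instruction": "B", "judge_instruction_score": 2},
        {"instruction": "C"},  # judge step skipped for this entry
    ]
    path = Path(tempfile.mkdtemp()) / "generated_dataset.json"
    path.write_text(json.dumps(sample), encoding="utf-8")
    print([e["instruction"] for e in top_entries(path)])
```

Converting through `float` tolerates scores stored either as numbers or as numeric strings, since the schema above only describes the field.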

- CodecLM: Aligning Language Models with Tailored Synthetic Data

- Self-Rewarding Language Models

- Sensei

This project is licensed under the Apache 2.0 License - see the LICENSE file for details.