- Transformer model for Neural Machine Translation from Russian to English

- PyTorch implementation of "Attention Is All You Need" by Ashish Vaswani et al. (link)

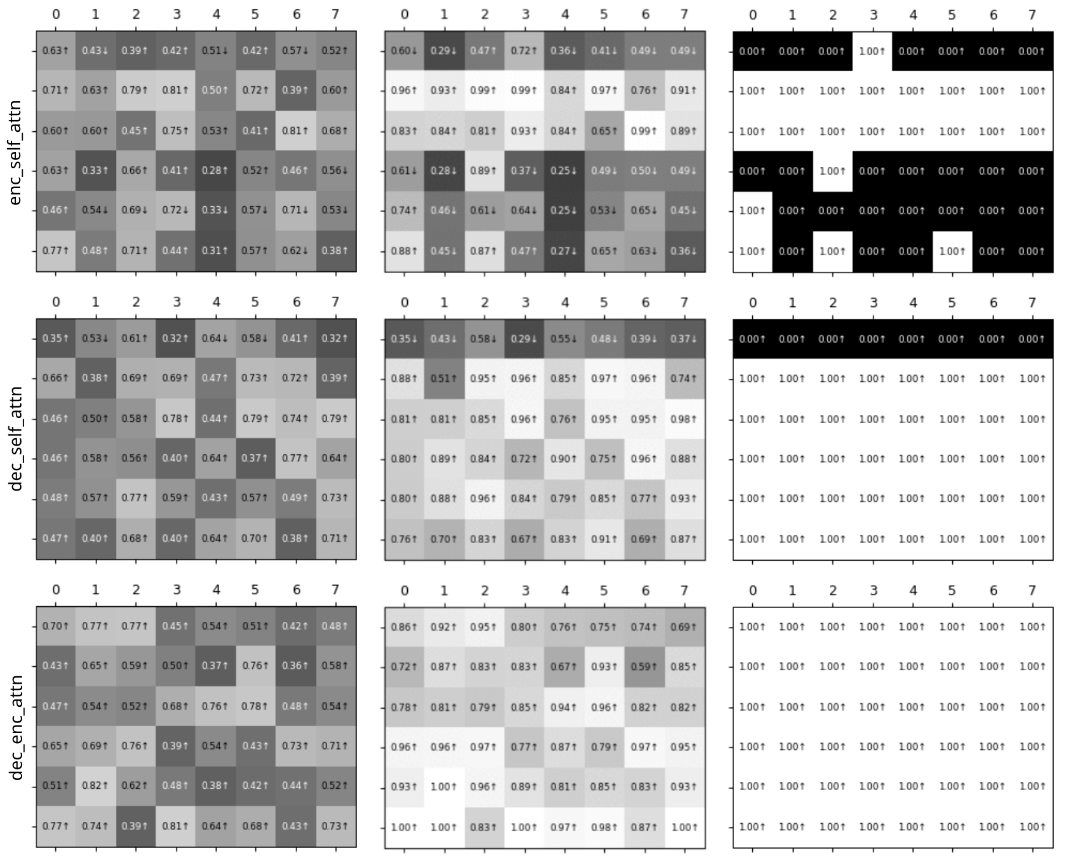

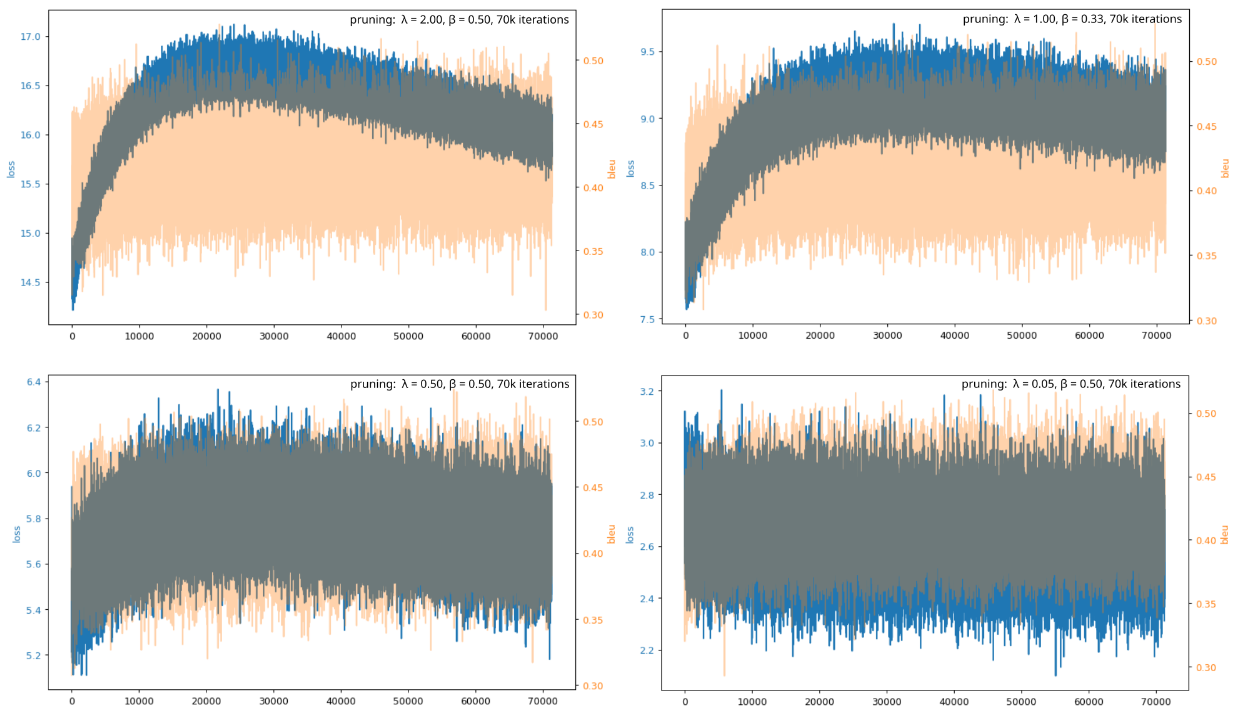

- Implementation of the method for pruning of attention heads by Elena Voita et al. (link)

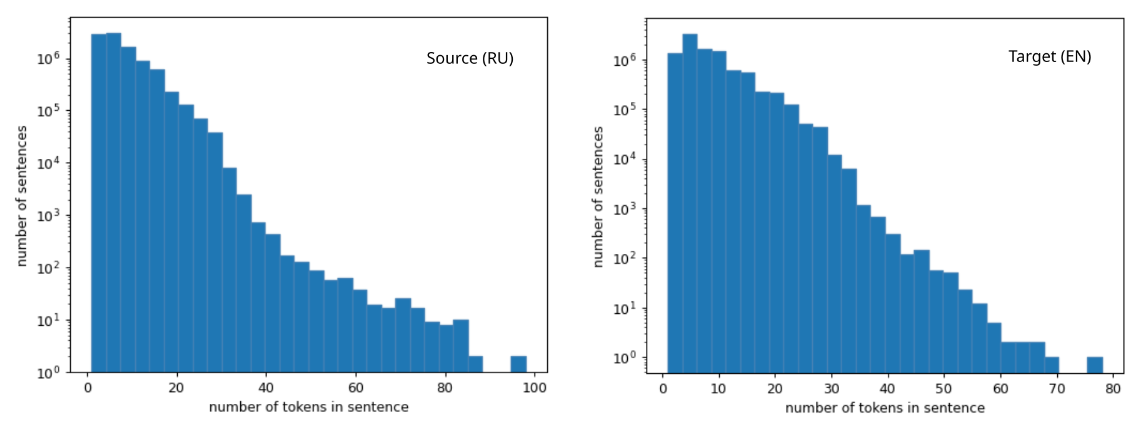

- Dataset: OpenSubtitles v2018 (link)

- Total number of sentence pairs in corpus: ~26M

- Number of sentence pairs in the train / validation / test splits: ~9M / ~250K / ~250K

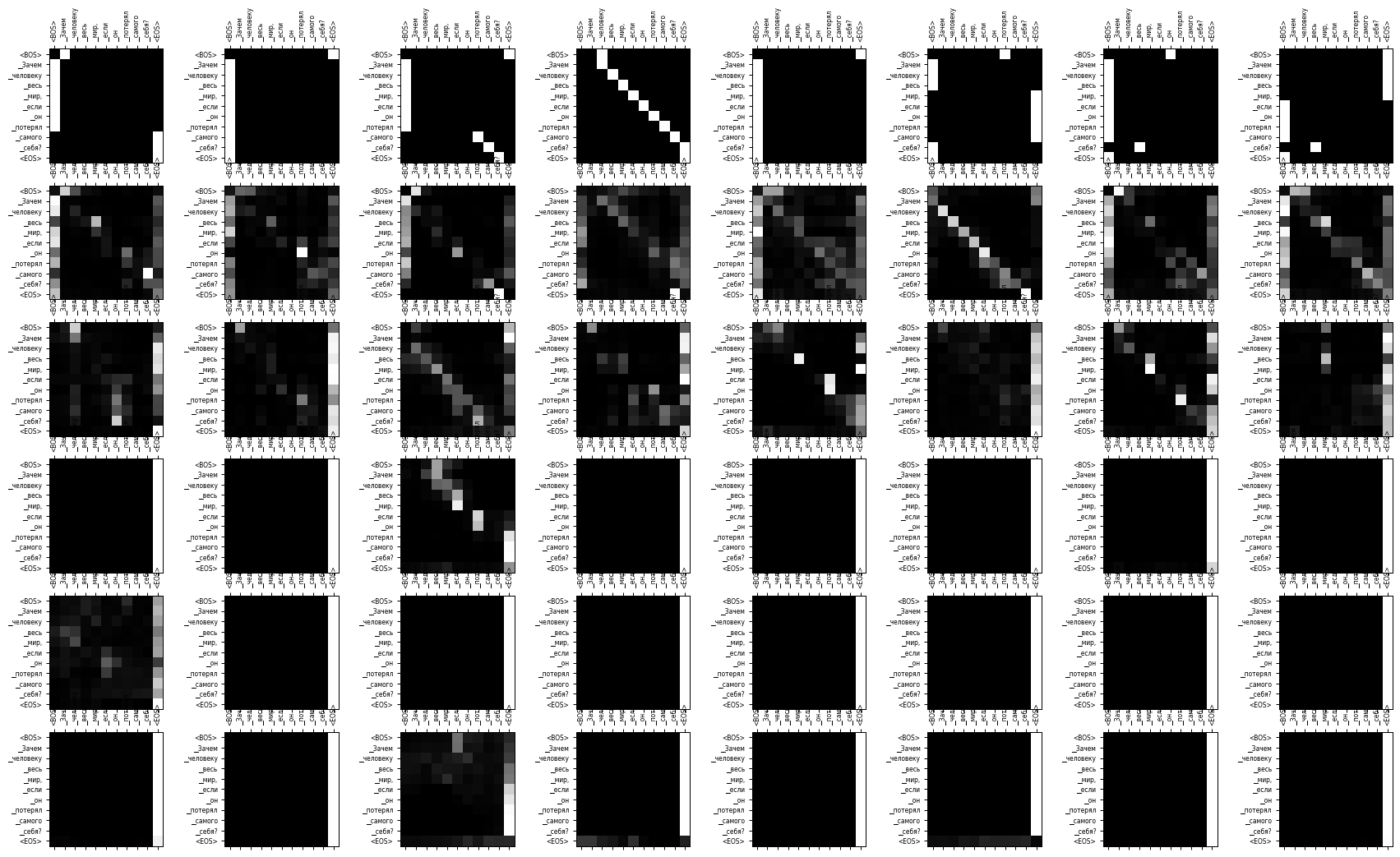

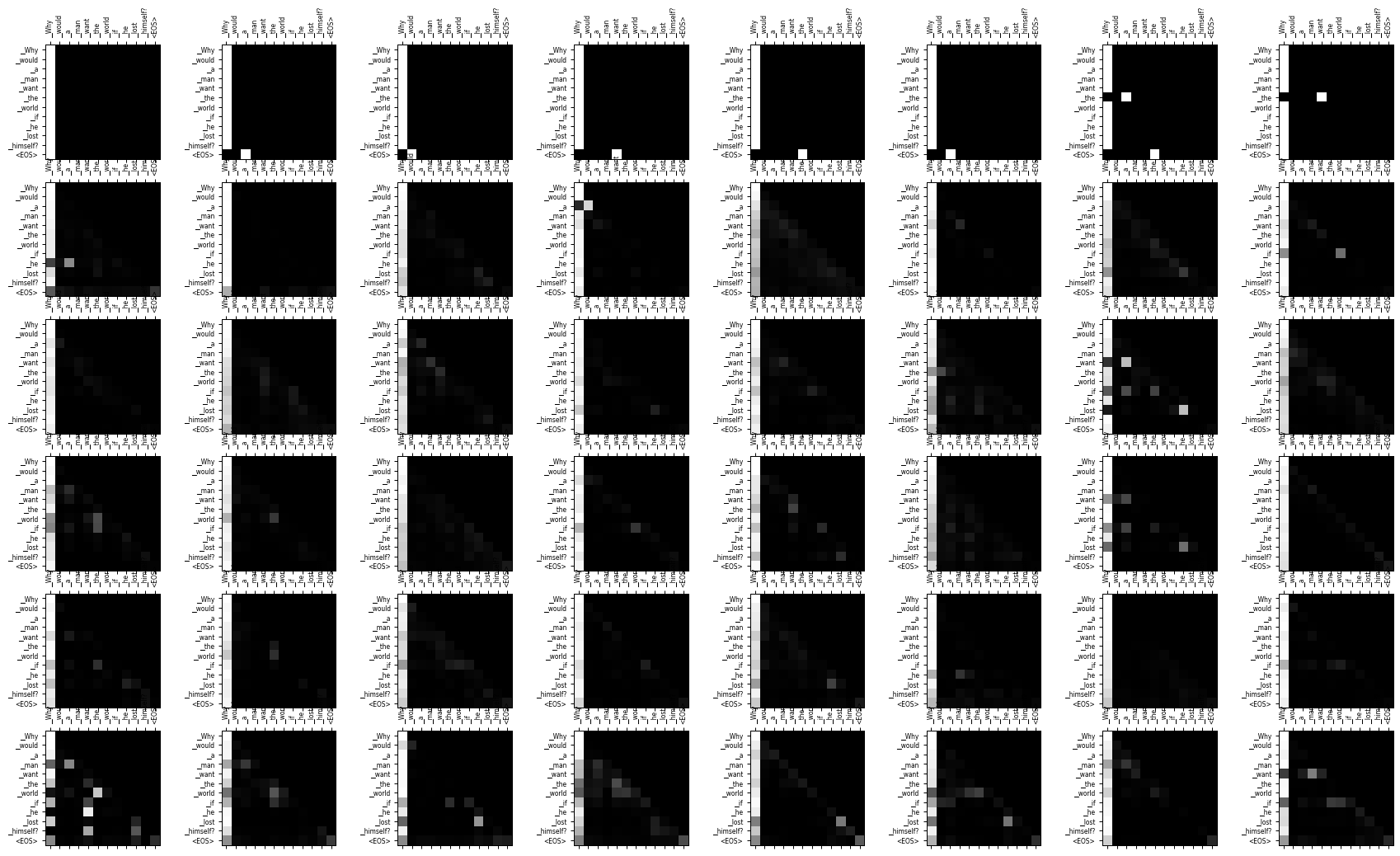

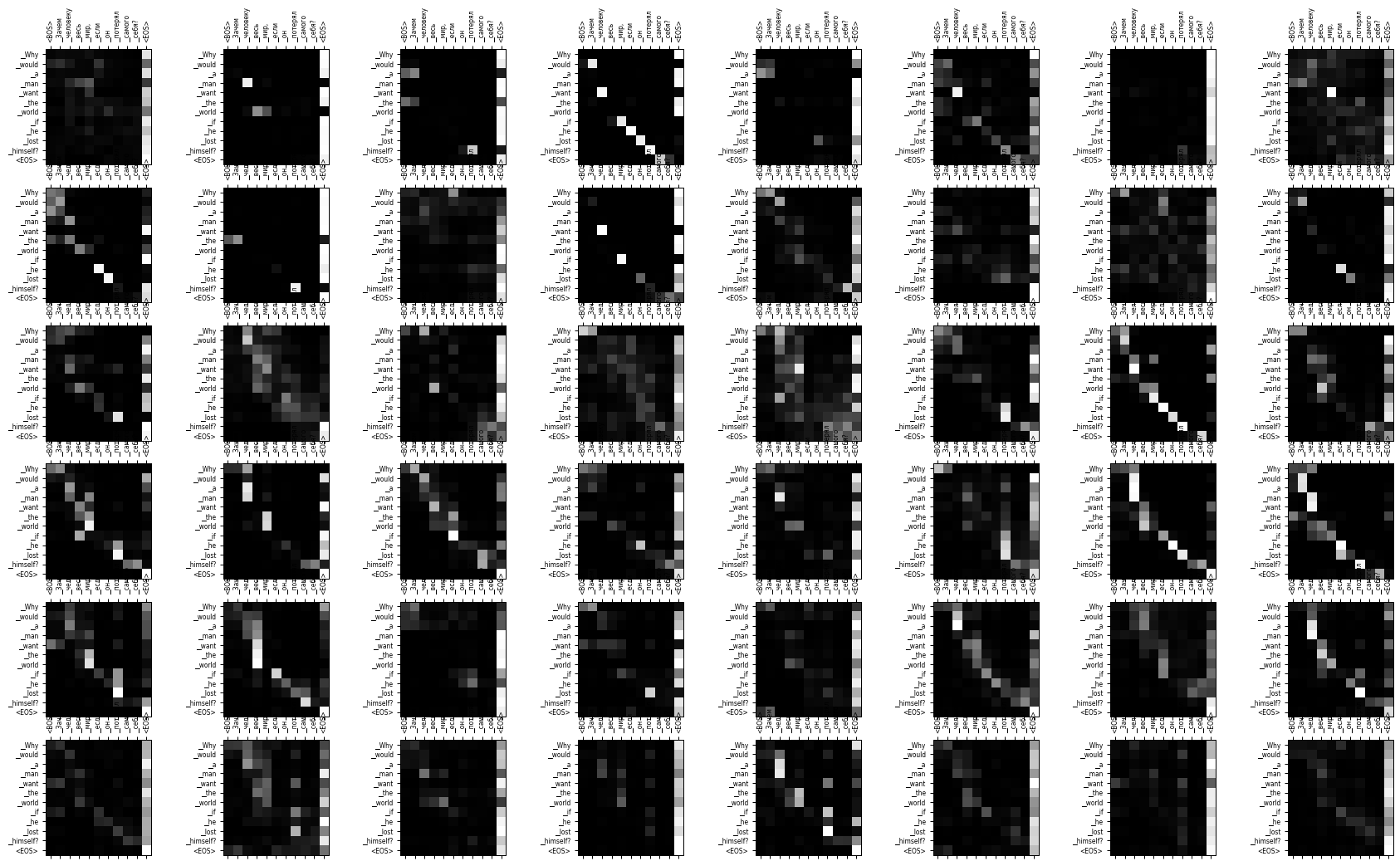

- 6 layers, 8 heads, ~26M parameters

- Maximum sequence length = 100 tokens, hidden dimension = 256, position-wise feed-forward dimension = 512

- Fast Byte Pair Encoding (youtokentome)

- Source / target vocabulary size: 30k / 20k tokens

- Dropout 0.1, gradient clipping 1
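As a sanity check on the ~26M figure, the parameter count can be estimated from the hyperparameters listed above. This is a rough sketch, not the repository's code. It assumes learned positional embeddings for both encoder and decoder, an untied output projection, biases on every linear layer, and it ignores the pruning gates. The function names are hypothetical.

```python
def linear(n_in: int, n_out: int) -> int:
    """Parameters of a dense layer with bias."""
    return n_in * n_out + n_out


def count_parameters(src_vocab: int = 30_000, tgt_vocab: int = 20_000,
                     hid_dim: int = 256, pf_dim: int = 512,
                     n_layers: int = 6, max_len: int = 100) -> int:
    # Q, K, V and output projections; the number of heads does not change the count.
    attention = 4 * linear(hid_dim, hid_dim)
    feed_forward = linear(hid_dim, pf_dim) + linear(pf_dim, hid_dim)
    layer_norm = 2 * hid_dim  # scale and shift

    # Encoder layer: self-attention + feed-forward, each followed by a layer norm.
    encoder_layer = attention + feed_forward + 2 * layer_norm
    # Decoder layer: self-attention + cross-attention + feed-forward, three layer norms.
    decoder_layer = 2 * attention + feed_forward + 3 * layer_norm

    # Assumption: token embeddings plus learned positional embeddings on both sides.
    embeddings = (src_vocab + tgt_vocab) * hid_dim + 2 * max_len * hid_dim
    # Assumption: output projection is not tied to the target embedding.
    output_projection = linear(hid_dim, tgt_vocab)

    return embeddings + output_projection + n_layers * (encoder_layer + decoder_layer)


total = count_parameters()
print(f"{total / 1e6:.1f}M parameters")  # prints 25.9M parameters
```

Under these assumptions roughly two thirds of the parameters sit in the embeddings and the output projection, so the vocabulary sizes dominate the model size.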

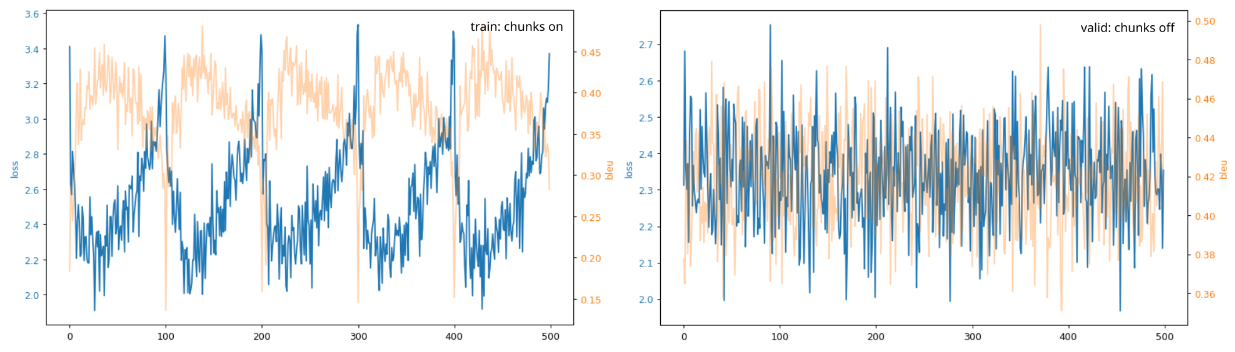

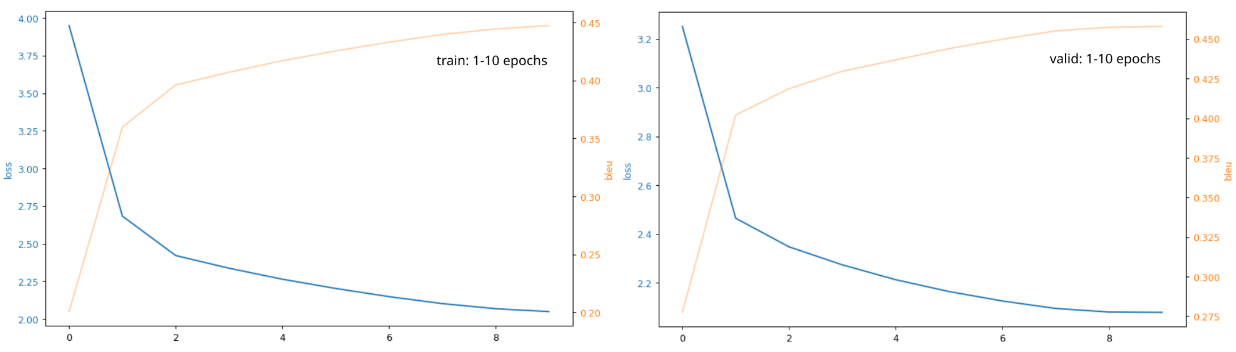

- Loss function: cross-entropy loss; target metric: BLEU

- 10 epochs, ~25 hours on Kaggle and Google Colab GPUs

- Loader: 128 sentence pairs / batch, 100 batches / chunk
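The loader settings above can be sketched as a generator that reads the corpus one chunk at a time (100 batches of 128 pairs, i.e. 12,800 pairs) and then cuts the chunk into batches. The exact meaning of "chunk" in this repository is an assumption here, and so is sorting by source length inside a chunk to reduce padding. `chunked_batches` is a hypothetical name.

```python
from itertools import islice
from typing import Iterable, Iterator, List, Tuple

Pair = Tuple[List[int], List[int]]  # (source token ids, target token ids)


def chunked_batches(pairs: Iterable[Pair],
                    batch_size: int = 128,
                    batches_per_chunk: int = 100) -> Iterator[List[Pair]]:
    """Yield batches while holding only one chunk of pairs in memory."""
    iterator = iter(pairs)
    chunk_size = batch_size * batches_per_chunk
    while True:
        chunk = list(islice(iterator, chunk_size))
        if not chunk:
            return
        # Assumption: group sentences of similar length to limit padding.
        chunk.sort(key=lambda pair: len(pair[0]))
        for start in range(0, len(chunk), batch_size):
            yield chunk[start:start + batch_size]
```

With ~9M training pairs and 128 pairs per batch, one epoch is about 70K batches. In practice the batches inside a chunk would probably also be shuffled so that the model does not always see short sentences first.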

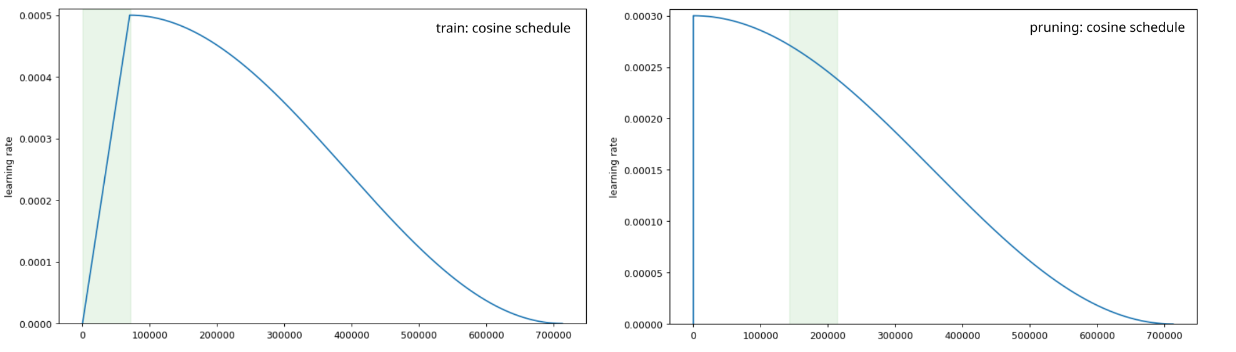

- Optimizer: Adam, lr 0.0005, cosine schedule, warm up 70K steps

- λ = 0.05, β = 0.50, 70k iterations

- Gumbel noise, Hard Concrete Gates

- Extra penalty for too many attention heads

- Greedy and Beam Search generation (k=4)
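To make the pruning settings above more concrete, here is a minimal pure-Python sketch of a Hard Concrete gate for a single attention head, following the formulation of Louizos et al. that Voita et al. build on. It assumes that β is the gate temperature, that λ weights the expected number of open heads, and that the stretch interval is (-0.1, 1.1) as in the original Hard Concrete paper. The repository's actual implementation and its extra penalty on the number of heads may differ. All function names are hypothetical.

```python
import math
import random
from typing import Sequence


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def hard_concrete_gate(log_alpha: float, beta: float = 0.5,
                       low: float = -0.1, high: float = 1.1,
                       training: bool = True, rng=random) -> float:
    """Sample a gate value in [0, 1] for one head (deterministic when not training)."""
    if training:
        u = min(max(rng.random(), 1e-6), 1.0 - 1e-6)
        noise = math.log(u) - math.log(1.0 - u)  # logistic noise (difference of two Gumbels)
        s = sigmoid((noise + log_alpha) / beta)
    else:
        s = sigmoid(log_alpha)
    # Stretch to (low, high), then clip so that exact 0 and 1 have non-zero probability.
    return min(1.0, max(0.0, s * (high - low) + low))


def prob_open(log_alpha: float, beta: float = 0.5,
              low: float = -0.1, high: float = 1.1) -> float:
    """Probability that the gate is non-zero."""
    return sigmoid(log_alpha - beta * math.log(-low / high))


def head_penalty(log_alphas: Sequence[float], lam: float = 0.05) -> float:
    """Regularizer added to the loss: lam times the expected number of open heads."""
    return lam * sum(prob_open(a) for a in log_alphas)
```

During training each head's output is multiplied by its sampled gate. At inference the deterministic gate is used, and heads whose gate is exactly 0 can be removed.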

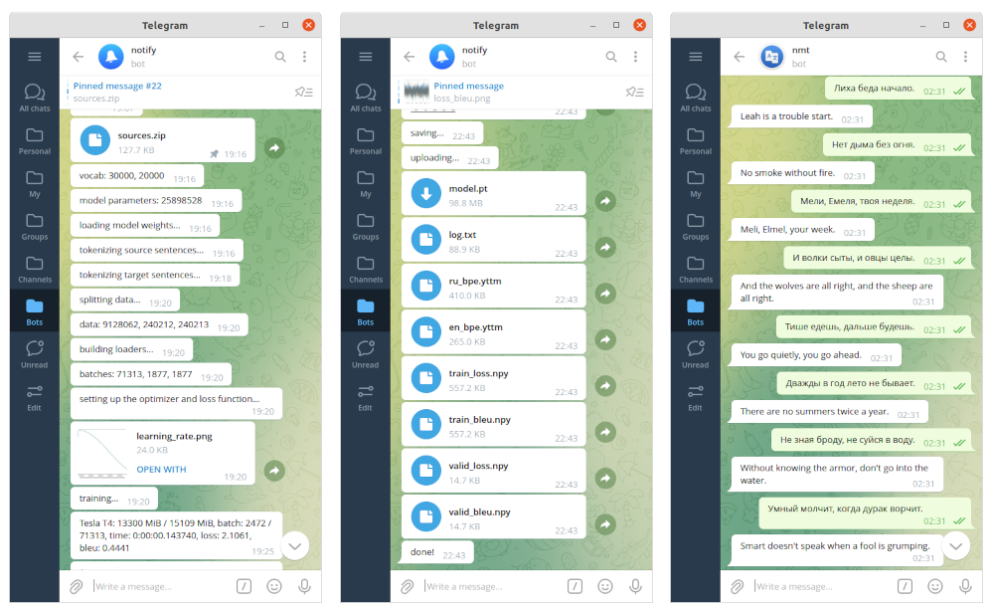

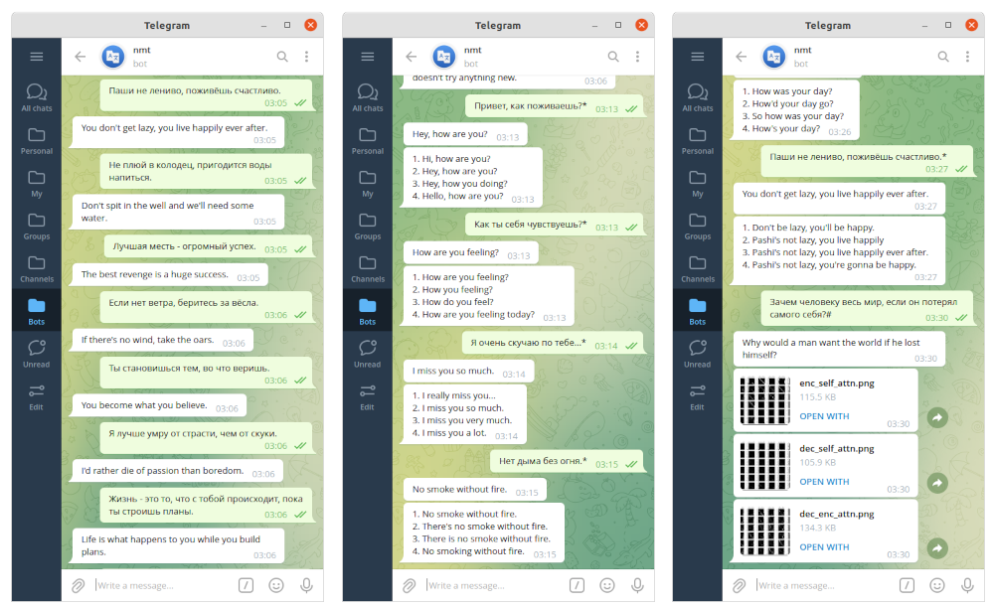
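The greedy and beam search generation mentioned above (k=4) can be illustrated with a toy, model-free sketch. `step_fn` is a hypothetical stand-in for the decoder: it maps a token prefix to log-probabilities of the next token. No length normalization is applied, which the real implementation may or may not use. Greedy decoding is the special case `beam_size=1`.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

StepFn = Callable[[Sequence[int]], Dict[int, float]]  # prefix -> {token: log-prob}


def beam_search(step_fn: StepFn, bos: int, eos: int,
                beam_size: int = 4, max_len: int = 100) -> List[int]:
    """Return the highest-scoring hypothesis found with the given beam width."""
    beams: List[Tuple[List[int], float]] = [([bos], 0.0)]
    finished: List[Tuple[List[int], float]] = []
    for _ in range(max_len):
        candidates = [(tokens + [tok], score + logp)
                      for tokens, score in beams
                      for tok, logp in step_fn(tokens).items()]
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for tokens, score in candidates[:beam_size]:
            # Hypotheses ending in EOS leave the beam and are kept as results.
            (finished if tokens[-1] == eos else beams).append((tokens, score))
        if not beams:
            break
    finished.extend(beams)  # keep unfinished hypotheses if max_len was reached
    return max(finished, key=lambda c: c[1])[0]


# Toy distribution in which the locally best first token leads to a worse sentence.
BOS, EOS, A, B = 0, 1, 2, 3
TABLE = {
    (BOS,): {A: math.log(0.6), B: math.log(0.4)},
    (BOS, A): {EOS: math.log(0.4), A: math.log(0.3), B: math.log(0.3)},
    (BOS, B): {EOS: math.log(0.9), B: math.log(0.1)},
}


def toy_step(prefix: Sequence[int]) -> Dict[int, float]:
    # Any prefix not in the table ends the sentence with probability 1.
    return TABLE.get(tuple(prefix), {EOS: 0.0})


greedy = beam_search(toy_step, BOS, EOS, beam_size=1)  # [0, 2, 1], probability 0.24
beam = beam_search(toy_step, BOS, EOS, beam_size=4)    # [0, 3, 1], probability 0.36
```

In this toy case greedy decoding commits to the most likely first token and ends up with a less probable sentence than the one beam search finds.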

You can download and run the bot yourself, or use this bot.

    python3 -m venv env
    source env/bin/activate
    pip install -r requirements.txt
    gdown 1heNu80X8DcTKTx2Od0-EW-6JrkXxk5Ze
    gdown 1c4LakbKi7-gbKyAvcoGkJ8Yic16wvJx0
    gdown 1I46t9Qgz0NbXjT-EPbogEUYpvGPTc408
    python3 bot.py <bot_owner_id> <bot_token>