Image Analyzer for Home Assistant using GPT Vision


gpt4vision is a Home Assistant integration that allows you to analyze images and camera feeds using GPT-4 Vision.

Supported providers are OpenAI, Anthropic, LocalAI and Ollama.

- Compatible with OpenAI, Anthropic Claude, LocalAI and Ollama

- Takes images and camera entities as input as well as image files

- Images can be downscaled for faster processing

- Can be installed and updated through HACS and can be set up in the Home Assistant UI

Check the 📖 wiki for examples on how you can integrate gpt4vision into your Home Assistant setup or join the 🗨️ discussion in the Home Assistant Community.

- Search for `GPT-4 Vision` in Home Assistant Settings/Devices & services

- Select your provider

- Follow the instructions to complete setup

- Download and copy the gpt4vision folder into your custom_components folder.

- Add integration in Home Assistant Settings/Devices & services

- Provide your API key or IP address and port of your self-hosted server

Simply obtain an API key from OpenAI and enter it in the Home Assistant UI during setup.

A pricing calculator is available here: https://openai.com/api/pricing/.

Obtain an API key from Anthropic and enter it in the Home Assistant UI during setup. Pricing is available here: Anthropic image cost. Images can be downscaled with the built-in downscaler.

To use LocalAI you need to have a LocalAI server running. You can find the installation instructions here. During setup you'll need to provide the IP address of your machine and the port on which LocalAI is running (default is 8000).

To use Ollama you need to have an Ollama server running. You can download it from here. Once installed you need to run the following command to download the llava model:

    ollama run llava

If your Home Assistant is not running on the same computer as Ollama, you need to set the OLLAMA_HOST environment variable.

On Linux:

- Edit the systemd service by calling `systemctl edit ollama.service`. This will open an editor.

- For each environment variable, add a line `Environment` under section `[Service]`:

      [Service]
      Environment="OLLAMA_HOST=0.0.0.0"

- Save and close the editor.

- Reload systemd and restart Ollama:

      systemctl daemon-reload
      systemctl restart ollama

On Windows:

- Quit Ollama from the system tray

- Open File Explorer

- Right click on This PC and select Properties

- Click on Advanced system settings

- Select Environment Variables

- Under User variables click New

- For variable name enter `OLLAMA_HOST` and for value enter `0.0.0.0`

- Click OK and start Ollama again from the Start Menu

On macOS:

- Open Terminal

- Run the following command:

      launchctl setenv OLLAMA_HOST "0.0.0.0"

- Restart Ollama
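After changing OLLAMA_HOST, it can help to confirm that the server answers from another machine before setting up the integration. The following is a minimal sketch, not part of gpt4vision: the helper names `ollama_base_url` and `is_reachable` are hypothetical, and it assumes Ollama listens on its default port 11434 and answers plain HTTP requests at its root URL.

```python
from urllib.error import URLError
from urllib.request import urlopen


def ollama_base_url(host: str, port: int = 11434) -> str:
    """Build the base URL of an Ollama server (11434 is Ollama's default port)."""
    return f"http://{host}:{port}"


def is_reachable(base_url: str, timeout: float = 3.0) -> bool:
    """Return True if the server answers an HTTP GET on its root URL with status 200."""
    try:
        with urlopen(base_url, timeout=timeout) as response:
            return response.status == 200
    except (URLError, OSError):
        # Connection refused, timeout, DNS failure, or an HTTP error status.
        return False
```

For example, running `is_reachable(ollama_base_url("192.168.1.10"))` on the Home Assistant machine (the IP address is a placeholder) should return `True` once Ollama accepts connections from the network.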

After restarting, the gpt4vision.image_analyzer service will be available. You can test it under Developer Tools in Home Assistant. To get OpenAI gpt-4o's analysis of a local image, use the following service call.

    service: gpt4vision.image_analyzer
    data:
      provider: OpenAI
      message: Describe what you see?
      max_tokens: 100
      model: gpt-4o
      image_file: |-
        /config/www/tmp/example.jpg
        /config/www/tmp/example2.jpg
      image_entity:
        - camera.garage
        - image.front_door_person
      target_width: 1280
      detail: low
      temperature: 0.5
      include_filename: true

Note

Note that for image_file each path must be on a new line.

The parameters provider, message, max_tokens and temperature are required.

Additionally, at least one of image_file or image_entity must contain an input.

You can send multiple images per service call and mix image_file and image_entity inputs. To also include the filename in the request, set include_filename to true.
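The rules above can be sketched as a small check on the service data. This is an illustration under assumptions, not the integration's actual validation code: `split_image_files` and `check_service_data` are hypothetical helper names, and the sketch only covers the required parameters and the "at least one image input" rule described in this note.

```python
REQUIRED = ("provider", "message", "max_tokens", "temperature")


def split_image_files(image_file: str) -> list[str]:
    """Split a multi-line image_file value into one path per non-empty line."""
    return [line.strip() for line in image_file.splitlines() if line.strip()]


def check_service_data(data: dict) -> list[str]:
    """Return a list of problems found in the service data (empty if none)."""
    problems = [
        f"missing required parameter: {name}"
        for name in REQUIRED
        if name not in data
    ]
    files = split_image_files(data.get("image_file") or "")
    entities = data.get("image_entity") or []
    if not files and not entities:
        problems.append("provide at least one image_file or image_entity")
    return problems
```

An empty list means the data satisfies the rules stated here; it says nothing about whether the provider, model, or file paths are actually valid.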

Optionally, the model, target_width and detail properties can be set.

- Most models are listed below. For all available models check these pages: OpenAI models, Anthropic Claude models, Ollama models and LocalAI model gallery.

- The target_width is an integer between 512 and 3840 representing the image width in pixels. It is used to downscale the image before encoding it.

- The detail parameter can be set to `low` or `high`. If it is not set, it defaults to `auto`, and OpenAI then uses the image size to determine the detail level. For more information check the OpenAI documentation.
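To make the effect of target_width concrete, here is a rough sketch of how a downscaled size could be computed. It is not the integration's actual code: `downscaled_size` is a hypothetical helper, and it assumes the aspect ratio is preserved and that images narrower than target_width are left unchanged.

```python
def downscaled_size(width: int, height: int, target_width: int) -> tuple[int, int]:
    """Return the (width, height) an image would have after downscaling."""
    if not 512 <= target_width <= 3840:
        raise ValueError("target_width must be between 512 and 3840")
    if width <= target_width:
        # Assumption: smaller images are never upscaled.
        return width, height
    # Assumption: height is scaled by the same factor to keep the aspect ratio.
    new_height = max(1, round(height * target_width / width))
    return target_width, new_height
```

With these assumptions, a 3840x2160 camera frame and a target_width of 1280 yields 1280x720, which is one ninth of the original pixel count.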

Note

If you set include_filename to false (the default), requests will look roughly like the following:

Images will be numbered sequentially starting from 1. You can refer to the images by their number in the prompt.

    Image 1:
    <base64 encoded image>
    Image 2:
    <base64 encoded image>
    ...
    <Your prompt>
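The numbering scheme above can be sketched in a few lines. This is only a text illustration of the ordering; real provider requests are structured payloads, and `build_numbered_request` is a hypothetical name, not a function of the integration.

```python
def build_numbered_request(encoded_images: list[str], prompt: str) -> str:
    """Label each image as 'Image N:' (starting at 1) and append the prompt last."""
    parts = []
    for number, image in enumerate(encoded_images, start=1):
        parts.append(f"Image {number}:")
        parts.append(image)
    parts.append(prompt)
    return "\n".join(parts)
```

Because the labels are predictable, a prompt such as "Is the car in Image 1 also visible in Image 2?" can refer to the inputs by number.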

Note

If you set include_filename to true, requests will look roughly like the following:

- If the input is an image entity, the filename will be the entity's `friendly_name` attribute.

- If the input is an image file, the filename will be the file's name without the extension.

- Your prompt will be appended to the end of the request.

    Front Door:
    <base64 encoded image>
    front_door_2024-12-31_23:59:59:
    <base64 encoded image>
    ...
    <Your prompt>
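The two naming rules can be illustrated as follows. This is a sketch under assumptions rather than the integration's implementation: `label_for_file` and `label_for_entity` are hypothetical names, and falling back to the entity ID when no friendly_name is present is an assumption made here.

```python
from pathlib import PurePosixPath


def label_for_file(path: str) -> str:
    """Return the file name without its extension."""
    return PurePosixPath(path).stem


def label_for_entity(attributes: dict, entity_id: str) -> str:
    """Return the entity's friendly_name attribute."""
    # Assumption: use the entity ID if friendly_name is missing.
    return attributes.get("friendly_name", entity_id)
```

For instance, `/config/www/tmp/example.jpg` would be labelled `example`, and a camera whose friendly_name is "Front Door" would be labelled `Front Door`.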

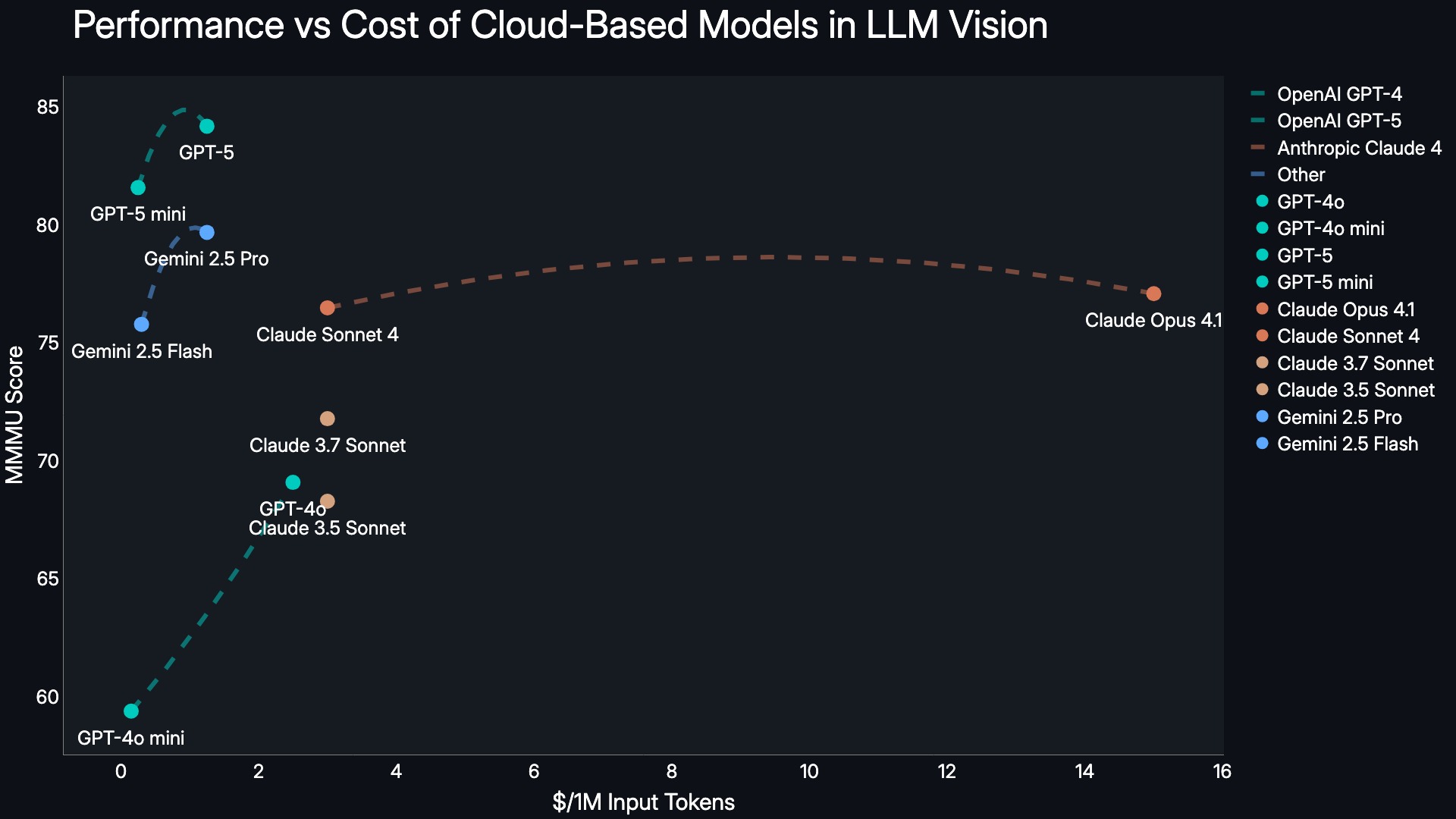

| Model Name | Hosting Options | Description | MMMU¹ Score |
|---|---|---|---|
| GPT-4o | Cloud (OpenAI API key required) | Best all-round model | 69.1 |
| Claude 3 Haiku | Cloud (Anthropic API key required) | Fast model optimized for speed | 50.2 |
| Claude 3 Sonnet | Cloud (Anthropic API key required) | Balance between performance and speed | 53.1 |
| Claude 3 Opus | Cloud (Anthropic API key required) | High-performance model for more accuracy | 59.4 |
| Claude 3.5 Sonnet | Cloud (Anthropic API key required) | Balance between performance and speed | 68.3 |
| LLaVA-1.6 | Self-hosted (LocalAI or Ollama) | Open-source alternative | 43.8 |

Data is based on the MMMU Leaderboard².

Note

Claude 3.5 Sonnet achieves strong performance, comparable to GPT-4o, in the Massive Multi-discipline Multimodal Understanding and Reasoning Benchmark (MMMU¹), while being 40% less expensive. This makes it the go-to model for most use cases.

gpt4vision is compatible with multiple providers, each of which has different models available. Some providers run in the cloud, while others are self-hosted.

To see which model is best for your use case, check the benchmark figure. It visualizes the averaged MMMU¹ scores of available cloud-based models. The higher the score, the better the model performs.

The benchmark will be updated regularly to include new models.

¹ MMMU stands for "Massive Multi-discipline Multimodal Understanding and Reasoning Benchmark". It assesses multimodal capabilities including image understanding.

² The data is based on the MMMU Leaderboard.

To enable debugging, add the following to your configuration.yaml:

    logger:
      logs:
        custom_components.gpt4vision: debug

Important

Bugs: If you encounter any bugs and have followed the instructions carefully, feel free to file a bug report.

Feature Requests: If you have an idea for a feature, create a feature request.