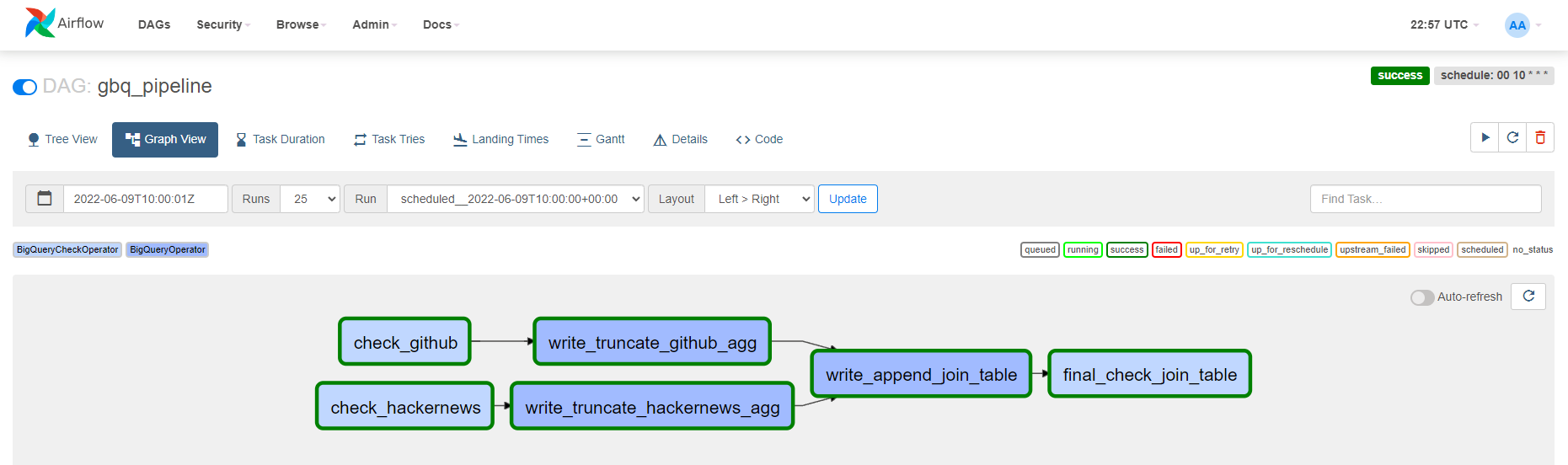

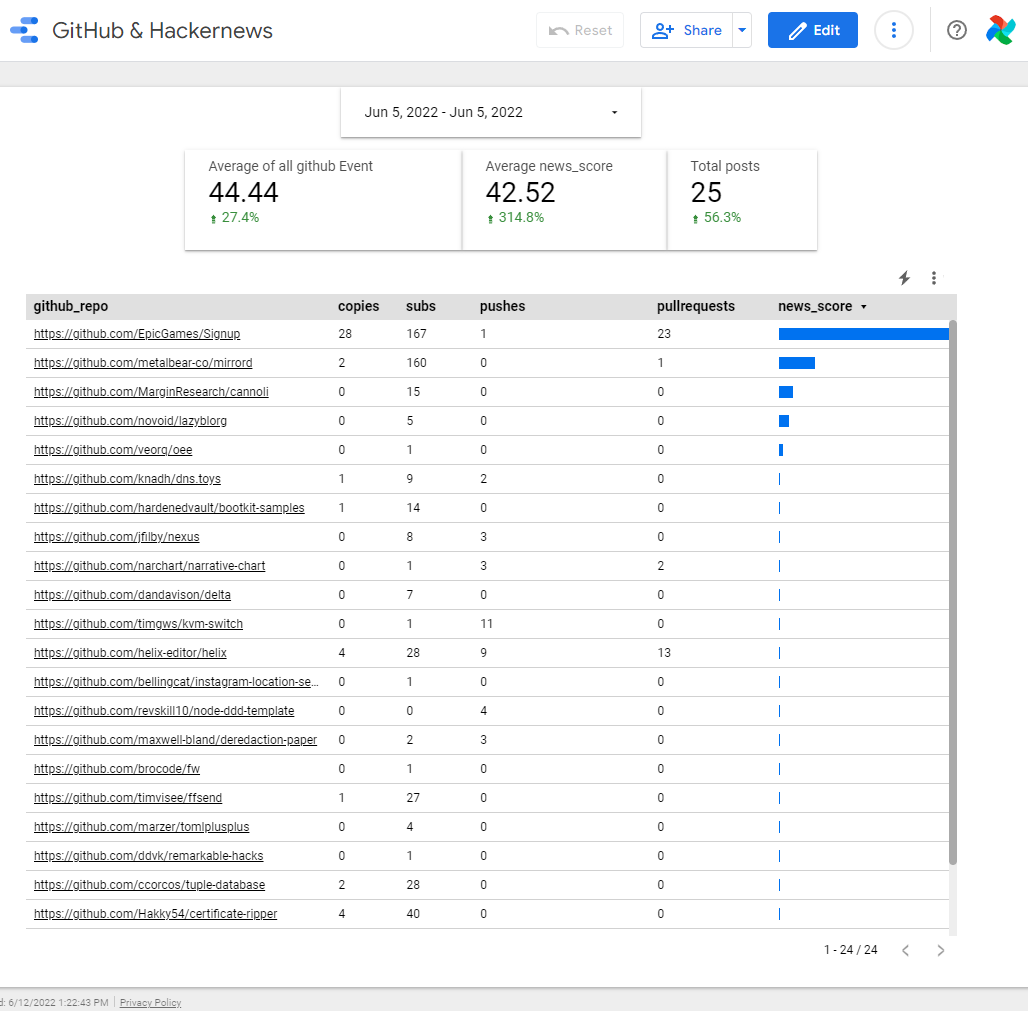

In this project, Airflow is used to build a pipeline that leverages public datasets on BigQuery and updates an aggregated table on a daily basis. That table feeds a dashboard on Data Studio.

- Docker Desktop

- BigQuery account (sandbox)

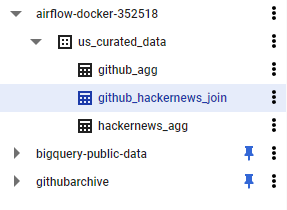

- This project leverages 2 public datasets on BigQuery: `bigquery-public-data.hacker_news` and `githubarchive.day`

- For billing, we can use a sandbox account, which includes 10 GB of storage and 1 TB of query data free of charge each month
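To get a rough sense of how far the sandbox's free query allowance stretches for a daily pipeline, the arithmetic can be sketched as below. The per-run scan size is a made-up figure for illustration, and treating 1 TB as 2^40 bytes is an assumption. The actual bytes processed should be read from the BigQuery query validator or the job statistics.

```python
# Rough budget check for a daily pipeline against the sandbox's free query quota.
# Assumption: 1 TB is treated as 2**40 bytes; the per-run figure below is hypothetical.

FREE_QUERY_BYTES_PER_MONTH = 2**40  # 1 TB of query data per month (sandbox limit)


def monthly_usage_fraction(bytes_per_run: int, runs_per_month: int = 31) -> float:
    """Return the share of the monthly free query quota used by the pipeline."""
    if bytes_per_run < 0 or runs_per_month < 0:
        raise ValueError("bytes_per_run and runs_per_month must be non-negative")
    return (bytes_per_run * runs_per_month) / FREE_QUERY_BYTES_PER_MONTH


def max_runs_per_month(bytes_per_run: int) -> int:
    """Return how many runs of a given scan size fit in the free quota."""
    if bytes_per_run <= 0:
        raise ValueError("bytes_per_run must be positive")
    return FREE_QUERY_BYTES_PER_MONTH // bytes_per_run


if __name__ == "__main__":
    hypothetical_scan = 5 * 2**30  # pretend each daily run scans 5 GB
    print(f"{monthly_usage_fraction(hypothetical_scan):.1%} of the free quota")
    print(f"up to {max_runs_per_month(hypothetical_scan)} runs per month")
```

A query that scans whole public tables on every run can use up the allowance quickly, so restricting each run to a single day's data is usually the safer design.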

- Docker compose filepath: `./docker-compose.yml`

- Airflow image: `apache/airflow:2.0.1` (with Flower off, default examples off)

- Redis image: `redis:latest`

- Postgres image: `postgres:13`

- DAG filepath: `./dags/gbq_pipeline.py`
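A daily DAG typically needs the source table that matches the run's date. As a minimal sketch, assuming the `githubarchive.day` dataset keeps one table per day named `YYYYMMDD` (worth confirming in the BigQuery console), the table reference for a given date could be built as below. The helper names are hypothetical and are not taken from `gbq_pipeline.py`.

```python
from datetime import date, timedelta

# Assumption: githubarchive.day holds one table per day, named YYYYMMDD.
GITHUB_DAILY_DATASET = "githubarchive.day"


def github_daily_table(run_date: date) -> str:
    """Return the fully qualified daily table name for run_date."""
    return f"{GITHUB_DAILY_DATASET}.{run_date:%Y%m%d}"


def previous_day_table(today: date) -> str:
    """Return the table for the day before `today`, which a daily run would usually process."""
    return github_daily_table(today - timedelta(days=1))


if __name__ == "__main__":
    print(github_daily_table(date(2021, 3, 1)))
    print(previous_day_table(date(2021, 3, 1)))
```

Inside an Airflow task, the built-in `{{ ds_nodash }}` template variable yields the same `YYYYMMDD` suffix for the run's logical date, so a templated SQL string may be enough without a helper like this.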

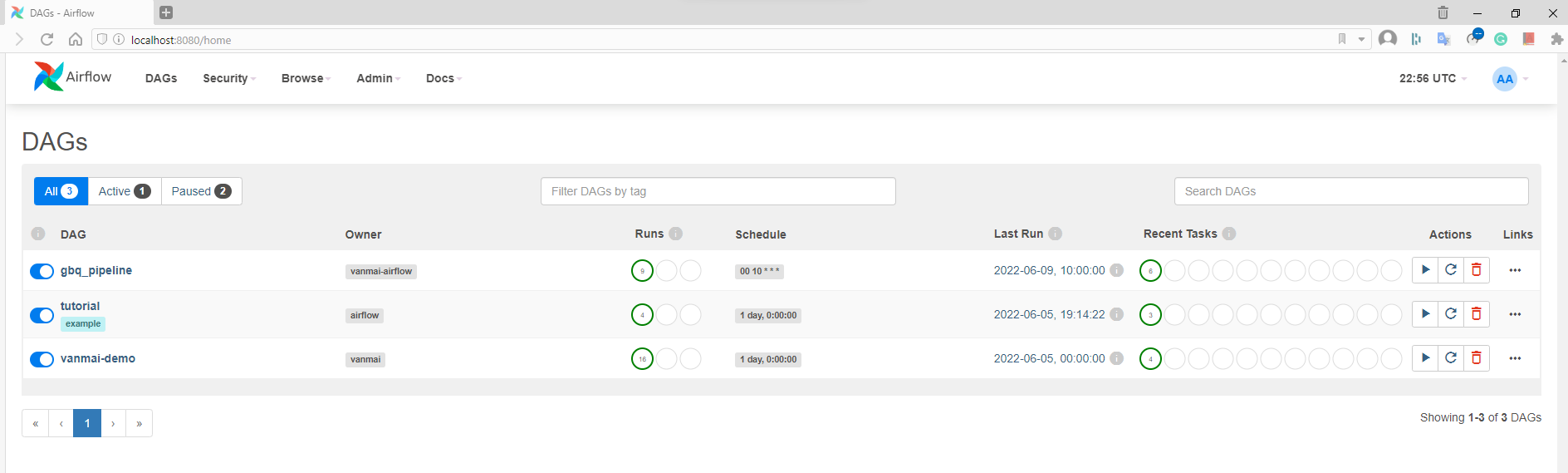

> docker-compose up airflow-init -d

> docker-compose up -d

- Server at: http://localhost:8080 (login & password: `airflow`)
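Before logging in, it can help to confirm that the containers have finished starting. The sketch below polls the webserver's `/health` endpoint, which in Airflow 2.x reports the status of the metadata database and the scheduler. The helper names and retry settings are illustrative assumptions, not part of this project.

```python
import json
import time
import urllib.error
import urllib.request

HEALTH_URL = "http://localhost:8080/health"  # webserver address from this README


def is_healthy(payload: dict) -> bool:
    """Return True when both the metadatabase and the scheduler report 'healthy'."""
    return all(
        payload.get(component, {}).get("status") == "healthy"
        for component in ("metadatabase", "scheduler")
    )


def wait_for_airflow(url: str = HEALTH_URL, attempts: int = 30, delay: float = 5.0) -> bool:
    """Poll the health endpoint until it reports healthy or the attempts run out."""
    for _ in range(attempts):
        try:
            with urllib.request.urlopen(url, timeout=5) as response:
                if is_healthy(json.load(response)):
                    return True
        except (urllib.error.URLError, OSError, ValueError):
            pass  # server not reachable yet, or the response was not valid JSON
        time.sleep(delay)
    return False
```

Calling `wait_for_airflow()` after `docker-compose up -d` should return `True` once both components report healthy, and `False` if they do not within the retry window.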

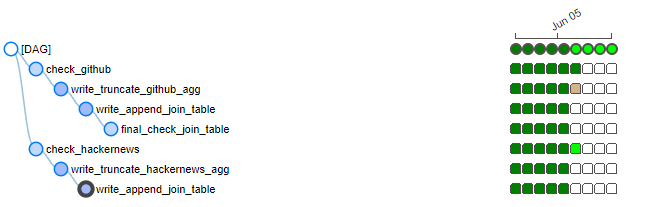

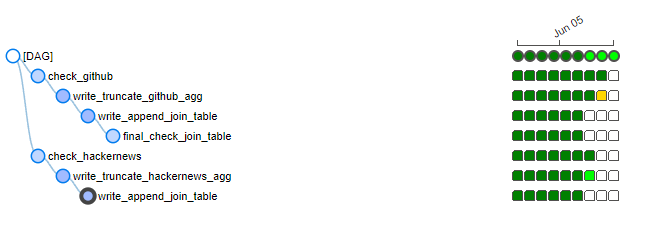

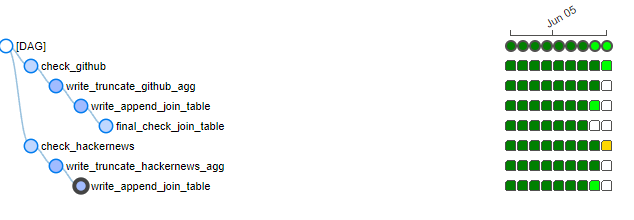

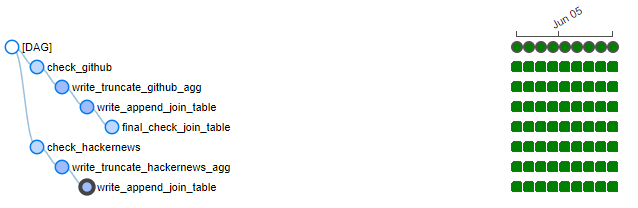

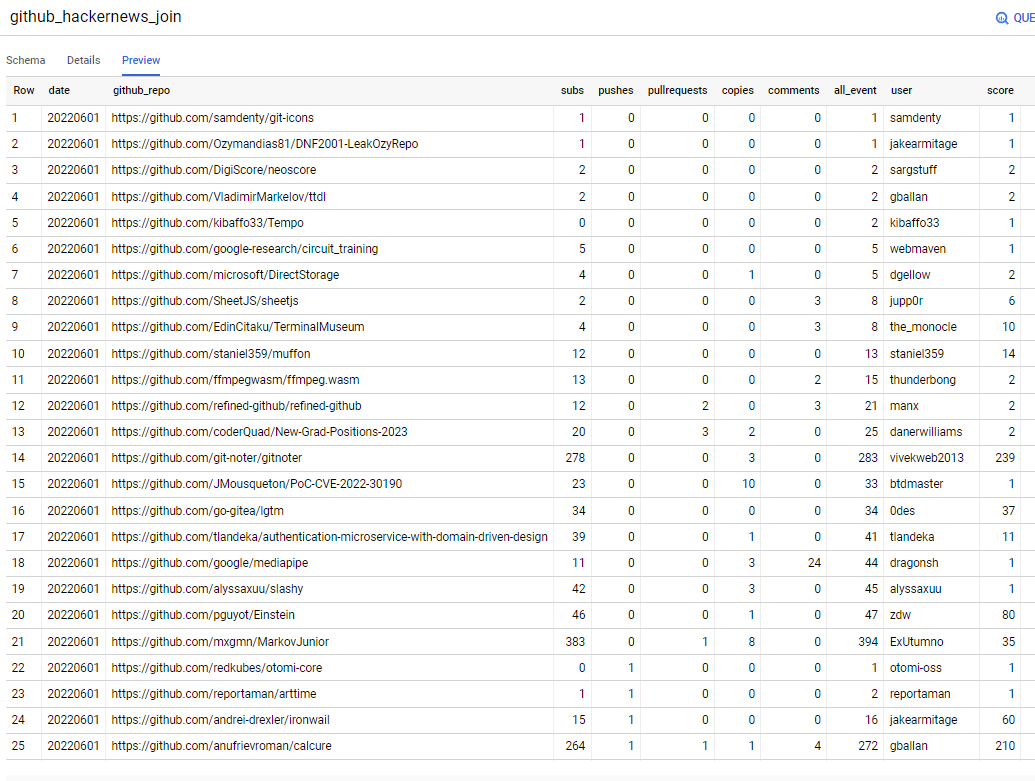

- Tables are created in the GBQ project, and the final join table `github_hackernews_join` is also populated with data