Please read our research paper on this project, published by IEEE, for more information, and cite it if you use this work in your projects.

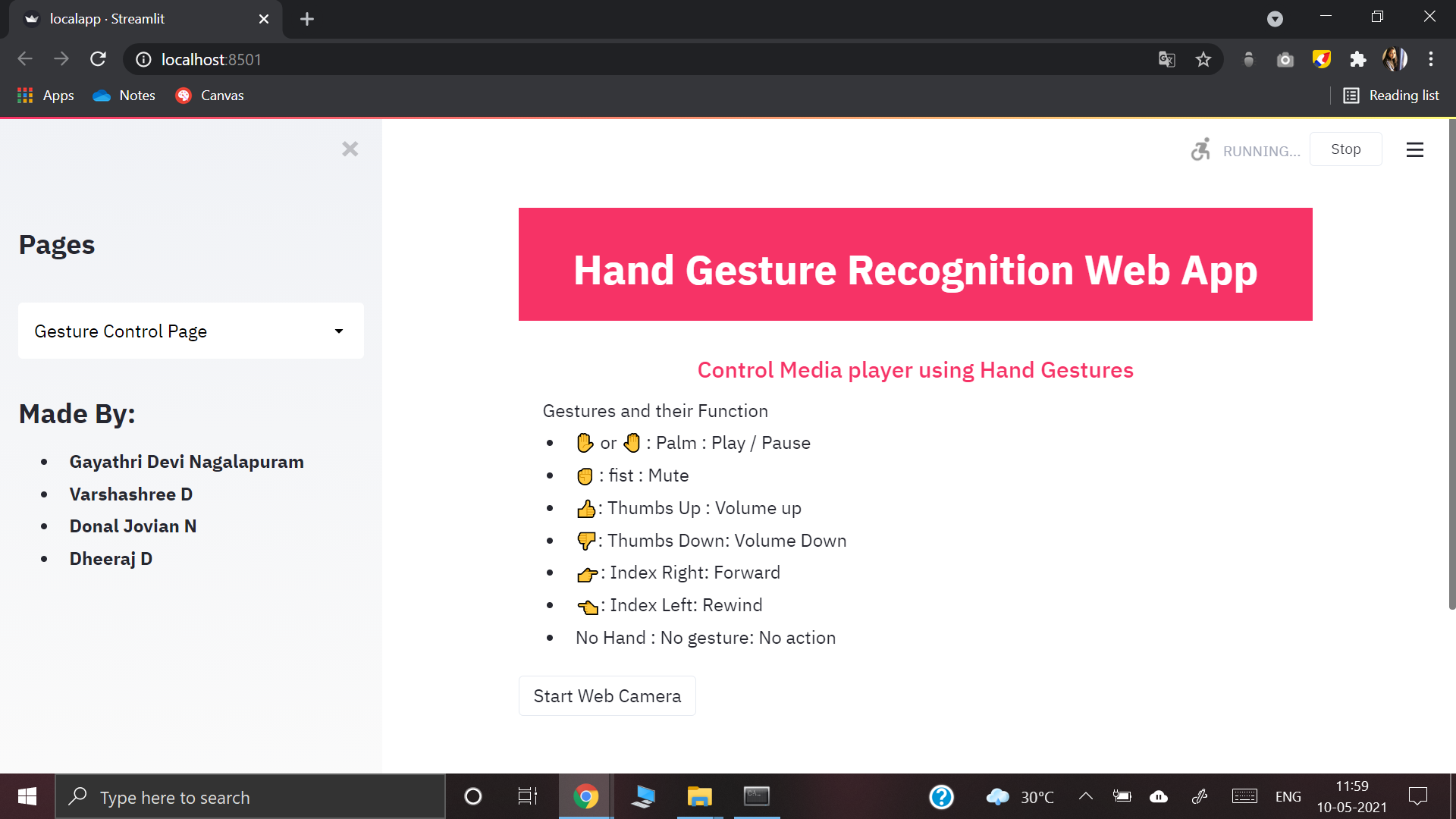

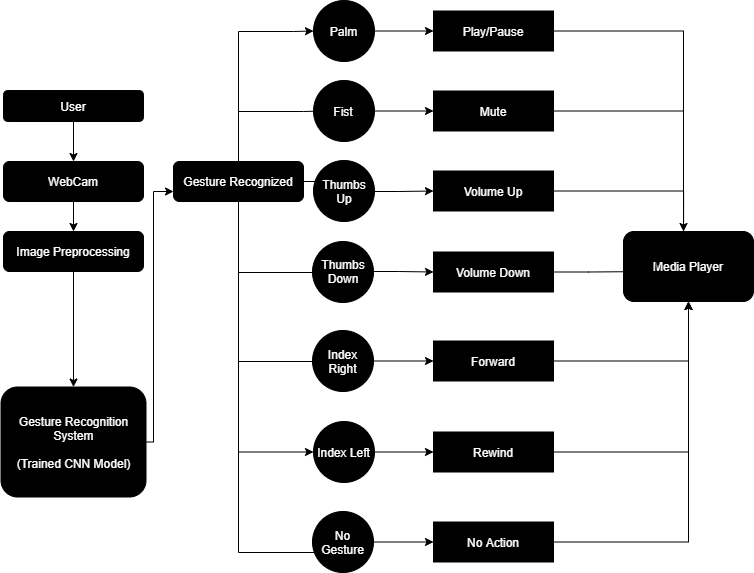

The primary aim is to use the most natural form of interaction, hand gestures, to control the computer. The goal of this project is to create a web application that uses your device's camera to give you touch-free and remote-free control over any media player application, with no special hardware. The gestures are designed to be easy to perform, fast, and efficient, with an immediate response. By letting you control your device from a distance, the system aims to increase your productivity and make everyday use easier and more comfortable.

The proposed system can control the media player from a distance using hand gestures.

- OpenCV is used to collect raw images and convert them to black and white images for dataset creation.

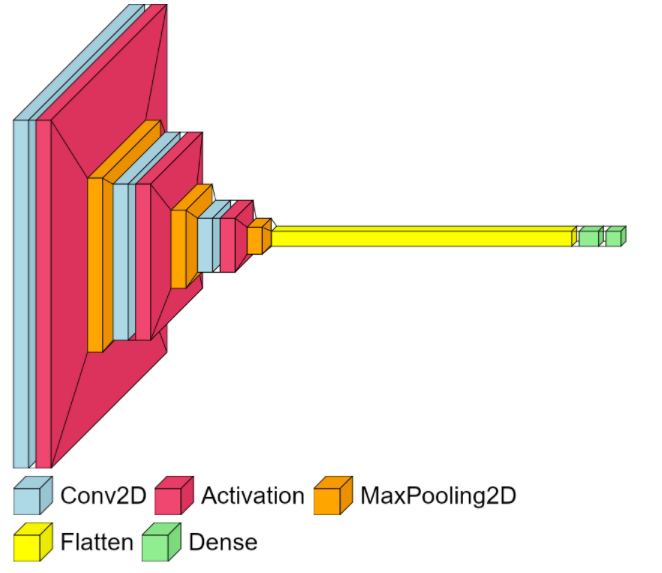

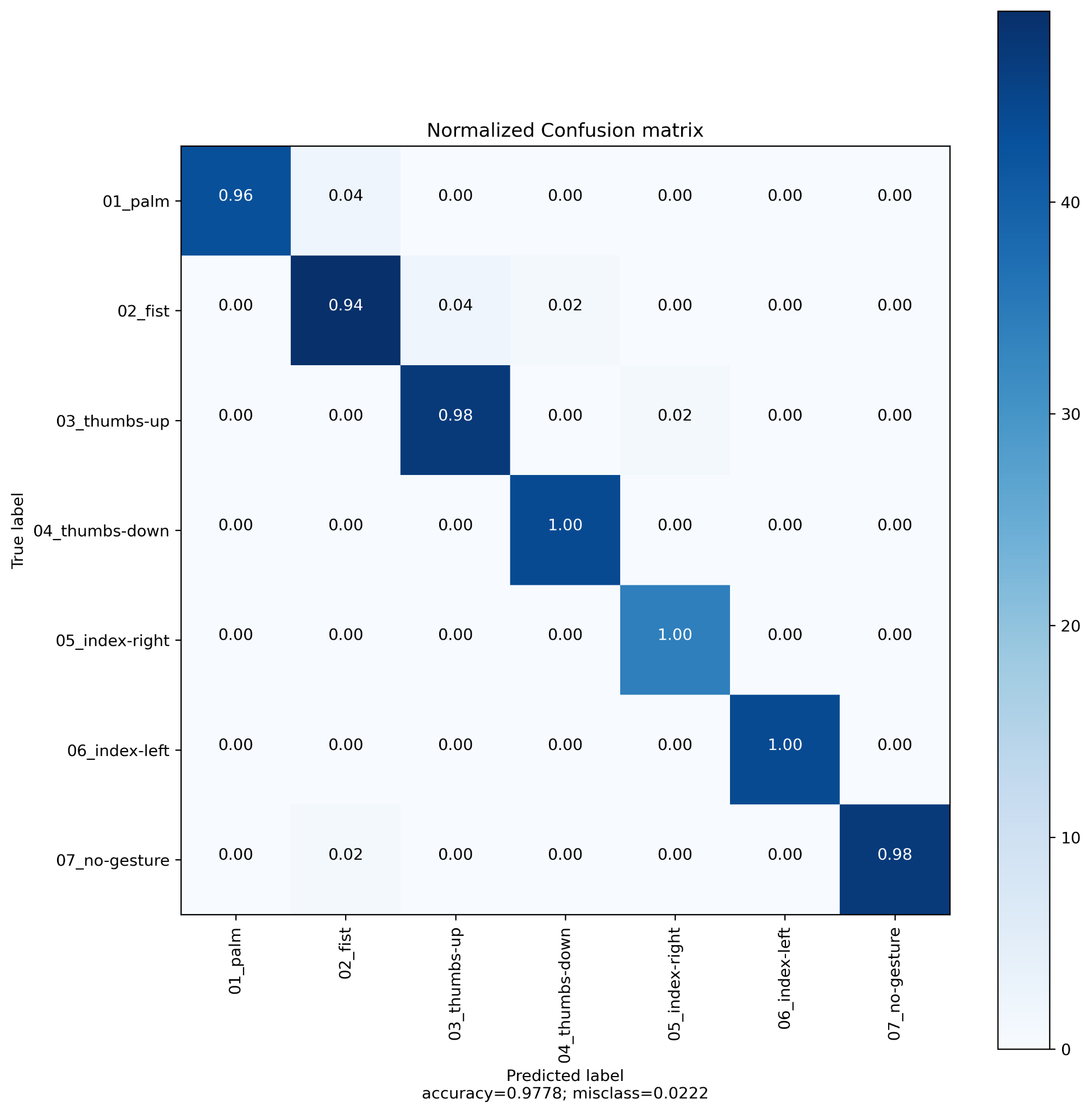
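The black-and-white conversion step can be sketched in NumPy as follows. This is a minimal, hedged illustration, not the project's code: it approximates what `cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)` followed by `cv2.threshold(gray, 127, 255, cv2.THRESH_BINARY)` compute, so it runs without OpenCV installed. The threshold of 127 and the function name `to_black_and_white` are assumptions for illustration.

```python
import numpy as np


def to_black_and_white(frame_bgr: np.ndarray, thresh: int = 127) -> np.ndarray:
    """Convert an HxWx3 uint8 BGR frame to a binary (0/255) uint8 image.

    Approximates cv2.cvtColor(..., cv2.COLOR_BGR2GRAY) followed by
    cv2.threshold(gray, thresh, 255, cv2.THRESH_BINARY).
    Hypothetical helper, not taken from the project.
    """
    if frame_bgr.ndim != 3 or frame_bgr.shape[2] != 3:
        raise ValueError("expected an HxWx3 BGR image")
    # OpenCV frames are stored in B, G, R channel order
    b, g, r = (frame_bgr[..., i].astype(np.float64) for i in range(3))
    # ITU-R BT.601 luma weights, the same ones OpenCV uses for BGR -> GRAY
    gray = np.rint(0.299 * r + 0.587 * g + 0.114 * b).astype(np.uint8)
    # THRESH_BINARY: pixels strictly above the threshold become 255, the rest 0
    return np.where(gray > thresh, 255, 0).astype(np.uint8)


# Usage: a 1x2 "frame" with one white pixel and one dark blue pixel
frame = np.array([[[255, 255, 255], [200, 0, 0]]], dtype=np.uint8)
print(to_black_and_white(frame))  # white pixel -> 255, dark blue pixel -> 0
```

Binarizing the frames keeps the hand silhouette and discards colour, which shrinks the input the classifier has to learn from; in practice the threshold usually needs tuning to the lighting and background.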

- A two-dimensional convolutional neural network (2D CNN) is built for feature extraction and classification.

- The PyAutoGUI library is used to map each hand gesture to a keyboard key press.

- A user interface is created using the Streamlit web framework.

- A webpage containing the source files and a demo is deployed using Streamlit sharing (streamlit.io):

https://share.streamlit.io/gayathri1462/hand-gesture-recognition-streamlit/main/webapp.py
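To illustrate what the 2D CNN's feature-extraction and classification stages compute, here is a toy NumPy forward pass: one convolutional layer with ReLU, 2x2 max pooling, and a dense softmax layer over the seven gesture classes. This is a hedged sketch, not the project's model: the 64x64 input, the eight 3x3 filters, the class order, the random untrained weights, and all function names are assumptions, and a real implementation would typically use a deep-learning framework and trained weights.

```python
import numpy as np

# Class order assumed to follow the gesture list in this README
GESTURES = ["palm", "fist", "thumbs up", "thumbs down",
            "index pointing right", "index pointing left", "no gesture"]


def conv2d(image, kernels):
    """Valid (no padding), stride-1 2D convolution as used in CNN layers.

    image: (H, W); kernels: (K, kh, kw) -> output: (K, H-kh+1, W-kw+1).
    Like most CNN libraries, this is a cross-correlation (kernel not flipped).
    """
    k, kh, kw = kernels.shape
    h, w = image.shape
    out = np.zeros((k, h - kh + 1, w - kw + 1))
    for i in range(h - kh + 1):
        for j in range(w - kw + 1):
            patch = image[i:i + kh, j:j + kw]
            # One dot product per kernel over the same image patch
            out[:, i, j] = np.tensordot(kernels, patch, axes=([1, 2], [0, 1]))
    return out


def max_pool2x2(fmaps):
    """2x2 max pooling with stride 2 over (K, H, W) feature maps."""
    k, h, w = fmaps.shape
    h2, w2 = h // 2, w // 2
    trimmed = fmaps[:, :h2 * 2, :w2 * 2]
    return trimmed.reshape(k, h2, 2, w2, 2).max(axis=(2, 4))


def softmax(z):
    e = np.exp(z - z.max())  # subtract max for numerical stability
    return e / e.sum()


def forward(image, kernels, dense_w, dense_b):
    features = np.maximum(conv2d(image, kernels), 0.0)  # feature extraction + ReLU
    pooled = max_pool2x2(features)                       # downsample feature maps
    logits = dense_w @ pooled.ravel() + dense_b          # classification layer
    return softmax(logits)                               # class probabilities


rng = np.random.default_rng(0)
# Stand-in for a preprocessed 64x64 black-and-white frame (values 0 or 1)
image = (rng.random((64, 64)) > 0.5).astype(float)
kernels = rng.standard_normal((8, 3, 3))  # 8 untrained 3x3 filters
n_features = 8 * 31 * 31                  # 64-3+1 = 62 after conv, 31 after pooling
dense_w = rng.standard_normal((len(GESTURES), n_features)) * 0.01
dense_b = np.zeros(len(GESTURES))

probs = forward(image, kernels, dense_w, dense_b)
# Weights are untrained, so this prediction is arbitrary
print(GESTURES[int(probs.argmax())], probs.round(3))
```

Training would adjust `kernels`, `dense_w`, and `dense_b` so that the filters respond to hand shapes and the highest probability lands on the correct gesture.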

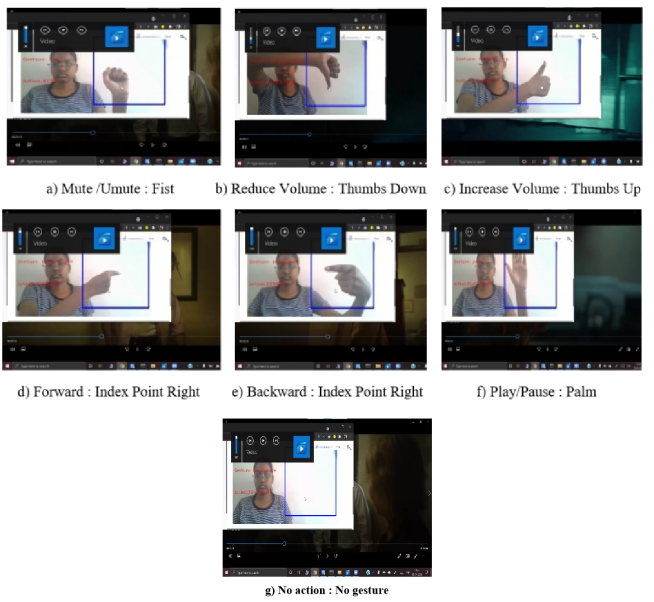

Gestures obtained after data collection and preprocessing (shown left to right): palm, fist, thumbs up, thumbs down, index pointing right, index pointing left, and no gesture.
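The gesture-to-key step can be sketched as a small controller that presses a key only once a prediction has stayed the same for a few consecutive frames, which filters out single-frame misclassifications. This is a hedged sketch: the gesture-to-key mapping, the debounce length, and the class name `GestureKeyMapper` are hypothetical, not the project's actual configuration. `pyautogui.press(key)` is a real PyAutoGUI call, and `'playpause'`, `'volumemute'`, `'volumeup'`, `'volumedown'`, `'nexttrack'`, and `'prevtrack'` are PyAutoGUI key names; whether a given media player reacts to them depends on the player and operating system.

```python
from typing import Callable, Optional

# Hypothetical mapping from predicted gesture label to a PyAutoGUI key name
GESTURE_TO_KEY = {
    "palm": "playpause",
    "fist": "volumemute",
    "thumbs up": "volumeup",
    "thumbs down": "volumedown",
    "index pointing right": "nexttrack",
    "index pointing left": "prevtrack",
    "no gesture": None,  # do nothing
}


class GestureKeyMapper:
    """Presses a key once a gesture has been predicted for `stable_frames` frames in a row."""

    def __init__(self, press: Optional[Callable[[str], None]] = None,
                 stable_frames: int = 5):
        if press is None:
            import pyautogui  # imported lazily so the logic can be tested without a display
            press = pyautogui.press
        self.press = press
        self.stable_frames = stable_frames
        self._last: Optional[str] = None
        self._count = 0

    def update(self, gesture: str) -> Optional[str]:
        """Feed one per-frame prediction; return the key pressed, if any."""
        if gesture == self._last:
            self._count += 1
        else:
            self._last, self._count = gesture, 1
        key = GESTURE_TO_KEY.get(gesture)
        # Fire exactly once, on the frame where the gesture becomes stable
        if key is not None and self._count == self.stable_frames:
            self.press(key)
            return key
        return None


# Usage with a stand-in for pyautogui.press that just records the keys
pressed = []
mapper = GestureKeyMapper(press=pressed.append, stable_frames=3)
for g in ["palm", "palm", "fist", "palm", "palm", "palm", "palm"]:
    mapper.update(g)
print(pressed)  # ['playpause']
```

Holding a gesture triggers its key only once; the user has to change gesture (for example, to "no gesture") before the same key fires again. A volume gesture that should repeat while held would need a different rule, such as firing every `stable_frames` frames.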