This repo is the official implementation of the paper "Historical Astronomical Diagrams Decomposition in Geometric Primitives".

This repo builds on the code for DINO-DETR, the official implementation of the paper "DINO: DETR with Improved DeNoising Anchor Boxes for End-to-End Object Detection".

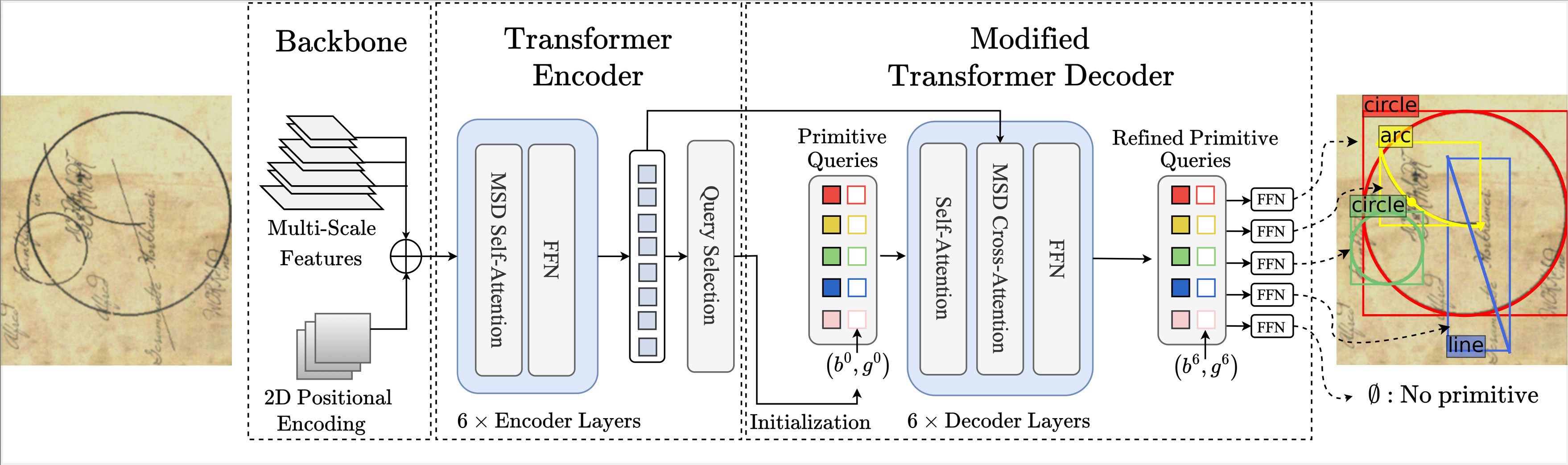

We present a model that modifies DINO-DETR to vectorize historical astronomical diagrams by predicting simple geometric primitives, such as lines, circles, and arcs.
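To make the prediction targets concrete, here is a minimal Python sketch of one possible way to parameterize the three primitive types. The classes `Line`, `Circle` and `Arc`, their fields, and the `length`/`sample` helpers are hypothetical and chosen for illustration only. They are not taken from this repo's code.

```python
import math
from dataclasses import dataclass

# Hypothetical primitive parameterizations, for illustration only.


@dataclass(frozen=True)
class Line:
    x1: float
    y1: float
    x2: float
    y2: float

    def length(self) -> float:
        return math.hypot(self.x2 - self.x1, self.y2 - self.y1)

    def sample(self, n: int) -> list[tuple[float, float]]:
        # n >= 2 evenly spaced points from (x1, y1) to (x2, y2), endpoints included
        ts = [k / (n - 1) for k in range(n)]
        return [(self.x1 + (self.x2 - self.x1) * t, self.y1 + (self.y2 - self.y1) * t) for t in ts]


@dataclass(frozen=True)
class Circle:
    cx: float
    cy: float
    r: float

    def length(self) -> float:
        return 2 * math.pi * self.r

    def sample(self, n: int) -> list[tuple[float, float]]:
        # n evenly spaced points around the full circle
        angles = [2 * math.pi * k / n for k in range(n)]
        return [(self.cx + self.r * math.cos(a), self.cy + self.r * math.sin(a)) for a in angles]


@dataclass(frozen=True)
class Arc:
    cx: float
    cy: float
    r: float
    start: float  # start angle in radians
    end: float  # end angle in radians; the arc sweeps from start towards increasing angles

    def sweep(self) -> float:
        return (self.end - self.start) % (2 * math.pi)

    def length(self) -> float:
        return self.r * self.sweep()

    def sample(self, n: int) -> list[tuple[float, float]]:
        # n >= 2 evenly spaced points from the start angle to the end angle
        angles = [self.start + self.sweep() * k / (n - 1) for k in range(n)]
        return [(self.cx + self.r * math.cos(a), self.cy + self.r * math.sin(a)) for a in angles]
```

For example, `Line(0, 0, 3, 4).length()` returns `5.0`. In this sketch an arc whose end angle equals its start angle has zero sweep, so a full circle is represented with `Circle` instead.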

1. Installation

The model was trained with `python=3.11.0`, `pytorch=2.1.0` and `cuda=11.8`, and builds on the DETR variants DINO/DN/DAB and Deformable-DETR.

- Clone this repository.

- Install PyTorch and Torchvision. If you already have an environment for DINO/DN/DAB-DETR, you can skip this step. Otherwise, follow the instructions at https://pytorch.org/get-started/locally/, for example:

```bash
pip install torch torchvision --index-url https://download.pytorch.org/whl/cu118
```

- Install the other required packages:

```bash
pip install -r requirements.txt
```

- Compile the CUDA operators:

```bash
cd src/models/dino/ops
python setup.py build install
# unit test (all checks should print True); it may raise an out-of-memory error
python test.py
cd ../../../..
```

- Install the local package for synthetic data generation:

```bash
cd synthetic
pip install -e .
cd ..
```

2. Annotated Dataset and Model Checkpoint

Our annotated dataset, along with our main model checkpoints and configuration file, can be found here. Checkpoints and their corresponding configuration files should be stored in a `logs` folder:

```
HDV/
└── logs/
    └── main_model/
        ├── checkpoint0012.pth
        ├── checkpoint0036.pth
        └── config_cfg.py
```

Annotations are in SVG format. We provide helper functions for parsing SVG files in Python if you would like to process a custom annotated dataset. Once the data is downloaded, organize it as follows:

```
HDV/
└── data/
    ├── eida_dataset/
    │   └── images_and_svgs/
    └── custom_dataset/
        └── images_and_svgs/
```

You can then process the ground-truth data for evaluation using:

```bash
bash scripts/process_annotated_data.sh
```

3. Synthetic Dataset

The synthetic dataset generation process requires a resource of text and document backgrounds. We use the resources from docExtractor and diagram-extraction; this resource is part of the datasets used in those two projects. The code for generating the synthetic data is also heavily based on docExtractor.

To get the synthetic resource (backgrounds) for the synthetic dataset, you can launch:

```bash
bash scripts/download_synthetic_resource.sh
```

Alternatively, download the synthetic resource folder here and unzip it in the `data` folder.

1. Evaluate our pretrained models

After downloading and processing the evaluation dataset, you can evaluate the pretrained model as follows. Download a model checkpoint, for example `checkpoint0012.pth`, and launch:

```bash
bash scripts/evaluate_on_eida_final.sh model_name epoch_number
```

For example:

```bash
bash scripts/evaluate_on_eida_final.sh main_model 0012
```

You should get the AP for each type of primitive and for different distance thresholds.
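As a rough illustration of what an AP at a distance threshold measures, the sketch below computes a generic, non-interpolated AP for line segments. Predictions are visited in decreasing score order; each one is greedily matched to the closest unmatched ground-truth segment and counts as a true positive only if their endpoint distance is within the threshold. The segment distance, the greedy matching and the AP variant are all assumptions: this is not necessarily the metric implemented by `scripts/evaluate_on_eida_final.sh`, and circles and arcs would need their own distance functions.

```python
import math
from typing import Sequence

# A segment is (x1, y1, x2, y2); a prediction is (score, segment).
Segment = tuple[float, float, float, float]


def segment_distance(a: Segment, b: Segment) -> float:
    """Largest endpoint distance, using whichever endpoint pairing is closer."""
    direct = max(math.dist(a[:2], b[:2]), math.dist(a[2:], b[2:]))
    swapped = max(math.dist(a[:2], b[2:]), math.dist(a[2:], b[:2]))
    return min(direct, swapped)


def average_precision(
    preds: Sequence[tuple[float, Segment]],
    gts: Sequence[Segment],
    threshold: float,
) -> float:
    """Sum of the precision at each true positive, divided by the number of ground truths."""
    if not gts:
        return 0.0
    matched = [False] * len(gts)
    true_positives = 0
    precision_sum = 0.0
    ranked = sorted(preds, key=lambda p: p[0], reverse=True)
    for rank, (_, seg) in enumerate(ranked, start=1):
        # Closest ground-truth segment that has not been matched yet
        best_j, best_d = -1, math.inf
        for j, gt in enumerate(gts):
            if not matched[j]:
                d = segment_distance(seg, gt)
                if d < best_d:
                    best_j, best_d = j, d
        if best_j >= 0 and best_d <= threshold:
            matched[best_j] = True
            true_positives += 1
            precision_sum += true_positives / rank
    return precision_sum / len(gts)
```

With the ground truth `[(0, 0, 10, 0), (0, 5, 10, 5)]` and the scored predictions `[(0.9, (0, 0, 10, 1)), (0.8, (50, 50, 60, 60)), (0.7, (10, 5, 0, 5))]`, this gives an AP of 5/6 at threshold 2 and 1/6 at threshold 0.5: the stricter threshold rejects the slightly tilted first prediction.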

2. Inference and Visualization

For inference and visualization of results on custom images, you can use the notebook.

1. Training from scratch on synthetic data

To re-train the model from scratch on the synthetic dataset, you can launch:

```bash
bash scripts/train_model.sh config/
```

2. Training on a custom dataset

To train on a custom dataset, the custom dataset annotations should be in a COCO-like format and organized in `data/` as follows:

```
data/
└── custom_dataset_processed/
    ├── annotations/
    ├── train/
    └── val/
```

You should then set the `coco_path` variable to `'custom_dataset_processed'` in the config file.
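If your custom annotations are SVG files, the sketch below illustrates how SVG `<line>` and `<circle>` elements could be turned into a COCO-like structure (`images`, `annotations`, `categories`) using the standard-library `xml.etree.ElementTree`. It does not use the repo's own SVG helpers; the category ids and the `parameters` field are hypothetical, arcs (usually `<path>` elements with `A` commands) are skipped, and unitless or `px` sizes are assumed. Check the exact format expected by the training code before relying on it.

```python
import xml.etree.ElementTree as ET

# Hypothetical categories; the ids and names used by the repo may differ.
CATEGORIES = [{"id": 1, "name": "line"}, {"id": 2, "name": "circle"}]
CATEGORY_IDS = {c["name"]: c["id"] for c in CATEGORIES}


def local_name(tag: str) -> str:
    # Strip an XML namespace, e.g. "{http://www.w3.org/2000/svg}line" -> "line"
    return tag.rsplit("}", 1)[-1]


def svg_to_coco_like(svg_text: str, image_id: int, file_name: str) -> dict:
    root = ET.fromstring(svg_text)
    # Assumes sizes are unitless or given in px
    width = float(root.get("width", "0").removesuffix("px"))
    height = float(root.get("height", "0").removesuffix("px"))
    annotations = []
    for el in root.iter():
        name = local_name(el.tag)
        if name == "line":
            params = [float(el.get(k, "0")) for k in ("x1", "y1", "x2", "y2")]
        elif name == "circle":
            params = [float(el.get(k, "0")) for k in ("cx", "cy", "r")]
        else:
            continue  # the <svg> root, <path> arcs and other elements are skipped
        annotations.append({
            "id": len(annotations) + 1,
            "image_id": image_id,
            "category_id": CATEGORY_IDS[name],
            "parameters": params,  # hypothetical field holding the primitive parameters
        })
    return {
        "images": [{"id": image_id, "file_name": file_name, "width": width, "height": height}],
        "annotations": annotations,
        "categories": CATEGORIES,
    }
```

The returned dictionary can be written with `json.dump` to a file in the `annotations/` folder; merging several images into one file would also require unique annotation ids across images.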

If you find this work useful, please consider citing:

```bibtex
@misc{kalleli2024historical,
      title={Historical Astronomical Diagrams Decomposition in Geometric Primitives},
      author={Syrine Kalleli and Scott Trigg and Ségolène Albouy and Mathieu Husson and Mathieu Aubry},
      year={2024},
      eprint={2403.08721},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
}
```