- ML development concerns experimenting and developing a robust and reproducible model training procedure (training pipeline code), which consists of multiple tasks from data preparation and transformation to model training and evaluation.

- Training operationalization concerns automating the process of packaging, testing, and deploying repeatable and reliable training pipelines.

- Continuous training concerns repeatedly executing the training pipeline in response to the new data or to code changes, or on a schedule, potentially with new training settings.

- Model deployment concerns packaging, testing, and deploying a model to a serving environment for online experimentation and production serving.

- Prediction serving is about serving the model that is deployed in production for inference.

- Continuous monitoring is about monitoring the effectiveness and efficiency of a deployed model.

- Data and model management is a central, cross-cutting function for governing ML artifacts to support auditability, traceability, and compliance. Data and model management can also promote shareability, reusability, and discoverability of ML assets.

The MLOps Workflow is segmented into two modules:

- MLOps pipelines (build, deploy, and monitoring) - the upper layer.

- Build pipeline: Data ingestion, Model training, Model testing, Model packaging, Model registering.

- Data ingestion: This step is a trigger step of the ML Pipeline. It deals with the volume, velocity, veracity, and variety of data by extracting data from various data sources and ingesting the required data for the model training step. Robust data pipelines connected to multiple data sources enable it to perform ETL operations to provide necessary data for ML training purposes.

- Model training: After procuring the required data for ML model training, this step performs the training itself. It has modular scripts or code that perform all the traditional steps in ML, such as data pre-processing, feature engineering, and feature scaling, before training or retraining any model. Grid Search or Random Search can be used for automatic hyperparameter tuning.

- Model testing: In this step, we evaluate the trained model's performance on a separate set of data points named test data, which was split and versioned in the Data ingestion step. The inference of the trained model is evaluated according to metrics selected for the use case. The output of this step is a report on the trained model's performance.

- Model packaging: After the trained model has been tested in the previous step, the model can be serialized into a file or containerized (using Docker) to be exported to the production environment.

- Model registering: The model that was serialized or containerized is registered and stored in the model registry. A registered model is a logical collection or package of one or more files that assemble, represent, and execute your ML model. For example, a classification model can comprise a vectorizer, model weights, and serialized model files, and all of these files can be registered as one single model. After registering the model, it can be downloaded and deployed as needed.

- Deploy. The deploy module operationalizes the ML models developed in the build stage. The deploy pipeline consists of two major components: Application testing, which leads into Production release. The deployment pipeline is enabled by streamlined CI/CD pipelines connecting the development and production environments.

- Application testing: Before deploying an ML model to production, it is vital to test its robustness and performance. In the application testing phase, every trained model is tested in a production-like environment called a test environment, which must replicate the production environment. The ML model under test is deployed as an API or streaming service in the test environment, to deployment targets such as Kubernetes clusters, container instances, scalable virtual machines, or edge devices, depending on the need and use case.

- Drivers: Data, code, artifacts, middleware, and infrastructure - the mid and lower layers.

- Continuous Integration.

- Continuous Delivery.

- Microservices (smaller version of an application that is specialized to serve one particular purpose).

- Infrastructure as Code (IaC, defining what your infrastructure will do and checking that into your source control repository).

- Monitoring and Logging.

- Communication and Collaboration.

- [1] Google MLOps Whitepapers. 2021.

- [2] Book: Engineering MLOPs. Rapidly build, test, and manage production-ready machine learning life cycles at scale. Written by Emmanuel Raj. 2021.

- [3] Book: Data Science on AWS. Implementing End-to-End, Continuous AI and Machine Learning Pipelines. Written by Chris Fregly & Antje Barth. 2021.

- Makefile.

- requirements.txt.

- hello.py - for demonstrating purposes.

- test_hello.py - for demonstrating purposes.

- virtualenv - (Virtual environment).

- Unit

- Integration

- Functional

- End-to-End

- Acceptance

- Performance

- Exploratory

- Github

- Jenkins

- AWS CodeBuild

- Terraform

- CloudFormation

- Other kind of infrastructure...

- Project itself (Flask application, or similar).

- app.yaml.

- cloudbuild.yaml.

- requirements.txt.

- SaaS (Software as a Service).

A good example is Gmail: you do not have to host your own web server that handles mail and serves it to clients. You sign up for an account and get the service. SaaS can take other forms too, and it often covers things like monitoring. Good examples are Splunk, Datadog, or a similar large vendor. They build services so that your company does not have to provide those services itself.

- PaaS (Platform as a Service).

This is about abstracting away the infrastructure so that the application developer can focus on building applications. A good example is Heroku, a common platform as a service that has been around for a long time. Google has GAE (Google App Engine), and Amazon has Elastic Beanstalk. The core idea is that you as a developer pay a little more, and the cloud provider manages everything for you. It is almost a full service.

- IaaS (Infrastructure as a Service).

This is one of the most extensive offerings you can get. You can get resources in bulk and the cost is very low. A good example is Amazon EC2, where you can rent a Virtual Machine (VM). You can even bid on a VM via Spot Instances and get it for, let's say, 10% of the cost of a typical VM. With IaaS, you as the software engineer or cloud architect need to spin up the VMs and set up the networking layer yourself, but at significant cost savings.

- MaaS (Metal as a Service).

Provides the ability to spin up and provision machines yourself, so with MaaS you can physically control the hardware. Many offerings are oriented towards virtualization, which is a core component of most cloud computing, but there are ways to physically control servers. A good example is GPUs: you may have a very specialized multi-GPU setup for, let's say, machine learning or a specialized database, and you may want to control that physical hardware.

- Serverless.

Very similar to PaaS, with one exception: it is built around a function. You could also call it Serverless FaaS, or Function as a Service. It is a different paradigm of developing software, centered on a piece of logic. You put this piece of logic somewhere in the cloud and hook it up to an event. Serverless is a way of abstracting business logic into a unit of work and then applying it wherever you need. Example: AWS Lambda.

- Elasticity.

Elasticity is the ability to expand and contract according to demand. If your company has some web servers and the traffic goes up, you can respond by automatically getting a new VM (or two, or more). Likewise, if the traffic goes down, you can release those VMs back into a resource pool that you do not have to physically purchase. It is about scaling up and down according to demand.

- Availability.

Answers the questions: can you respond to a request? Do you have enough capacity? Availability is often expressed as a percentage, such as 99.9999%.

- Self-service.

Self-service means that you can procure things yourself. You do not need to go through an IT procurement process. You can enter a credit card and launch a VM. It is more expensive, but it removes the need to involve other people.

- Reduced complexity.

Because the cloud provider handles a lot of lower-level details, such as networking or security in a data center, your company has less complexity to deal with. This is not mentioned often, but it is very important. It means that you focus on solving business problems, not on deciding whether there is a security problem in your data center or whether your network is under attack from outside the company. This is one of the comparative advantages of cloud computing.

- Total Cost of Ownership (TCO).

Asks the question: what do you spend over a five-year period on software, IT, and salaries, compared with what you pay for renting the same capacity? Often the total cost of ownership with cloud resources is much lower than with your own physical data center.

- Operational resilience.

Asks the question: can your company withstand a natural disaster? Cloud providers have a great deal of resilience built in, and you get it as part of your relationship with the cloud vendor.

- Business agility.

It is easy to lose sight of the fact that your company does a specific thing, which often has nothing to do with computing infrastructure. By leveraging the growing number of services that cloud providers offer, you can focus your company on building things more quickly and responding to customer needs.

- Write tests

- Setup tests

- Best practices

- `test_email`: Tests the author's email address, which must consist of the given name and surname joined by an underscore. The test starts with the initial author names of the two books and then changes them in lines 14 and 15 of the test code. I recommend not limiting the variations you try in any single test; include some edge cases as well, as mentioned before.

- `test_old`: Tests how old the book is. This should validate the simple formula `2021 - self.year`. The test starts with the initial publication years of the two books and then changes them in lines 27 and 28, as in the first test.

- `test_apply_discount`: Tests the final price after the discount is applied. The default discount is 10%.

- Cloud-native environments. Containers are often the optimal choice because of the advances happening in the cloud, such as container services, where you take a container and deploy it as a service. This is the simplest possible way to deploy an application. There are many cloud-managed Kubernetes services that can take care of things for you.

- Microservices. Microservices solve a problem in an efficient and simple way: one service does one thing. This works really well with containers, because a container lets you build something that is reproducible and fits the microservice workflow.

- DevOps Workflow. One of the best DevOps practices is to reproduce the environment as well as the source code. DevOps lets you programmatically build the container, programmatically build the source code, and deploy it into an ecosystem using IaC (Infrastructure as Code).

- Job Management. When you build and reproduce the same jobs over and over again, a container-based workflow often works very well. That is why many build service companies and SaaS (Software as a Service) companies use containers to manage their workloads.

- Portability and Usability. Usability is another key component, and DevOps and Data Science are two domains where portability can really pay dividends. For example, suppose you are a data scientist with a whole environment that runs some ML workflow, and you give someone access to the source control repository, but nothing in that repository lets them reproduce the runtime. Giving them the source code alone did not really solve the problem for them. A container allows the runtime to be included as well, and this is the key takeaway: when you package the runtime with your project, the project is completely reproducible.

- Container Runtime

- Developer Tools

- Docker App

- Kubernetes

- Private and Public repositories

- Automated build of container images via Github

- Pull and use certified images

- Team and Organizations

- Powerful orchestration service for containers.

- Container health management.

- Build highly available solutions.

- Scale.

- Auto-heal

- Secret and configuration management.

- Step 1. Create a Kubernetes cluster.

- Step 2. Deploy your application (push application to the cluster).

- Step 3. Expose application port. Your internal port will be exposed to web browser or a mobile client.

- Step 4. The application scales up (automatically).

- Step 5. Application update using CD (Continuous Delivery).

DevOps is a practice that improves the velocity of your team. The main key terms of DevOps are listed below.

The whole project should run in an isolated virtual environment, which can be created in the terminal with this command:

```bash
python -m venv env_name
```

Once you have created your virtual environment, you can activate it with this command:

```bash
source env_name/bin/activate
```

Once the virtual environment is active, you can check where the active Python interpreter is located by typing `which python` in your terminal. The reported location must match the root directory of your virtual environment. The next step is to change to the directory where requirements.txt is located and install all required dependencies for the project with this command:

```bash
pip install -r requirements.txt
```
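The interpreter check described above can also be done from inside Python. The sketch below is a minimal illustration, assuming a standard `venv`-style environment; the helper name `in_virtualenv` is hypothetical.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the running interpreter belongs to a virtual environment."""
    # Inside a venv, sys.prefix points at the environment directory,
    # while sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("interpreter:", sys.executable)
    print("inside a virtual environment:", in_virtualenv())
```

Running this with the environment activated and again after `deactivate` should show the two cases.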

For MLOps purposes, the suggested Data Science project structure should consist of the following files:

This structure should be present in any GitHub repository so that the project can be deployed or transferred to any cloud system.

A suggested example of a Makefile is shown below.

```makefile
install:
	pip install --upgrade pip &&\
		pip install -r requirements.txt

format:
	black *.py

lint:
	pylint --disable=R,C hello.py

test:
	python -m pytest -vv --cov=hello test_hello.py

all: install lint test
```

Always use tabs, not spaces, to indent the commands in a Makefile. You can reuse the same Makefile across projects.

To run only the lint step, type `make lint` in your terminal. Likewise, to run the format step, type `make format`.

By running `make all` you can run the whole pipeline in one line.

The recommended requirements.txt content is this.

```text
pylint
pytest
click
black
pytest-cov
```

We do not specify versions of these packages, assuming that we will use the newest ones. This list can be extended with any packages required for your case or project.

An example of test_hello.py could be the following code:

```python
from hello import add


def test_add():
    assert add(1, 2) == 3
```

You can define as many test files as you want, for usual and extreme use cases.
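The test above imports `add` from hello.py, which is not shown here. A minimal sketch of what that module could contain, assuming it only needs to expose an `add` function (the real file may differ, for example by adding a command-line interface):

```python
# hello.py - hypothetical minimal version, written only to satisfy test_hello.py


def add(x: int, y: int) -> int:
    """Return the sum of two numbers."""
    return x + y


if __name__ == "__main__":
    # Quick manual check when the file is run directly.
    print(add(1, 2))
```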

If you want to set up a GitHub Action for your project, click on "set up a workflow yourself" and you will be redirected to your_project/.github/workflows/main.yml.

In this file you tell the system what to do when you push something to the master/main branch. It can look something like the example below.

```yaml
name: Python application test with Github action

on: [push]

jobs:
  build:
    runs-on: ubuntu-latest

    steps:
      - uses: actions/checkout@v2
      - name: Set up Python 3.8
        uses: actions/setup-python@v1
        with:
          python-version: 3.8
      - name: Install dependencies
        run: |
          make install
      - name: Lint with Python
        run: |
          make lint
      - name: Test with Python
        run: |
          make test
      - name: Format code with Python black
        run: |
          make format
```

You can have as many of these workflow files as you want. Each file that runs does exactly what you tell it to. For example, if we later want to set up a Google-based deployment or an Azure-based testing project, we can add a file for each.

You can check the status of your GitHub Actions by clicking on Actions in the top menu of your GitHub repository. On top of that, you can add a special link to your Readme.md file that dynamically shows the status of your selected GitHub Action. To do this, click on Create status badge in your GitHub Action window. This is a useful aspect of a SaaS-based continuous integration (CI) system.

There are the following types of tests:

You can create a new SSH key by typing `ssh-keygen -t rsa` in your terminal with your virtual environment activated. You can then print the public key by typing `cat /home//.ssh/id_rsa.pub`.

Then go to your GitHub account. Go to Settings, then to SSH and GPG keys, type a name for the new key, and paste the public key (starting with ssh-rsa) into the Key section.

Continuous Delivery (CD from here on) means that the code is always in a deployable state, both in terms of the application software and the infrastructure needed to run the code.

Example. As a user, you may be developing code on your laptop and checking it into a source control repository. The master branch, which is the default branch in GitHub, would be hooked up to a particular environment. When you make a change, a build server picks it up. This build server could be one of many different types:

IaC allows you to dynamically update an environment or even create a new one. That environment is mapped directly to a branch in your source control.

For example, you could have a development branch, a staging branch, and a production branch. Each of those branches could automatically create a parallel environment.

You could push your code into the development branch. Then, when you are ready to test a change that would later go to production, you can merge it into the staging branch. The pipeline will automatically lint your code, test it, and deploy it to your staging environment. You could then run a very extensive load test to verify that your web application can scale to 100,000 users.

After that is done, you can merge the change into the production branch, and it goes directly into production.
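The branch-to-environment mapping described above can be sketched in a few lines of Python. This is only an illustration: the branch names follow the text, while `BRANCH_ENVIRONMENTS`, `PIPELINE_STEPS`, `plan_pipeline`, and the step names are hypothetical and do not correspond to any particular build server.

```python
from typing import List

# Hypothetical mapping of source-control branches to deployment environments.
BRANCH_ENVIRONMENTS = {
    "development": "development",
    "staging": "staging",
    "production": "production",
}

# Steps every mapped branch goes through before it reaches its environment.
PIPELINE_STEPS = ["lint", "test", "deploy"]


def plan_pipeline(branch: str) -> List[str]:
    """Return the ordered steps a push to `branch` would trigger."""
    environment = BRANCH_ENVIRONMENTS.get(branch)
    if environment is None:
        # Branches without a mapped environment are checked but not deployed.
        return ["lint", "test"]
    steps = [f"{step}:{environment}" for step in PIPELINE_STEPS]
    if environment == "staging":
        # Staging is where the extensive load test runs before production.
        steps.append("load-test:staging")
    return steps


if __name__ == "__main__":
    for name in ("development", "staging", "production", "feature-x"):
        print(name, "->", plan_pipeline(name))
```

In a real setup this logic lives in the build server configuration rather than in application code; the sketch only makes the mapping explicit.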

There are four main parts of a simple Continuous Delivery (CD) project:

Examples of these files are shown below:

app.yaml. Holds the Google App Engine (or similar) configuration data.

```yaml
runtime: python38
```

cloudbuild.yaml. Holds the Google Cloud Build (or similar) deployment configuration.

```yaml
steps:
- name: "gcr.io/cloud-builders/gcloud"
  args: ["app", "deploy"]
timeout: "1600s"
```

requirements.txt. Holds the package information for the project.

```text
flask
```

app.py. Flask application source code.

```python
from flask import Flask
from flask import jsonify

app = Flask(__name__)


@app.route('/')
def hello():
    """Return a friendly HTTP greeting."""
    print("I am inside hello world")
    return 'Hello World! CD'


@app.route('/echo/<name>')
def echo(name):
    print(f"This was placed in the url: new-{name}")
    val = {"new-name": name}
    return jsonify(val)


if __name__ == '__main__':
    app.run(host='127.0.0.1', port=8080, debug=True)
```

There are many different types of cloud service models. The most popular of these are described in the list below.

Unit testing is a method by which components of source code are tested for robustness with coerced data and usage methods to determine whether the component is fit for the production system.

Quick introduction into Unit Testing, main key points and usage

In this section we will cover the following steps in the domain of unit testing.

If you do not currently test your code, it is definitely something you should learn and start adding to your projects. I guess a lot of you have heard about testing but might not know exactly what it is.

Testing your code is not the most exciting thing to do, but there is a reason that most companies and teams require their code to be tested. If you want to take a responsible role on big projects, you are going to need to know how to test.

The reason is that it will save you a lot of time and headaches in your daily working routine.

When you write good tests for your code, they give you more confidence that your updates and refactoring do not have any unexpected impact and do not break your code.

For example, if you update a function in your project, those changes may have broken several other sections of your code even if the function itself still works.

Good unit tests make sure that everything is still working as it should.

So in this section I will cover the main principles of Python's built-in `unittest` module.

In this example we will work with the file app.py. The code is shown below:

```python
# Function to add numbers
def add(x: int, y: int) -> int:
    return x + y


# Function to subtract numbers
def subtract(x: int, y: int) -> int:
    return x - y


# Function to multiply numbers
def multiply(x: int, y: int) -> int:
    return x * y


# Function to divide numbers
def divide(x: int, y: int) -> float:
    if (x != 0) and (y != 0):
        return x / y
    else:
        return 'Error. Numbers must not equal to zero.'
```

Many of us test code simply by adding print statements and occasionally running the code, for example `print(add(9, 6))`. The output is 15, which looks good. However, testing your code this way is hard to automate and hard to maintain. Also, when testing many different functions, there is no way to see at a glance what failed and what succeeded. That is where unit testing comes in.

For this we will create a new Python file named test_app.py.

Before writing our tests, it is important to know that the name of the test file needs to start with the prefix `test_`. So we name our test file test_app.py.

First, we need to import the `unittest` module. It is part of the Python standard library, so there is nothing to install. Just type `import unittest`.

To keep things simple, app.py is stored in the same directory as test_app.py, so we can simply type `import app`.

Now we need to create some test cases for the code we want to test. To do so, we create a test class that inherits from `unittest.TestCase`. We will call this class `TestCalc` and pass `unittest.TestCase` as its base class.

Now we are able to write our first test as a method. The method name must start with `test_`. This naming convention is required so that the test runner knows which methods represent tests.

First, we can test the `add()` function. Let's write `def test_add`, and, as always for methods in OOP, the first argument needs to be `self`. Within this method we can write a test. Since we inherited from `unittest.TestCase`, we have access to all of its assert methods. We are going to use `assertEqual` to test the `add()` function.

We can store the value in a variable `result`: `result = app.add(9, 6)`.

Then we use the assert method `assertEqual`, which checks whether `result` matches the expected value: `self.assertEqual(result, 15)`. At this stage, we should have this small Python script prepared:

```python
import unittest
import app


class TestCalc(unittest.TestCase):

    def test_add(self):
        result = app.add(9, 6)
        self.assertEqual(result, 15)
```

We can run our test from the terminal. Open it and navigate to your project directory, where the application and test Python files are stored. If you run the code by typing `python test_app.py`, it returns nothing.

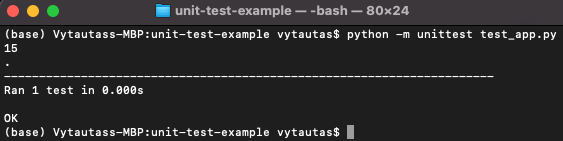

Instead, we need to run `unittest` as the main module and pass in `test_app`. We can do that by typing `python -m unittest test_app.py`. The output is shown on the screen below.

When we run this, the output contains a dot (.), which stands for one test that ran. At the bottom it says that everything passed successfully.

To run the tests directly, add the condition `if __name__ == '__main__':` at the bottom of the file and call `unittest.main()` inside it. Our test code should now look like this:

```python
import unittest
import app


class TestCalc(unittest.TestCase):

    def test_add(self):
        result = app.add(9, 6)
        self.assertEqual(result, 15)


if __name__ == '__main__':
    unittest.main()
```

Now we can run the test code in a simple way: `python test_app.py`. This is how we can run our tests directly.

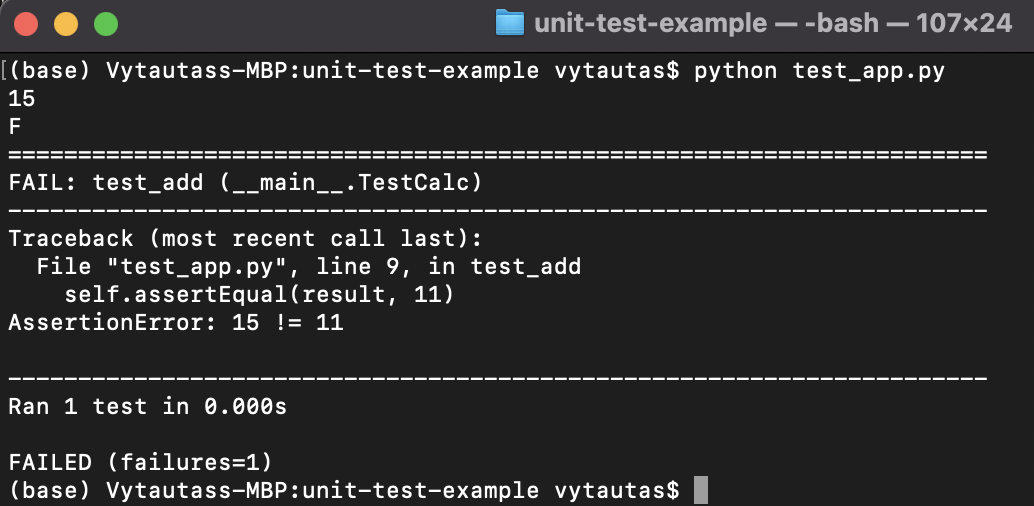

Let's simulate a failing test by changing the expected value from `15` to `11`. First of all, we can see the letter F, which stands for Fail. We can also see that the test fails with an assertion error, which tells us that 15 is not equal to 11. The screen showing this situation is below.
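To see what a failing test looks like without editing test_app.py, the failure can be reproduced in a self-contained script. The sketch below assumes a local copy of `add`; the helper `run_failing_example` and the class `AddWrongExpectation` are hypothetical names. It runs the test programmatically and inspects the result object.

```python
import io
import unittest


def add(x: int, y: int) -> int:
    return x + y


def run_failing_example() -> unittest.TestResult:
    """Run one deliberately failing test and return the collected result."""

    class AddWrongExpectation(unittest.TestCase):
        def test_add(self):
            # Deliberately wrong expected value: 9 + 6 is 15, not 11.
            self.assertEqual(add(9, 6), 11)

    suite = unittest.TestLoader().loadTestsFromTestCase(AddWrongExpectation)
    # Send the runner's report to a buffer so it does not clutter the terminal.
    runner = unittest.TextTestRunner(stream=io.StringIO())
    return runner.run(suite)


if __name__ == "__main__":
    result = run_failing_example()
    print("tests run:", result.testsRun)
    print("failures:", len(result.failures))
    # Each failure is a (test, traceback_text) pair.
    print(result.failures[0][1])
```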

So far we have tested only one scenario: 9 + 6 = 15. In real life, we should expect to face more extreme situations. First, drop the `result` variable and pass the call directly as the first argument of `self.assertEqual`. That makes it easy to add multiple checks. Now we can experiment with more extreme scenarios line after line. The completed code is shown below.

```python
import unittest
import app


class TestCalc(unittest.TestCase):

    def test_add(self):
        self.assertEqual(app.add(9, 6), 15)
        self.assertEqual(app.add(-1, 1), 0)
        self.assertEqual(app.add(100, 1), 101)
        self.assertEqual(app.add(-20, -80), -100)


if __name__ == '__main__':
    unittest.main()
```

After running it, we still see only one test. That is because these four `assert` calls all sit within the single test method `test_add`.
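Because all four assertions live in one test method, the first failing assertion stops the method and the remaining cases are never checked. One option, shown here as a sketch rather than as part of the original test file, is `subTest` from the standard `unittest` module, which reports each failing case separately. The local `add` function, the `CASES` table, and the helper `run_add_cases` are assumptions made to keep the example self-contained.

```python
import io
import unittest


def add(x: int, y: int) -> int:
    return x + y


# (x, y, expected) triples taken from the assertions above.
CASES = [(9, 6, 15), (-1, 1, 0), (100, 1, 101), (-20, -80, -100)]


def run_add_cases() -> unittest.TestResult:
    """Run all cases inside one test method, each in its own subTest."""

    class AddCases(unittest.TestCase):
        def test_add(self):
            for x, y, expected in CASES:
                # A failing subTest is reported on its own and the loop continues.
                with self.subTest(x=x, y=y):
                    self.assertEqual(add(x, y), expected)

    suite = unittest.TestLoader().loadTestsFromTestCase(AddCases)
    return unittest.TextTestRunner(stream=io.StringIO()).run(suite)


if __name__ == "__main__":
    result = run_add_cases()
    print("tests run:", result.testsRun, "successful:", result.wasSuccessful())
```

The runner still counts this as a single test, which matches the behaviour described above.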

In order to add more tests, we need to add more test methods to the `TestCalc` class. So, let's test the rest of the calculation functions from the application code app.py. To begin, copy and paste the `test_add` method once for each function we want to test. In our example that is 3 more times, for the `subtract()`, `multiply()`, and `divide()` functions. Our test code now looks like this:

```python
import unittest
import app


class TestCalc(unittest.TestCase):

    def test_add(self):
        self.assertEqual(app.add(9, 6), 15)
        self.assertEqual(app.add(-1, 1), 0)
        self.assertEqual(app.add(100, 1), 101)
        self.assertEqual(app.add(-20, -80), -100)

    def test_subtract(self):
        self.assertEqual(app.subtract(9, 6), 3)
        self.assertEqual(app.subtract(-1, 1), -2)
        self.assertEqual(app.subtract(100, 1), 99)
        self.assertEqual(app.subtract(-20, -80), 60)

    def test_multiply(self):
        self.assertEqual(app.multiply(3, 2), 6)
        self.assertEqual(app.multiply(-1, 5), -5)
        self.assertEqual(app.multiply(20, 1), 20)
        self.assertEqual(app.multiply(2, 4), 8)

    def test_divide(self):
        self.assertEqual(app.divide(9, 3), 3)
        self.assertEqual(app.divide(-1, 1), -1)
        self.assertEqual(app.divide(4, 4), 1)
        self.assertEqual(app.divide(1000, 100), 10)


if __name__ == '__main__':
    unittest.main()
```

With the `assertRaises` method we will test for a `ValueError` in a simple edge case in the `divide` function. First, to make the example easier to understand, let's change the `divide` function in app.py as shown below:

```python
# Function to divide numbers
def divide(x: int, y: int) -> float:
    if y == 0:
        raise ValueError('We can not divide by zero!')
    else:
        return x / y
```

Here we can see the edge case where the variable `y` equals zero. We know that dividing by zero is not possible, and we want to alert the user in such situations. Let's switch to test_app.py and add one more line of code to the `test_divide()` method.

```python
self.assertRaises(ValueError, app.divide, 10, 0)
```

Here we state that we are testing for a `ValueError` raised by the given function. As parameters to `assertRaises` we pass the function under test (`app.divide`, without any brackets) and then, in order, the values for its arguments `x` and `y`, which are `10` and `0` in our example. This line means that we are testing the situation where we try to compute `10 / 0` and expect a `ValueError`, which tells us that this operation cannot be performed. If this error is raised, the test passes; if not, the test fails.

When writing an `assertRaises` test, we should always take care that the `ValueError` is checked under the correct conditions. Let's simulate what a bad test looks like by changing the last argument of `assertRaises` from `0` to `2`. By doing this, we tell the system that we expect a `ValueError` when computing 10 / 2. We know that this is a valid operation and that no error should be raised in this scenario.

The `unittest` module detects this and returns the message `AssertionError: ValueError not raised by divide`. This indicates that our test is wrong and needs fixing. So, let's change the value from `2` back to `0` in the `assertRaises` call.

Passing the function and all of its arguments on one line is not very convenient. We would like to call the function we are testing normally, instead of passing its arguments separately. The best way to do this is to use a context manager, which lets us handle and check the exception properly. To do so, remove everything after `ValueError` on that line and turn the statement into a context manager, as follows.

```python
with self.assertRaises(ValueError):
    app.divide(10, 0)
```

With this context manager, all our tests still pass. So, I suggest using the context-manager form instead of passing the function and its arguments to `assertRaises`.
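The context-manager form also gives access to the raised exception, which lets a test check the error message as well as the type. The sketch below is self-contained: it repeats the revised `divide` function and runs the checks inside a hypothetical helper, `run_divide_checks`.

```python
import io
import unittest


def divide(x: int, y: int) -> float:
    if y == 0:
        raise ValueError('We can not divide by zero!')
    return x / y


def run_divide_checks() -> unittest.TestResult:
    """Run two exception checks against divide and return the result."""

    class DivideChecks(unittest.TestCase):
        def test_divide_by_zero(self):
            with self.assertRaises(ValueError) as caught:
                divide(10, 0)
            # The caught exception is available after the block exits.
            self.assertEqual(str(caught.exception), 'We can not divide by zero!')

        def test_message_pattern(self):
            # assertRaisesRegex checks the type and the message in one step.
            with self.assertRaisesRegex(ValueError, 'divide by zero'):
                divide(10, 0)

    suite = unittest.TestLoader().loadTestsFromTestCase(DivideChecks)
    return unittest.TextTestRunner(stream=io.StringIO()).run(suite)


if __name__ == "__main__":
    print("all passed:", run_divide_checks().wasSuccessful())
```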

In this part we will use another Python file, book.py, which consists of a single class that describes a book and has just a few simple attributes and methods for demonstration purposes.

```python
class Book:
    '''A sample of Book class which must be tested'''

    discount = 10

    def __init__(self, author, year, cost):
        self.author = author
        self.year = year
        self.cost = cost

    @property
    def author_email(self):
        return f'{self.author}@mail.com'.replace(' ', '_')

    @property
    def old(self):
        return 2021 - self.year

    def apply_discount(self):
        self.cost = int(self.cost * (1 - self.discount/100))
```

This class allows you to create `Book` instances, for which you can set the book's author, year, and cost. With the `Book` class you can calculate the discounted price, get the book's age in years, and get the author's email address.
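Note that the `old` property hard-codes 2021, so its result drifts as time passes. One possible variation, not part of the original book.py, takes the reference year as an argument and falls back to the current year. The function name `book_age` is hypothetical.

```python
import datetime
from typing import Optional


def book_age(published_year: int, current_year: Optional[int] = None) -> int:
    """Return the age of a book in years.

    `current_year` defaults to today's year; passing it explicitly keeps
    tests deterministic.
    """
    if current_year is None:
        current_year = datetime.date.today().year
    return current_year - published_year


if __name__ == "__main__":
    print(book_age(2019, current_year=2021))
    print(book_age(2019))
```

Passing the year explicitly in tests keeps the expected values stable from one year to the next.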

Furthermore we will go with bigger codes due it's complexity without explaining the same key points which are mentioned before in this chapter. So, let's create some test for this file. First of all, create a new file, and name it test_book.py This will be our file which will test our application script book.py.

import unittest

from book import Book

class TestBook(unittest.TestCase):

def test_email(self):

book_1 = Book('Smith Garry', 2019, 60)

book_2 = Book('Kelly Frank', 2016, 45)

self.assertEqual(book_1.author_email, 'Smith_Garry@mail.com')

self.assertEqual(book_2.author_email, 'Kelly_Frank@mail.com')

book_1.author = 'Tony King'

book_2.author = 'Jane Mandy'

self.assertEqual(book_1.author_email, 'Tony_King@mail.com')

self.assertEqual(book_2.author_email, 'Jane_Mandy@mail.com')

def test_old(self):

book_1 = Book('Smith Garry', 2019, 60)

book_2 = Book('Kelly Frank', 2016, 45)

self.assertEqual(book_1.old, 2)

self.assertEqual(book_2.old, 5)

book_1.year = 2017

book_2.year = 2021

self.assertEqual(book_1.old, 4)

self.assertEqual(book_2.old, 0)

def test_apply_discount(self):

book_1 = Book('Smith Garry', 2019, 60)

book_2 = Book('Kelly Frank', 2016, 45)

book_1.apply_discount()

book_2.apply_discount()

self.assertEqual(book_1.cost, 54)

self.assertEqual(book_2.cost, 40)

if __name__ == '__main__':

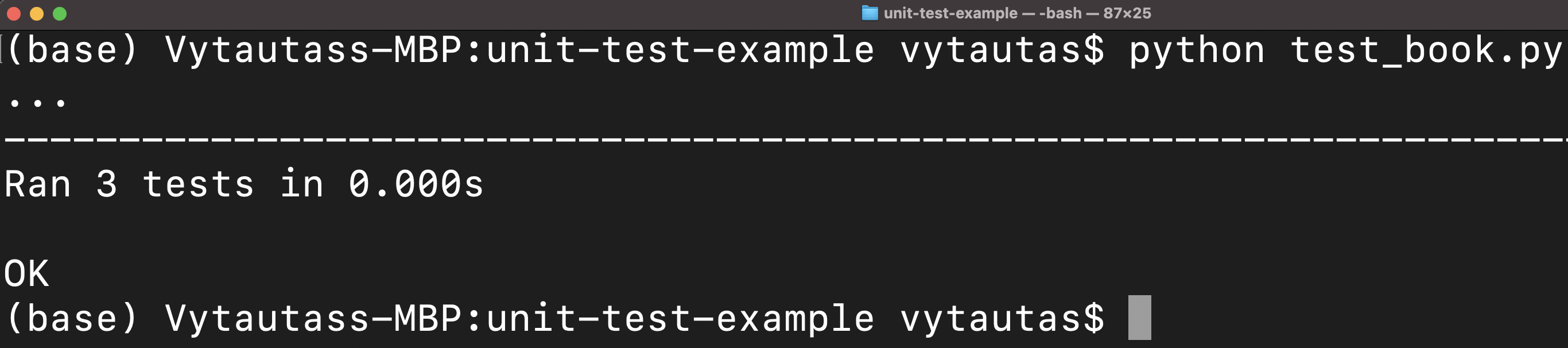

unittest.main()With the script above (test_book.py) we will test script book.py. The test file test_book.py consist of 3 different tests where we will test 2 objects of class

Book():book_1, andbook_2.

As we ran 3 different tests, we surely expect to see the output saying that all our 3 tests passed. The output in your working terminal should be as it is represented below.

Remember, in this example, application file book.py and test_book.py must be in the same directory.
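Each test above creates the same two Book instances. One possible way to remove that repetition is the setUp method of unittest.TestCase, which runs before every test method. The sketch below is an illustration only: it repeats the Book class so that it runs on its own, and the class name TestBookWithSetUp is a hypothetical choice.

```python
import unittest


class Book:
    """Copy of the Book class from book.py, repeated so the sketch runs alone."""

    discount = 10

    def __init__(self, author, year, cost):
        self.author = author
        self.year = year
        self.cost = cost

    @property
    def author_email(self):
        return f'{self.author}@mail.com'.replace(' ', '_')

    @property
    def old(self):
        return 2021 - self.year

    def apply_discount(self):
        self.cost = int(self.cost * (1 - self.discount / 100))


class TestBookWithSetUp(unittest.TestCase):
    def setUp(self):
        # Runs before every test method, so each test gets fresh instances.
        self.book_1 = Book('Smith Garry', 2019, 60)
        self.book_2 = Book('Kelly Frank', 2016, 45)

    def test_email(self):
        self.assertEqual(self.book_1.author_email, 'Smith_Garry@mail.com')
        self.assertEqual(self.book_2.author_email, 'Kelly_Frank@mail.com')

    def test_old(self):
        self.assertEqual(self.book_1.old, 2)
        self.assertEqual(self.book_2.old, 5)

    def test_apply_discount(self):
        self.book_1.apply_discount()
        self.book_2.apply_discount()
        self.assertEqual(self.book_1.cost, 54)
        self.assertEqual(self.book_2.cost, 40)
```

Because setUp builds new objects for every test, a change made in one test (such as applying the discount) cannot leak into another.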

| Containers | Virtual Machines |
|---|---|
| Build cloud-native applications. | Used for monolithic applications. |
| DevOps best practice. | Runs an OS inside another OS. |
| Include the runtime with the code. | |
| Launch time in milliseconds. | Launch time can be anywhere between seconds and minutes. |

Docker format containers

A Dockerfile is a flat file that contains some key directives. An example is shown below:

    FROM python:3.7.3-stretch

    # Working directory
    WORKDIR /app

    # Copy source code to the working directory
    COPY . /app/

    # Install packages from requirements.txt
    RUN pip install --upgrade pip &&\
        pip install --trusted-host pypi.python.org -r requirements.txt

In the file above, we inherit from the Python 3.7.3 base image, set the working directory where the code will be placed, copy the source code into it, and then install the required software inside. This file is where you specify which operating system or runtime to use, and it should live inside your source control project. By contrast, a virtual machine's launch time can be anywhere from seconds to minutes.

There are two main components of Docker: Docker Desktop and Docker Hub. The key points for each of them are listed below.

Docker Desktop. It is a local development workflow. The desktop application contains the container runtime, which allows containers to execute. The Docker app itself orchestrates the local development workflow, including the ability to use Kubernetes, an open source system for managing containerized applications that came out of Google.

Docker Hub. It is a collaboration workflow.

Kubernetes is an orchestration layer for containers (the standard for container orchestration). It is a useful tool for containerized applications.

Many cloud providers support Kubernetes: AWS via Amazon EKS, and Google via Google Kubernetes Engine (GKE). There are many ways to install it. One way is to use Docker Desktop. If you are a more advanced user, you can use the kubectl command together with curl commands.

You can have multiple containers inside a pod, and multiple pods inside a node. The master is where all the control takes place. The Kubernetes API allows these different nodes to be orchestrated. Kubernetes is also a job orchestration system.
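The nesting described above (containers inside pods, pods inside nodes) can be pictured with a toy data model. This is only an illustrative sketch in plain Python: the class names, fields, and image names are hypothetical and are not part of any Kubernetes API.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Container:
    name: str
    image: str


@dataclass
class Pod:
    # A pod groups one or more containers.
    name: str
    containers: List[Container] = field(default_factory=list)


@dataclass
class Node:
    # A node hosts one or more pods.
    name: str
    pods: List[Pod] = field(default_factory=list)

    def container_count(self) -> int:
        """Total number of containers across all pods on this node."""
        return sum(len(pod.containers) for pod in self.pods)


web_pod = Pod("web", [Container("app", "example/web"),
                      Container("sidecar", "example/logger")])
db_pod = Pod("db", [Container("db", "example/db")])
node = Node("node-1", [web_pod, db_pod])

print(node.container_count())  # 3
```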

We can run Kubernetes in Amazon EKS, in Google GKE, or in Azure with its Kubernetes service. If you are using Docker, you can also run it locally.

Here is a simple example in which the web service is exposed on port 80 (mapped as 80:80), alongside the other services that support it.

    version: '3.3'

    services:
      web:
        image: dockersamples/k8s-wordsmith-web
        ports:
          - "80:80"

      words:
        image: dockersamples/k8s-wordsmith-api
        deploy:
          replicas: 5
          endpoint_mode: dnsrr
          resources:
            limits:
              memory: 50M
            reservations:
              memory: 50M

      db:
        image: dockersamples/k8s-wordsmith-db

This could be deployed with the following command, where the final argument (here mystack, a placeholder) is the name of the stack.

    docker stack deploy --namespace my-app --compose-file /path/to/docker-compose.yml mystack

One of the killer features of Kubernetes is the ability to set up autoscaling via the Horizontal Pod Autoscaler.
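To give a feel for how the Horizontal Pod Autoscaler picks a replica count, the sketch below applies the ratio described in the Kubernetes documentation: the desired replica count is the current count multiplied by the ratio of the current metric to the target metric, rounded up. The function name, parameter names, and the default limits are assumptions made for illustration, and the sketch deliberately ignores the tolerance and stabilization behaviour of the real controller.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Replica count from the documented HPA ratio, clamped to [min, max]."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # desired = ceil(current_replicas * current_metric / target_metric)
    desired = math.ceil(current_replicas * (current_metric / target_metric))
    return max(min_replicas, min(max_replicas, desired))


# Average CPU at 90% against a 60% target: scale out from 4 replicas.
print(desired_replicas(4, current_metric=90, target_metric=60))   # 6
# Average CPU at 30% against a 60% target: scale in from 5 replicas.
print(desired_replicas(5, current_metric=30, target_metric=60))   # 3
# The result never exceeds max_replicas.
print(desired_replicas(8, current_metric=120, target_metric=60))  # 10
```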