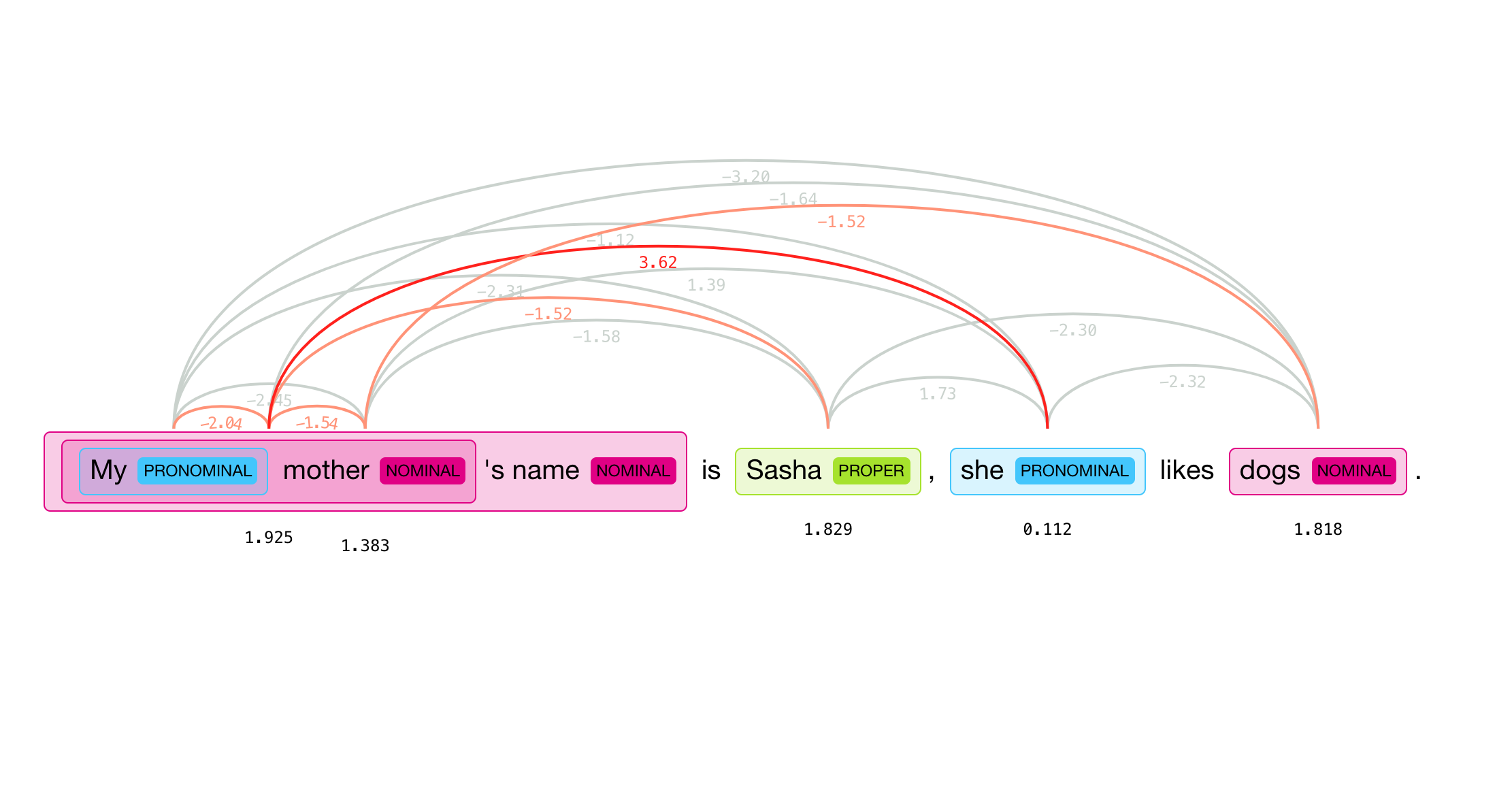

NeuralCoref is a pipeline extension for spaCy 2.0 that annotates and resolves coreference clusters using a neural network. NeuralCoref is production-ready, integrated in spaCy's NLP pipeline and easily extensible to new training datasets.

For a brief introduction to coreference resolution and NeuralCoref, please refer to our blog post. NeuralCoref is written in Python/Cython and comes with pre-trained statistical models for English. It can also be trained for other languages. NeuralCoref is accompanied by a visualization client, NeuralCoref-Viz, a web interface powered by a REST server that can be tried online. NeuralCoref is released under the MIT license.

✨ Version 3.0 out now! 100x faster and tightly integrated in the spaCy pipeline.

Installing a pre-trained model with pip is the easiest way to install NeuralCoref if you don't need to train the model on a new language or dataset.

| | |
| --- | --- |
| Operating system | macOS / OS X, Linux, Windows (Cygwin, MinGW, Visual Studio) |
| Python version | CPython 2.7, 3.4+. Only 64 bit. |

NeuralCoref is currently available in English with three models of increasing accuracy that mirror spaCy's English models. The larger the model, the higher the accuracy:

| Model Name | MODEL_URL | Size | Description |
| --- | --- | --- | --- |
| en_coref_sm | en_coref_sm | 78 MB | A small English model based on spaCy en_core_web_sm-2.0.0 |
| en_coref_md | en_coref_md | 161 MB | [Recommended] A medium English model based on spaCy en_core_web_md-2.0.0 |
| en_coref_lg | en_coref_lg | 893 MB | A large English model based on spaCy en_core_web_lg-2.0.0 |

To install a model, copy the MODEL_URL of the model you are interested in from the above table and type:

    pip install MODEL_URL

When using pip, it is generally recommended to install packages in a virtual environment to avoid modifying system state:

    python -m venv .env
    source .env/bin/activate
    pip install MODEL_URL

To install NeuralCoref from source, clone the repo and install using pip:

    git clone https://github.com/huggingface/neuralcoref.git
    cd neuralcoref
    pip install -e .

NeuralCoref is integrated as a spaCy Pipeline Extension.

To load NeuralCoref, simply load the model you downloaded above using spacy.load() with the model's name (e.g. en_coref_md) and process your text as usual with spaCy.

NeuralCoref will resolve the coreferences and annotate them as extension attributes in the spaCy Doc, Span and Token objects under the ._. dictionary.

Here is a simple example before we dive into greater detail.

    import spacy

    # Load the NeuralCoref model by name, like any other spaCy model
    nlp = spacy.load('en_coref_md')
    doc = nlp(u'My sister has a dog. She loves him.')

    doc._.has_coref        # whether any coreference was resolved
    doc._.coref_clusters   # list of Cluster objects found in the doc

You can also import a NeuralCoref model directly and call its load() method:

    import en_coref_md

    nlp = en_coref_md.load()
    doc = nlp(u'My sister has a dog. She loves him.')

    doc._.has_coref
    doc._.coref_clusters

| Attribute | Type | Description |
| --- | --- | --- |
| doc._.has_coref | boolean | Whether any coreference has been resolved in the Doc |
| doc._.coref_clusters | list of Cluster | All the clusters of coreferring mentions in the doc |
| doc._.coref_resolved | unicode | Unicode representation of the doc where each coreferring mention is replaced by the main mention in the associated cluster |
| span._.is_coref | boolean | Whether the span has at least one coreferring mention |
| span._.coref_cluster | Cluster | Cluster of mentions that corefer with the span |
| token._.in_coref | boolean | Whether the token is inside at least one coreferring mention |
| token._.coref_clusters | list of Cluster | All the clusters of coreferring mentions that contain the token |
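To make the meaning of doc._.coref_resolved concrete, here is a minimal pure-Python sketch of the substitution it describes: every mention is replaced by the main mention of its cluster. This is not NeuralCoref's implementation. The function name resolve_coreferences, the token list and the cluster spans are assumptions chosen for illustration, and the clusters a real model returns may differ.

```python
def resolve_coreferences(tokens, clusters):
    """Replace every non-main mention with the text of its cluster's main mention.

    tokens: list of token strings.
    clusters: list of (main, mentions) pairs, where main and each mention are
        half-open (start, end) token ranges, like spaCy's span.start / span.end.
    """
    # Map the start index of each non-main mention to (end index, replacement text).
    replacements = {}
    for main, mentions in clusters:
        main_text = " ".join(tokens[main[0]:main[1]])
        for mention in mentions:
            if mention != main:
                replacements[mention[0]] = (mention[1], main_text)

    # Walk the tokens, skipping over replaced mentions.
    resolved, i = [], 0
    while i < len(tokens):
        if i in replacements:
            end, main_text = replacements[i]
            resolved.append(main_text)
            i = end
        else:
            resolved.append(tokens[i])
            i += 1
    return " ".join(resolved)


# Hypothetical tokens and clusters for the example sentence (assumed, not model output).
tokens = ["My", "sister", "has", "a", "dog", ".", "She", "loves", "him", "."]
clusters = [
    ((0, 2), [(0, 2), (6, 7)]),  # "She" refers to "My sister"
    ((3, 5), [(3, 5), (8, 9)]),  # "him" refers to "a dog"
]
resolved = resolve_coreferences(tokens, clusters)
print(resolved)
```

The sketch joins tokens with single spaces, so punctuation spacing differs from what spaCy would produce from the original whitespace.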

The Cluster class is a small container for a cluster of mentions.

A Cluster contains 3 attributes:

| Attribute | Type | Description |
| --- | --- | --- |
| cluster.i | int | Index of the cluster in the Doc |
| cluster.main | Span | Span of the most representative mention in the cluster |
| cluster.mentions | list of Span | All the mentions in the cluster |

The Cluster class also implements a few Python special methods to simplify navigation inside a cluster:

| Method | Output | Description |
| --- | --- | --- |
| Cluster.__getitem__ | returns Span | Access a mention in the cluster |
| Cluster.__iter__ | yields Span | Iterate over mentions in the cluster |
| Cluster.__len__ | returns int | Number of mentions in the cluster |
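The practical effect of these three methods is that a cluster can be indexed, looped over and measured like a list of its mentions. The class below is a hypothetical stand-in written only to illustrate that behaviour. It is not NeuralCoref's Cluster class, the name MentionCluster is an assumption, and plain strings stand in for spaCy Span objects.

```python
class MentionCluster:
    """Illustrative stand-in for a coreference cluster (not NeuralCoref's class)."""

    def __init__(self, i, main, mentions):
        self.i = i                # index of the cluster in the document
        self.main = main          # most representative mention
        self.mentions = mentions  # all mentions in the cluster

    def __getitem__(self, index):
        # cluster[k] accesses the k-th mention
        return self.mentions[index]

    def __iter__(self):
        # "for mention in cluster" yields each mention in order
        for mention in self.mentions:
            yield mention

    def __len__(self):
        # len(cluster) is the number of mentions
        return len(self.mentions)


# Strings stand in for Span objects in this sketch.
cluster = MentionCluster(i=0, main="My sister", mentions=["My sister", "She"])

print(len(cluster))   # number of mentions
print(cluster[-1])    # last mention
for mention in cluster:
    print(mention)
```

With a real model, the same three operations would apply to each element of doc._.coref_clusters, with Span objects in place of the strings.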

Here are a few examples of how you can navigate the coreference cluster chains and display clusters and mentions.

    import spacy

    nlp = spacy.load('en_coref_sm')
    doc = nlp(u'My sister has a dog. She loves him')

    # Navigate from the document to clusters and mentions
    doc._.coref_clusters
    doc._.coref_clusters[1].mentions
    doc._.coref_clusters[1].mentions[-1]
    doc._.coref_clusters[1].mentions[-1]._.coref_cluster.main

    # Navigate from a single token
    token = doc[-1]
    token._.in_coref
    token._.coref_clusters

    # Navigate from a span
    span = doc[-1:]
    span._.is_coref
    span._.coref_cluster.main
    span._.coref_cluster.main._.coref_cluster

Important: NeuralCoref mentions are spaCy Span objects, which means you can access all the usual Span attributes, such as span.start (index of the first token of the span in the document) and span.end (index of the first token after the span in the document).

For example, doc._.coref_clusters[1].mentions[-1].start gives you the index of the first token of the last mention of the second coreference cluster in the document.
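Because mentions expose start, end and text like any other Span, a cluster can be flattened into plain Python data for logging or serialization. The helper below is a sketch under stated assumptions: cluster_to_dict is a hypothetical name, and the SimpleNamespace objects are stand-ins so the snippet runs without a model installed. It reads only cluster.main, cluster.mentions, span.start, span.end and span.text.

```python
import json
from types import SimpleNamespace


def cluster_to_dict(cluster):
    """Convert a cluster into a JSON-serializable dict of token offsets and text."""
    return {
        "main": cluster.main.text,
        "mentions": [
            # start is the first token of the mention, end is the first token after it
            {"start": m.start, "end": m.end, "text": m.text}
            for m in cluster.mentions
        ],
    }


# Stand-ins for Span and Cluster objects (assumed values, not model output).
main = SimpleNamespace(start=3, end=5, text="a dog")
last = SimpleNamespace(start=8, end=9, text="him")
fake_cluster = SimpleNamespace(main=main, mentions=[main, last])

payload = cluster_to_dict(fake_cluster)
print(json.dumps(payload, indent=2))
```

With a loaded model, the same helper could be applied to each element of doc._.coref_clusters.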

A simple server script that integrates NeuralCoref in a REST API is provided in examples/server.py.
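For readers who want a feel for the general shape of such an integration, here is a minimal sketch using only the Python standard library. It is not the content of examples/server.py. The function make_coref_app, the text query parameter and the response fields are all assumptions made for illustration, and fake_nlp is a stand-in so the snippet runs without a model installed.

```python
import json
from types import SimpleNamespace
from urllib.parse import parse_qs


def make_coref_app(nlp):
    """Build a WSGI app that resolves coreferences in the `text` query parameter."""

    def app(environ, start_response):
        query = parse_qs(environ.get("QUERY_STRING", ""))
        text = query.get("text", [""])[0]
        doc = nlp(text)

        # Only the documented extension attributes are read from the doc.
        body = {"has_coref": bool(doc._.has_coref)}
        if doc._.has_coref:
            body["resolved"] = doc._.coref_resolved

        payload = json.dumps(body).encode("utf-8")
        start_response("200 OK", [
            ("Content-Type", "application/json"),
            ("Content-Length", str(len(payload))),
        ])
        return [payload]

    return app


def fake_nlp(text):
    """Stand-in for a loaded model; returns an object shaped like a processed Doc."""
    extensions = SimpleNamespace(
        has_coref=True,
        coref_resolved=text.replace("She", "My sister"),
    )
    return SimpleNamespace(_=extensions)


# Call the app directly, as a WSGI server would.
app = make_coref_app(fake_nlp)
statuses = []
response = app(
    {"QUERY_STRING": "text=My+sister+has+a+dog.+She+loves+him."},
    lambda status, headers: statuses.append(status),
)
result = json.loads(response[0].decode("utf-8"))
print(result)
```

To serve real requests, the same factory could be given a loaded model, for example spacy.load('en_coref_md'), and the resulting app passed to wsgiref.simple_server.make_server. Loading the model once at startup and injecting it keeps request handling cheap.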

There are many other ways you can manage and deploy NeuralCoref. Some examples can be found in spaCy Universe.

If you want to retrain the model or train it on another language, see our detailed training instructions as well as our detailed blog post.

The training code will soon benefit from the same Cython refactoring as the inference code.