- I’m a developer, devops, craftsman, factotum

- I 💓 GNU/Linux 🐧, Docker 🐳 & GNU/Emacs 🐪

- I 💓 Free-software !

- I 💓 Go, Java, Python and much more

- I maintain, amongst other projects, docker/docker 🐳, docker/libcompose 🐙 and containous/traefik 🐹

- I work for @Docker in the #coreteam

- And I 💓 unicode, 🚴 & 🚶

- Orchestration 🕸, wat ? why ?

- Your choice ! 🐣

- Docker 🐳

- Docker Swarm 🐝, the old way, the new way…

- Demo, demo, demo 🌠

an inherent intelligence or even implicitly autonomic control

[…]

the effect of automation or systems deploying elements of control theory.

Orchestration happens on cluster(s)

a set of loosely or tightly connected computers that work together so that, in many respects, they can be viewed as a single system.

Cluster

- You might not have a choice 👼

- Enhance availability

- Scale easily

- Distribute the load

- Manage resources more easily

Orchestration

- Continuous deployment

- Ease the deployment and maintenance

- Efficiency, Agility

- « You want to deploy, not to think how to deploy »

- placement

- scheduling

- deployment

- rules & constraints

- load-balancing

- update (rolling updates, …)

- self-healing (health-monitoring, re-schedule)

- discovery

- secure

- provisioning

- declarative (most of the time)

« For better or for worse » 💍

- Containers based

- Swarm (docker)

- Kubernetes (google)

- Rancher (rancher)

- Fleet (coreos)

- General purpose

- Nomad (hashicorp)

- Mesos (apache, mesosphere)

- U.F.O.

- Amazon web services (ECS, EC2…)

- Google Cloud (GCE)

- Service discovery

- etcd

- consul

- zookeeper

- Provisioning

- terraform (hashicorp)

- infrakit (docker)

- chef, puppet, ansible, saltstack

- Monitor

- prometheus

Docker is an open platform for developers and sysadmins to build, ship, and run distributed applications.

– docker.com

Docker is an open-source project that automates deployment of applications inside software containers.

– wikipedia.org

- Company: Docker Inc.

- Platform: dockerd (engine), docker (cli)

- Tools: compose, swarmkit, containerd

Goods transportation with container

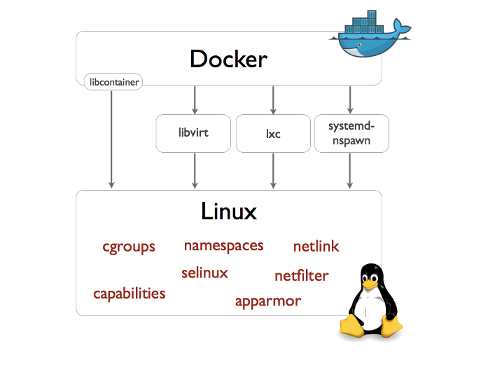

Standing on the shoulders of giants

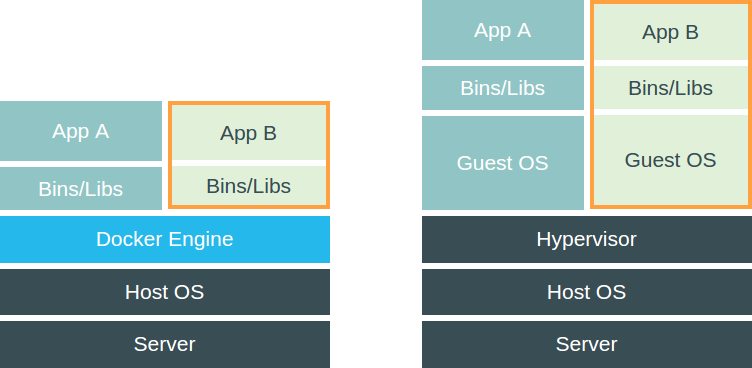

Quick note : Repeat after me Containers ARE NOT VMs !

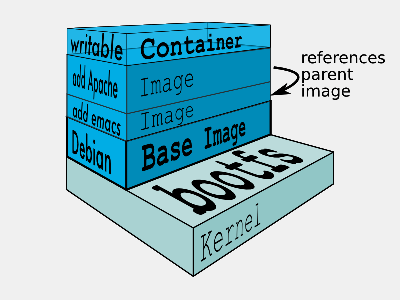

- Registry (Distributing)

- image repository

- Images (Building)

- template

- read-only

- Containers (Runtime):

- based on an image

- has a state

# Run an image…

$ docker run -ti --rm ubuntu:14.04 /bin/bash

# … or something more useful

$ docker run -d -p 8080:8080 -p 80:8000 \

-v $PWD/traefik.toml:/traefik.toml \

emilevauge/traefik

# … or totally crazy

$ docker run -d -v /tmp/.X11-unix:/tmp/.X11 \

-e DISPLAY=unix$DISPLAY \

# …

--name spotify vdemeester/spotify

# What is running ?

$ docker ps

A large number of insects, especially when in motion or (for bees) migrating to a new colony.

Swarm is the name of (almost) 2 projects @Docker:

- docker/swarm, i.e. Swarm v1

- swarm mode and the docker/swarmkit project

These projects could have been named: pod, gam, herd (group of whales 🐳), but that’s another story 👼

Docker Swarm provides native clustering capabilities to turn a group of Docker engines into a single, virtual Docker Engine.

– docker.com

- Same Docker API, with pros and cons

- Requires an external key/value store (etcd, consul, …)

- No service model (scaling, updates, discovery, load-balancing not built-in)

- Hard to setup (security, …)

The feedback acquired helped us understand its limits and build something better.

A toolkit for orchestrating distributed systems at any scale. It includes primitives for node discovery, raft-based consensus, task scheduling and more.

– github.com/docker/swarmkit

The swarm mode is docker/swarmkit integrated in the docker engine, starting from 1.12.

- Enhance the docker API

- No need for an external key/value store

- Secure by default (automatic TLS keying and signing)

- Easy to setup

docker/swarmkit can work without docker (with different runtimes)

- Declarative service model, Scaling

- Desired state reconciliation: constantly monitors the cluster state and reconciles any differences between the actual state and your expressed desired state

- Multi-host networking

- Service discovery: each service in the swarm gets a unique DNS name, and requests are load-balanced across its running containers

- Load balancing: You can expose the ports for services to an external load balancer

- Rolling updates: At rollout time you can apply service updates to nodes incrementally.

- …
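Desired state reconciliation boils down to a control loop: compare what you asked for with what is running, then act on the difference. Here is a minimal shell sketch of one iteration, assuming a service can be summarized by two numbers (desired and running replicas). The `reconcile` function is hypothetical and only illustrates the idea; it is not how SwarmKit is implemented.

```shell
# reconcile DESIRED ACTUAL
# Prints the action a (hypothetical) controller would take to converge.
reconcile() {
  local desired=$1 actual=$2
  if [ "$actual" -lt "$desired" ]; then
    # Not enough tasks running: create the missing ones
    echo "start $(( desired - actual ))"
  elif [ "$actual" -gt "$desired" ]; then
    # Too many tasks running: shut down the extra ones
    echo "stop $(( actual - desired ))"
  else
    # Actual state already matches the desired state
    echo "noop"
  fi
}

reconcile 5 3
reconcile 2 4
reconcile 3 3
```

A real orchestrator runs this kind of comparison continuously, which is what gives you self-healing: a crashed container simply lowers the actual count.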

- Cluster: at least one node

- Nodes: a docker engine instance

- managers: maintain the cluster state

one of them is elected as the leader

- workers: receive and execute the tasks that managers assign them


- Services: specified by its desired state, will create tasks

- desired state

- replicas, global, …

- Tasks:

- attached to a worker

- created from a service

- corresponds to a specific container

- immutable, doesn’t move, doesn’t update

- Stack (client-side): group of services (something like docker-compose.yml)

- Small cluster

- 3 managers

- 5 workers

- 1 cluster visualizer

- Monitoring tools (elk, …)

- Lots of not-so-useful apps 👼

- and a few useful ones 👼

- 🗹 Creating a cluster 🏋

- ☐ Initializing the swarm cluster

- ☐ Checking that it works

- ☐ Creating services

- ☐ Rolling upgrade

- ☐ Update the cluster

- ☐ Health monitoring

- ☐ put your game here…

Thank You 🐸

The demo, at home 🏡

This demo cluster is set up using docker-machine because it’s easy and straightforward.

- Choose the provider you want (digitalocean for the demo)

# For DigitalOcean, let's export the token to ease the later commands
export DO_TOKEN=7b54b35…

- We’ll first create the machines (repeat this for the number of machines you want)

docker-machine create --driver=digitalocean \
  --digitalocean-access-token=$DO_TOKEN \
  --digitalocean-region=ams2 --digitalocean-image=debian-8-x64 \
  --engine-opt "experimental" manager1
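Repeating that command by hand for 3 managers and 5 workers gets tedious, so a loop helps. Below is a hedged sketch: the hypothetical helpers `create_cmd` and `machine_create_cmds` only print the docker-machine create commands (a dry run) so they can be reviewed first. The node names beyond manager1 are assumptions that follow the same naming scheme.

```shell
# Print (do not run) the create command for one node.
# Flags mirror the docker-machine create command shown above;
# $DO_TOKEN is printed literally on purpose, so the token never appears in the output.
create_cmd() {
  printf '%s\n' "docker-machine create --driver=digitalocean --digitalocean-access-token=\$DO_TOKEN --digitalocean-region=ams2 --digitalocean-image=debian-8-x64 --engine-opt experimental $1"
}

# machine_create_cmds MANAGERS WORKERS
# Prints one command per node: manager1..managerN, then worker1..workerM.
machine_create_cmds() {
  local managers=$1 workers=$2 i
  i=1
  while [ "$i" -le "$managers" ]; do
    create_cmd "manager$i"
    i=$(( i + 1 ))
  done
  i=1
  while [ "$i" -le "$workers" ]; do
    create_cmd "worker$i"
    i=$(( i + 1 ))
  done
}

machine_create_cmds 3 5
```

Once the printed commands look right, piping the output to `sh` would actually create the machines.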

- Then let’s init the swarm on a manager (manager1)

docker-machine ssh manager1 "docker swarm init"

- Let’s get the tokens (manager and worker)

manager_token=$(docker-machine ssh manager1 "docker swarm join-token manager -q")
worker_token=$(docker-machine ssh manager1 "docker swarm join-token worker -q")

- And make the other nodes join the swarm

docker-machine ssh manager2 "docker swarm join --token ${manager_token} \
  --listen-addr $(docker-machine ip manager2) \
  --advertise-addr $(docker-machine ip manager2) \
  $(docker-machine ip manager1)"

When we do docker swarm init:

- a keypair is created for the root CA of our Swarm

- a keypair is created for the first node

- a certificate is issued for this node

- the join tokens are created

There is one token to join as a worker, and another to join as a manager.

The join tokens have two parts:

- a secret key (preventing unauthorized nodes from joining)

- a fingerprint of the root CA certificate (preventing MITM attacks)
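To make the two parts concrete, here is a small sketch that splits a token into its fields. It assumes the `SWMTKN-1-<fingerprint>-<secret>` layout that join tokens usually show; that layout, the hypothetical `token_part` helper and the made-up token below are illustrative assumptions, not a documented parsing API.

```shell
# token_part TOKEN fingerprint|secret
# Splits a join token on "-" (assumed layout: SWMTKN-<version>-<fingerprint>-<secret>).
token_part() {
  local token=$1 part=$2 prefix version fingerprint secret
  IFS=- read -r prefix version fingerprint secret <<EOF
$token
EOF
  case $part in
    fingerprint) echo "$fingerprint" ;;  # identifies the root CA certificate
    secret)      echo "$secret" ;;       # proves the node is allowed to join
    *)           return 1 ;;
  esac
}

# Made-up placeholder, not a real token
fake_token="SWMTKN-1-cafingerprint-joinsecret"
token_part "$fake_token" fingerprint
token_part "$fake_token" secret
```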

If a token is compromised, it can be rotated instantly with:

docker swarm join-token --rotate <worker|manager>

When a node joins the Swarm:

- it is issued its own keypair, signed by the root CA

- if the node is a manager:

- it joins the Raft consensus

- it connects to the current leader

- it accepts connections from worker nodes

- if the node is a worker:

- it connects to one of the managers (leader or follower)

- When running in Swarm mode, each node advertises its address to the others (i.e. it tells them “you can contact me on 10.1.2.3:2377”)

- If the node has only one IP address (other than 127.0.0.1), it is used automatically

- If the node has multiple IP addresses, you must specify which one to use (Docker refuses to pick one randomly)

- You can specify an IP address or an interface name (in the latter case, Docker will read the IP address of the interface and use it)

- You can also specify a port number (otherwise, the default port 2377 will be used)

- If your nodes have only one IP address, it’s safe to let autodetection do the job

(Except if your instances have different private and public addresses, e.g. on EC2, and you are building a Swarm involving nodes inside and outside the private network: then you should advertise the public address.)

- If your nodes have multiple IP addresses, pick an address which is reachable by every other node of the Swarm

- 2N+1 nodes can (and will) tolerate N failures (you can have an even number of managers, but there is no point)

- 1 manager = no failure

- 3 managers = 1 failure

- 5 managers = 2 failures (or 1 failure during 1 maintenance)

- 7 managers and more = now you might be overdoing it a little bit
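The numbers above follow from Raft needing a strict majority of managers to agree. A minimal sketch of that arithmetic (the function names `quorum` and `tolerated_failures` are just illustrative):

```shell
# quorum MANAGERS: smallest strict majority of the managers
quorum() {
  echo $(( $1 / 2 + 1 ))
}

# tolerated_failures MANAGERS: managers that can be lost while a majority remains
tolerated_failures() {
  echo $(( ($1 - 1) / 2 ))
}

for managers in 1 2 3 4 5 7; do
  printf '%s managers: quorum %s, tolerates %s failure(s)\n' \
    "$managers" "$(quorum "$managers")" "$(tolerated_failures "$managers")"
done
```

Running it shows why an even number of managers brings nothing: 4 managers tolerate a single failure, exactly like 3.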

- Create a service featuring an Alpine container pinging Google resolvers:

docker service create alpine ping 8.8.8.8

- Check where the container was created:

docker service ps <serviceID>

- Check the logs

docker-machine ssh nodeX docker logs <containerID>

# experimental
docker service logs <serviceID>

Services can be exposed, with two special properties:

- the public port is available on every node of the Swarm,

- requests coming on the public port are load balanced across all instances.

docker service create --name hello --publish 80 emilevauge/whoami

Services can be updated using the `service update` command (or shortcuts like `service scale`)

docker service update --replicas=10 hello

# Same as

docker service scale hello=10

- If you are fast enough, you will be able to see multiple states:

- assigned (the task has been assigned to a specific node)

- preparing (right now, this mostly means “pulling the image”)

- running

- When a task is terminated (stopped, killed…) it cannot be restarted (A replacement task will be created)

- SwarmKit will upgrade N instances at a time (following the update-parallelism parameter)

- New tasks are created, and their desired state is set to Ready (this pulls the image if necessary, ensures resource availability, creates the container … without starting it)

- If the new tasks fail to get to Ready state, go back to the previous step (SwarmKit will try again and again, until the situation is addressed or desired state is updated)

- When the new tasks are Ready, it sets the old tasks desired state to Shutdown

- When the old tasks are Shutdown, it starts the new tasks

- Then it waits for the update-delay, and continues with the next batch of instances
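The batching part of the steps above can be sketched as follows. This is a simplified illustration, assuming tasks are numbered slots and the parallelism is at least 1; the hypothetical `update_batches` function only shows how N-at-a-time grouping works and does not talk to Docker.

```shell
# update_batches REPLICAS PARALLELISM
# Prints the task slots updated together in each batch (PARALLELISM must be >= 1).
update_batches() {
  local replicas=$1 parallelism=$2 slot=1 batch=""
  while [ "$slot" -le "$replicas" ]; do
    # Append the slot to the current batch, space-separated
    batch="${batch:+$batch }$slot"
    # Flush when the batch is full or when this is the last slot
    if [ $(( slot % parallelism )) -eq 0 ] || [ "$slot" -eq "$replicas" ]; then
      echo "batch: $batch"
      batch=""
    fi
    slot=$(( slot + 1 ))
  done
}

update_batches 5 2
```

Between two printed batches, the real orchestrator would wait for the update-delay before moving on.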

- SwarmKit integrates with overlay networks, without requiring an extra key/value store

- Overlay networks are created the same way as before

docker network create --driver overlay demo-net

docker network ls

docker-machine ssh worker1 docker network ls

- Create multiple services and attach them to the network

docker service create --network demo-net --name whoami emilevauge/whoami

docker service create --network demo-net --name curlito nathanleclaire/curl sh -c \
  "while true; do curl http://whoami/; sleep 2; done"

- By default, overlay networks are using plain VXLAN encapsulation (~Ethernet over UDP, using SwarmKit’s control plane for ARP resolution)

- Encryption can be enabled on a per-network basis (It will use IPsec encryption provided by the kernel, leveraging hardware acceleration)

- This is only for the overlay driver (Other drivers/plugins will use different mechanisms)

- Create networks

docker network create insecure --driver overlay --attachable
docker network create secure --opt encrypted --driver overlay --attachable

- Start a service in one node, and “sniff” network from another

docker service create --name whoami --network secure \
  --network insecure --constraint node.hostname==node2 emilevauge/whoami

docker-machine ssh node2 docker run \
  --net host jpetazzo/netshoot ngrep -tpd eth0 HTTP

- From node2, run the following

docker run --rm --net insecure nicolaka/netshoot curl whoami # should display an HTTP frame
docker run --rm --net secure nicolaka/netshoot curl whoami # should only display #

- We need to run a registry:2 container (make sure you specify tag :2 to run the new version!)

- It will store images and layers to the local filesystem (but you can add a config file to use S3, Swift, etc.)

- Docker requires TLS when communicating with the registry

- except for registries on localhost

- or with the Engine flag --insecure-registry

- Our strategy: publish the registry container on port 5000, so that it’s available through localhost:5000 on each node

- Create the registry service, publishing its port on the whole cluster

docker service create --name registry --publish 5000:5000 registry:2

- Make sure it works on several nodes

docker-machine ssh manager1 curl localhost:5000/v2/_catalog
docker-machine ssh manager2 curl localhost:5000/v2/_catalog
# […]

- Make sure we have the busybox image, retag it and push it:

docker pull busybox
docker tag busybox localhost:5000/busybox
docker push localhost:5000/busybox

- Docker has a “secret safe” (secure key→value store)

- You can create as many secrets as you like

- You can associate secrets to services

- Secrets are exposed as plain text files, but kept in memory only (using tmpfs)

- Secrets are immutable (at least in Engine 1.13)

- Secrets have a max size of 500 KB

- Can be (ab)used to hold whole configuration files if needed

- If you intend to rotate secret foo, call it foo.N instead, and map it to foo

(N can be a serial, a timestamp…)

docker service create --secret source=foo.N,target=foo ...

- You can update (remove+add) a secret in a single command:

docker service update ... --secret-rm foo.M --secret-add source=foo.N,target=foo
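The foo.N naming convention lends itself to a tiny helper. Here is a sketch, assuming serial numbers as versions: the hypothetical `rotate_secret_cmd` function only prints the update command, and the service name `web` is a placeholder.

```shell
# rotate_secret_cmd SERVICE NAME OLD NEW
# Prints the command that swaps secret NAME.OLD for NAME.NEW,
# keeping the same target name inside the containers.
rotate_secret_cmd() {
  local service=$1 name=$2 old=$3 new=$4
  echo "docker service update --secret-rm $name.$old --secret-add source=$name.$new,target=$name $service"
}

rotate_secret_cmd web foo 1 2
```

Because the target stays `foo`, the application keeps reading the same file name across rotations.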

- Global volumes exist in a single namespace

- A global volume can be mounted on any node (bar some restrictions specific to the volume driver in use; e.g. using an EBS-backed volume on a GCE/EC2 mixed cluster)

- Attaching a global volume to a container allows the container to be started anywhere (and retain its data wherever you start it!)

- Global volumes require extra plugins (Flocker, Portworx…)

- Docker doesn’t come with a default global volume driver at this point

- Therefore, we will fall back on local volumes (and use constraint for our services)

- Build on our local node (node1)

- Tag images with a version number (timestamp; git hash; semantic…)

- Upload them to a registry

- Create services using the images

- We use docker-compose to develop, test and run our application

- Let’s build, tag and push our images

DOCKER_REGISTRY=localhost:5000
TAG=v0.1
for SERVICE in hasher rng webui worker; do
  docker-compose build $SERVICE
  docker tag dockercoins_$SERVICE $DOCKER_REGISTRY/dockercoins_$SERVICE:$TAG
  docker push $DOCKER_REGISTRY/dockercoins_$SERVICE:$TAG
done

- We’ll create a network for our application

docker network create --driver overlay dockercoins

- Let’s create the services

DOCKER_REGISTRY=localhost:5000
TAG=v0.1
for SERVICE in hasher rng webui worker; do
  docker service create --network dockercoins --name $SERVICE \
    $DOCKER_REGISTRY/dockercoins_$SERVICE:$TAG
done

- And validate it works by exposing the web ui

docker service update webui --publish-add 8000:80

- We can now scale part of our application, update it, …

docker service update --replicas 10 worker

- To update we will update the image, push it and then call service update. But first, let’s update/define an upgrade policy.

# Update tasks 2 by 2, separated by 5s
docker service update --update-parallelism 2 --update-delay 5s worker

# update
docker service update --image $DOCKER_REGISTRY/dockercoins_worker:v0.2 worker

If something goes wrong, you can roll back

docker service update --image $DOCKER_REGISTRY/dockercoins_worker:v0.1 worker

# Using docker >= 1.13
docker service update --rollback worker

Building and pushing stack services

- We are going to use the build + image trick that we showed earlier:

docker-compose -f my_stack_file.yml build
docker-compose -f my_stack_file.yml push
docker stack deploy my_stack --compose-file my_stack_file.yml

- To update, update your compose file and re-deploy

# Do some changes, update the compose file
docker-compose -f my_stack_file.yml build
docker-compose -f my_stack_file.yml push
docker stack deploy my_stack --compose-file my_stack_file.yml