Reinforcement Learning for Targeted 3D Object Top-Down Grasping with Emphasis on Selective Shape Manipulation

The main goal of this project is to explore reinforcement learning algorithms and apply them to picking up objects of a specific shape. We used a few examples from the pybullet library as reference points. The setup is based on one of them, which combines a KUKA robot arm with a sample OpenAI Gym environment. The reward function gives a reward when the user-specified object is picked up. If a different object is grasped instead, it applies a penalty. Specific shapes, such as cubes and cuboids, are added to the scene for the robot arm to attempt to pick up.
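The following minimal Python sketch makes the target-specific reward scheme concrete. It is not the project's implementation. The lift threshold of 0.2 m, the reward values of +1 and -1, the zero reward when nothing is lifted, and the helper names `lifted_shape` and `grasp_reward` are all hypothetical assumptions.

```python
from typing import List, Optional, Tuple

# Hypothetical threshold (metres): an object counts as picked up if its
# height after the grasp attempt exceeds this value.
LIFT_THRESHOLD = 0.2


def lifted_shape(objects: List[Tuple[str, float]],
                 threshold: float = LIFT_THRESHOLD) -> Optional[str]:
    """Return the shape name of the highest object above the threshold, if any.

    `objects` holds (shape_name, height_after_grasp) pairs, e.g. ("cube_small.urdf", 0.25).
    """
    lifted = [(height, shape) for shape, height in objects if height > threshold]
    if not lifted:
        return None
    # max() compares heights first, so this picks the highest lifted object.
    return max(lifted)[1]


def grasp_reward(objects: List[Tuple[str, float]], target_shape: str,
                 success_reward: float = 1.0, wrong_penalty: float = -1.0) -> float:
    """Reward lifting the target shape, penalise lifting any other shape,
    and return 0 when nothing was lifted (all values are assumptions)."""
    shape = lifted_shape(objects)
    if shape is None:
        return 0.0
    return success_reward if shape == target_shape else wrong_penalty
```

Returning a penalty instead of zero for a wrong grasp is what pushes the agent to discriminate between shapes. With zero, grasping any object would be no worse than grasping nothing.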

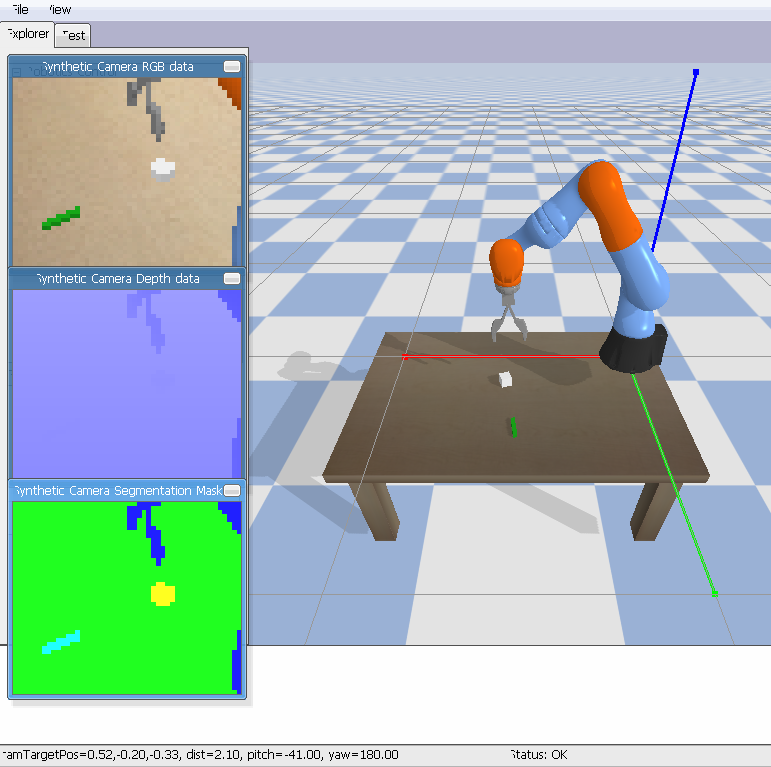

A sample Demo(Demo.mp4) is available in the root dir, but the simulation environment looks like -

The `environment.yml` file, exported from our conda environment, lists all the requirements needed to run this project. You can recreate the environment with `conda env create -f environment.yml`.

To train the DQN or DDPG model, modify `config.json`. For example, to train the DQN model on a cube for 25,000 timesteps, use the following configuration:

```json
"specific_object": "cube_small.urdf",
"algorithm": "DQN",
"timesteps": 25000
```
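Below is a minimal sketch of how a training script might parse and validate these keys. It assumes they sit at the top level of `config.json`. The `TrainConfig` class and `load_train_config` function are hypothetical and are not taken from the project's `train.py`. The `discrete_actions` flag reflects a general property of the two algorithms. DQN selects among a finite set of actions, whereas DDPG outputs continuous actions, so each needs a matching action space.

```python
import json
from dataclasses import dataclass

# Algorithm name -> whether it needs a discrete action space.
# DQN chooses among a finite set of actions; DDPG outputs continuous actions.
ACTION_SPACE_IS_DISCRETE = {"DQN": True, "DDPG": False}


@dataclass
class TrainConfig:
    specific_object: str  # URDF file of the target shape, e.g. "cube_small.urdf"
    algorithm: str        # "DQN" or "DDPG"
    timesteps: int        # total number of training timesteps

    @property
    def discrete_actions(self) -> bool:
        return ACTION_SPACE_IS_DISCRETE[self.algorithm]


def load_train_config(text: str) -> TrainConfig:
    """Parse and validate the training keys from the text of a config.json file."""
    raw = json.loads(text)
    algorithm = str(raw["algorithm"]).upper()
    if algorithm not in ACTION_SPACE_IS_DISCRETE:
        raise ValueError(f"unsupported algorithm: {algorithm!r}")
    timesteps = int(raw["timesteps"])
    if timesteps <= 0:
        raise ValueError("timesteps must be positive")
    return TrainConfig(str(raw["specific_object"]), algorithm, timesteps)
```

For example, `load_train_config(open("config.json").read())` would return the parsed settings. It raises `ValueError` for an unsupported algorithm such as `"PPO"`.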

Then start training by running:

```bash
python train.py
```

To test a model on, say, 100 episodes, make the following changes in `config.json`:

```json
"model_dir": "../models/DQN_75k_cube/best_model.zip",
"test_episodes": 100
```

Then run the test with:

```bash
python test.py
```

This prints the success ratio over the 100 grasp attempts. Training most of the models takes more than 10 hours, so we have included several pretrained models for quick testing: DDPG (10k and 25k timesteps) and DQN (10k, 25k, 50k and 75k timesteps).
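The success ratio reported by `test.py` is the fraction of test episodes in which the target object is grasped. The sketch below shows one way to compute it. It assumes the classic Gym step API, where `env.step` returns `(obs, reward, done, info)`, and it treats a positive final reward as a success, matching the reward scheme described above. The `evaluate` function and its `policy` argument are hypothetical.

```python
from typing import Any, Callable


def evaluate(env: Any, policy: Callable[[Any], Any], test_episodes: int) -> float:
    """Run `test_episodes` grasp attempts and return the fraction that succeed.

    Assumes the classic Gym API: reset() -> obs, step(a) -> (obs, reward, done, info),
    and that a positive final reward means the target object was picked up.
    """
    if test_episodes <= 0:
        raise ValueError("test_episodes must be positive")
    successes = 0
    for _ in range(test_episodes):
        obs = env.reset()
        done, reward = False, 0.0
        # Step until the episode ends; only the final reward decides success.
        while not done:
            obs, reward, done, _info = env.step(policy(obs))
        if reward > 0:
            successes += 1
    return successes / test_episodes
```

If the project uses a Stable-Baselines3 model (an assumption; it may use a different library), `policy` could be `lambda obs: model.predict(obs, deterministic=True)[0]`.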

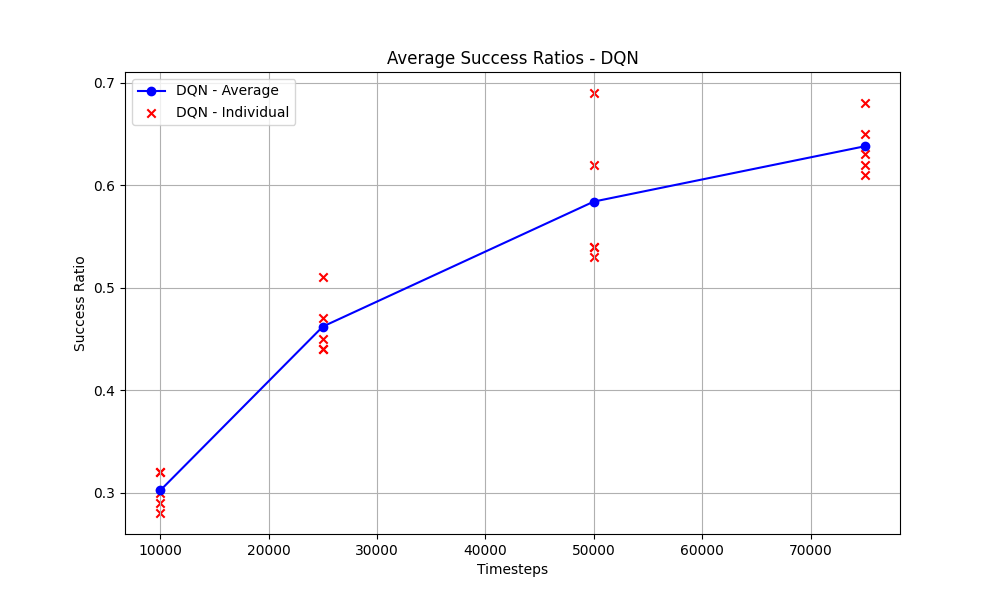

The script plot.py plots the average success ratios of the DQN model from the metrics.csv which is generated by test.py.

For the RL algorithm DQN, the plot for avg success ratios vs the timestamps (10k, 25k, 50k and 75k) looks like -
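The averaging step behind such a plot can be sketched with Python's standard `csv` module. The column names `timesteps` and `success_ratio` are assumptions about `metrics.csv`, not its documented layout. `average_success` is a hypothetical helper, not part of `plot.py`.

```python
import csv
import io
from collections import defaultdict
from typing import Dict


def average_success(csv_text: str) -> Dict[int, float]:
    """Mean success ratio per training-timestep count, sorted by timesteps.

    Assumes hypothetical columns `timesteps` and `success_ratio`.
    """
    totals: Dict[int, float] = defaultdict(float)
    counts: Dict[int, int] = defaultdict(int)
    for row in csv.DictReader(io.StringIO(csv_text)):
        steps = int(row["timesteps"])
        totals[steps] += float(row["success_ratio"])
        counts[steps] += 1
    # Dicts preserve insertion order, so iterating sorted keys yields ascending timesteps.
    return {steps: totals[steps] / counts[steps] for steps in sorted(totals)}
```

The result maps each timestep count to its mean success ratio in ascending order. It could be passed to a plotting call such as matplotlib's `plt.plot(list(avg), list(avg.values()))`.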

Feel free to contribute through a pull request or to report any issues.