TCCT-Net: Two-Stream Network Architecture for Fast and Efficient Engagement Estimation via Behavioral Feature Signals

This is the official repository for the paper "TCCT-Net: Two-Stream Network Architecture for Fast and Efficient Engagement Estimation via Behavioral Feature Signals," authored by Alexander Vedernikov, Puneet Kumar, Haoyu Chen, Tapio Seppänen, and Xiaobai Li. The paper has been accepted for the CVPR 2024 workshop (ABAW).

- Preprint

- About the Paper

- Requirements

- Pre-processing

- Dataset Files

- Hardware Recommendations

- Model Weights

- Instructions

- Usage

- Citing This Work

The preprint of our paper is available on arXiv (arXiv:2404.09474).

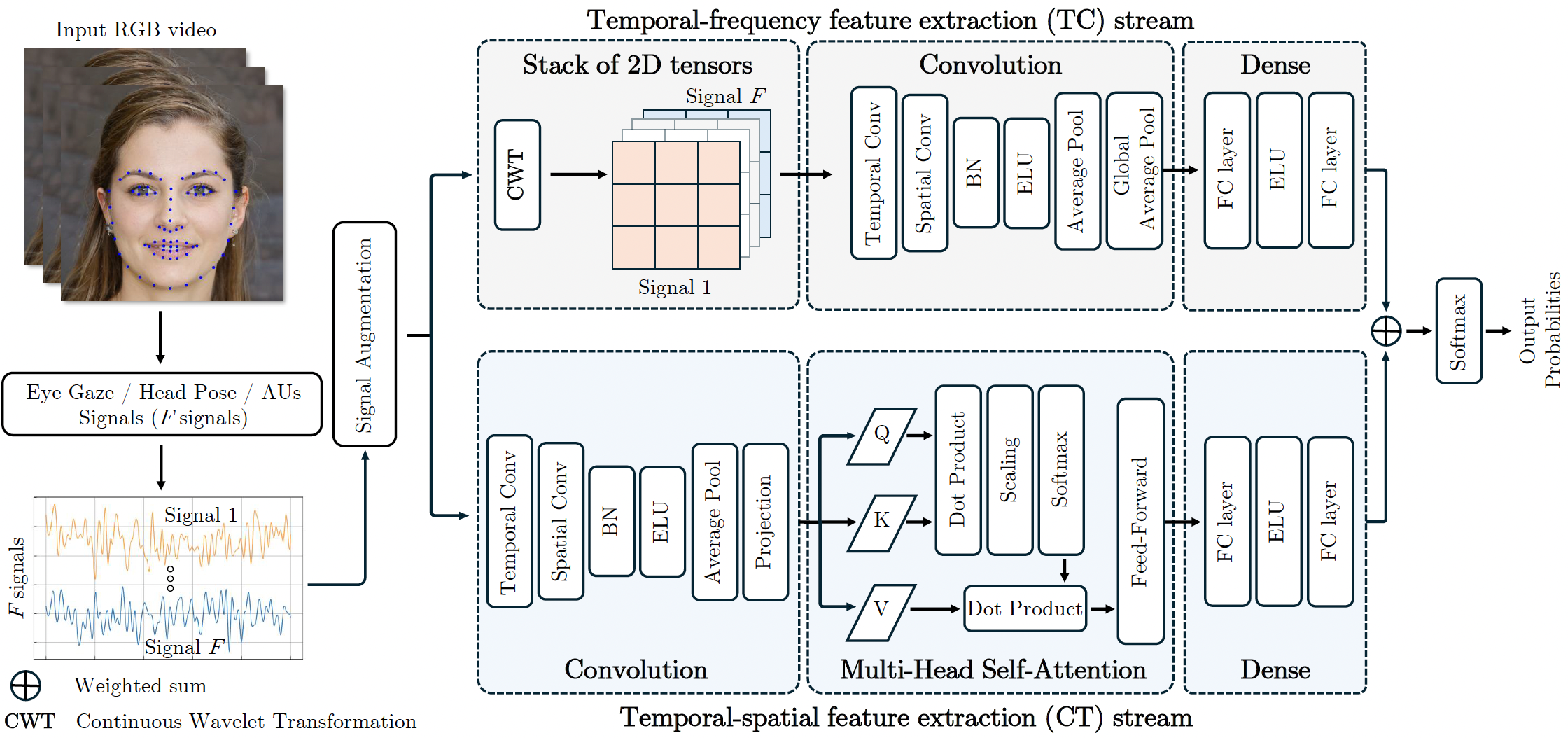

Engagement analysis finds various applications in healthcare, education, advertising, and services. Deep Neural Networks used for this analysis have complex architectures and need large amounts of input data, computational power, and inference time. These constraints make it challenging to embed such systems into devices for real-time use. To address these limitations, we present a novel two-stream feature fusion "Tensor-Convolution and Convolution-Transformer Network" (TCCT-Net) architecture. To better learn the meaningful patterns in the temporal-spatial domain, we design a "CT" stream that integrates a hybrid convolutional-transformer. In parallel, to efficiently extract rich patterns from the temporal-frequency domain and boost processing speed, we introduce a "TC" stream that uses Continuous Wavelet Transform (CWT) to represent information in a 2D tensor form. Evaluated on the EngageNet dataset, the proposed method outperforms existing baselines while using only two behavioral features (head pose rotations) compared to the 98 used in baseline models. Furthermore, comparative analysis shows that TCCT-Net's architecture offers an order-of-magnitude improvement in inference speed compared to state-of-the-art image-based Recurrent Neural Network (RNN) methods.
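To make the "2D tensor form" of the TC stream concrete, here is a minimal sketch of turning a 1D behavioral signal into a time-frequency array with PyWavelets. The function name `signal_to_scalogram`, the Morlet wavelet, and the 32 scales are illustrative assumptions, not the settings used in the paper.

```python
import numpy as np
import pywt


def signal_to_scalogram(signal, num_scales=32, wavelet="morl"):
    """Apply a Continuous Wavelet Transform to a 1D signal.

    The wavelet and the number of scales are assumptions chosen for
    illustration; consult the paper for the actual configuration.
    """
    scales = np.arange(1, num_scales + 1)
    coefficients, _frequencies = pywt.cwt(signal, scales, wavelet)
    # One row per scale, one column per time step.
    return coefficients


# Example: a synthetic 280-element signal standing in for one head pose rotation.
t = np.linspace(0.0, 1.0, 280)
scalogram = signal_to_scalogram(np.sin(2 * np.pi * 5 * t))
print(scalogram.shape)
```

The result has one row per scale and one column per frame, which is the kind of 2D input a convolutional stream can consume.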

To install the necessary dependencies, please ensure you have the following packages:

- einops==0.8.0
- matplotlib==3.6.2
- numpy==1.23.4
- pandas==1.5.2
- PyWavelets==1.4.1
- torch==1.13.0
- tqdm==4.64.1

You can install these dependencies using the following command:

    pip install -r requirements.txt

To begin, you will need access to the EngageNet dataset.

Initially, videos in their original RGB format are preprocessed using the OpenFace library to extract behavioral features such as Action Units (AUs), eye gaze, and head pose. After preprocessing, the validation videos are stored in the test_files folder, while the training videos are stored in the train_files folder. Each RGB video is converted into a CSV file with F columns, where each column represents a specific behavioral feature, and rows correspond to frames.
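As an illustration of working with such a per-video CSV, the sketch below reads a file and keeps only selected feature columns. The column names `pose_Rx` and `pose_Ry` follow the usual OpenFace naming but are an assumption here, as is the helper name `read_feature_columns`; check the header of your own files and the paper for the exact features used.

```python
import csv
import io

# Assumed OpenFace-style names for two head pose rotations (not confirmed by this README).
HEAD_POSE_COLUMNS = ("pose_Rx", "pose_Ry")


def read_feature_columns(handle, columns=HEAD_POSE_COLUMNS):
    """Return one list of floats per frame, restricted to the requested columns."""
    reader = csv.reader(handle)
    # Header names may carry leading spaces, so strip them before matching.
    header = [name.strip() for name in next(reader)]
    indices = [header.index(name) for name in columns]  # ValueError if a column is missing
    return [[float(row[i]) for i in indices] for row in reader if row]


# Example with an in-memory file; a real call would pass open(path, newline="").
sample = io.StringIO(
    "frame, pose_Rx, pose_Ry, AU01_r\n"
    "1, 0.10, -0.20, 0.0\n"
    "2, 0.15, -0.25, 1.2\n"
)
print(read_feature_columns(sample))
```

The sketch uses only the standard library to stay self-contained; the same selection can be done with pandas, which is already listed in the requirements.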

The preprocessing steps result in 6852 training samples and 1071 validation samples, each with a length of 280 elements. For evaluation, all 1071 validation set videos were used, and any shorter signals were repeated to reach 280 elements. For detailed information, please refer to section 4.2 of the paper.
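The repetition of shorter signals can be sketched as follows. This is one plausible reading of "repeated to reach 280 elements" (cycle the signal from its start, then cut at the target length); the helper name `repeat_to_length` is hypothetical, and truncating longer inputs is an assumption that the text above does not specify.

```python
import numpy as np

TARGET_LENGTH = 280  # sample length stated above


def repeat_to_length(signal, target_length=TARGET_LENGTH):
    """Cycle a (frames,) or (frames, features) array along axis 0 up to target_length."""
    signal = np.asarray(signal)
    if len(signal) == 0:
        raise ValueError("cannot repeat an empty signal")
    repeats = -(-target_length // len(signal))  # ceiling division
    tiled = np.concatenate([signal] * repeats, axis=0)
    # Inputs longer than target_length are simply cut here (assumption).
    return tiled[:target_length]


# Example: 100 frames with 2 features are extended to 280 frames.
short = np.random.rand(100, 2)
print(repeat_to_length(short).shape)
```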

This repository includes the following key folders and files related to the training and validation data:

- test_files: Contains the CSV files produced by preprocessing the validation videos. One CSV file is provided for reference.
- train_files: Contains the CSV files produced by preprocessing the training videos. One CSV file is provided for reference.
- inference_files: Contains the CSV files produced by preprocessing the inference videos. One CSV file is provided for reference.
- labels.csv: Stores labels for both training and validation videos. The provided labels.csv file contains 5 rows for reference.
- labels_inference.csv: Stores labels for inference videos. The provided labels_inference.csv file contains 5 rows for reference.

Please note that the filenames in the mentioned folders and the labels.csv/labels_inference.csv files are deliberately changed. Keep in mind that the filenames should follow the convention from the original EngageNet dataset.
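Because labels are matched to files by name, it can help to check that every CSV has a label before training. The sketch below is one way to do that; the column name `filename` and the helper `find_unlabeled_files` are hypothetical, since the layout of labels.csv is not described here, so adjust them to the actual header.

```python
import csv
from pathlib import Path


def find_unlabeled_files(data_dir, labels_path, name_column="filename"):
    """List CSV files in data_dir that have no matching row in the label file.

    Names are compared without their extension, assuming the label file may
    refer to the original video names (this is an assumption).
    """
    with open(labels_path, newline="") as handle:
        labeled = {Path(row[name_column]).stem for row in csv.DictReader(handle)}
    return sorted(p.name for p in Path(data_dir).glob("*.csv") if p.stem not in labeled)


# Typical call, assuming the folder layout described above:
# missing = find_unlabeled_files("train_files", "labels.csv")
```

An empty list means every file in the folder has a label row under the assumed naming scheme.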

For faster performance, it is recommended to use a CUDA-capable GPU. If no GPU is available, the code will run on the CPU, but it will be slower.

The pre-trained model weights are available in the repository as final_model_weights.pth. These weights achieve an accuracy of 68.91% on the validation set of 1071 samples. You can use them to load the model and run inference with the inference.py file without training from scratch. Please ensure that you have the labels_inference.csv label file and the corresponding CSV files in the inference_files folder. For more detailed information, please read the paper.
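For readers who want to load the weights outside inference.py, a minimal sketch using standard PyTorch calls is shown below. It assumes the .pth file stores a plain state dict and that you construct the model yourself; the helper name `load_weights` is hypothetical, and inference.py remains the reference for how the repository actually does this.

```python
import torch
from torch import nn


def load_weights(model: nn.Module, weights_path: str = "final_model_weights.pth"):
    """Load a saved state dict into model and move it to the best available device."""
    # Fall back to the CPU when no CUDA-capable GPU is present.
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    # Assumption: the file contains a state dict, not a pickled model object.
    state_dict = torch.load(weights_path, map_location=device)
    model.load_state_dict(state_dict)
    model.to(device)
    model.eval()  # switch off dropout and batch-norm updates for inference
    return model, device
```

Passing `map_location` lets weights saved on a GPU machine load on a CPU-only one.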

- Clone the Repository:

      git clone https://github.com/vedernikovphoto/TCCT-Net
      cd TCCT-Net

- Install Dependencies: Ensure you have all required packages installed:

      pip install -r requirements.txt

- Configuration File: Ensure that the config.json file is in the root directory. This file contains all the necessary configurations for training the model.

- Run the Training Script:

      python main.py

- View Results and Metrics: After running the script, the results will be saved to test_results.csv in the root directory. The training process will also generate the following plots for metrics:
  - train_metrics.png: Training loss and accuracy over epochs.
  - test_accuracy.png: Test accuracy over epochs.

To use the pre-trained model for inference, follow these steps:

- Ensure Pre-processed Data is Available: Make sure you have the necessary pre-processed CSV files in the inference_files folder and the corresponding labels_inference.csv.

- Run the Inference Script:

      python inference.py

- View Inference Results: The inference results will be saved in inference_results.csv.
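To summarize inference output as a single number, accuracy can be computed as the fraction of correct predictions. The column layout of inference_results.csv is not documented here, so this sketch takes plain sequences of labels and predictions; the function name `accuracy` is illustrative.

```python
def accuracy(labels, predictions):
    """Return the fraction of positions where prediction equals label."""
    if len(labels) != len(predictions):
        raise ValueError("labels and predictions must have the same length")
    if not labels:
        raise ValueError("cannot compute accuracy of an empty set")
    correct = sum(label == pred for label, pred in zip(labels, predictions))
    return correct / len(labels)


# Example with made-up values: 3 of 4 predictions match.
print(accuracy([0, 1, 2, 3], [0, 1, 2, 0]))  # 0.75
```

Under this definition, the reported 68.91% on 1071 validation samples corresponds to 738 correct predictions (738 / 1071 ≈ 0.6891).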

If you find our work useful or relevant to your research, please consider citing our paper. Below is the BibTeX entry for the paper:

    @misc{vedernikov2024tcctnet,
      title={TCCT-Net: Two-Stream Network Architecture for Fast and Efficient Engagement Estimation via Behavioral Feature Signals},
      author={Alexander Vedernikov and Puneet Kumar and Haoyu Chen and Tapio Seppanen and Xiaobai Li},
      year={2024},
      eprint={2404.09474},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
    }