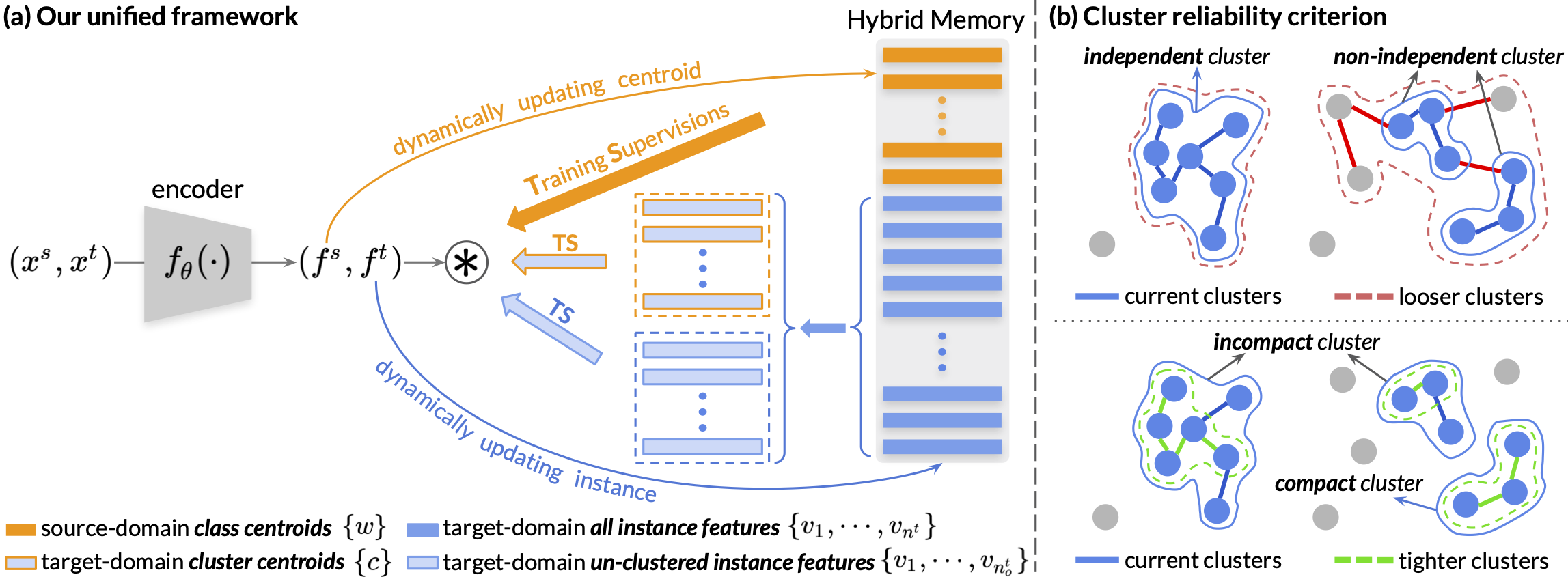

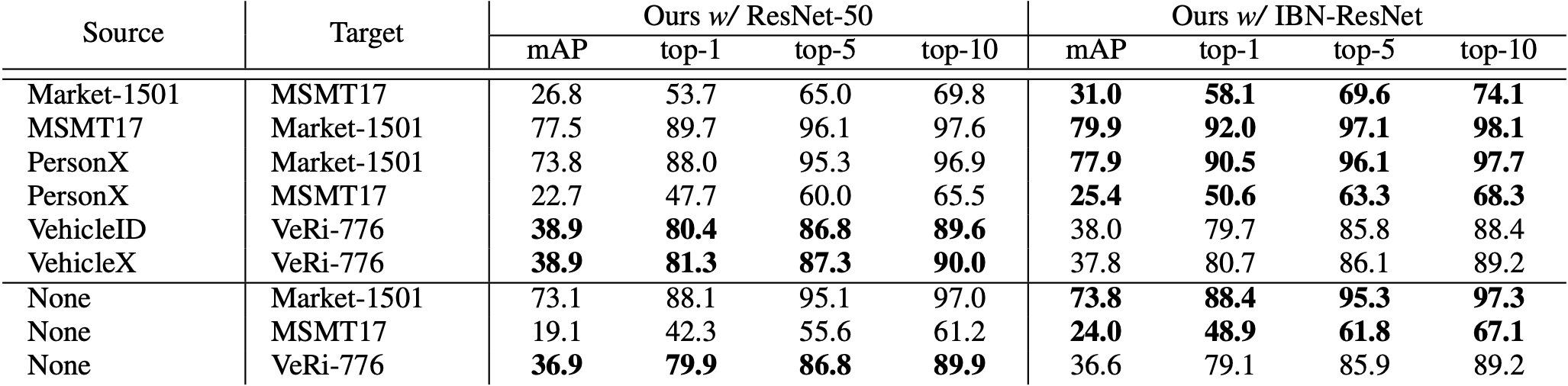

This is the official repository for Self-paced Contrastive Learning with Hybrid Memory for Domain Adaptive Object Re-ID, which was accepted at NeurIPS 2020. SpCL achieves state-of-the-art performance on both unsupervised domain adaptation tasks and unsupervised learning tasks for object re-ID, including person re-ID and vehicle re-ID.

[2020-10-13] All trained models for the camera-ready version have been updated; see Trained Models for details.

[2020-09-25] SpCL has been accepted by NeurIPS on the condition that experiments on the DukeMTMC-reID dataset be removed, since the dataset has been taken down and should no longer be used.

[2020-07-01] We refactored the code to support distributed training, stronger performance, and more features. Please see OpenUnReID.

git clone https://github.com/yxgeee/SpCL.git

cd SpCL

python setup.py develop

cd examples && mkdir data

Download the person datasets Market-1501, MSMT17, PersonX, and the vehicle datasets VehicleID, VeRi-776, VehicleX. Then unzip them so that the directory looks like this:

SpCL/examples/data

├── market1501

│ └── Market-1501-v15.09.15

├── msmt17

│ └── MSMT17_V1

├── personx

│ └── PersonX

├── vehicleid

│ └── VehicleID -> VehicleID_V1.0

├── vehiclex

│ └── AIC20_ReID_Simulation -> AIC20_track2/AIC20_ReID_Simulation

└── veri

    └── VeRi -> VeRi_with_plate
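Before training, it can help to confirm that the datasets were unzipped into the layout shown above. The following is a minimal sketch, not part of the repository: the helper name `missing_datasets` is hypothetical, and the expected sub-directory names are copied from the tree above (symlinked directories such as `VehicleID -> VehicleID_V1.0` are followed).

```python
from pathlib import Path

# Expected sub-directory for each dataset folder, copied from the tree above.
EXPECTED_DATASET_DIRS = {
    "market1501": "Market-1501-v15.09.15",
    "msmt17": "MSMT17_V1",
    "personx": "PersonX",
    "vehicleid": "VehicleID",
    "vehiclex": "AIC20_ReID_Simulation",
    "veri": "VeRi",
}


def missing_datasets(data_root):
    """Return the expected dataset directories that are absent under data_root."""
    root = Path(data_root)
    return [
        f"{name}/{sub}"
        for name, sub in EXPECTED_DATASET_DIRS.items()
        # is_dir() follows symlinks, so linked dataset folders count as present.
        if not (root / name / sub).is_dir()
    ]


if __name__ == "__main__":
    # Hypothetical usage: run from the SpCL repository root.
    missing = missing_datasets("examples/data")
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("All dataset directories found.")
```

Only the datasets you actually train or test on need to be present, so a non-empty result is not necessarily an error.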

When training with the IBN-ResNet backbone, you need to download the ImageNet-pretrained model from this link and save it under logs/pretrained/.

mkdir logs && cd logs

mkdir pretrained

The file tree should be:

SpCL/logs

└── pretrained

    └── resnet50_ibn_a.pth.tar

ImageNet-pretrained models for ResNet-50 are downloaded automatically by the Python script.

We utilize 4 GTX-1080TI GPUs for training. Note that

- the training for SpCL is end-to-end, which means that no source-domain pre-training is required;
- use `--iters 400` (default) for Market-1501 and PersonX datasets, and `--iters 800` for MSMT17, VeRi-776, VehicleID and VehicleX datasets;
- use `--width 128 --height 256` (default) for person datasets, and `--height 224 --width 224` for vehicle datasets;
- use `-a resnet50` (default) for the backbone of ResNet-50, and `-a resnet_ibn50a` for the backbone of IBN-ResNet.
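The per-dataset settings in the notes above can be collected in a small lookup. This is an illustrative sketch only: the function `training_flags` is hypothetical and not part of the repository, the dataset key `vehiclex` is assumed from the data directory name, and the sketch assumes the iteration count is chosen by the dataset being trained on (the target dataset in the domain adaptive setting), which is consistent with the examples in this README.

```python
PERSON_DATASETS = {"market1501", "msmt17", "personx"}
VEHICLE_DATASETS = {"vehicleid", "veri", "vehiclex"}  # "vehiclex" key is an assumption
DATASETS_WITH_400_ITERS = {"market1501", "personx"}


def training_flags(target_dataset, arch="resnet50"):
    """Return the command-line flags suggested by the notes for one dataset."""
    if target_dataset not in PERSON_DATASETS | VEHICLE_DATASETS:
        raise ValueError(f"unknown dataset: {target_dataset}")
    # 400 iterations for Market-1501 and PersonX, 800 for all other datasets.
    iters = 400 if target_dataset in DATASETS_WITH_400_ITERS else 800
    # Person images are 256x128 (height x width); vehicle images are 224x224.
    if target_dataset in PERSON_DATASETS:
        height, width = 256, 128
    else:
        height, width = 224, 224
    return [
        "--iters", str(iters),
        "--height", str(height),
        "--width", str(width),
        "-a", arch,
    ]


if __name__ == "__main__":
    print(" ".join(training_flags("veri")))
    print(" ".join(training_flags("market1501", arch="resnet_ibn50a")))
```

The returned flags spell out the defaults explicitly, so they can be appended to either training script without relying on the built-in default values.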

To train the domain adaptive model(s) in the paper, run this command:

CUDA_VISIBLE_DEVICES=0,1,2,3 \

python examples/spcl_train_uda.py \

-ds $SOURCE_DATASET -dt $TARGET_DATASET --logs-dir $PATH_OF_LOGS

Some examples:

### PersonX -> Market-1501 ###

# all default settings are fine

CUDA_VISIBLE_DEVICES=0,1,2,3 \

python examples/spcl_train_uda.py \

-ds personx -dt market1501 --logs-dir logs/spcl_uda/personx2market_resnet50

### Market-1501 -> MSMT17 ###

# use all default settings except for iters=800

CUDA_VISIBLE_DEVICES=0,1,2,3 \

python examples/spcl_train_uda.py --iters 800 \

-ds market1501 -dt msmt17 --logs-dir logs/spcl_uda/market2msmt_resnet50

### VehicleID -> VeRi-776 ###

# use all default settings except for iters=800, height=224 and width=224

CUDA_VISIBLE_DEVICES=0,1,2,3 \

python examples/spcl_train_uda.py --iters 800 --height 224 --width 224 \

-ds vehicleid -dt veri --logs-dir logs/spcl_uda/vehicleid2veri_resnet50

To train the unsupervised learning model(s) in the paper, run this command:

CUDA_VISIBLE_DEVICES=0,1,2,3 \

python examples/spcl_train_usl.py \

-d $DATASET --logs-dir $PATH_OF_LOGS

Some examples:

### Market-1501 ###

# all default settings are fine

CUDA_VISIBLE_DEVICES=0,1,2,3 \

python examples/spcl_train_usl.py \

-d market1501 --logs-dir logs/spcl_usl/market_resnet50

### MSMT17 ###

# use all default settings except for iters=800

CUDA_VISIBLE_DEVICES=0,1,2,3 \

python examples/spcl_train_usl.py --iters 800 \

-d msmt17 --logs-dir logs/spcl_usl/msmt_resnet50

### VeRi-776 ###

# use all default settings except for iters=800, height=224 and width=224

CUDA_VISIBLE_DEVICES=0,1,2,3 \

python examples/spcl_train_usl.py --iters 800 --height 224 --width 224 \

-d veri --logs-dir logs/spcl_usl/veri_resnet50

We utilize 1 GTX-1080TI GPU for testing. Note that

- use `--width 128 --height 256` (default) for person datasets, and `--height 224 --width 224` for vehicle datasets;
- use `--dsbn` for domain adaptive models, and add `--test-source` if you want to test on the source domain;
- use `-a resnet50` (default) for the backbone of ResNet-50, and `-a resnet_ibn50a` for the backbone of IBN-ResNet.
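The testing notes above can be turned into a command builder. This is a hedged sketch rather than repository code: `build_test_command` is a hypothetical helper, the dataset key `vehiclex` is assumed from the data directory name, and rejecting `--test-source` without `--dsbn` is an assumption based on the note describing it only for domain adaptive models. It uses only the flags listed in this README.

```python
import shlex

VEHICLE_DATASETS = {"vehicleid", "veri", "vehiclex"}  # "vehiclex" key is an assumption


def build_test_command(dataset, checkpoint, domain_adaptive=False,
                       test_source=False, arch="resnet50"):
    """Assemble the argument list for examples/test.py from the notes above."""
    if test_source and not domain_adaptive:
        # Assumption: --test-source is only described for domain adaptive models.
        raise ValueError("--test-source requires a domain adaptive (--dsbn) model")
    cmd = ["python", "examples/test.py"]
    if domain_adaptive:
        cmd.append("--dsbn")
    if test_source:
        cmd.append("--test-source")
    if dataset in VEHICLE_DATASETS:
        # Vehicle datasets use 224x224 inputs; person datasets keep the defaults.
        cmd += ["--height", "224", "--width", "224"]
    if arch != "resnet50":
        cmd += ["-a", arch]
    cmd += ["-d", dataset, "--resume", checkpoint]
    return cmd


if __name__ == "__main__":
    command = build_test_command(
        "msmt17",
        "logs/spcl_uda/market2msmt_resnet50/model_best.pth.tar",
        domain_adaptive=True,
    )
    # shlex.join (Python 3.8+) quotes arguments safely for a POSIX shell.
    print(shlex.join(command))
```

Prefix the printed command with `CUDA_VISIBLE_DEVICES=0` to select the GPU, as in the commands in this README.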

To evaluate the domain adaptive model on the target-domain dataset, run:

CUDA_VISIBLE_DEVICES=0 \

python examples/test.py --dsbn \

-d $DATASET --resume $PATH_OF_MODEL

To evaluate the domain adaptive model on the source-domain dataset, run:

CUDA_VISIBLE_DEVICES=0 \

python examples/test.py --dsbn --test-source \

-d $DATASET --resume $PATH_OF_MODEL

Some examples:

### Market-1501 -> MSMT17 ###

# test on the target domain

CUDA_VISIBLE_DEVICES=0 \

python examples/test.py --dsbn \

-d msmt17 --resume logs/spcl_uda/market2msmt_resnet50/model_best.pth.tar

# test on the source domain

CUDA_VISIBLE_DEVICES=0 \

python examples/test.py --dsbn --test-source \

-d market1501 --resume logs/spcl_uda/market2msmt_resnet50/model_best.pth.tar

To evaluate the unsupervised learning model, run:

CUDA_VISIBLE_DEVICES=0 \

python examples/test.py \

-d $DATASET --resume $PATH

Some examples:

### Market-1501 ###

CUDA_VISIBLE_DEVICES=0 \

python examples/test.py \

-d market1501 --resume logs/spcl_usl/market_resnet50/model_best.pth.tar

You can download the models reported in the paper from [Google Drive] or [Baidu Yun] (password: w3l9).

If you find this code useful for your research, please cite our paper:

@inproceedings{ge2020selfpaced,

title={Self-paced Contrastive Learning with Hybrid Memory for Domain Adaptive Object Re-ID},

author={Yixiao Ge and Feng Zhu and Dapeng Chen and Rui Zhao and Hongsheng Li},

booktitle={Advances in Neural Information Processing Systems},

year={2020}

}