This library is at a very early stage. Currently, it supports only A/B tests (two groups).

- Automatically generate charts from A/B tests

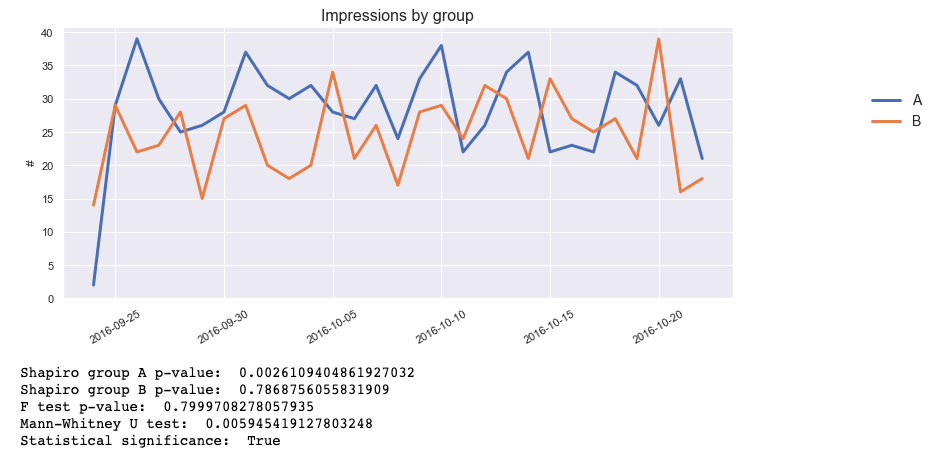

- Compute statistics to check whether the difference between groups is statistically significant

You can directly install all of the requirements for AlphaB by running `pip install -r requirements.txt` from the root of the repository.

- Matplotlib - a library to generate charts from data sets

- Pandas - a library providing high-performance, easy-to-use data structures and data analysis tools

- NumPy - a library providing support for large, multi-dimensional arrays and matrices, along with a large collection of high-level mathematical functions

- SciPy - a library for scientific and technical computing

- Pathlib - offers a set of classes to handle filesystem paths

```python
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
from scipy import stats
from pathlib import Path
```

It is highly recommended to use Jupyter to perform A/B testing analysis in Python, and AlphaB is built to be used in Jupyter Notebooks.

Here is an example of how to use AlphaB (for now, the example does not load a real data set):

```python
#!/usr/bin/env python3
from alphab import BucketTest
import pandas as pd


def main():
    # Placeholder: replace with your own grouped data frame
    df = pd.DataFrame()

    bucket_test = BucketTest(
        df=df,
        variable='impressions',
        group='design',
        x_axis='date',
        custom_title='Impressions by design',
        custom_ylabel='#',
        custom_day_interval=1,
    )

    bucket_test.render()
    bucket_test.compute_pvalues()


if __name__ == "__main__":
    main()
```

When creating a bucket test, you can specify the following arguments:

- `df` - the data frame to be used for the bucket test. It is recommended to group the data frame before passing it (e.g., when running a bucket test on the `design` group, group the data frame by `design` and `date` first)

- `variable` - specifies the values on the y-axis of the chart and the values used for the statistical significance check

- `group` - the name of the column by which the data frame is grouped

- `x_axis` (default: `date`) - specifies the values on the x-axis of the chart

- `custom_title` (default: `{variable} per {group}`) - specifies the title of the chart
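The recommendation to group the data frame first can be sketched with pandas. This assumes raw, event-level data with hypothetical `design`, `date`, and `impressions` columns (the toy values below are made up for illustration). The result has one row per design and date, which matches the grouping suggested above, but the exact format `BucketTest` expects should be checked against the AlphaB source.

```python
import pandas as pd

# Hypothetical raw data: several rows per design and date (toy values).
raw = pd.DataFrame(
    {
        "design": ["A", "A", "B", "B", "A", "B"],
        "date": pd.to_datetime(
            ["2023-01-01", "2023-01-01", "2023-01-01",
             "2023-01-02", "2023-01-02", "2023-01-02"]
        ),
        "impressions": [10, 5, 7, 8, 12, 3],
    }
)

# Group by the test group and the x-axis column, summing the metric,
# so that each (design, date) pair appears exactly once.
grouped = (
    raw.groupby(["design", "date"], as_index=False)["impressions"]
    .sum()
)

print(grouped)
```

The resulting `grouped` frame could then be passed as `df=grouped` together with `variable='impressions'` and `group='design'`, as in the example above.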

The `render()` method accepts the following options to customize your chart:

- `figure_size_x` (default: 12) - the width of the chart (in inches)

- `figure_size_y` (default: 5) - the height of the chart (in inches)

- `line_width` (default: 3) - the line width in a line chart (in points)

- `title_font_size` (default: 16) - the font size of the title in the figure

- `legend_font_size` (default: 14) - the font size of the legend in the figure

- `rotation` (default: 30) - the rotation of the x-axis tick labels (in degrees)
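To show what each option controls, here is a hypothetical `render_sketch` helper that uses the same option names and defaults with standard Matplotlib calls. It is an illustration under assumptions (one line per group, `x_axis` on the horizontal axis), not AlphaB's actual `render()` implementation.

```python
import matplotlib

matplotlib.use("Agg")  # non-interactive backend so the sketch runs headless
import matplotlib.pyplot as plt
import pandas as pd


def render_sketch(
    df,
    variable,
    group,
    x_axis="date",
    custom_title=None,
    figure_size_x=12,
    figure_size_y=5,
    line_width=3,
    title_font_size=16,
    legend_font_size=14,
    rotation=30,
):
    """Hypothetical helper: draw one line per group, styled by render()-like options."""
    fig, ax = plt.subplots(figsize=(figure_size_x, figure_size_y))
    # One line per group value (e.g. design A and design B).
    for name, part in df.groupby(group):
        ax.plot(part[x_axis], part[variable], linewidth=line_width, label=str(name))
    # Fall back to the documented default title "{variable} per {group}".
    ax.set_title(custom_title or f"{variable} per {group}", fontsize=title_font_size)
    ax.legend(fontsize=legend_font_size)
    # Rotate the x tick labels by the given number of degrees.
    ax.tick_params(axis="x", labelrotation=rotation)
    return fig, ax


# Usage with a small, already grouped toy data frame (made-up values).
df = pd.DataFrame(
    {
        "design": ["A", "A", "B", "B"],
        "date": pd.to_datetime(["2023-01-01", "2023-01-02"] * 2),
        "impressions": [15, 12, 7, 11],
    }
)
fig, ax = render_sketch(df, variable="impressions", group="design")
```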

In the `compute_pvalues()` method, you can customize the significance level used to reject the null hypothesis by adjusting the alpha value (default: 0.01): the null hypothesis is rejected when the p-value is below alpha.

Recommended values are: 0.01, 0.05, 0.1.
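As an illustration of how an alpha threshold is applied, the sketch below compares two groups with Welch's t-test from SciPy and rejects the null hypothesis when the p-value falls below alpha. The choice of test and the toy daily values are assumptions for illustration only; the test that AlphaB's `compute_pvalues()` actually uses may differ.

```python
from scipy import stats

# Toy daily values for two groups (made-up numbers for illustration).
group_a = [15, 12, 14, 16, 13, 15, 14]
group_b = [7, 11, 9, 8, 10, 9, 8]

alpha = 0.01  # significance level, same default as described above

# Welch's t-test: does not assume equal variances in the two groups.
result = stats.ttest_ind(group_a, group_b, equal_var=False)
p_value = result.pvalue

# Reject the null hypothesis (no difference in means) if p < alpha.
reject_null = p_value < alpha
print(f"p-value = {p_value:.4g}, reject H0 at alpha={alpha}: {reject_null}")
```

Lowering alpha (e.g. from 0.05 to 0.01) makes the check stricter: a smaller p-value is needed before the difference between groups is treated as significant.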

Read more about statistical significance and p-value here.

This research paper is also a good starting point for those who want to better understand these topics.

An example of a generated chart and statistical significance analysis:

- Customize the number of groups taken into account (A/B/C tests, A/B/C/D tests, A/B/C/D/E tests)

- Render charts and compute p-values for data from more than one data frame

- Create tests for the `render` and `compute_pvalues` methods

- Handle `x_axis` columns other than date

- Customize names of images saved with `plt.savefig(Path(""))`

You can contribute by forking this repository, looking through its issues, and opening a PR from your fork. Please write a clear PR description and provide examples showing how your new feature works.

- The method for checking statistical significance was heavily inspired by the work of Paulina Gralak @Loczi94.

Thanks a lot!

Creator and maintainer: Julia Jakubczak @veliona