This project demonstrates a Daml Enterprise deployment's observability features, along with example Grafana dashboards.

The project is self-contained: it provides scripts that generate requests, from which metrics are collected and displayed in the Grafana dashboards. It requires Daml 2.6.5 or newer and has been tested on macOS and Linux.

This project is provided for illustrative purposes only; the various configurations are tailored for local runs and may not fit other use cases.

This repository does not accept contributions at the moment.

Use the Makefile: run `make help` for available commands!

- Docker

- Docker Compose V2 (as plugin `2.x`)

- (Optional) Digital Asset Artifactory credentials to access private Daml Enterprise container images

Use `docker compose` (V2 ✔️), not `docker-compose` (V1 ❌).

Docker Compose will automatically build the image for the HTTP JSON API service from the release JAR file.

The `.env` file has environment variables that select which Canton and Daml SDK versions are used. See the `.env` examples later in this document for more details.

Please be aware that a different Daml Enterprise version may not generate all the metrics used in the Grafana dashboards, so some panels may stay empty.
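To check which versions a local checkout is configured for, a small helper can read the settings straight from `.env`. This is a minimal sketch, assuming the file uses plain `KEY=VALUE` lines without quoting; the function name `env_value` is hypothetical and not part of this project.

```shell
# Print the value of one KEY=VALUE setting from an env file.
# Usage: env_value KEY [FILE]   (FILE defaults to .env)
# Hypothetical helper, not shipped with this project.
env_value() {
  # Match only lines that start with KEY=, so commented-out lines are ignored.
  # If the key appears more than once, keep the last assignment.
  grep -E "^$1=" "${2:-.env}" | tail -n 1 | cut -d= -f2-
}

# Example (run from the project root):
#   env_value CANTON_IMAGE
#   env_value CANTON_VERSION
#   env_value SDK_VERSION
```

To see how Compose itself interpolates these values, `docker compose config` prints the resolved configuration.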

To quickly get up and running, make sure you have all the prerequisites installed and then:

- Ensure you have enough CPU/RAM/disk to run this project; if resource limits are reached, a container can be killed. Canton, for example, can use over 4 GB of RAM.

- Start everything:

  `docker compose up`

- Create workload: several scripts generate load; run them in different terminals:

  - `scripts/generate-load.sh` (generates gRPC traffic to the Ledger API by running the conformance tests in a loop)

  - `scripts/send-json-api-requests.sh` (generates HTTP traffic to the HTTP JSON API service)

  - `scripts/send-trigger-requests.sh` (generates HTTP traffic to the Trigger service)

- Log in to Grafana at http://localhost:3000/ using the default user and password `digitalasset`. After you open any dashboard, you can lower the time range to 5 minutes and the refresh interval to 10 seconds to see results quickly.

- When you stop, it is recommended to clean up everything and start fresh next time:

  `docker compose down -v`
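The resource check in the first step can be partly automated. The sketch below compares the memory visible to the Docker daemon against a threshold. `has_at_least_gib` is a hypothetical helper, and the 6 GiB threshold in the usage comment is an assumption chosen only to leave some headroom above the 4 GB that Canton alone can use.

```shell
# Succeed when a byte count is at least the given number of GiB.
# Usage: has_at_least_gib BYTES GIB
# Hypothetical helper, not shipped with this project.
has_at_least_gib() {
  [ "$1" -ge $(( $2 * 1024 * 1024 * 1024 )) ]
}

# Example (requires a running Docker daemon; the threshold is an assumption):
#   mem="$(docker info --format '{{.MemTotal}}')"
#   has_at_least_gib "$mem" 6 || echo "Docker may not have enough memory for this project"
```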

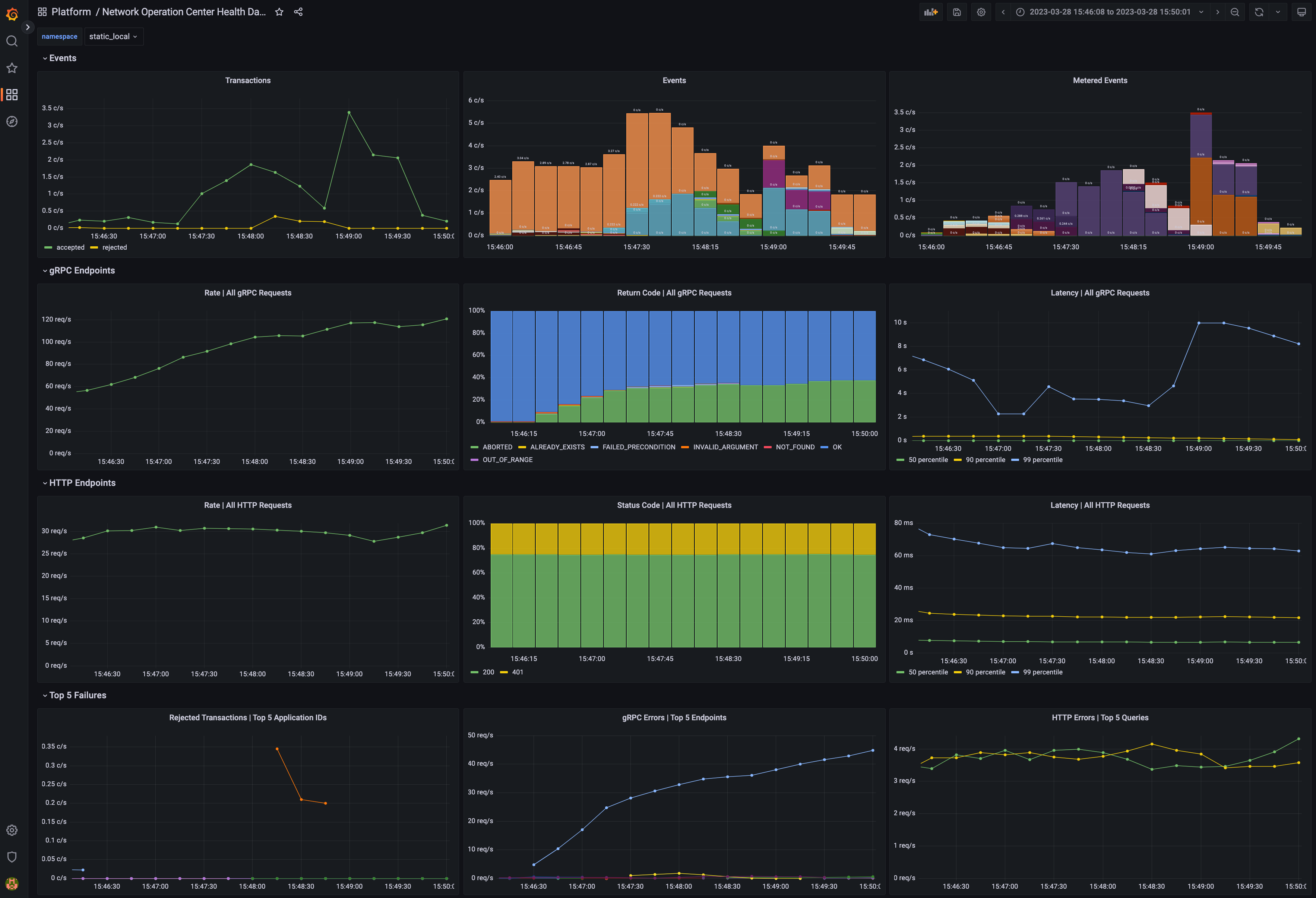

The "Operation Center" dashboard should look like this:

Docker Compose will start the following services:

- PostgreSQL database server

- Daml Platform

  - All-in-one Canton node (domain topology manager, mediator, sequencer and participant)

  - HTTP JSON API service

  - Trigger service

- Monitoring

  - Prometheus `2.x`

  - Grafana `9.x`

  - Node Exporter

  - Loki + Promtail `2.x`

Prometheus and Loki are preconfigured as data sources for Grafana. You can add other services/exporters in the Docker Compose file and scrape them by changing the Prometheus configuration.

Start everything (blocking command, shows all logs):

`docker compose up`

Start everything (detached: runs in the background, not showing logs):

`docker compose up -d`

If you see the error message `no configuration file provided: not found`, check that you are at the root of this project.
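The "not found" error above usually means Compose could not locate a project file. A minimal sketch of a pre-flight check, assuming the default file names that Compose V2 looks for; `has_compose_file` is a hypothetical helper. It inspects a single directory only, whereas Compose itself also searches parent directories.

```shell
# Succeed when DIR (default: current directory) contains a Compose file
# under one of the default names recognized by Docker Compose V2.
# Hypothetical helper, not shipped with this project.
has_compose_file() {
  dir="${1:-.}"
  for name in compose.yaml compose.yml docker-compose.yaml docker-compose.yml; do
    if [ -f "$dir/$name" ]; then
      return 0
    fi
  done
  return 1
}

# Example:
#   has_compose_file || echo "Run this from the root of the project"
```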

Open the Canton console:

`docker exec -it daml_observability_canton_console bin/canton -c /canton/config/console.conf`

To stop:

- If you used a blocking `docker compose up`, cancel it via keyboard with `[Ctrl]+[c]`.

- If you detached compose: `docker compose down`

Stop everything, remove networks and all Canton, Prometheus & Grafana data stored in volumes:

`docker compose down --volumes`

The following endpoints are exposed:

- Prometheus: http://localhost:9090/

- Grafana: http://localhost:3000/ (default user and password: `digitalasset`)

- Participant's Ledger API endpoint: http://localhost:10011/

- HTTP JSON API endpoint: http://localhost:4001/
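After a detached start, the HTTP endpoints may take a while to respond. A minimal sketch of a readiness poll, assuming `curl` is installed; `wait_for_url` and the `CURL` override variable are hypothetical names, and the retry count and delay are arbitrary defaults. It is meant for the HTTP endpoints only, since the Ledger API speaks gRPC.

```shell
# Poll URL until it answers with a successful HTTP status, or give up.
# Usage: wait_for_url URL [TRIES] [DELAY_SECONDS]
# Hypothetical helper; CURL can be overridden, e.g. for a dry run.
wait_for_url() {
  url="$1"
  tries="${2:-30}"
  delay="${3:-2}"
  i=0
  while [ "$i" -lt "$tries" ]; do
    # -f makes curl fail on HTTP errors, -sS hides progress but shows errors.
    if "${CURL:-curl}" -fsS -o /dev/null "$url"; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Example, using Prometheus's readiness endpoint:
#   wait_for_url http://localhost:9090/-/ready && echo "Prometheus is ready"
```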

Check all exposed services and ports in the Docker Compose YAML files.

Open the Canton console:

`docker exec -it daml_observability_canton_console bin/canton -c /canton/config/console.conf`

Show the logs of a specific container:

`docker logs daml_observability_postgres`

`docker logs daml_observability_prometheus`

`docker logs daml_observability_grafana`

You can open multiple terminals and follow logs (blocking command) of a specific container:

`docker logs -f daml_observability_postgres`

`docker logs -f daml_observability_prometheus`

`docker logs -f daml_observability_grafana`

You can query Loki for logs using Grafana in the Explore section.

You can query Prometheus for metrics using Grafana in the Explore section.
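Outside Grafana, the same metrics can be fetched from the Prometheus HTTP API. A minimal sketch, assuming `curl` is installed and Prometheus listens on http://localhost:9090/ as listed in this README; `prom_query`, `PROMETHEUS_URL` and `CURL` are hypothetical names. The `up` metric used in the example is generated by Prometheus itself for every scrape target.

```shell
# Run an instant PromQL query against the Prometheus HTTP API and print the
# JSON response. Usage: prom_query 'PROMQL_EXPRESSION'
# Hypothetical helper; PROMETHEUS_URL and CURL can be overridden.
prom_query() {
  # -G sends the data as URL query parameters; --data-urlencode escapes
  # characters such as braces and quotes in the expression.
  "${CURL:-curl}" -sS -G "${PROMETHEUS_URL:-http://localhost:9090}/api/v1/query" \
    --data-urlencode "query=$1"
}

# Example: list scrape targets and whether they are up.
#   prom_query up
```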

For the Canton node and HTTP JSON API service only, you can change `LOG_LEVEL` in the `.env` file:

`LOG_LEVEL=DEBUG`

Prometheus is configured in `prometheus.yml` (see the Prometheus documentation).

Reload or restart on changes:

- Reload:

  - Signal: `docker exec -it daml_observability_prometheus kill -HUP 1`

  - HTTP: `curl -X POST http://localhost:9090/-/reload`

- Restart: `docker compose restart prometheus`

- Grafana itself: `grafana.ini` (see the Grafana documentation)

- Data sources: `datasources.yml` (see the Grafana documentation)

- Dashboard providers: `dashboards.yml` (see the Grafana documentation)

Restart on changes: `docker compose restart grafana`

All dashboards (JSON files) are auto-loaded from the directory `grafana/dashboards/`:

- Automatic: place your JSON files in the folder (loaded at startup, reloaded every 30 seconds)

- Manual: create/edit via Grafana UI

- Loki itself: `loki.yaml` (see the Loki documentation)

  Restart on changes: `docker compose restart loki`

- Promtail: `promtail.yaml` (see the Promtail documentation)

  Restart on changes: `docker compose restart promtail`

- Get credentials from Digital Asset support. You can get an access key by logging in to digitalasset.jfrog.io and generating an identity token in your Artifactory profile page. If your email is john.doe@digitalasset.com, your Artifactory username is `john.doe`.

- Log in to Digital Asset's Artifactory at `digitalasset-docker.jfrog.io`. You will be prompted for the password; use your identity token:

  `docker login digitalasset-docker.jfrog.io -u <username>`

- Set the `.env` file environment variables `CANTON_IMAGE` and `CANTON_VERSION` to the version you want.

- Using Daml open-source public container images (default), pulled from Docker Hub:

  `CANTON_IMAGE=digitalasset/canton-open-source`

  `CANTON_VERSION=2.6.5`

  `SDK_VERSION=2.6.5`

  `LOG_LEVEL=INFO`

- Using Daml Enterprise private container images, pulled from Digital Asset's Artifactory:

  `CANTON_IMAGE=digitalasset-docker.jfrog.io/digitalasset/canton-enterprise`

  `CANTON_VERSION=2.6.5`

  `SDK_VERSION=2.6.5`

  `LOG_LEVEL=INFO`

The following optional services are also available:

- Participant Query Store (PQS)

To launch these additional services, follow these steps:

- Launch the other services, as described in the previous sections.

- Run the following script, which is required because the DARs must be uploaded before starting the PQS:

  `scripts/upload-test-dars.sh`

- Run the following script, which is required because the parties must be created before starting the PQS:

  `scripts/generate-load.sh 1 --concurrent-test-runs 1 --include TransactionService`

- Run the following to launch the optional services:

  `docker compose --file docker-compose-extras.yml up --detach`

- Explore the PQS database at http://localhost:8085, logging in with:

  - System: `PostgreSQL`

  - Server: `postgres`

  - Username: `canton`

  - Password: `supersafe`

  - Database: `pqs`

- To shut down these optional containers, run the following:

  `docker compose --file docker-compose-extras.yml down`

You may use the contents of this repository in parts or in whole according to the 0BSD license.

Copyright © 2023 Digital Asset (Switzerland) GmbH and/or its affiliates

Permission to use, copy, modify, and/or distribute this software for any purpose with or without fee is hereby granted.

THE SOFTWARE IS PROVIDED “AS IS” AND THE AUTHOR DISCLAIMS ALL WARRANTIES WITH REGARD TO THIS SOFTWARE INCLUDING ALL IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS. IN NO EVENT SHALL THE AUTHOR BE LIABLE FOR ANY SPECIAL, DIRECT, INDIRECT, OR CONSEQUENTIAL DAMAGES OR ANY DAMAGES WHATSOEVER RESULTING FROM LOSS OF USE, DATA OR PROFITS, WHETHER IN AN ACTION OF CONTRACT, NEGLIGENCE OR OTHER TORTIOUS ACTION, ARISING OUT OF OR IN CONNECTION WITH THE USE OR PERFORMANCE OF THIS SOFTWARE.