Towards robust facial action units detection

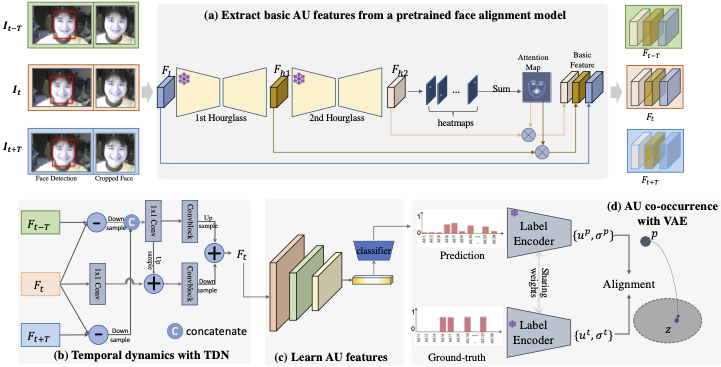

Overview of AU-Net

A simple yet strong baseline for facial AU detection:

- Extract basic AU features from a pretrained face alignment model

- Instantiate a TDN to model temporal dynamics over the static AU features

- Use a VAE module to regularize the initial prediction
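The three steps above can be illustrated with a minimal PyTorch sketch of the temporal stage. It assumes that per-frame features have already been extracted by a frozen face-alignment backbone. This README does not describe the TDN's actual architecture, so the hypothetical `TemporalAUHead` below uses a residual 1D convolution over time in its place. The feature size (64) and AU count (12) are illustrative only.

```python
import torch
import torch.nn as nn


class TemporalAUHead(nn.Module):
    """Hypothetical stand-in for the TDN: a residual 1D convolution over time
    on per-frame AU features, followed by a per-frame AU classifier."""

    def __init__(self, feat_dim: int, num_aus: int, kernel_size: int = 3):
        super().__init__()
        # padding = kernel_size // 2 keeps the sequence length for odd kernels
        self.temporal = nn.Conv1d(feat_dim, feat_dim, kernel_size,
                                  padding=kernel_size // 2)
        self.classifier = nn.Linear(feat_dim, num_aus)

    def forward(self, feats: torch.Tensor) -> torch.Tensor:
        # feats: (batch, time, feat_dim) static per-frame features
        x = feats.transpose(1, 2)                # -> (batch, feat_dim, time)
        x = torch.relu(self.temporal(x)) + x     # mix information across neighbouring frames
        x = x.transpose(1, 2)                    # -> (batch, time, feat_dim)
        return self.classifier(x)                # per-frame AU logits


if __name__ == "__main__":
    # Random tensors standing in for backbone features: 2 clips, 8 frames, 64-d
    feats = torch.randn(2, 8, 64)
    head = TemporalAUHead(feat_dim=64, num_aus=12)
    print(head(feats).shape)  # torch.Size([2, 8, 12])
```

A temporal convolution is one simple way to let each frame's prediction draw on its neighbours. The real TDN may be structured differently.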

Requirements

- Python 3

- PyTorch

Data and Data Preparation Tools

We use RetinaFace for face detection.
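Detected face boxes are often enlarged and made square before cropping, so that the downstream aligner sees the whole face. The helper below is a hypothetical sketch of that step. It assumes detector boxes in `(x1, y1, x2, y2)` pixel format, and the `scale` margin is an assumption rather than a value taken from this repository.

```python
def expand_box(box, scale, img_w, img_h):
    """Hypothetical helper: grow an (x1, y1, x2, y2) box into a square whose side
    is scale * max(width, height), centred on the box and clipped to the image."""
    x1, y1, x2, y2 = box
    cx, cy = (x1 + x2) / 2, (y1 + y2) / 2
    half = scale * max(x2 - x1, y2 - y1) / 2
    # Clip to the image so the crop never indexes outside the frame
    nx1 = max(0, int(round(cx - half)))
    ny1 = max(0, int(round(cy - half)))
    nx2 = min(img_w, int(round(cx + half)))
    ny2 = min(img_h, int(round(cy + half)))
    return nx1, ny1, nx2, ny2


if __name__ == "__main__":
    print(expand_box((100, 120, 200, 240), 1.2, 640, 480))  # (78, 108, 222, 252)
```

The returned coordinates can be used directly to slice an image array, for example `img[y1:y2, x1:x2]`.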

Training and Testing

- To train the VAE module on BP4D split 1, run:

```shell
python train_vae.py --data BP4D --subset 1 --weight 0.3
```
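For orientation, here is a minimal PyTorch sketch of a standard VAE objective. It assumes, hypothetically, that the VAE learns to reconstruct binary AU occurrence vectors. `AUVAE`, `vae_loss`, and `kl_weight` are illustrative names. This README does not document what `--weight` controls, so do not read it as the `kl_weight` below.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class AUVAE(nn.Module):
    """Hypothetical VAE over AU occurrence vectors (one entry per AU)."""

    def __init__(self, num_aus: int, hidden: int = 32, latent: int = 8):
        super().__init__()
        self.enc = nn.Linear(num_aus, hidden)
        self.mu = nn.Linear(hidden, latent)
        self.logvar = nn.Linear(hidden, latent)
        self.dec = nn.Sequential(nn.Linear(latent, hidden), nn.ReLU(),
                                 nn.Linear(hidden, num_aus))

    def forward(self, x):
        h = F.relu(self.enc(x))
        mu, logvar = self.mu(h), self.logvar(h)
        # Reparameterisation trick: z = mu + sigma * eps, with eps ~ N(0, I)
        z = mu + torch.randn_like(mu) * torch.exp(0.5 * logvar)
        return self.dec(z), mu, logvar  # decoder output is per-AU logits


def vae_loss(recon_logits, target, mu, logvar, kl_weight=1.0):
    batch = target.size(0)
    # Reconstruction: binary cross-entropy summed over AUs, averaged over the batch
    recon = F.binary_cross_entropy_with_logits(recon_logits, target,
                                               reduction="sum") / batch
    # KL(q(z|x) || N(0, I)), averaged over the batch
    kl = -0.5 * torch.sum(1 + logvar - mu.pow(2) - logvar.exp()) / batch
    return recon + kl_weight * kl


if __name__ == "__main__":
    torch.manual_seed(0)
    labels = (torch.rand(64, 12) > 0.7).float()  # random stand-in AU labels
    model = AUVAE(num_aus=12)
    opt = torch.optim.Adam(model.parameters(), lr=1e-3)
    for _ in range(100):
        recon, mu, logvar = model(labels)
        loss = vae_loss(recon, labels, mu, logvar)
        opt.zero_grad()
        loss.backward()
        opt.step()
    print(f"final loss: {loss.item():.3f}")
```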

- To train AU-Net, run the following, replacing `'pretrained vae model'` with the path to your pretrained VAE model:

```shell
python train_video_vae.py --data BP4D --vae 'pretrained vae model'
```
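One common way to reuse a pretrained module while training the rest of a network is to freeze it and let gradients flow through it to the trainable layers. The sketch below assumes AU-Net uses its VAE this way, which this README does not confirm. `predictor` and `refiner` are hypothetical stand-ins with random weights, and `freeze` is an illustrative helper.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def freeze(module: nn.Module) -> nn.Module:
    """Put a pretrained module in eval mode and stop gradient updates to its weights."""
    module.eval()
    for p in module.parameters():
        p.requires_grad_(False)
    return module


num_aus, feat_dim = 12, 64  # illustrative sizes
predictor = nn.Linear(feat_dim, num_aus)  # hypothetical trainable AU predictor
# Hypothetical pretrained refiner mapping AU vectors to AU logits. In practice its
# weights would be restored with refiner.load_state_dict(torch.load(path)), where
# the path and checkpoint layout are assumptions.
refiner = freeze(nn.Sequential(nn.Linear(num_aus, 32), nn.ReLU(),
                               nn.Linear(32, num_aus)))

# Only trainable parameters are passed to the optimizer
opt = torch.optim.Adam([p for p in predictor.parameters() if p.requires_grad], lr=1e-4)

feats = torch.randn(8, feat_dim)
labels = (torch.rand(8, num_aus) > 0.7).float()
initial = torch.sigmoid(predictor(feats))  # initial AU probabilities
refined_logits = refiner(initial)          # frozen weights, but gradients reach predictor
loss = F.binary_cross_entropy_with_logits(refined_logits, labels)
opt.zero_grad()
loss.backward()
opt.step()
```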

- Pretrained model test results:

| BP4D | Average F1-score (%) |
|---|---|
| bp4d_split* | 65.0 |

| DISFA | Average F1-score (%) |
|---|---|
| disfa_split* | 66.1 |
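The scores above are average F1-scores. A common convention in AU detection is to compute F1 per AU from frame-level binary predictions and then average over AUs. The sketch below follows that convention. It is an assumption about how these numbers were computed, including how the splits marked `*` are aggregated. Returning 0.0 when an AU has no positives at all is also an assumed convention.

```python
def f1_score(preds, labels):
    """F1 for one AU from binary per-frame predictions and labels (2TP / (2TP + FP + FN))."""
    tp = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 1)
    fp = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 0)
    fn = sum(1 for p, y in zip(preds, labels) if p == 0 and y == 1)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def average_f1(pred_matrix, label_matrix):
    """Mean per-AU F1 in percent; rows are frames, columns are AUs."""
    num_aus = len(label_matrix[0])
    scores = []
    for j in range(num_aus):
        preds = [row[j] for row in pred_matrix]
        labels = [row[j] for row in label_matrix]
        scores.append(f1_score(preds, labels))
    return 100.0 * sum(scores) / num_aus


if __name__ == "__main__":
    preds = [[1, 0], [1, 1], [0, 1], [0, 0]]
    labels = [[1, 0], [0, 1], [0, 1], [1, 0]]
    print(average_f1(preds, labels))  # 75.0 (AU 0: F1 = 0.5, AU 1: F1 = 1.0)
```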

- Demo: predict 15 AUs
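A demo like this usually turns per-frame AU probabilities into binary occurrences by applying a threshold. The hypothetical helper below assumes a 0.5 threshold and a list of per-AU probabilities in model output order. The real demo's threshold and AU ordering are not stated in this README.

```python
def active_aus(probs, threshold=0.5, names=None):
    """Hypothetical helper: return the AUs whose probability reaches the threshold,
    as indices, or as names if a list of AU names (in output order) is given."""
    idx = [i for i, p in enumerate(probs) if p >= threshold]
    return [names[i] for i in idx] if names is not None else idx


if __name__ == "__main__":
    print(active_aus([0.1, 0.8, 0.5, 0.3]))  # [1, 2]
```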

Acknowledgements

This repo is built using components from JAANet and EmoNet.

License

This project is licensed under the MIT License.