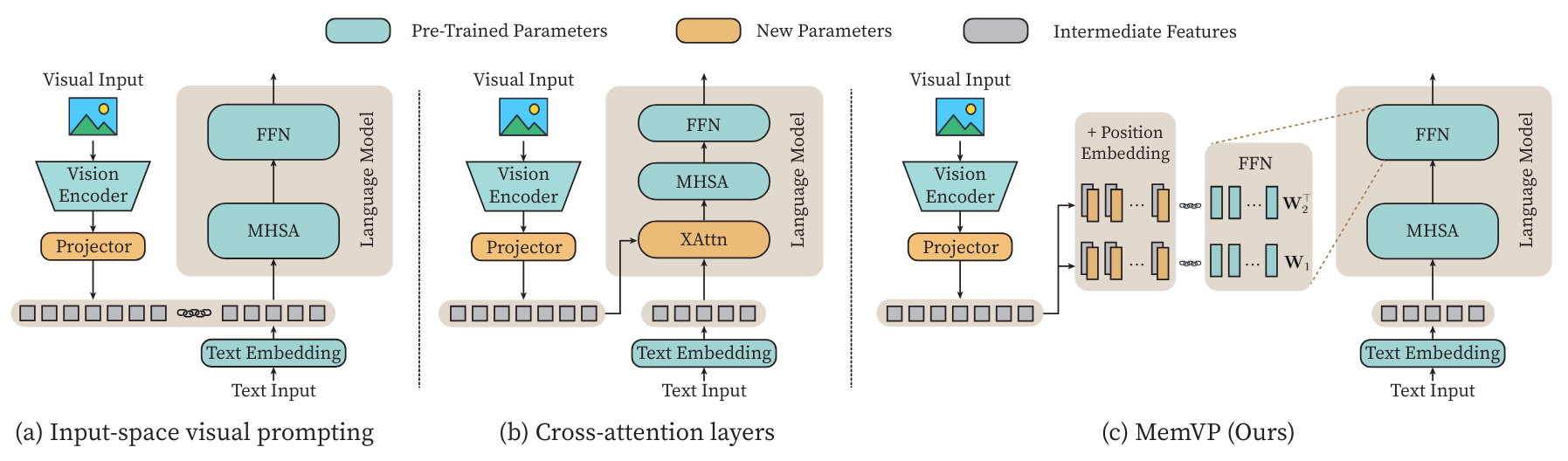

Official code of "Memory-Space Visual Prompting for Efficient Vision-Language Fine-Tuning".

conda create -n memvp python==3.10

conda activate memvp

pip install -r requirements.txt

pip install -e .

- Code of experiments on LLaMA.

- Code of experiments on BART and T5.

- For ScienceQA, please refer to the official repo.

- For the LLaMA weights, request them through the official form or use the unofficial HuggingFace repos for LLaMA-7B and LLaMA-13B.

<your path>/

    |-- memvp
    |-- scripts
    |-- train.py
    |-- eval.py
    ......
    |-- data/
        |-- problem.json
        |-- pid_splits.json
        |-- captions.json
        |-- images
            |-- train          # ScienceQA train image
            |-- val            # ScienceQA val image
            |-- test           # ScienceQA test image
        |-- weights
            |-- tokenizer.model
            |-- 7B
                |-- params.json
                |-- consolidated.00.pth
            |-- 13B
                |-- params.json
                |-- consolidated.00.pth
                |-- consolidated.01.pth

# LLaMA-7B

bash scripts/finetuning_sqa_7b.sh

bash scripts/eval_sqa_7b.sh

# LLaMA-13B

bash scripts/finetuning_sqa_13b.sh

bash scripts/eval_sqa_13b.sh

Fine-tuning takes around 40 minutes for LLaMA-7B and 1 hour for LLaMA-13B on 8x A800 (80G).
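Before launching the scripts, it can help to confirm that the data directory matches the layout listed earlier. The following is a minimal sketch and not part of the repository: the helper name `missing_paths` is hypothetical, and it assumes that `weights` sits inside `data/` and that the script is run from `<your path>/`.

```python
from pathlib import Path

# Files and folders expected under data/, taken from the layout listed earlier.
# Assumption: the weights folder lives inside data/.
COMMON = [
    "problem.json",
    "pid_splits.json",
    "captions.json",
    "images/train",
    "images/val",
    "images/test",
    "weights/tokenizer.model",
]

# Checkpoint files per model size (LLaMA-13B is split into two shards).
PER_MODEL = {
    "7B": ["params.json", "consolidated.00.pth"],
    "13B": ["params.json", "consolidated.00.pth", "consolidated.01.pth"],
}


def missing_paths(data_root, model_size="7B"):
    """Return the expected relative paths that do not exist under data_root."""
    root = Path(data_root)
    expected = COMMON + [
        f"weights/{model_size}/{name}" for name in PER_MODEL[model_size]
    ]
    return [rel for rel in expected if not (root / rel).exists()]


if __name__ == "__main__":
    # Report the status for both model sizes, relative to ./data.
    for size in PER_MODEL:
        missing = missing_paths("data", size)
        print(size, "OK" if not missing else f"missing: {missing}")
```

An empty list for a given model size means every path from the layout was found; anything else names the files or folders still to be downloaded or moved.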

@article{jie2024memvp,

title={Memory-Space Visual Prompting for Efficient Vision-Language Fine-Tuning},

author={Jie, Shibo and Tang, Yehui and Ding, Ning and Deng, Zhi-Hong and Han, Kai and Wang, Yunhe},

journal={arXiv preprint arXiv:2405.05615},

year={2024}

}