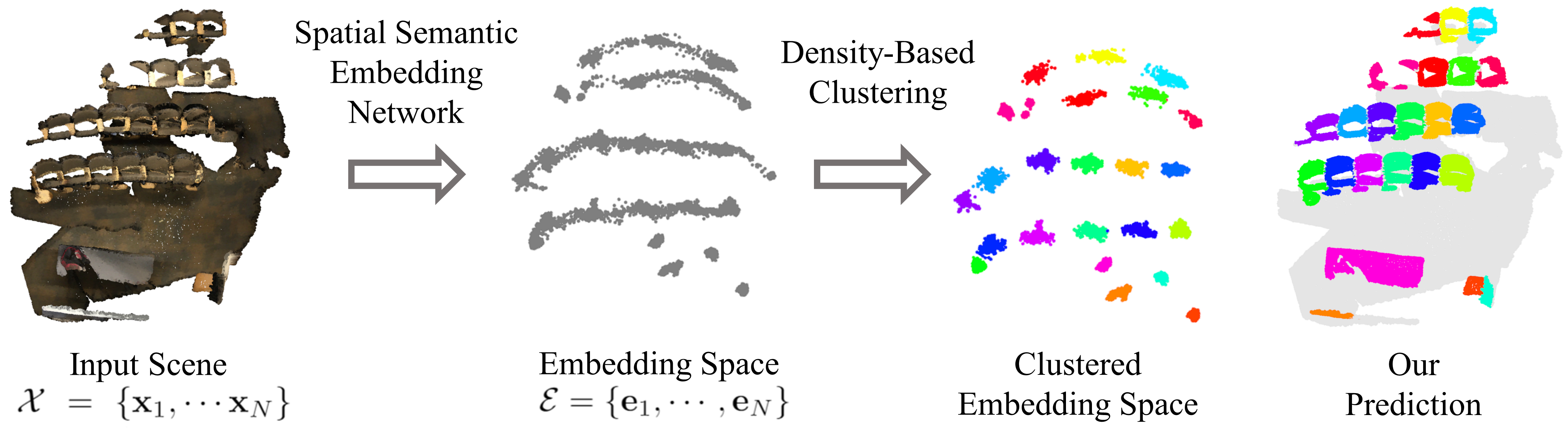

This repository contains code for Spatial Semantic Embedding Network: Fast 3D Instance Segmentation with Deep Metric Learning (submitted to IROS 2020) by Dongsu Zhang, Junha Chun, Sang Kyun Cha, Young Min Kim.

We currently rank 3rd on the ScanNet 3D Instance Segmentation Challenge by AP.

Code for running the pretrained network, visualizing the validation results on ScanNet, and training from scratch is available. The code has been tested on Ubuntu 18.04 with CUDA 10.0. The sections below are guides for installing and running the code. Our project uses Minkowski Engine to construct the sparse convolutional network.

Anaconda and environment installations

conda create -n ssen python=3.7

conda activate ssen

conda install openblas

pip install -r requirements.txt

conda install pytorch==1.3.1 torchvision==0.4.2 cudatoolkit=10.0 -c pytorch

pip install MinkowskiEngine==0.4.2
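After installation, a quick import check can confirm that the environment is usable. The sketch below is not part of the repository: the helper name `missing_packages` is hypothetical, and it assumes only the usual import names `torch`, `torchvision`, and `MinkowskiEngine`.

```python
import importlib.util

# Import names of the packages installed above.
REQUIRED = ["torch", "torchvision", "MinkowskiEngine"]


def missing_packages(names):
    """Return the subset of `names` that cannot be found on the import path."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages(REQUIRED)
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All required packages were found.")
```

The check only looks the packages up without importing them, so it runs quickly but does not verify versions or CUDA support.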

To visualize the outputs of our model,

- Download the semantic segmentation model, the instance segmentation model, and the example point cloud from Google Drive.

The semantic segmentation model was pretrained with Spatio Temporal Segmentation, but it differs slightly from the Spatio Temporal Segmentation model, since the semantic labels used for instance segmentation differ slightly from those used for semantic segmentation in ScanNet.

Both models are pretrained on the train and validation sets, and example_scene.pt is a preprocessed point cloud from the test set.

- Place the files as below.

{repo_root}/

- data/

- models/

- instance_model.pt

- semantic_model.pt

- example_scene.pt

Then run

python eval.py

The validation results are on Google Drive. Download the scenes and run

python visualize.py --scene_path {scene_path}

The semantic labels in the visualizations were obtained by running inference with 10 rotations.

Download ScanNet from its homepage and place it under ./data/scannet.

You need to sign the terms of use.

The data folders should be placed as follows.

{repo_root}/

- data/

- scannet/

- scans/

- scans_test/

- scannet_combined.txt

- scannet_train.txt

- scannet_val.txt
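Before preprocessing, it can help to confirm that the expected entries are in place. This is a minimal sketch rather than repository code: the helper `missing_entries` is hypothetical, and treating every listed entry as a direct child of data/scannet is an assumption.

```python
from pathlib import Path

# Entries from the layout above; placing all of them directly under
# data/scannet is an assumption.
EXPECTED = [
    "scans",
    "scans_test",
    "scannet_combined.txt",
    "scannet_train.txt",
    "scannet_val.txt",
]


def missing_entries(repo_root, expected=EXPECTED):
    """Return the expected entries that do not exist under data/scannet."""
    scannet_dir = Path(repo_root) / "data" / "scannet"
    return [name for name in expected if not (scannet_dir / name).exists()]


if __name__ == "__main__":
    missing = missing_entries(".")
    print("Missing:", missing if missing else "nothing")
```

Run it from the repository root; an empty result means every listed entry was found.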

Our model preprocesses all point clouds into .pt files.

To preprocess data, run

python -m utils.preprocess_data

Our network also requires predicted semantic labels for training.

From Spatio Temporal Segmentation’s model zoo, download the ScanNet pretrained model (train only) and place it at ./data/models/MinkUNet34C-train-conv1-5.pth.

We found that transfer learning from the semantic segmentation model greatly reduced training time.

python -m utils.preprocess_semantic

The output is saved as {scene_name}_semantic_segment.pt, a tensor of shape N x 9 where N is the number of points.

Indices [0:3] are coordinates, [3:6] are color features, [6:8] are the (ground truth) semantic and instance labels, and [8] is the semantic label predicted by the pretrained semantic model.
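The column layout can be written down as named slices. This is a sketch, not code from the repository: the names `COLUMNS` and `split_row` are hypothetical, and it assumes nine columns per point (three coordinates, three color features, two ground-truth labels, one predicted label), which is what the listed fields add up to.

```python
# Assumed column layout of one row of {scene_name}_semantic_segment.pt.
COLUMNS = {
    "coords": slice(0, 3),         # x, y, z
    "colors": slice(3, 6),         # color features
    "gt_labels": slice(6, 8),      # ground-truth semantic and instance labels
    "pred_semantic": slice(8, 9),  # semantic label from the pretrained model
}


def split_row(row):
    """Split one point (a sequence of 9 values) into its named fields."""
    return {name: row[cols] for name, cols in COLUMNS.items()}


example = [0.1, 0.2, 0.3, 120, 130, 140, 5, 17, 5]  # made-up values
fields = split_row(example)
print(fields["gt_labels"])  # [5, 17]
```

The same slices can index the column axis of a loaded tensor, for example `points[:, COLUMNS["coords"]]` after `points = torch.load(path)`, assuming the file holds a single 2D tensor.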

To train the instance segmentation model, run

python main.py

To use transfer learning, download the pretrained model from Spatio Temporal Segmentation.

To change the training hyperparameters, edit the configs in the configs/ folder.

To view the training logs and visualize the embedding space, run

tensorboard --logdir log --port 8123