- GitHub: https://github.com/vietnlp/etnlp

- Video: https://vimeo.com/317599106

- Paper: https://arxiv.org/abs/1903.04433

Please cite the arXiv paper whenever ETNLP (or the pre-trained embeddings) is used to produce published results or is incorporated into other software:

@inproceedings{vu:2019n,

title={ETNLP: A Visual-Aided Systematic Approach to Select Pre-Trained Embeddings for a Downstream Task},

author={Vu, Xuan-Son and Vu, Thanh and Tran, Son N and Jiang, Lili},

booktitle={Proceedings of the International Conference Recent Advances in Natural Language Processing (RANLP)},

year={2019}

}

The goal is to compare the quality of embedding models on the word analogy task.

- Input: a pre-trained embedding vector file (word2vec format) and a word analogy file.

- Output: (1) the quality of the embedding model, measured by the MAP/P@10 score; (2) paired t-tests showing the significance level of the differences between word embeddings.
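The scores above can be illustrated with a small, dependency-free sketch. It is not ETNLP's implementation: the vector-offset ranking, the function names, and the single-gold-answer definitions of P@k and average precision are assumptions made for illustration, and the exact metric definitions used by ETNLP may differ.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def rank_candidates(emb, a, b, c, topn=10):
    """Rank answers to 'a is to b as c is to ?' by cosine to (b - a + c)."""
    target = [y - x + z for x, y, z in zip(emb[a], emb[b], emb[c])]
    scored = [(cosine(target, vec), word)
              for word, vec in emb.items() if word not in (a, b, c)]
    scored.sort(reverse=True)
    return [word for _, word in scored[:topn]]


def hit_at_k(ranked, gold):
    """1.0 if the gold answer is among the top-k candidates, else 0.0."""
    return 1.0 if gold in ranked else 0.0


def average_precision(ranked, gold):
    """With a single gold answer, AP reduces to 1 / rank (0 if absent)."""
    for rank, word in enumerate(ranked, start=1):
        if word == gold:
            return 1.0 / rank
    return 0.0


def paired_t_statistic(xs, ys):
    """t statistic for paired samples, with len(xs) - 1 degrees of freedom."""
    diffs = [x - y for x, y in zip(xs, ys)]
    n = len(diffs)
    if n < 2:
        raise ValueError("need at least two paired scores")
    mean = sum(diffs) / n
    var = sum((d - mean) ** 2 for d in diffs) / (n - 1)
    if var == 0:
        raise ValueError("all paired differences are identical")
    return mean / math.sqrt(var / n)
```

Averaging `average_precision` over all analogy questions gives a MAP-style score for one embedding; feeding the per-category scores of two embeddings to `paired_t_statistic` gives the statistic to compare against a t distribution.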

- Adopt the English list by selecting suitable categories and translating them into the target language (i.e., Vietnamese).

- Remove categories that are inappropriate for the target language (for Vietnamese, categories 6, 10, 11, and 14).

- Add custom categories that suit the target language (e.g., cities and their zones in Vietnam for Vietnamese). Since most of this process is automated, it can be applied to other languages as well.

- capital-common-countries

- capital-world

- currency (e.g., Algeria | dinar | Angola | kwanza)

- city-in-zone (Vietnam's cities and their zones)

- family (e.g., boy | girl | brother | sister)

- gram1-adjective-to-adverb (NOT USED)

- gram2-opposite (e.g., acceptable | unacceptable | aware | unaware)

- gram3-comparative (e.g., bad | worse | big | bigger)

- gram4-superlative (e.g., bad | worst | big | biggest)

- gram5-present-participle (NOT USED)

- gram6-nationality-adjective-nguoi-tieng (e.g., Albania | Albanian | Argentina | Argentinean)

- gram7-past-tense (NOT USED)

- gram8-plural-cac-nhung (e.g., banana | bananas | bird | birds) (NOT USED)

- gram9-plural-verbs (NOT USED)
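A word analogy file organized by the categories above could be loaded roughly as follows. The file layout is an assumption, not something this README specifies: the sketch supposes that a line starting with `:` names a category and that every other line holds four terms separated by `|`, as in the examples above. The function name and the `skip` parameter are hypothetical.

```python
def parse_analogy_lines(lines, sep="|", skip=()):
    """Group analogy questions by category.

    Assumed layout: ': <category>' header lines, followed by lines of
    four terms separated by `sep`. Categories listed in `skip` are dropped.
    """
    categories = {}
    current = None
    for raw in lines:
        line = raw.strip()
        if not line:
            continue
        if line.startswith(":"):
            current = line[1:].strip()
            if current not in skip:
                categories.setdefault(current, [])
            continue
        parts = [part.strip() for part in line.split(sep)]
        # Ignore malformed lines and lines that belong to skipped categories.
        if len(parts) != 4 or current not in categories:
            continue
        categories[current].append(tuple(parts))
    return categories
```

Passing the names of the unused categories in `skip` keeps them out of the evaluation without editing the file.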

- Analogy: Word Analogy Task

- NER (w): NER task with hyper-parameters selected from the best F1 on the validation set.

- NER (w.o): NER task without selecting hyper-parameters from the validation set.

| Model | NER.w | NER.w.o | Analogy |

|---|---|---|---|

| BiLC3 + w2v | 89.01 | 89.41 | 0.4796 |

| BiLC3 + Bert_Base | 88.26 | 89.91 | 0.4609 |

| BiLC3 + w2v_c2v | 89.46 | 89.46 | 0.4796 |

| BiLC3 + fastText | 89.65 | 89.84 | 0.4970 |

| BiLC3 + Elmo | 89.67 | 90.84 | 0.4999 |

| BiLC3 + MULTI_WC_F_E_B | 91.09 | 91.75 | 0.4906 |

- Input: (1) list of input embeddings, (2) a vocabulary file.

- Output: embedding vectors of the given vocab file in .txt format, i.e., each line contains the embedding for a word. The file is then compressed in .gz format. This format is widely used in existing NLP toolkits (e.g., Reimers et al. [1]).

- `-input-c2v`: character embedding file

- `solveoov:1`: to solve OOV words of the 1st embedding. Similarly for more than one embedding, e.g., `solveoov:1:2`.
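The extraction step described above can be sketched in a few lines. This is an illustration under stated assumptions, not ETNLP's code: embeddings are modelled as plain dicts paired with their dimension, the output has one `word v1 v2 ...` line per vocabulary word with no header, and the hypothetical `fallback` callable stands in for whatever the character embedding does for OOV words.

```python
import gzip


def extract_vectors(vocab, embeddings, fallback=None):
    """Build one concatenated vector per vocabulary word.

    `embeddings` is a list of (table, dim) pairs, where `table` maps a
    word to a list of floats. A word missing from a table is filled by
    `fallback(word, dim)` if given, otherwise by zeros.
    """
    rows = {}
    for word in vocab:
        vector = []
        for table, dim in embeddings:
            if word in table:
                vector.extend(table[word])
            elif fallback is not None:
                vector.extend(fallback(word, dim))
            else:
                vector.extend([0.0] * dim)
        rows[word] = vector
    return rows


def write_gz(rows, path):
    """Write 'word v1 v2 ...' lines to a gzip-compressed text file."""
    with gzip.open(path, "wt", encoding="utf-8") as handle:
        for word, vector in rows.items():
            values = " ".join(f"{x:.6f}" for x in vector)
            handle.write(f"{word} {values}\n")
```

Concatenating several tables per word is also how a combined embedding's dimension adds up, e.g., two 300-dimensional tables yield 600-dimensional rows.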

[1] Nils Reimers and Iryna Gurevych. Reporting Score Distributions Makes a Difference: Performance Study of LSTM-networks for Sequence Tagging. arXiv, 2017. http://arxiv.org/abs/1707.09861

From source (Python 3.6.x):

- cd src/codes/

- pip install -r requirements.txt

- python setup.py install

From pip (Python 3.6.x):

- sudo apt-get install python3-dev

- pip install cython

- pip install git+https://github.com/vietnlp/etnlp.git

OR:

- pip install etnlp

- cd src/examples

- python test1_etnlp_preprocessing.py

- python test2_etnlp_extractor.py

- python test3_etnlp_evaluator.py

- python test4_etnlp_visualizer.py

- Install: https://github.com/epfml/sent2vec

01. git clone https://github.com/epfml/sent2vec

02. cd sent2vec; pip install .

- Extract embeddings for sentences (no tokenization is required before extraction):

import sent2vec

model = sent2vec.Sent2vecModel()

model.load_model('opendata_wiki_lowercase_words.bin')

emb = model.embed_sentence("tôi là sinh viên đh công nghệ, đại học quôc gia hà nội")

embs = model.embed_sentences(["tôi là sinh viên", "tôi là nhà thơ", "tôi là bác sĩ"])
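Once sentence embeddings are available, a common next step is to compare them. The sketch below is an illustrative addition rather than part of sent2vec or ETNLP: it assumes each embedding is a flat sequence of floats (for example, one row of the array returned by `embed_sentences`), and the helper names are hypothetical.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def most_similar(query, candidates):
    """Return the index of the candidate vector closest to `query`."""
    scores = [cosine_similarity(query, vec) for vec in candidates]
    return max(range(len(scores)), key=scores.__getitem__)
```

For instance, `most_similar(embs[0], embs[1:])` would pick, among the remaining sentences, the one closest to the first.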

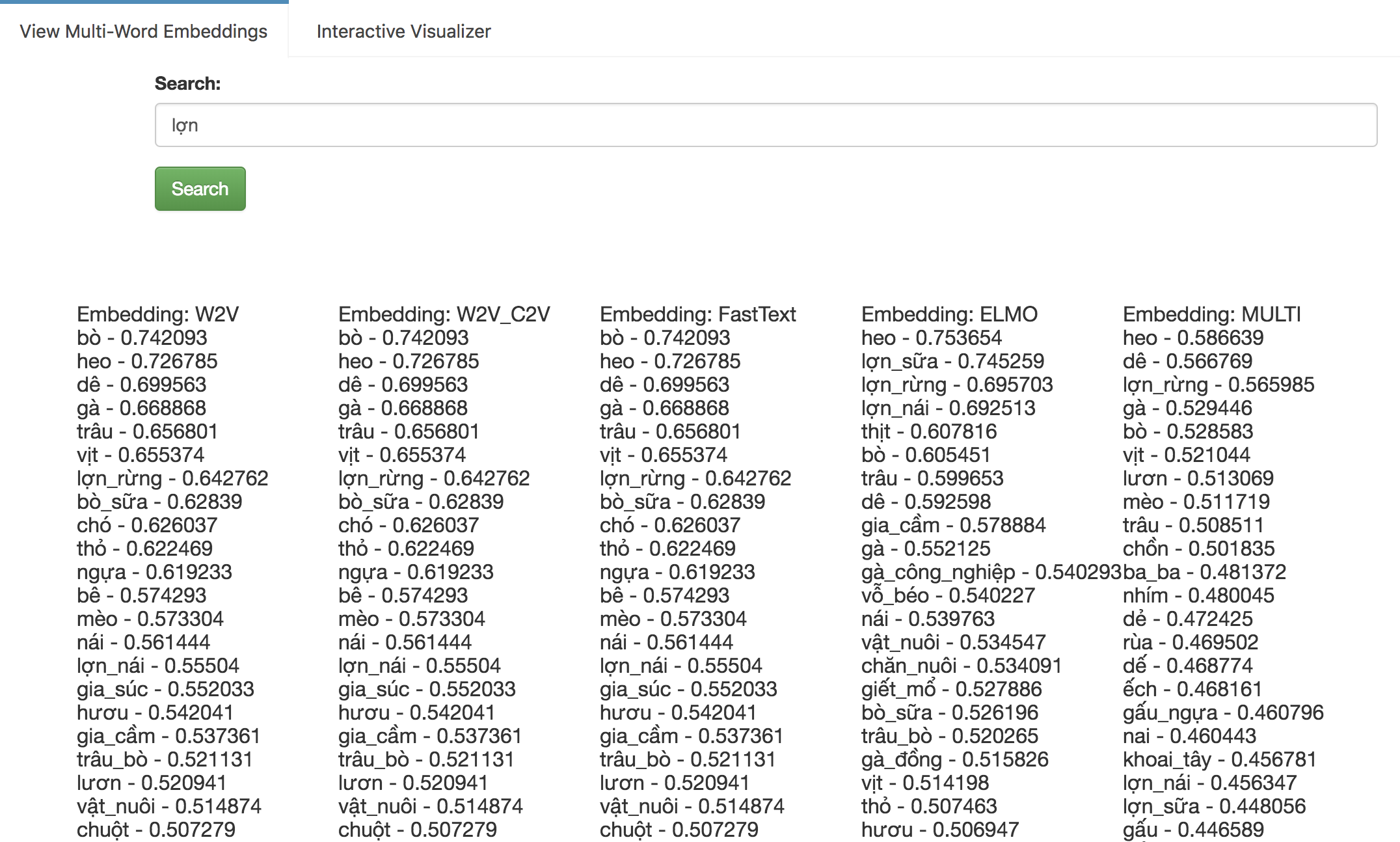

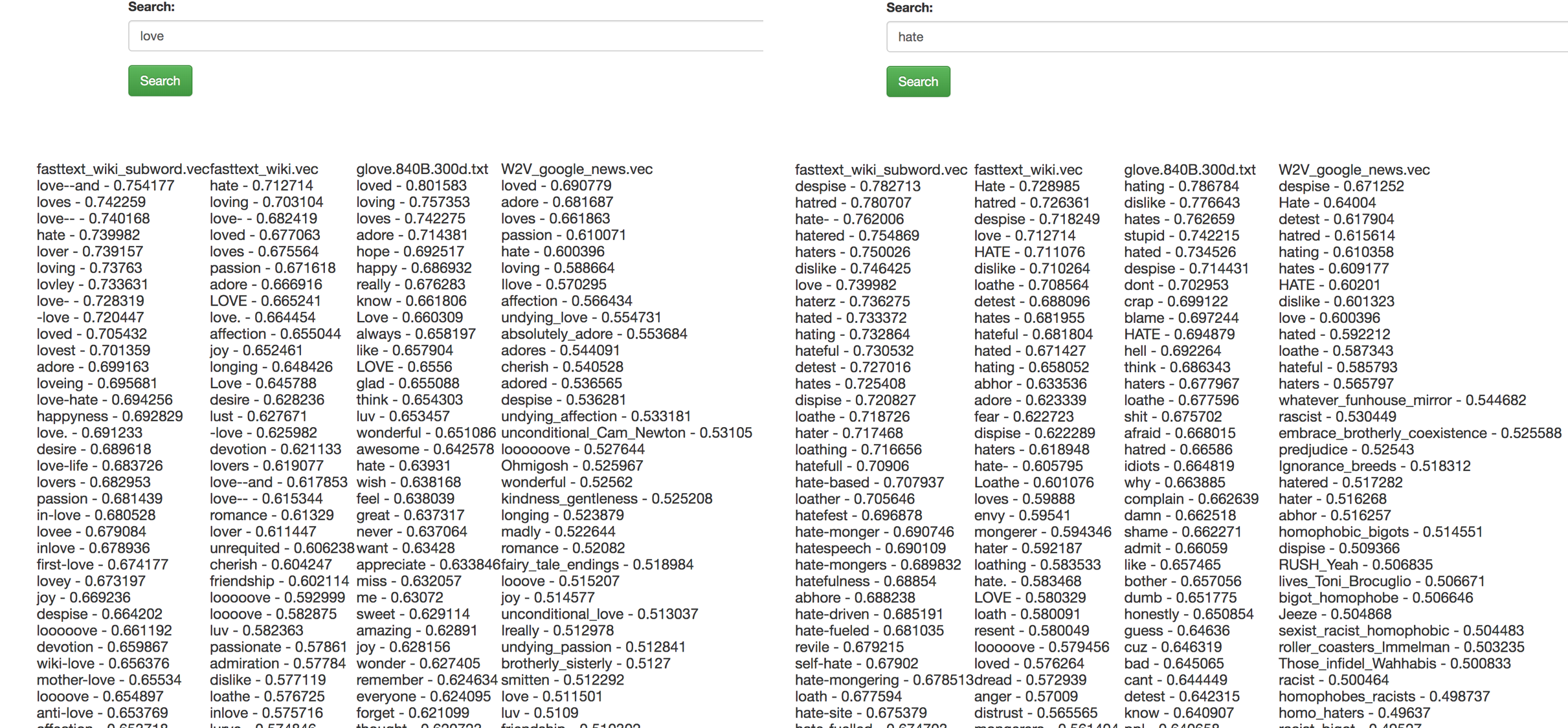

Side-by-side visualization:

- sh src/codes/04.run_etnlp_visualizer_sbs.sh

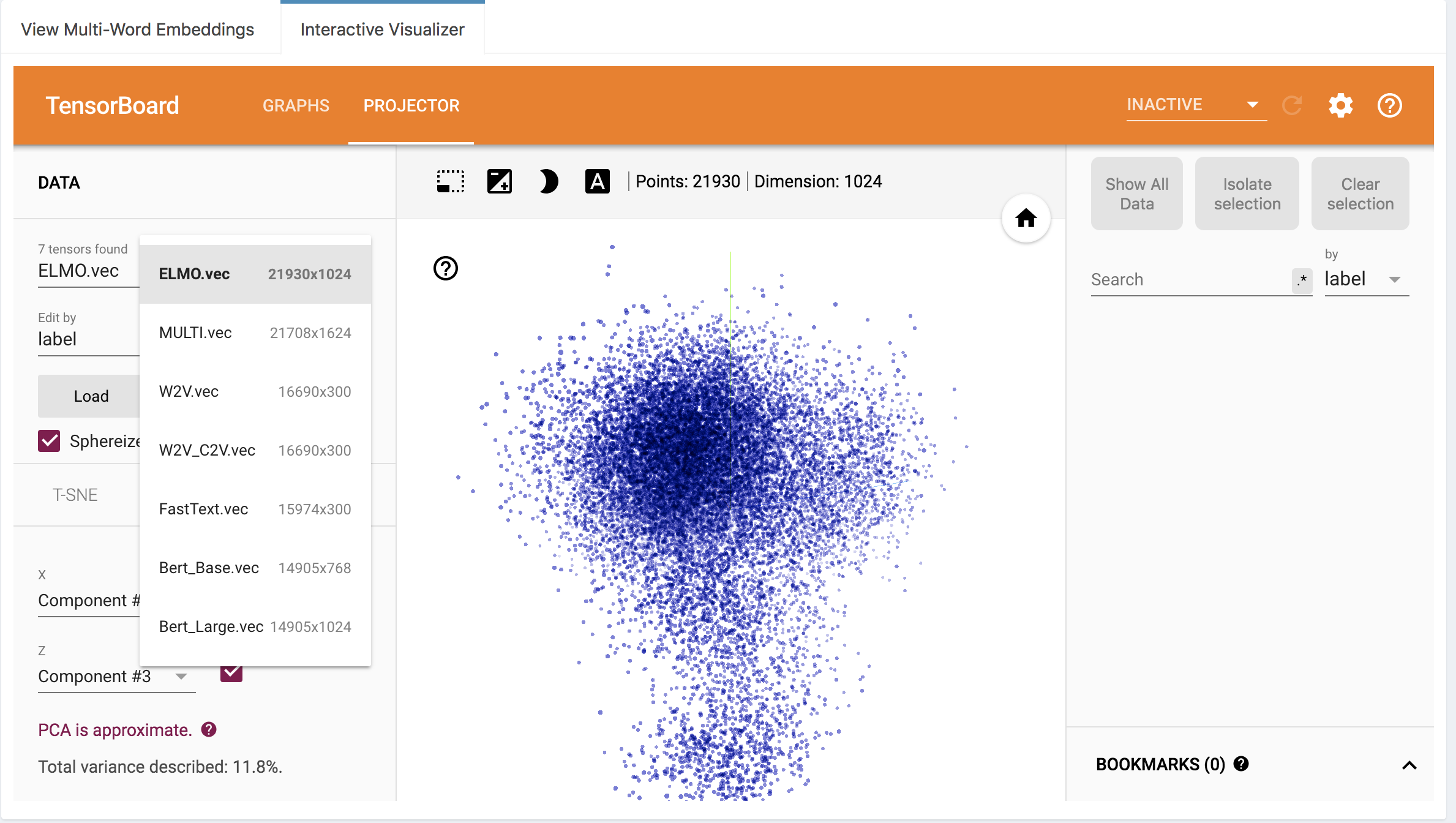

Interactive visualization:

- sh src/codes/04.run_etnlp_visualizer_inter.sh

| Word Analogy List | Download Link (NER Task) | Download Link (General) |

|---|---|---|

| Vietnamese (This work) | Link1 | Link1 |

| English (Mikolov et al. [2]) | [Link2] | Link2 |

| Portuguese (Hartmann et al. [3]) | [Link3] | Link3 |

- Training data: Wiki in Vietnamese:

| # of sentences | # of tokenized words |

|---|---|

| 6,685,621 | 114,997,587 |

- Download Pre-trained Embeddings:

(Note: The MULTI_WC_F_E_B is the concatenation of four embeddings: W2V_C2V, fastText, ELMO, and Bert_Base.)

| Embedding Model | Download Link (NER Task) | Download Link (AIVIVN SentiTask) | Download Link (General) |

|---|---|---|---|

| w2v | Link1 (dim=300) | [Link1] | [Link1] |

| w2v_c2v | Link2 (dim=300) | [Link2] | [Link2] |

| fastText | Link3 (dim=300) | [Link3] | [Link3] |

| fastText-Sent2Vec | [Link3] | [Link3] | Link3 (dim=300, 6GB, trained on 20GB of news data and the Wiki data of ETNLP) |

| Elmo | Link4 (dim=1024) | Link4 (dim=1024) | Link4 (dim=1024, 731MB; 1.9GB after extraction) |

| Bert_base | Link5 (dim=768) | [Link5] | [Link5] |

| MULTI_WC_F_E_B | Link6 (dim=2392) | [Link6] | [Link6] |

For transparency into our release cycle and to maintain backward compatibility, ETNLP is maintained under the Semantic Versioning guidelines as much as possible.

Releases will be numbered with the following format:

<major>.<minor>.<patch>

And constructed with the following guidelines:

- Breaking backward compatibility bumps the major (and resets the minor and patch)

- New additions without breaking backward compatibility bump the minor (and reset the patch)

- Bug fixes and misc changes bump the patch
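The three rules above can be written as a small function. This is only an illustration of the numbering scheme; the function name and the `change` labels are hypothetical and not part of ETNLP.

```python
def bump(version, change):
    """Return the next <major>.<minor>.<patch> version for a kind of change.

    `change` is one of "major" (breaks backward compatibility),
    "minor" (backward-compatible addition), or "patch" (bug fix or misc).
    """
    major, minor, patch = (int(part) for part in version.split("."))
    if change == "major":
        return f"{major + 1}.0.0"
    if change == "minor":
        return f"{major}.{minor + 1}.0"
    if change == "patch":
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown change type: {change!r}")
```

For example, a bug fix on 1.4.2 gives 1.4.3, while a breaking change gives 2.0.0.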

For more information on SemVer, please visit http://semver.org/.