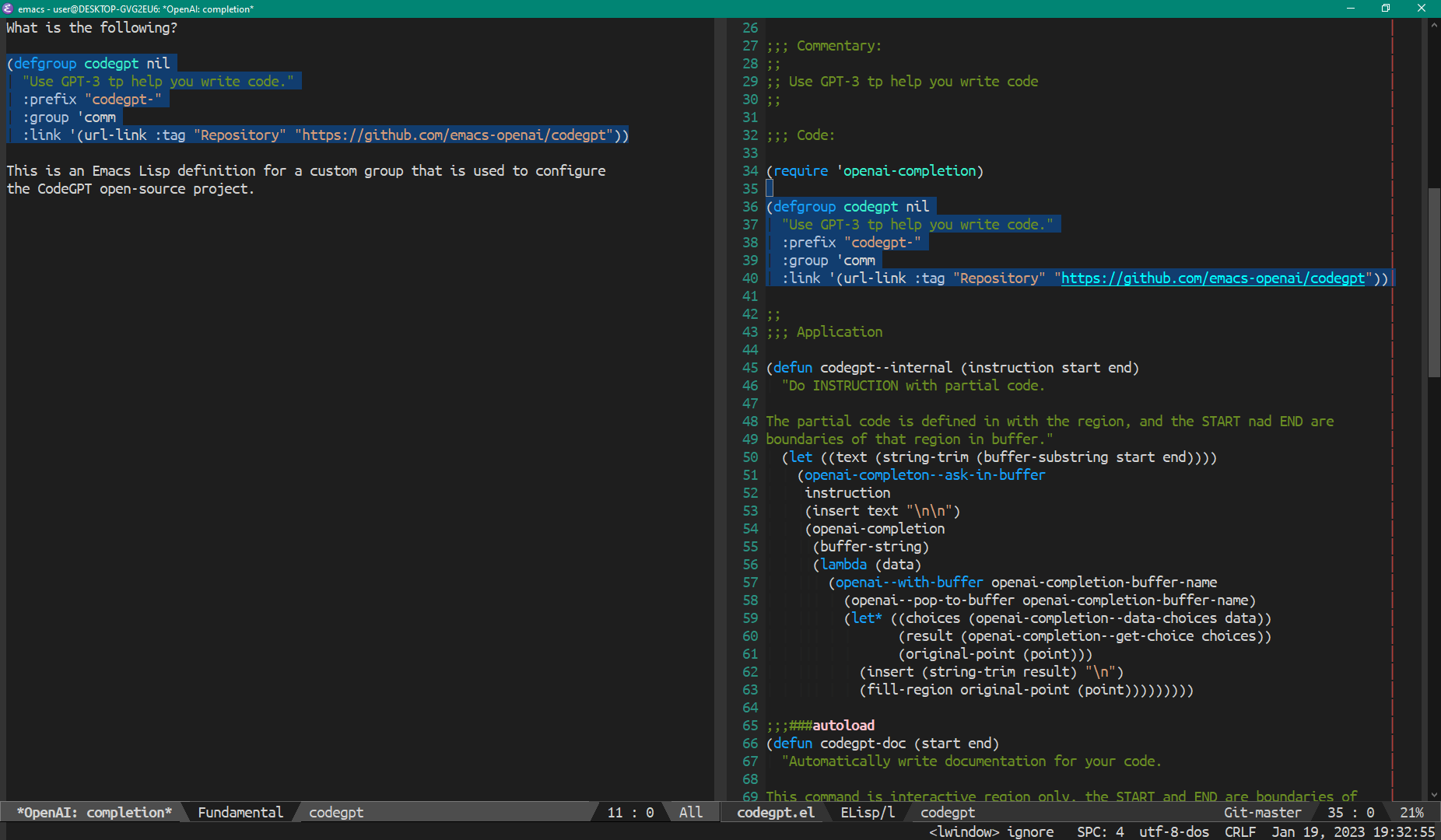

Use GPT-3 inside Emacs

This Emacs package lets you use the official OpenAI API to generate code or natural-language responses to your questions from OpenAI's GPT-3, right within the editor.

This package is available from JCS-ELPA. Install it from that repository and you should be good to go!
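Installing from JCS-ELPA presumably requires the archive to be registered with package.el first. A minimal sketch, assuming the archive name and URL below (neither is given in this README, so verify them against JCS-ELPA's own instructions):

```elisp
(require 'package)

;; Assumption: the archive name and URL are not stated in this README;
;; check JCS-ELPA's documentation before relying on them.
(add-to-list 'package-archives
             '("jcs-elpa" . "https://jcs-emacs.github.io/jcs-elpa/packages/") t)
```

After evaluating this, M-x package-refresh-contents should make the package visible to M-x package-install.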

Normally, you don't need to add (require 'codegpt) to your configuration, since most codegpt commands are autoloaded and can be called without loading the module!

If you are using use-package, add the following to your init.el file:

(use-package codegpt :ensure t)

or with straight.el:

(use-package codegpt

:straight (codegpt :type git :host github :repo "emacs-openai/codegpt"))

Alternatively, to install manually, copy all .el files in this repository to ~/.emacs.d/lisp and add the following:

(add-to-list 'load-path "~/.emacs.d/lisp/")

(require 'codegpt)

To use this extension, you will need an API key from OpenAI. To obtain one, follow these steps:

- Go to OpenAI's website. If you don't have an account, you will need to create one or sign up using your Google or Microsoft account.

- Click on the Create new secret key button.

- Copy the key and paste it into the 'API Key' field under the 'openai' custom group settings.
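The key can also be set from your init file instead of the Customize interface. A minimal sketch, assuming the option behind the 'API Key' field is named openai-key (this README does not name it; confirm with M-x customize-group RET openai) and that the key is kept in an environment variable called OPENAI_API_KEY (a name chosen here for illustration):

```elisp
;; Assumption: `openai-key' is the variable behind the 'API Key' field.
;; Reading the key from the environment keeps the secret out of init.el.
(setq openai-key (getenv "OPENAI_API_KEY"))
```

If the environment variable is unset, getenv returns nil, so requests would fail until a key is supplied.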

When you create a new account, you receive $18 in free credits for the API, which you must use within the first 90 days. You can see pricing information on OpenAI's pricing page. 1,000 tokens are about 700 words, and you can see the token count for each request at the end of the response in the sidebar.

Highlight or select code using the set-mark-command, then do:

M-x codegpt

List of supported commands:

| Command | Description |
|---|---|
| codegpt | The master command |
| codegpt-custom | Write your own instruction |
| codegpt-doc | Automatically write documentation for your code |
| codegpt-fix | Find problems with the selected code |
| codegpt-explain | Explain the selected code |
| codegpt-improve | Improve, refactor or optimize the selected code |
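If you call these commands often, binding them to keys saves a trip through M-x. A minimal sketch; the commands are the ones listed above, while the C-c g prefix and the individual keys are arbitrary choices made for illustration:

```elisp
;; Key choices are illustrative; pick any free prefix you like.
(global-set-key (kbd "C-c g g") #'codegpt)          ; master command
(global-set-key (kbd "C-c g d") #'codegpt-doc)      ; write documentation
(global-set-key (kbd "C-c g f") #'codegpt-fix)      ; find problems
(global-set-key (kbd "C-c g e") #'codegpt-explain)  ; explain selection
(global-set-key (kbd "C-c g i") #'codegpt-improve)  ; improve/refactor
```

Select a region first, then press the key, just as you would before running M-x codegpt.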

The default is completing through the Completions tunnel. If you want to use ChatGPT, do the following:

(setq codegpt-tunnel 'chat ; The default is 'completion

codegpt-model "gpt-3.5-turbo") ; You can pick any model you want!

- codegpt-tunnel - Completion channel you want to use. (Default: completion)

- codegpt-model - ID of the model to use.

- codegpt-max-tokens - The maximum number of tokens to generate in the completion.

- codegpt-temperature - What sampling temperature to use.
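The remaining two options can be set the same way. A minimal sketch; the values are illustrative only, not recommended defaults:

```elisp
;; Values are illustrative, not recommended defaults.
(setq codegpt-max-tokens 1000   ; cap the length of each generated response
      codegpt-temperature 0.2)  ; lower values give more deterministic output
```

A lower temperature tends to suit code generation, where repeatable output usually matters more than variety.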

If you would like to contribute to this project, you may either clone this repository and make pull requests, or clone the project and establish your own branch of this tool. Either approach is welcome!

To run the tests locally, you will need Eask, which all of the commands below use.

Install all dependencies and development dependencies:

$ eask install-deps --dev

To test the package's installation:

$ eask package

$ eask install

To test compilation:

$ eask compile

🪧 The following steps are optional, but we recommend that you act on these lint results!

The built-in checkdoc linter:

$ eask lint checkdoc

The standard package linter:

$ eask lint package

📝 P.S. For more information, find the Eask manual at https://emacs-eask.github.io/.

This program is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation, either version 3 of the License, or (at your option) any later version.

This program is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details.

You should have received a copy of the GNU General Public License along with this program. If not, see https://www.gnu.org/licenses/.

See LICENSE for details.