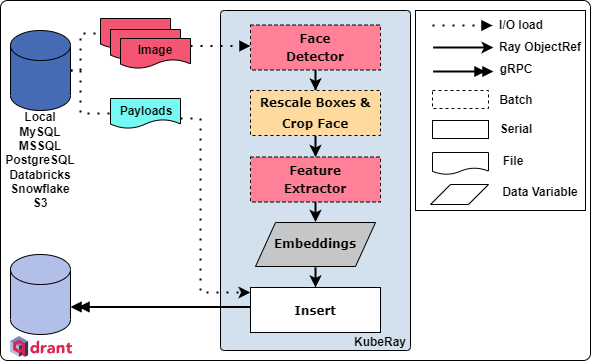

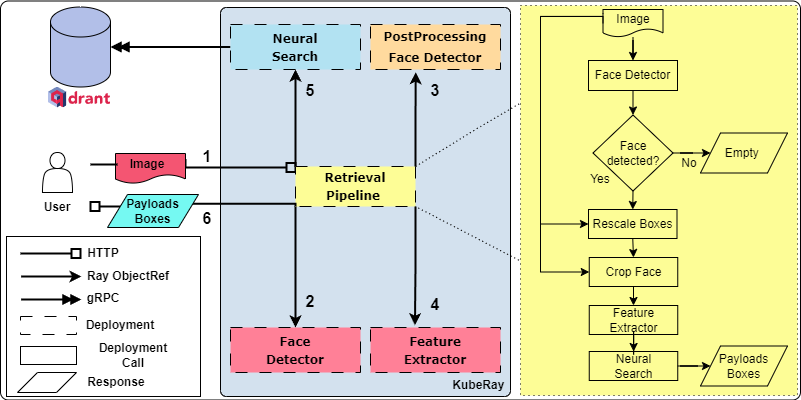

The goal of this project was to build a lightweight, scalable face recognition system. Several strategies were adopted to remove bottlenecks such as serial pre- and post-processing and slow face matching.

Efficient, lightweight models were selected: the Ultra Lightweight face detector for face detection and MobileFaceNet for feature extraction.

Preprocessing steps were fused into both models. NMS layers were added to the face detector using ONNX, with an IoU threshold of 0.5 and a confidence threshold of 0.95. The model graphs were simplified with ONNX-sim.
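The NMS step runs inside the exported ONNX graph. To show how its two thresholds interact, the sketch below implements plain greedy NMS in Python with the same defaults (IoU 0.5, confidence 0.95). It is an illustration only, not the ONNX `NonMaxSuppression` operator the detector embeds. The `(x1, y1, x2, y2)` box layout is an assumption.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # assumed layout: (x1, y1, x2, y2)

def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def nms(boxes: Sequence[Box], scores: Sequence[float],
        iou_thresh: float = 0.5, conf_thresh: float = 0.95) -> List[int]:
    """Greedy NMS: return indices of kept boxes, highest score first."""
    # 1. Drop low-confidence detections.
    candidates = [i for i, s in enumerate(scores) if s >= conf_thresh]
    # 2. Visit the remaining boxes from most to least confident.
    candidates.sort(key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in candidates:
        # 3. Keep a box only if it does not overlap any already-kept box too much.
        if all(iou(boxes[i], boxes[k]) <= iou_thresh for k in keep):
            keep.append(i)
    return keep
```

Detections below the confidence threshold are discarded first. Among the rest, a box is suppressed when it overlaps a higher-scoring kept box by more than the IoU threshold. The high 0.95 confidence cut therefore does most of the filtering before any overlap test runs.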

ONNX Runtime with OpenVINO is the inference engine used to execute the models.

Qdrant is used as the vector search engine (vector database) for efficient face matching and data retrieval.
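Face matching here means finding the stored embedding closest to a query embedding. The brute-force sketch below does that over a small in-memory gallery using cosine similarity, a metric Qdrant supports (`Distance.COSINE` in qdrant-client). Two details are assumptions, not values taken from this project's collection configuration: the choice of cosine as the metric and the 0.5 acceptance threshold.

```python
import math
from typing import Dict, Optional, Sequence, Tuple

def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def best_match(query: Sequence[float], gallery: Dict[str, Sequence[float]],
               min_score: float = 0.5) -> Optional[Tuple[str, float]]:
    """Return (identity, score) of the closest gallery embedding, or None if too dissimilar."""
    if not gallery:
        return None
    # Linear scan over every stored embedding.
    name, score = max(((n, cosine(query, e)) for n, e in gallery.items()),
                      key=lambda pair: pair[1])
    # Hypothetical threshold: reject matches that are not similar enough.
    return (name, score) if score >= min_score else None
```

A linear scan costs O(n) per query. That is the cost a vector database's approximate index (HNSW, in Qdrant's case) avoids once the gallery grows large. With qdrant-client, the equivalent lookup is a `search` call with `limit=1` and a `score_threshold`.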

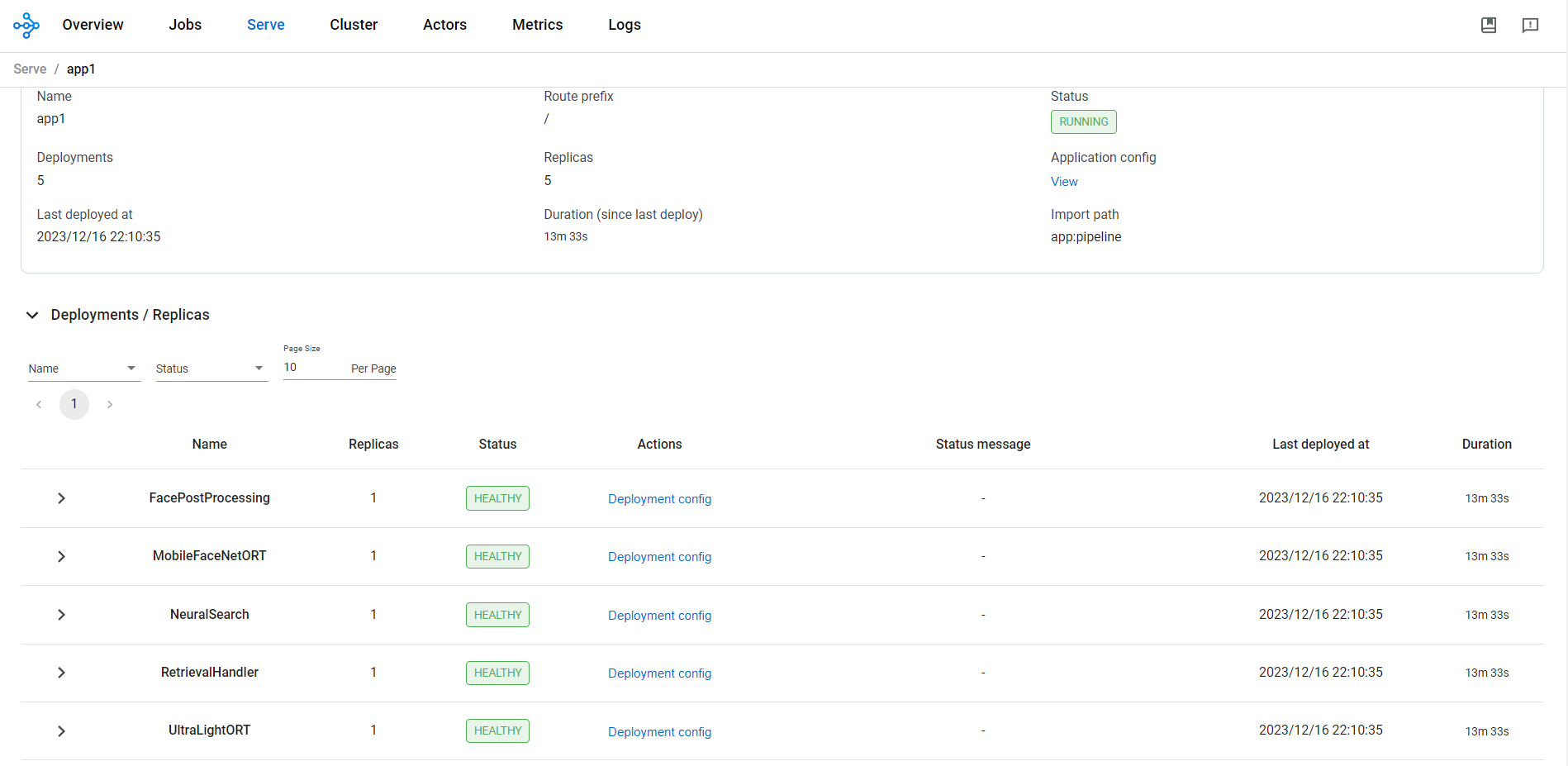

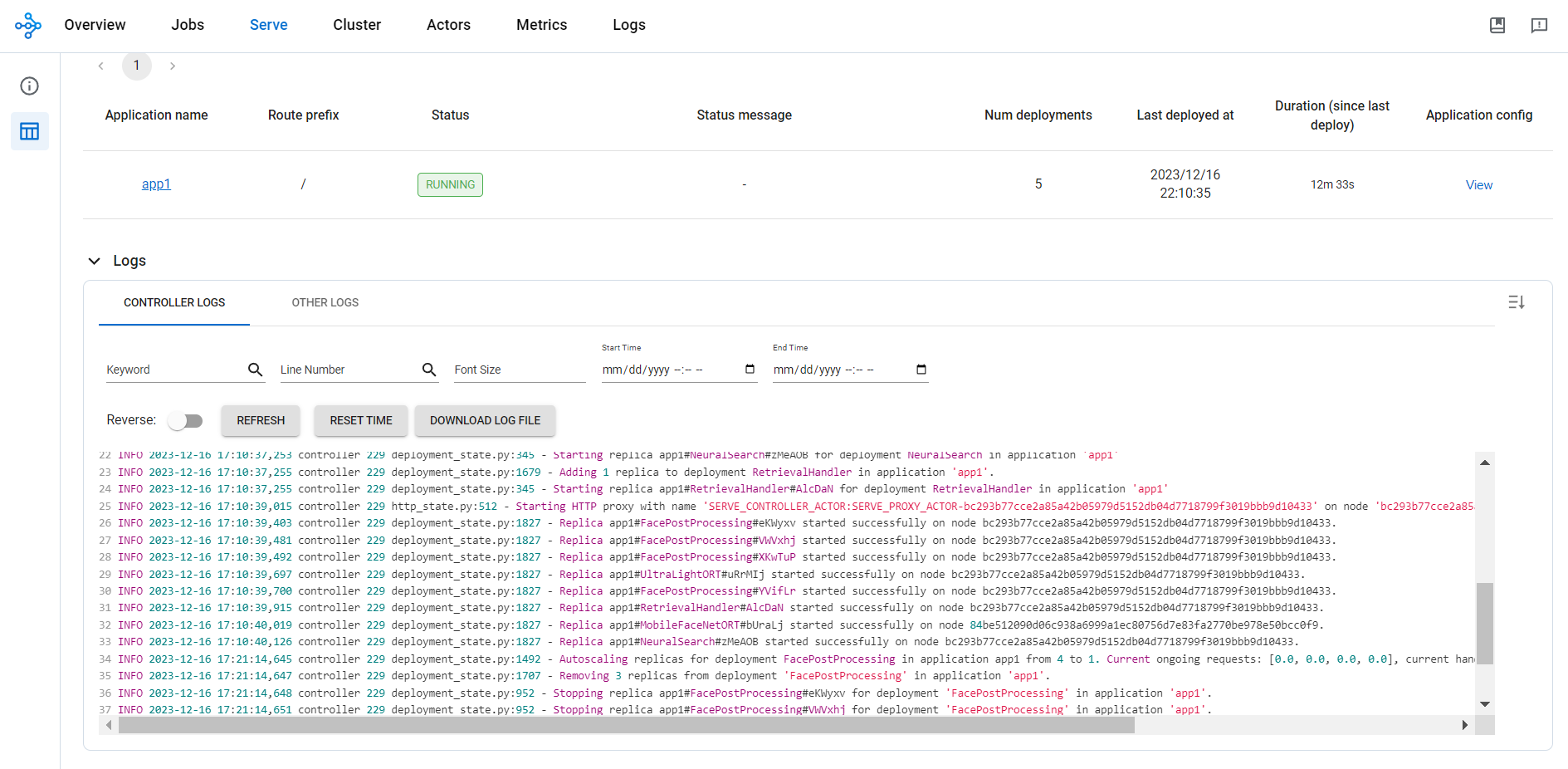

Ray is a Python library for large-scale distributed execution. Ray runs on a laptop, in the cloud, on Kubernetes, or on-premises. In this project, Ray Data ingests and processes images, and the Ray Serve model composition API joins five services into the face retrieval pipeline. FastAPI is integrated to receive HTTP requests.

The recommended way to deploy this service is on Kubernetes via KubeRay. You need Kubernetes and Helm to follow these steps.

KubeRay is a way to deploy Ray applications on Kubernetes clusters. Install it and its dependencies:

```bash
chmod +x install_kuberay_deps.sh
./install_kuberay_deps.sh
```

The KubeRay project supports integration with Prometheus and Grafana. To use them, install:

```bash
chmod +x install_prometheus_grafana.sh
./install_prometheus_grafana.sh
```

Check the installation:

```bash
kubectl get all -n prometheus-system
```

KubeRay exposes a Prometheus metrics endpoint on port 8080. Check the Ray documentation to configure the ports for Prometheus and Grafana and to integrate them with the Ray Dashboard.

See how to configure an Ingress for the Ray Dashboard.

You can install Qdrant locally via Helm or use Qdrant Cloud:

```bash
chmod +x install_qdrant_helm.sh
./install_qdrant_helm.sh
```

Qdrant's default ports are 6333 for HTTP and 6334 for gRPC.

To forward Qdrant's ports, first get the name of the Qdrant pod:

```bash
export POD_NAME=$(kubectl get pods --namespace default -l "app.kubernetes.io/name=qdrant,app.kubernetes.io/instance=qdrant" -o jsonpath="{.items[0].metadata.name}")
```

To use Qdrant via HTTP, run the following command in another terminal tab (set `POD_NAME` there too):

```bash
kubectl --namespace default port-forward $POD_NAME 6333:6333
```

To use Qdrant via gRPC, run the following command in another terminal tab (set `POD_NAME` there too):

```bash
kubectl --namespace default port-forward $POD_NAME 6334:6334
```

Via HTTP, you can view the Qdrant dashboard at `<your-ip>:6333/dashboard` (`localhost:6333/dashboard` when using the port-forward above).

Ray Data is used to consume data sources and to batch-process images efficiently with the `map_batches` API.
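`map_batches` applies a user function to column-oriented batches. A common pattern is a callable class whose constructor loads the model once per worker and whose `__call__` maps a batch to embeddings; this is an assumption, not a copy of this project's jobs. The sketch below keeps that shape but runs without Ray, with three simplifications:

- `iter_batches` is a hypothetical local stand-in for Ray's batching.
- Plain lists replace the NumPy arrays Ray would pass.
- The "embedding" is a placeholder (mean and max pixel value), not MobileFaceNet.

```python
from typing import Dict, Iterator, List

class FaceEmbedder:
    """Callable-class UDF in the shape map_batches accepts (placeholder model)."""

    def __init__(self) -> None:
        # Hypothetical: the real job would create the MobileFaceNet ONNX Runtime
        # session here, so the model is loaded once per worker, not once per batch.
        self.model = None

    def __call__(self, batch: Dict[str, list]) -> Dict[str, list]:
        # Placeholder "embedding": mean and max of each flattened image.
        embeddings = [[sum(img) / len(img), max(img)] for img in batch["image"]]
        return {"id": batch["id"], "embedding": embeddings}

def iter_batches(rows: List[dict], batch_size: int) -> Iterator[Dict[str, list]]:
    """Local stand-in for Ray's batching: group rows into column-oriented dicts."""
    for start in range(0, len(rows), batch_size):
        chunk = rows[start:start + batch_size]
        yield {key: [row[key] for row in chunk] for key in chunk[0]}
```

With Ray 2.7, you would pass the class itself (not an instance), for example `ds.map_batches(FaceEmbedder, batch_size=32, compute=ray.data.ActorPoolStrategy(size=2))`. The batch and pool sizes are illustrative; check the Ray Data documentation for your version.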

Several database connectors are implemented: MySQL, MSSQL, PostgreSQL, Databricks, and Snowflake. The jobs directory contains examples of how to develop a job, and the kubernetes directory describes some RayJobs.

The generated embeddings are stored in the vector search engine (Qdrant).
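The sketch below prepares an embedding write against Qdrant's REST upsert endpoint (`PUT /collections/{collection}/points`). It uses only the standard library. The project's own jobs may use a client library instead. The collection name `faces`, the payload fields, and the choice of deriving point IDs from image paths are hypothetical.

```python
import json
import urllib.request
import uuid
from typing import Any, Dict, Iterable, Sequence, Tuple

def point_id(key: str) -> str:
    """Derive a stable UUID from a key such as an image path.

    Qdrant point IDs must be unsigned integers or UUIDs. A deterministic ID
    means re-running a job overwrites points instead of duplicating them.
    """
    return str(uuid.uuid5(uuid.NAMESPACE_URL, key))

def build_upsert_request(base_url: str, collection: str,
                         items: Iterable[Tuple[str, Sequence[float], Dict[str, Any]]]
                         ) -> urllib.request.Request:
    """Build a Qdrant REST upsert request from (key, vector, payload) triples."""
    points = [
        {"id": point_id(key), "vector": list(vector), "payload": payload}
        for key, vector, payload in items
    ]
    return urllib.request.Request(
        f"{base_url}/collections/{collection}/points?wait=true",
        data=json.dumps({"points": points}).encode("utf-8"),
        method="PUT",
        headers={"Content-Type": "application/json"},
    )
```

To write the points, pass the request to `urllib.request.urlopen` while Qdrant's HTTP port is reachable, for example through the port-forward above.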

Note: a RayJob may not start if the requested resource limits are not available in the Kubernetes cluster. Check them and adapt them to your cluster.
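The monitoring steps below can also be scripted instead of re-running `kubectl` by hand. A small poller can read the same `status.jobStatus` field until the job ends. This sketch assumes `kubectl` is on the `PATH`. It treats Ray's terminal job states (`SUCCEEDED`, `FAILED`, `STOPPED`) as final. The `fetch` parameter is a hypothetical hook so the logic can be exercised without a cluster.

```python
import json
import subprocess
import time
from typing import Callable

TERMINAL_STATES = {"SUCCEEDED", "FAILED", "STOPPED"}

def job_status(rayjob_json: str) -> str:
    """Extract .status.jobStatus from `kubectl get rayjobs.ray.io NAME -o json` output."""
    return json.loads(rayjob_json).get("status", {}).get("jobStatus", "")

def kubectl_get_rayjob(name: str) -> str:
    """Return the RayJob custom resource as JSON text (requires kubectl)."""
    result = subprocess.run(["kubectl", "get", "rayjobs.ray.io", name, "-o", "json"],
                            check=True, capture_output=True, text=True)
    return result.stdout

def wait_for_rayjob(name: str, fetch: Callable[[str], str] = kubectl_get_rayjob,
                    interval: float = 10.0, timeout: float = 3600.0) -> str:
    """Poll until the RayJob reaches a terminal state, then return that state."""
    deadline = time.monotonic() + timeout
    while True:
        status = job_status(fetch(name))
        if status in TERMINAL_STATES:
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"RayJob {name} still {status or 'unknown'} after {timeout}s")
        time.sleep(interval)
```

For example, `wait_for_rayjob("rayjob-lfw")` blocks until the LFW job finishes and returns its final state. The status is empty until KubeRay submits the job, which is why an empty string is treated as "keep waiting".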

```bash
kubectl apply -f kubernetes/job_lfw.yaml
```

List all RayJob custom resources in the default namespace:

```bash
kubectl get rayjob
```

List all RayCluster custom resources in the default namespace:

```bash
kubectl get raycluster
```

List all Pods in the default namespace. The Pod created by the Kubernetes Job is terminated after the Kubernetes Job finishes:

```bash
kubectl get pods
```

Check the status of the RayJob. The `jobStatus` field in the RayJob custom resource is updated to `SUCCEEDED` once the job finishes:

```bash
kubectl get rayjobs.ray.io rayjob-lfw -o json | jq '.status.jobStatus'
```

Check the RayJob logs:

```bash
kubectl logs -l=job-name=rayjob-lfw
```

Delete the RayJob:

```bash
kubectl delete -f kubernetes/job_lfw.yaml
```

The online service retrieves information based on an input image. Its API routes are:

| Method | Route | Data type |
|---|---|---|
| POST | /base64 | String |
| POST | /uploadfile | Bytes |
| GET | / | - |

The API returns a JSON object with two fields: `payloads` and `boxes`.
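A Python client for the `/uploadfile` route can mirror the `curl` test of that route. The sketch below uses only the standard library: it builds the same multipart request (form field `file`, bearer token) and unpacks the `payloads` and `boxes` fields. The structure of those two fields is not documented here, so the parser returns them untouched.

```python
import json
import urllib.request
import uuid
from typing import Any, Tuple

def build_upload_request(url: str, image_bytes: bytes, filename: str,
                         token: str) -> urllib.request.Request:
    """Build a multipart/form-data POST with the image under the form field "file"."""
    boundary = uuid.uuid4().hex
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="file"; filename="{filename}"\r\n'
        "Content-Type: application/octet-stream\r\n\r\n"
    ).encode("utf-8")
    tail = f"\r\n--{boundary}--\r\n".encode("utf-8")
    return urllib.request.Request(
        url,
        data=head + image_bytes + tail,
        method="POST",
        headers={
            "Content-Type": f"multipart/form-data; boundary={boundary}",
            "Authorization": f"Bearer {token}",
        },
    )

def parse_response(raw: bytes) -> Tuple[Any, Any]:
    """Return the (payloads, boxes) fields of the service's JSON reply."""
    result = json.loads(raw)
    return result["payloads"], result["boxes"]
```

To call the service, pass the request to `urllib.request.urlopen` and feed `resp.read()` to `parse_response`. The URL would be `http://localhost:8000/uploadfile` while the Serve port is forwarded.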

CORS is configured by default, and JWT tokens are supported. You need to configure two environment variables: `AUTH_ALGORITHM` and `AUTH_SECRET_KEY`. Check the RayService manifest to configure the Qdrant parameters and more.
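The `curl` test of `/uploadfile` needs a real bearer token in place of `TOKEN`. Assuming `AUTH_ALGORITHM=HS256`, you can sign a token with `AUTH_SECRET_KEY` using only the standard library, as sketched below. Which claims the service validates (for example `exp` or `sub`) is not stated here, so the claims in the usage line are hypothetical. With PyJWT installed, `jwt.encode(claims, secret, algorithm="HS256")` does the same job.

```python
import base64
import hashlib
import hmac
import json

def _b64url(data: bytes) -> str:
    """Base64url-encode without padding, as compact JWTs require."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")

def make_hs256_token(secret: str, claims: dict) -> str:
    """Sign `claims` with HMAC-SHA256 and return a compact JWT string."""
    header = {"alg": "HS256", "typ": "JWT"}
    segments = [
        _b64url(json.dumps(header, separators=(",", ":")).encode("utf-8")),
        _b64url(json.dumps(claims, separators=(",", ":")).encode("utf-8")),
    ]
    signing_input = ".".join(segments).encode("ascii")
    signature = hmac.new(secret.encode("utf-8"), signing_input, hashlib.sha256).digest()
    return ".".join(segments + [_b64url(signature)])
```

For example, `make_hs256_token(os.environ["AUTH_SECRET_KEY"], {"sub": "tester", "exp": int(time.time()) + 3600})` yields a string to place after `Bearer`.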

kubectl apply -f kubernetes/face-recog-svc.yamlList all RayService custom resources in the default namespace

kubectl get rayserviceList all RayCluster custom resources in the default namespace

kubectl get rayclusterList all Ray Pods in the default namespace

kubectl get pods -l=ray.io/is-ray-node=yesList services in the default namespace

kubectl get servicesCheck the status of the RayService

kubectl describe rayservices rayservice-face-recogExpose Ray Serve port

kubectl port-forward svc/rayservice-face-recog-serve-svc --address 0.0.0.0 8000:8000Expose Ray Dashboard port

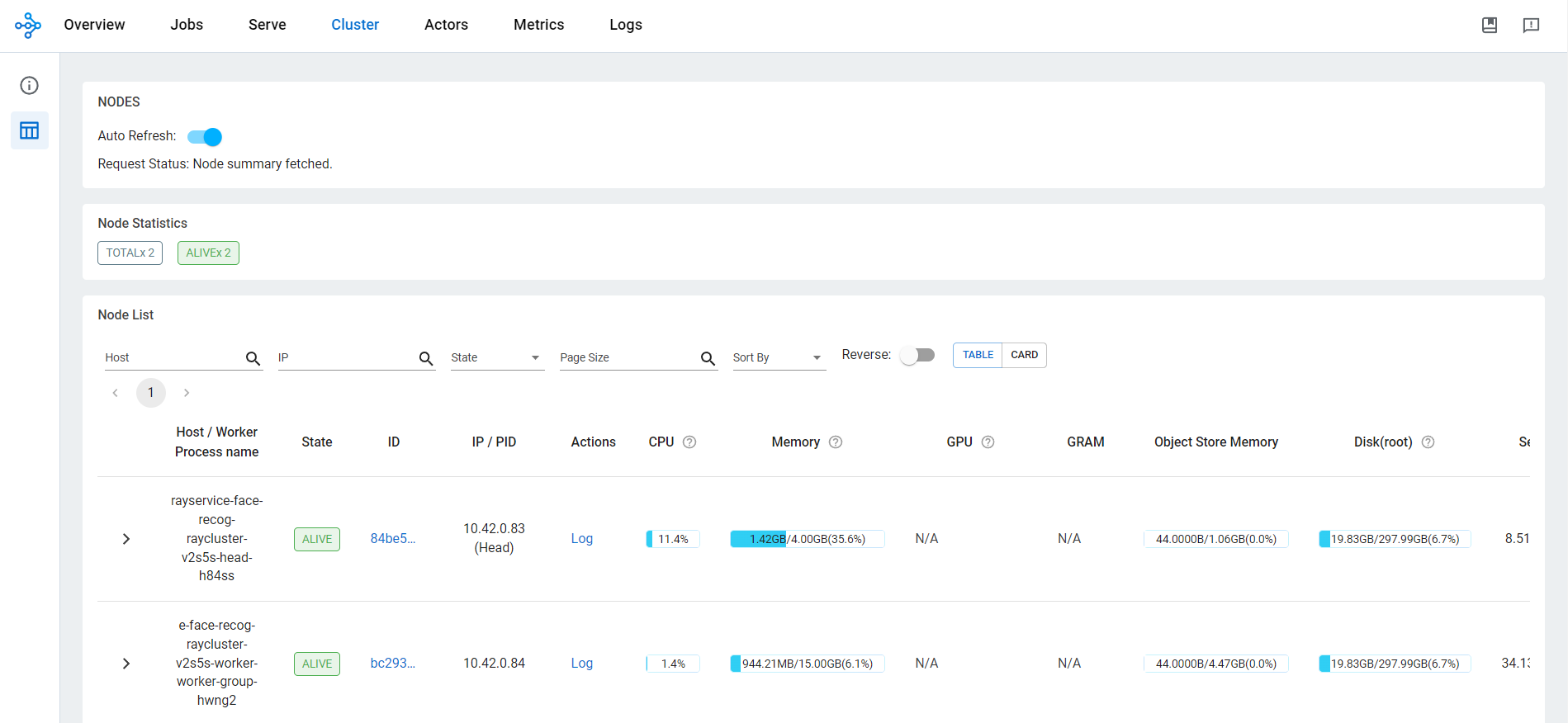

kubectl port-forward svc/rayservice-face-recog-head-svc --address 0.0.0.0 8265:8265Ray Dashboard screenshots

Test the `/` route:

```bash
curl 0.0.0.0:8000/
```

Test the `/uploadfile` route:

```bash
curl -X POST -H "Content-Type: multipart/form-data" -H "Authorization: Bearer TOKEN" -F "file=@your_image.jpg" localhost:8000/uploadfile
```

Load test with Locust:

```bash
pip install locust
DATASET_PATH="/.../images" locust -f locustfile.py \
  --headless -u NUM_CLIENTS -r CLIENTS_PER_SECOND \
  --run-time EXECUTION_TIME -H http://0.0.0.0:8000 --csv CSV_NAME
```

Delete the RayService:

```bash
kubectl delete -f kubernetes/face-recog-svc.yaml
```

Uninstall the KubeRay operator:

```bash
helm uninstall kuberay-operator
kubectl delete crd rayclusters.ray.io
kubectl delete crd rayjobs.ray.io
kubectl delete crd rayservices.ray.io
```

Uninstall Qdrant:

```bash
helm uninstall qdrant
```

You can run local tests with Ray. Check and set the necessary environment variables, then install Ray:

```bash
pip install "ray[data,serve]==2.7.1"
```

Install the necessary libraries (check the Dockerfile) and run the job:

```bash
mv jobs/job_hf.py .
python job_hf.py
```

Install the service dependencies:

```bash
pip install -r requirements-svc-local.txt
```

A Serve config file defines the Ray Serve application. Build it and run it:

```bash
serve build app:pipeline -o config.yaml
serve run config.yaml
```

You can edit the jobs or the service to adapt them to your scenario. From the project root, build the images:

```bash
docker build -t face-recog-sys:job-description-version -f dockerfiles/Dockerfile.job.your.customizes .
docker build -t face-recog-sys:svc-version -f dockerfiles/Dockerfiles.service .
```

Use the Serve config file in the Kubernetes RayService to define the application.

Many steps were heavily based on the Ray documentation.

Planned improvements:

- Add face segmentation using U2net-p
- Add support for TensorRT
- Add support for OpenTelemetry