| Our method | Baseline method |
|---|---|

| Gibson | Matterport3D |
|---|---|
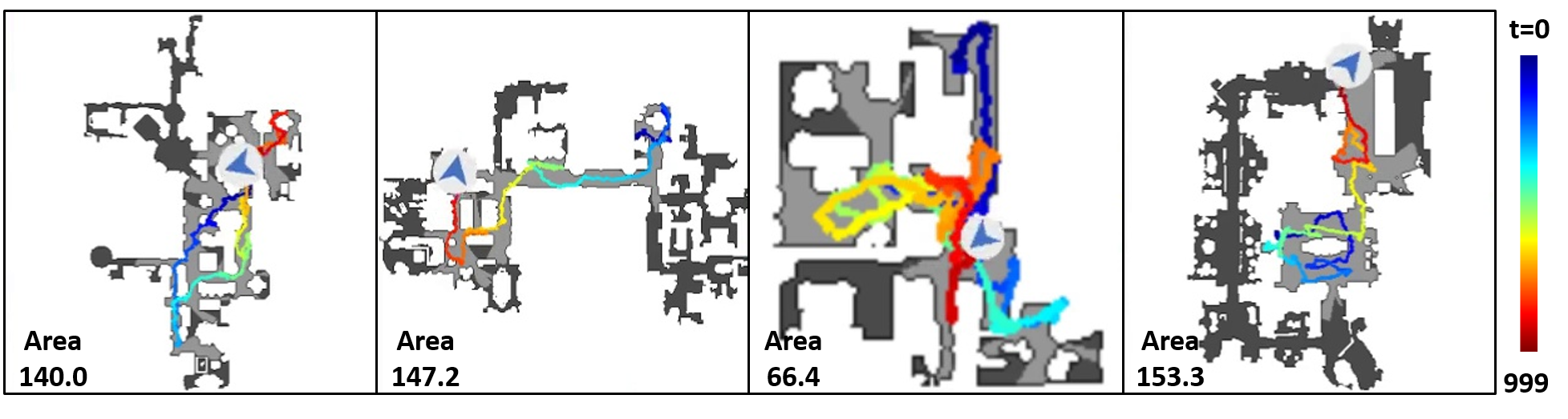

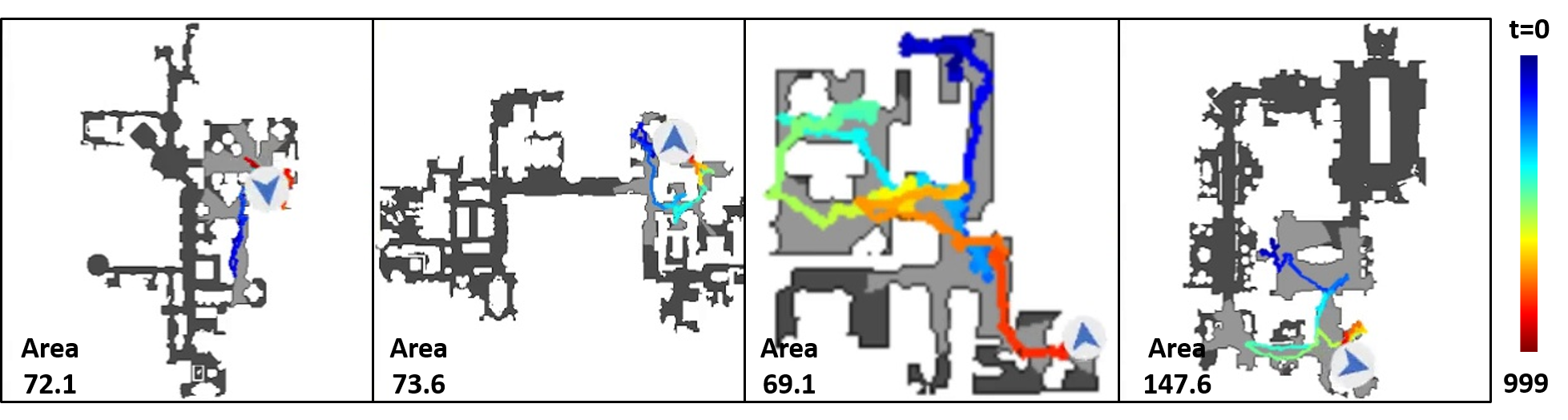

Visual exploration is a task that seeks to visit all the navigable areas of an environment as quickly as possible. The existing methods employ deep reinforcement learning (RL) as the standard tool for the task. However, they tend to be vulnerable to statistical shifts between the training and test

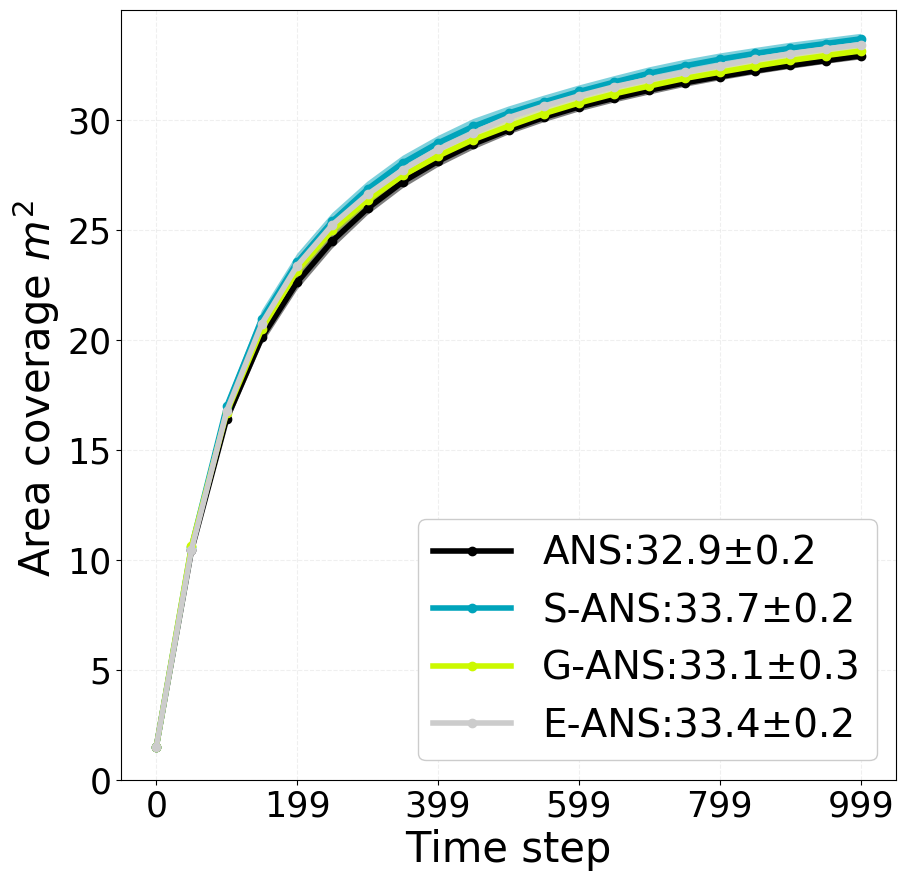

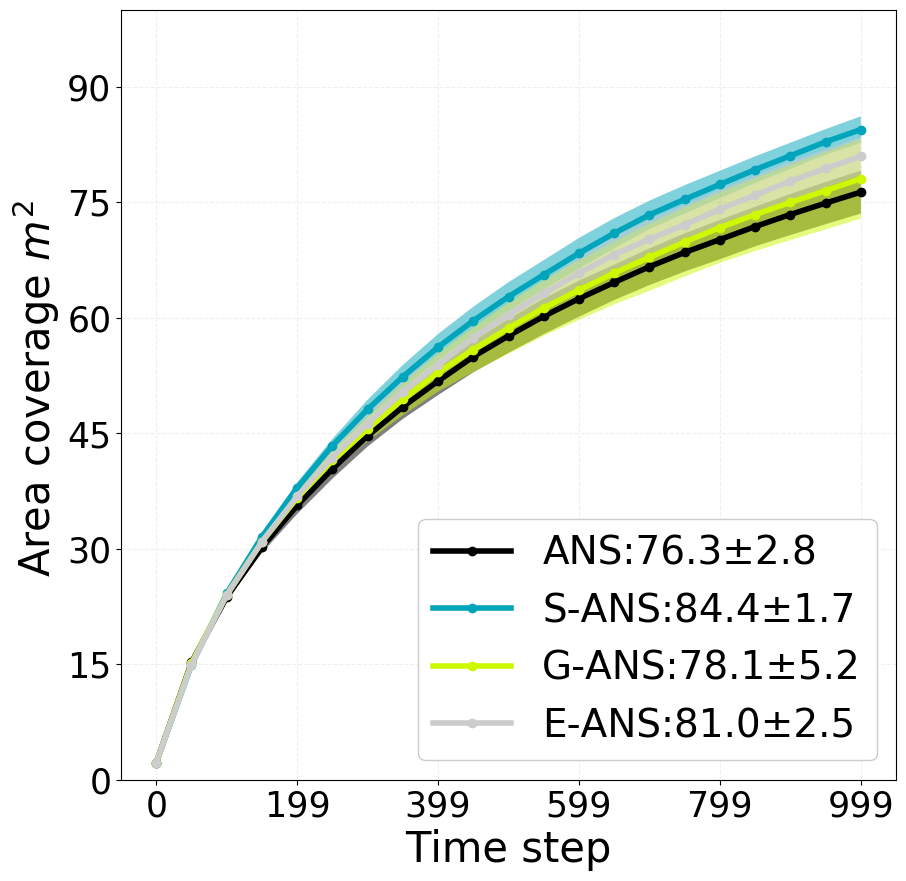

data, resulting in poor generalization over novel environments that are out-of-distribution (OOD) from the training data. In this paper, we attempt to improve the generalization ability by utilizing the inductive biases available for the task. Employing the active neural SLAM (ANS) that learns exploration policies with the advantage actor-critic (A2C) method as the base framework, we first point out that the mappings represented by the actor and the critic should satisfy specific symmetries. We then propose a network design for the actor and the critic to inherently attain these symmetries. Specifically, we use G-convolution instead of the standard convolution and insert the semi-global polar pooling (SGPP) layer, which we newly design in this study, in the last section of the critic network. Experimental results show that our method increases area coverage by

To run the scripts, please follow the steps below:

- Please download and install the implementation of Occupancy Anticipation as required.

- Replace `ans.py`, `policy.py` and `occant_exp_trainer.py` in `occant_baselines/rl` with `ans.py`, `policy.py` and `occant_exp_trainer.py` in our directory.

- Copy `./SANS/config/train/*yaml` and `./SANS/config/test/*yaml` to `configs/model_configs/ans_depth`.

- To train ANS, please run

python run.py --exp-config configs/model_configs/ans_depth/ppo_exploration_cat_allolocal_alloglobal_cart_ansnet.yaml --run-type train

- To train S-ANS, please run

python run.py --exp-config configs/model_configs/ans_depth/ppo_exploration_allolocal_alloglobal_cart_ansnetexact_shareconv_rllocal__actorBP_criticBPGPP.yaml --run-type train

- The trained model can be downloaded via link.

- To evaluate ANS on Gibson, please run

python run.py --exp-config configs/model_configs/ans_depth/ppo_exploration_myeval_novideo_cat_allolocal_alloglobal_cart_ansnet.yaml --run-type eval

- To evaluate ANS on MP3D, please run

python run.py --exp-config configs/model_configs/ans_depth/ppo_exploration_myeval_mp3d_novideo_cat_allolocal_alloglobal_cart_ansnet.yaml --run-type eval

- To evaluate S-ANS on Gibson, please run

python run.py --exp-config configs/model_configs/ans_depth/ppo_exploration_myeval_video_allolocal_alloglobal_cart_ansnetexact_shareconv_rllocal__actorBP_criticBPGPP.yaml --run-type eval

- To evaluate S-ANS on MP3D, please run

python run.py --exp-config configs/model_configs/ans_depth/ppo_exploration_myeval_mp3d_video_allolocal_alloglobal_cart_ansnetexact_shareconv_rllocal__actorBP_criticBPGPP.yaml --run-type eval
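The file replacement and config copy steps above could be scripted roughly as follows. This is only a sketch under stated assumptions: the function names `install_sans` and `print_eval_commands` and their two path arguments are hypothetical, and it assumes that `ans.py`, `policy.py` and `occant_exp_trainer.py` sit directly inside the `SANS` directory next to `config/`. The `.orig` backup of the replaced files is an added precaution and is not part of the steps above.

```shell
#!/usr/bin/env bash
# Hypothetical helpers; the names and path arguments are assumptions, not part of the repository.

# Copy the S-ANS source files and configs into an Occupancy Anticipation checkout.
#   $1: directory assumed to hold ans.py, policy.py, occant_exp_trainer.py and config/
#   $2: root of the Occupancy Anticipation checkout
install_sans() {
    local sans_root="$1"
    local occant_root="$2"
    local rl_dir="$occant_root/occant_baselines/rl"
    local cfg_dir="$occant_root/configs/model_configs/ans_depth"
    local f

    for f in ans.py policy.py occant_exp_trainer.py; do
        # Back up the original file once, so repeated runs do not overwrite the backup.
        if [ -f "$rl_dir/$f" ] && [ ! -e "$rl_dir/$f.orig" ]; then
            cp "$rl_dir/$f" "$rl_dir/$f.orig" || return 1
        fi
        cp "$sans_root/$f" "$rl_dir/$f" || return 1
    done

    # Copy the train and test configs next to the existing ANS configs.
    mkdir -p "$cfg_dir" || return 1
    cp "$sans_root"/config/train/*yaml "$sans_root"/config/test/*yaml "$cfg_dir"/ || return 1
}

# Print the four evaluation commands listed above (ANS and S-ANS on Gibson and MP3D).
print_eval_commands() {
    local cfg_dir="configs/model_configs/ans_depth"
    local ans="cat_allolocal_alloglobal_cart_ansnet"
    local sans="allolocal_alloglobal_cart_ansnetexact_shareconv_rllocal__actorBP_criticBPGPP"
    local cfg

    for cfg in \
        "ppo_exploration_myeval_novideo_$ans" \
        "ppo_exploration_myeval_mp3d_novideo_$ans" \
        "ppo_exploration_myeval_video_$sans" \
        "ppo_exploration_myeval_mp3d_video_$sans"; do
        echo "python run.py --exp-config $cfg_dir/$cfg.yaml --run-type eval"
    done
}
```

For example, after sourcing the snippet, `install_sans ./SANS /path/to/occupancy_anticipation` would perform the two copy steps. Running `print_eval_commands` from the Occupancy Anticipation root lists the evaluation commands, and piping its output to `sh` would run them one after another.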

We use parts of the code from Occupancy Anticipation and Active Neural SLAM. We thank the authors for sharing their code publicly.