Video Frame Prediction using Spatio Temporal Convolutional LSTM

tags : video prediction, frame prediction, spatio temporal, convlstms, generative networks, discriminative networks, movingmnist, deep learning, pytorch

Video frame prediction is the task of predicting future frames given a set of past frames. Humans solve this problem effortlessly, but it remains extremely challenging for a machine: occlusions, camera movement, lighting conditions, and clutter all complicate the task. Predicting the next frames requires learning an accurate representation of the input frame sequence. The task is of high interest because it serves applications such as autonomous navigation and self-driving. We present a novel Adversarial Spatio-Temporal Convolutional LSTM architecture to predict the future frames of the Moving MNIST dataset. We evaluate the model on long-term future frame prediction and on out-of-domain inputs, that is, sequences of a kind on which the model was not trained. A detailed description of the algorithms and an analysis of the results are available in the Report.

This project was built with

- Python v3.8.5

- PyTorch v1.7

- The environment used for developing this project is available at environment.yml.

Clone the repository into a local machine and enter the src directory using

git clone https://github.com/vineeths96/Video-Frame-Prediction

cd Video-Frame-Prediction/src

Create a new conda environment and install all the libraries by running the following command

conda env create -f environment.yml

The dataset used in this project (Moving MNIST) will be automatically downloaded and set up in the data directory during execution.

To train the model on m nodes with g GPUs per node, run the following on each node, where n is the rank of that node,

python -m torch.distributed.launch --nnodes=m --node_rank=n --nproc_per_node=g main.py --local_world_size=g

This trains the frame prediction model and saves it in the model directory.

During training, a folder is generated in the results directory every log-frequency steps. Each folder contains the ground-truth and predicted frames for the train and test datasets. These outputs, along with the loss and metrics, are also written to TensorBoard.
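As a concrete illustration of the launch command above, the following minimal sketch builds the command line to run on each node. The helper `launch_command` and the address and port values are hypothetical placeholders. `--master_addr` and `--master_port` are options of `torch.distributed.launch` that multi-node runs typically need, and `--local_world_size` is the flag passed to `main.py` as described above.

```python
import shlex


def launch_command(nnodes, node_rank, gpus_per_node,
                   master_addr="127.0.0.1", master_port=29500):
    """Build the launch command for one node (hypothetical helper)."""
    args = [
        "python", "-m", "torch.distributed.launch",
        f"--nnodes={nnodes}",                 # total number of nodes (m)
        f"--node_rank={node_rank}",           # rank of this node (n), 0-based
        f"--nproc_per_node={gpus_per_node}",  # one process per GPU (g)
        f"--master_addr={master_addr}",       # address of the rank-0 node
        f"--master_port={master_port}",       # free port on the rank-0 node
        "main.py",
        f"--local_world_size={gpus_per_node}",
    ]
    return shlex.join(args)


# Example: 2 nodes with 4 GPUs each. Run one printed command on each node.
# The address 10.0.0.1 is a placeholder for the rank-0 node.
for rank in range(2):
    print(launch_command(nnodes=2, node_rank=rank, gpus_per_node=4,
                         master_addr="10.0.0.1"))
```

For a single machine, the defaults suffice: `launch_command(1, 0, g)` gives the command for one node with g GPUs.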

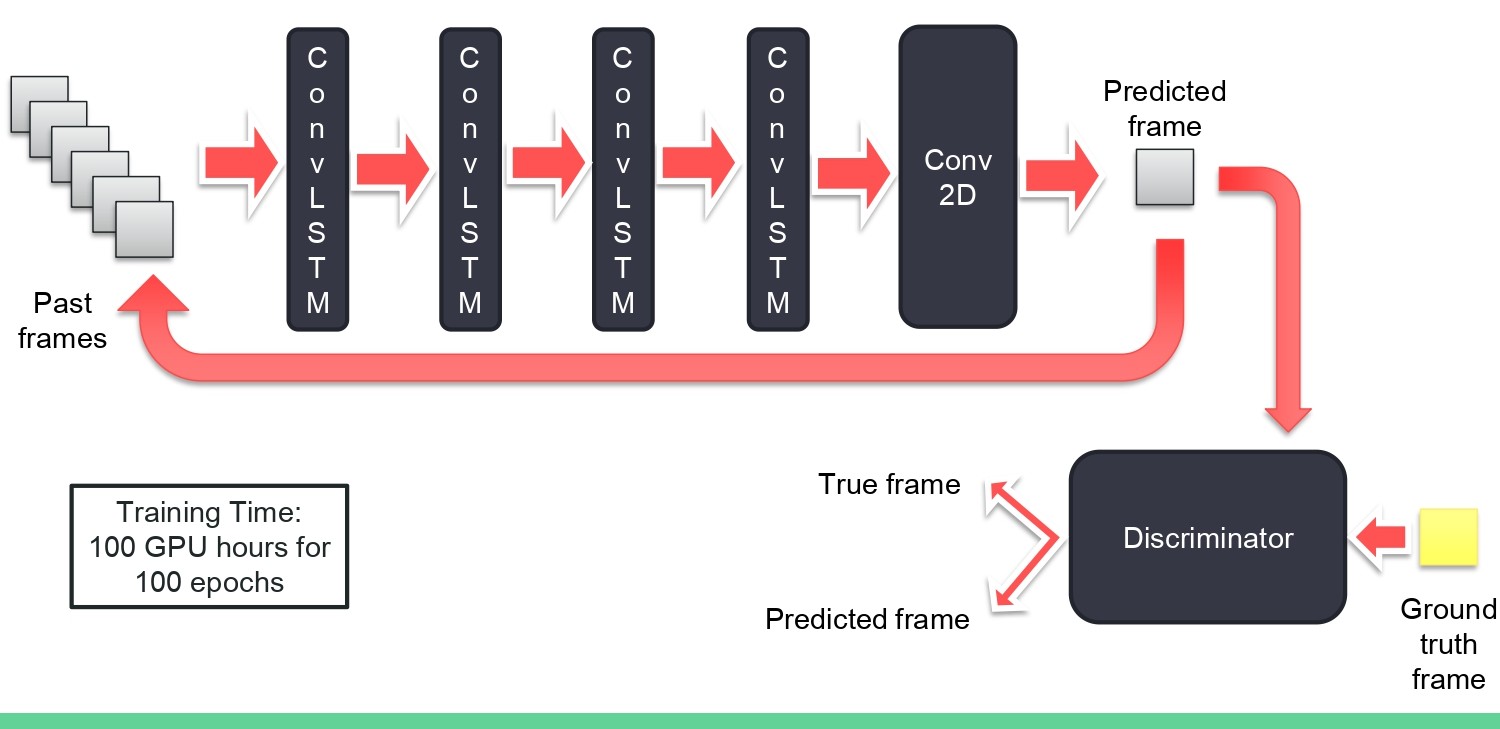

The architecture of the model is shown below. The frame predictor model takes the first ten frames as input and predicts the next ten frames. The discriminator model tries to distinguish the true future frames from the predicted future frames. For the first ten time instances, we use the ground-truth past frames as input, whereas for the future time instances, we use the previously predicted frames as input.
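The input schedule described above can be sketched in a few lines. This is a minimal illustration and not the repository's implementation: the function `rollout` and the callable `step` are hypothetical, and the sketch assumes the predictor emits, at every time instance, a prediction of the next frame together with an updated recurrent state.

```python
def rollout(step, past_frames, n_future, state=None):
    """Run a next-frame predictor over past and future time instances.

    step(frame, state) -> (predicted_next_frame, new_state) is a
    hypothetical stand-in for one forward pass of the frame predictor.
    Returns one prediction per time instance; the last n_future entries
    are the predicted future frames.
    """
    predictions = []
    n_past = len(past_frames)
    # The prediction for the first future frame comes from the last past
    # frame, so n_past + n_future - 1 steps are needed in total.
    for t in range(n_past + n_future - 1):
        if t < n_past:
            frame = past_frames[t]   # ground-truth input
        else:
            frame = predictions[-1]  # feed back the previous prediction
        prediction, state = step(frame, state)
        predictions.append(prediction)
    return predictions


# Toy usage: frames are integers and the "model" adds one to its input.
toy_step = lambda frame, state: (frame + 1, state)
outputs = rollout(toy_step, past_frames=list(range(1, 11)), n_future=10)
future = outputs[-10:]  # predictions for frames 11 to 20
print(future)
```

In a PyTorch setting, `step` would presumably wrap a ConvLSTM forward pass on a frame tensor. Because the loop only depends on the callable, the same schedule applies to longer horizons by increasing `n_future`.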

Detailed results and inferences are available in the report.

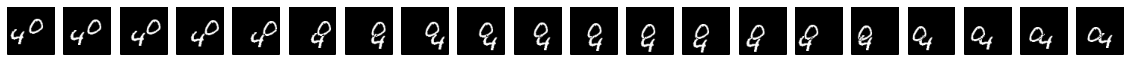

We evaluate the performance of the model on long-term predictions to reveal its generalization capabilities. We provide the first 20 frames as input and let the model predict the next 100 frames.

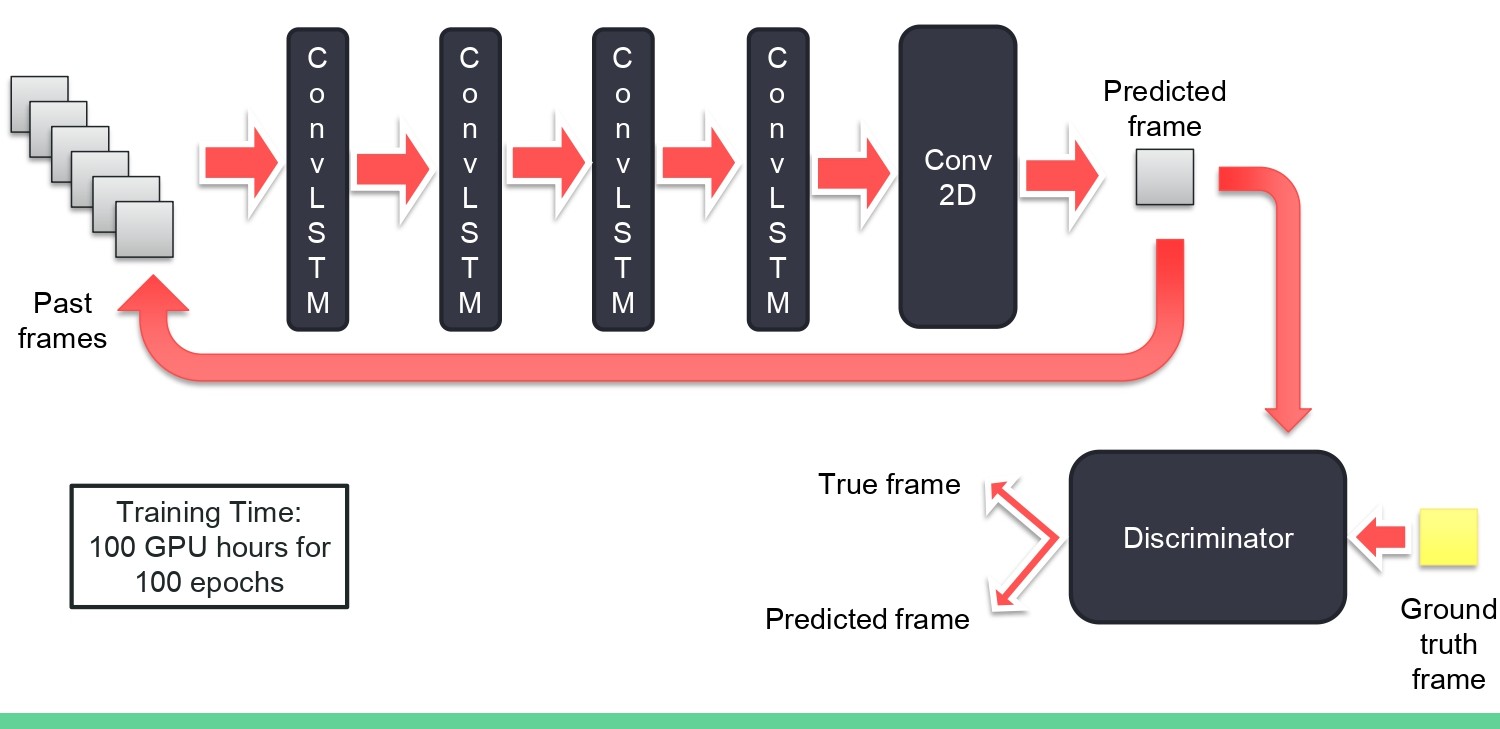

Ground truth frames (1-10):

Predicted frames (2-101):

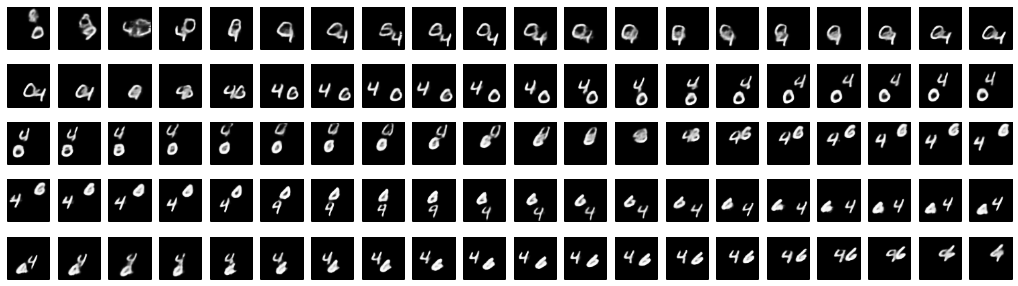

We evaluate the performance of the model on out-of-domain inputs that the model has not seen during training. We provide a frame sequence with one moving digit as input and observe the outputs from the model.

Ground truth frames (1-10):

Predicted frames (2-41):

Distributed under the MIT License. See LICENSE for more information.

Vineeth S - vs96codes@gmail.com

Project Link: https://github.com/vineeths96/Video-Frame-Prediction

Base code is taken from:

https://github.com/JaMesLiMers/Frame_Video_Prediction_Pytorch