Authors: Harish Pal Chauhan, Vishnuram Hariharan

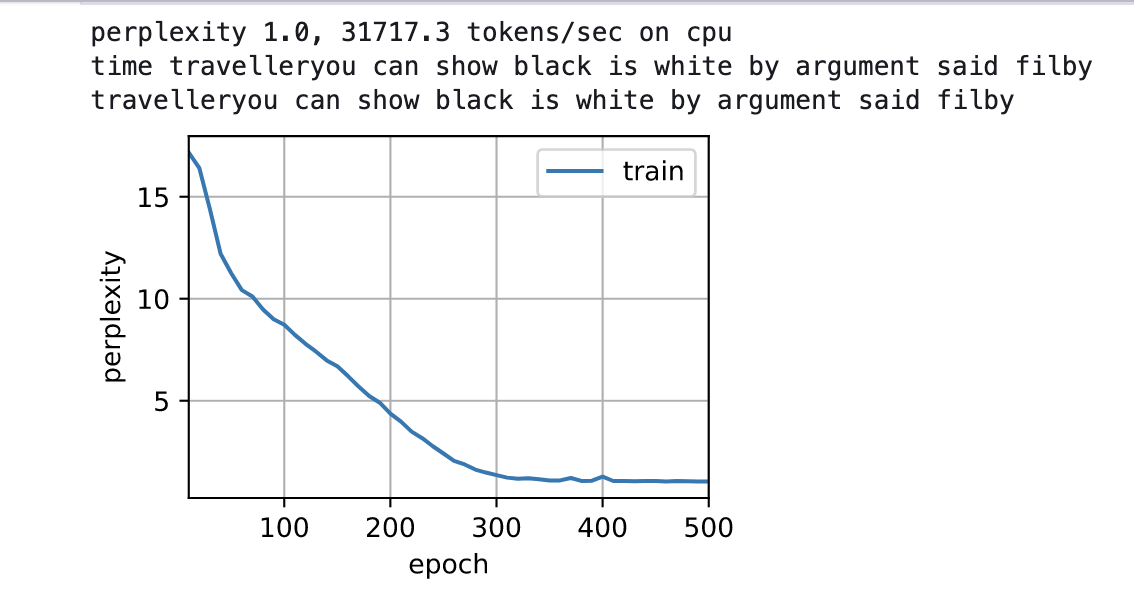

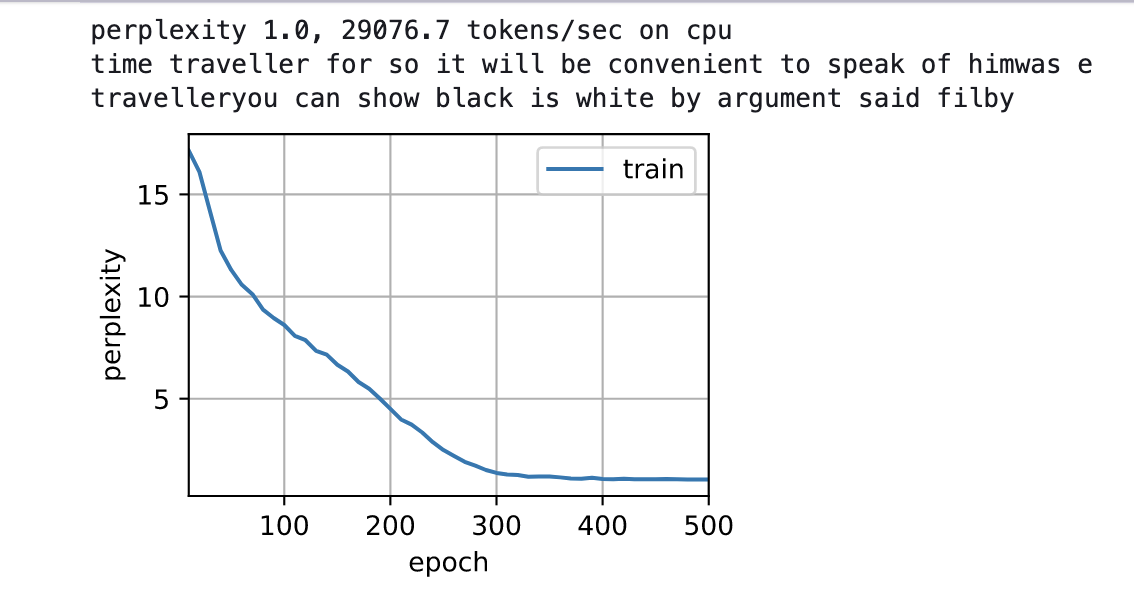

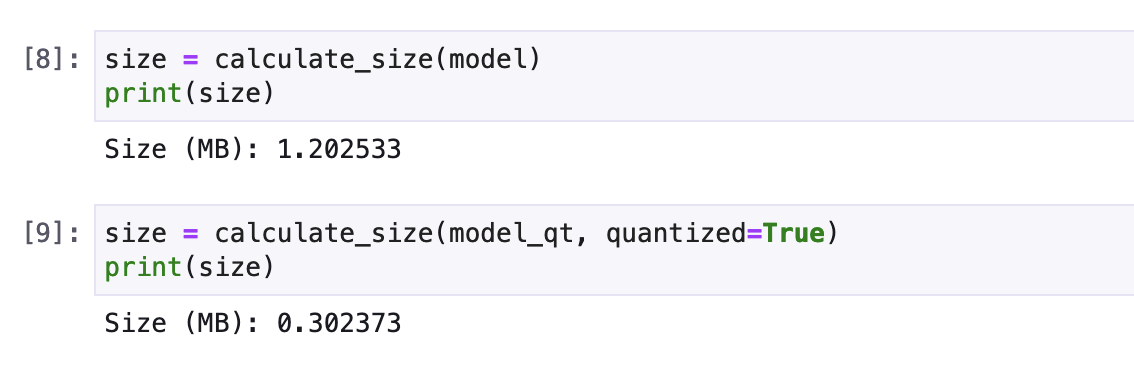

Summary: We created a quantized version of an LSTM language model using Quantization Aware Training (QAT) in Python and PyTorch. The quantized model is 25% of the size of the original model and achieves the same accuracy.
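The two technical claims in the summary can be illustrated with a small sketch. It assumes symmetric, per-tensor int8 quantization; the scheme actually used in the notebook is not stated here. During QAT, weights pass through a "fake quantization" step: they are rounded onto an 8-bit grid and mapped back to floats, so the network learns to tolerate the rounding error while still training in floating point. In a real PyTorch setup, gradients flow through the rounding via a straight-through estimator, and `torch.ao.quantization` provides fake-quantize modules for this. The helpers below (`quant_scale`, `quantize`, `dequantize`, `fake_quantize`, `size_ratio`) are hypothetical and written in plain Python rather than PyTorch so the arithmetic is easy to follow. The 25% figure follows from storing each weight in 8 bits instead of 32, ignoring the small overhead of storing the scales.

```python
# Hypothetical sketch of int8 fake quantization as used in QAT.
# Assumption: symmetric, per-tensor quantization to signed 8-bit integers.

def quant_scale(weights, num_bits=8):
    """Scale that maps the largest-magnitude weight onto the int range."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    return max_abs / qmax if max_abs > 0 else 1.0


def quantize(weights, scale, num_bits=8):
    """Round each weight to the nearest integer level and clamp to range."""
    qmin, qmax = -(2 ** (num_bits - 1)), 2 ** (num_bits - 1) - 1
    return [max(qmin, min(qmax, round(w / scale))) for w in weights]


def dequantize(q, scale):
    """Map integer levels back to floating-point values."""
    return [v * scale for v in q]


def fake_quantize(weights, num_bits=8):
    """Quantize then dequantize: the forward pass sees the int8 rounding
    error, but values stay floats so training can continue."""
    scale = quant_scale(weights, num_bits)
    return dequantize(quantize(weights, scale, num_bits), scale)


def size_ratio(float_bits=32, int_bits=8):
    """Storage ratio of quantized to float weights (scales ignored)."""
    return int_bits / float_bits


if __name__ == "__main__":
    w = [0.5, -1.27, 0.0, 0.003]
    print(fake_quantize(w))
    print(size_ratio())  # 0.25
```

Each fake-quantized weight differs from the original by at most half a quantization step (`scale / 2`), which is the error the model learns to absorb during QAT.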

Install the dependencies: `pip install -r requirements.txt`

Then run the `main.ipynb` notebook.