This repository contains the code used to produce the experimental results of the article "A Multilabel Framework for Approximate Nearest Neighbor Search" (2024) published in Journal of Machine Learning Research (JMLR).

To download the data sets and convert them to the binary format used by the experiment scripts, run:

./get_data.sh

Observe that the data sets require 37G disc space (20G if you remove the downloaded files).

Then compute the nearest neighbors (i.e., the labels) for the training, validation, and test sets:

./get_ground_truth.sh

To install the dependencies (FAISS library for HNSW and IVF-PQ, and its requirement lz4), first add the full path to the root of this repo to the DIR-variable in config.sh, and then run (requires CMake):

./get_algos.sh

The linear algebra library Eigen and the approximate nearest neighbor search library ANNOY are bundled.

We use GCC 5.4.0-2.26 to compile all C++ code; any GCC version supporting C++14 standard and OpenMP should work. We use OpenBLAS 0.2.18 as a low-level linear algebra library.

To build the index structures and evaluate them on a set of test queries for a fashion data set, run:

./comparison.sh fashion

The data sets for the main comparisons of the article are

fashion, gist-small, trevi, and stl10.

The full grids of hyperparameters for each algorithm and data set are found on the parameters folder. The hyperparameters for the baseline algorithms were taken from the leaderboard project ANN-benchmarks.

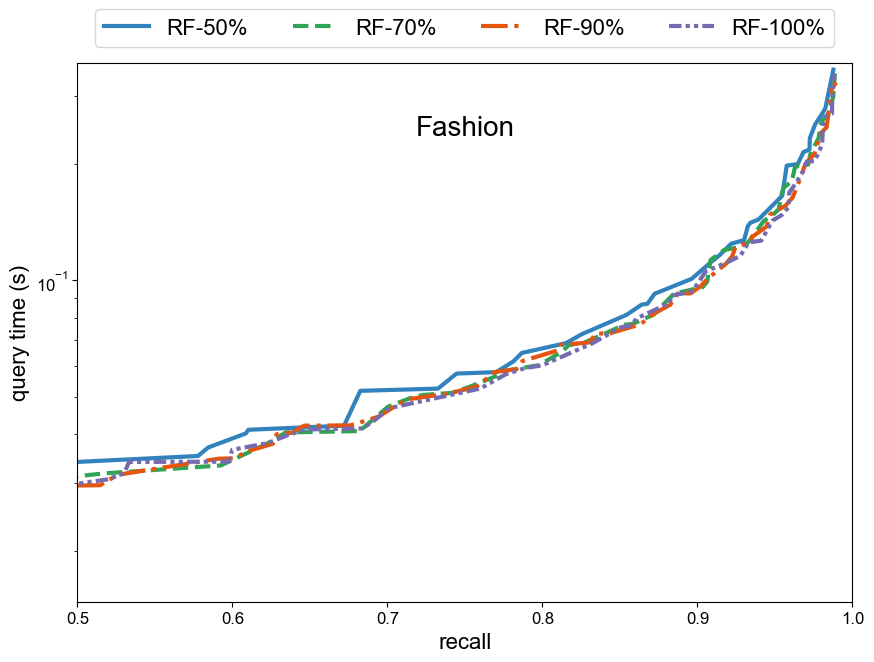

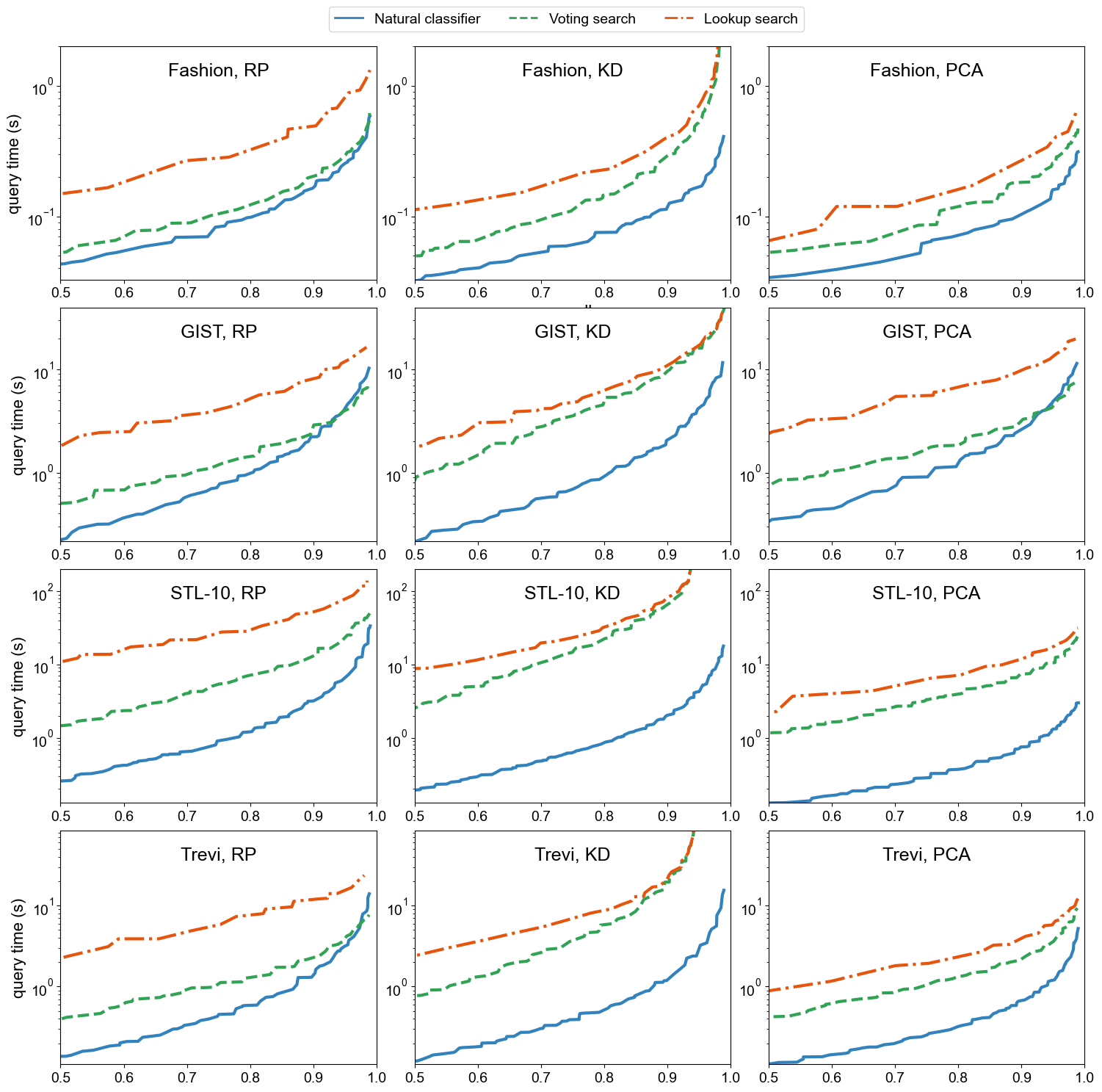

Comparison of the different candidate set selection methods for the unsupervised (RP, KD, PCA) trees:

The performance is measured by average query time for average recall level. Further down is better (faster queries for the given recall level).

The original results used to produce the figures and tables of the article are saved to the folder results.

To generate the above figure (Figure 5 of the main article), run:

python plotting/plot_rf_all-even.py results

To generate the figure where precision is plotted on the y-axis instead of the actual query time (Figure 5 of the main article), run:

python plotting/plot_precision.py results

To generate the table (Table 2 of the main article) of the above results at the recall levels 0.8, 0.9, and 0.95 in Latex, run:

python plotting/make_table.py results

The compared algorithms were ANNOY, HNSW and IVF-PQ.

To generate Figure 6 of Appendix, run:

python plotting/plot_ann2.py results

The comparison scripts for each data set take from couple of hours to couple of days. This is why we recommend running them on a server. All the experiments require less than 32G memory. You can get (conservative) upper bounds of the running times and memory consumption from -t and --mem-parameters of the slurm scripts. We ran the experiments on regular nodes with 28 cores, 2 threads and 256 GB RAM. The combined computation time for the experiments was few weeks (on a single CPU node).

We include example scripts to run the experiments on a cluster with the slurm scheduling system. To download the data sets and convert them to to the binary format used by the experiment scripts, run:

sbatch get_data.job

To compute the nearest neighbors for the training, validation, and test sets:

sbatch get_ground_truth.job

To build index structures and evaluate them on a set of test queries on (for instance) a fashion data set, run:

sbatch fashion.job