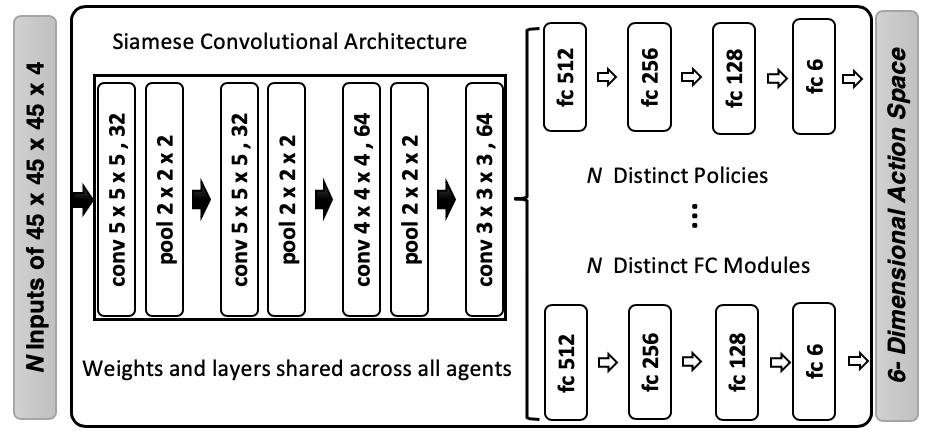

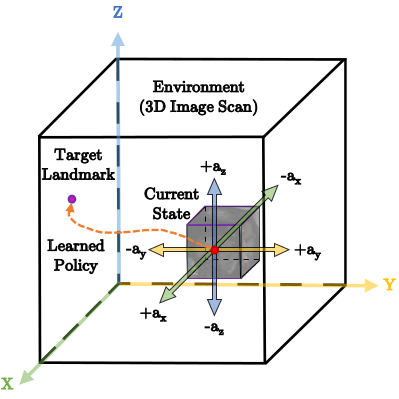

Automatic detection of anatomical landmarks is an important step for a wide range of applications in medical image analysis. The locations of anatomical landmarks are interdependent and non-random in human anatomy, so locating one can help locate the others. In this project, we formulate landmark detection as a concurrent partially observable Markov decision process (POMDP) in which agents navigate a medical image environment towards their target landmarks. We create a collaborative Deep Q-Network (DQN) based architecture in which the convolutional layers are shared among the agents, thus implicitly sharing knowledge between them. The code also supports both fixed- and multi-scale search strategies, using hierarchical action steps in a coarse-to-fine manner.
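To make the coarse-to-fine search more concrete, here is a toy sketch in plain Python. It is not the project's implementation: the learned DQN is replaced by a hypothetical `oracle_policy` that peeks at the true landmark (a real agent only sees image patches), the agents move one after another rather than concurrently with shared convolutional layers, and the six-action space, the step sizes `(9, 3, 1)` and the rule "switch to a finer scale when the agent revisits a position" are assumptions chosen for illustration.

```python
from typing import List, Sequence, Tuple

Point = Tuple[int, int, int]

# Six discrete actions: one step forward or backward along x, y or z.
ACTIONS: List[Point] = [
    (1, 0, 0), (-1, 0, 0),
    (0, 1, 0), (0, -1, 0),
    (0, 0, 1), (0, 0, -1),
]


def move(pos: Point, action: Point, step: int) -> Point:
    """Apply an action scaled by the current step size (in voxels)."""
    return (pos[0] + step * action[0],
            pos[1] + step * action[1],
            pos[2] + step * action[2])


def distance(a: Point, b: Point) -> float:
    """Euclidean distance between two voxel coordinates."""
    return sum((p - q) ** 2 for p, q in zip(a, b)) ** 0.5


def oracle_policy(pos: Point, target: Point, step: int) -> Point:
    """Hypothetical stand-in for the learned Q-network: it peeks at the
    target and picks the action that lands closest to it."""
    return min(ACTIONS, key=lambda a: distance(move(pos, a, step), target))


def coarse_to_fine_search(starts: Sequence[Point], targets: Sequence[Point],
                          steps: Sequence[int] = (9, 3, 1),
                          max_moves: int = 200) -> List[Point]:
    """Move one agent per landmark, shrinking the step size whenever an
    agent starts to oscillate (revisits a position) at the current scale."""
    positions = list(starts)
    for step in steps:  # coarse -> fine
        for i, target in enumerate(targets):  # one agent per landmark
            visited = set()
            for _ in range(max_moves):
                if positions[i] in visited:  # oscillating: stop at this scale
                    break
                visited.add(positions[i])
                action = oracle_policy(positions[i], target, step)
                positions[i] = move(positions[i], action, step)
    return positions
```

Passing `steps=(1,)` turns the same function into a fixed-scale search. With the coarse levels, each agent covers most of the distance in large jumps and spends only its last few moves at the finest step; in both modes it ends on or next to its landmark, because this stopping rule can halt one move past the target.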

- The code is part of the Tensorpack-medical project.

Here are a few examples of the learned agents detecting landmarks on unseen data:

- Detecting the apex point and the center of the mitral valve in short-axis cardiac MRI
- Detecting the anterior commissure (AC) and posterior commissure (PC) points in adult brain MRI
- Detecting the left and right cerebellum points in fetal head ultrasound

Command-line options of `DQN.py`:

    usage: DQN.py [-h] [--gpu GPU] [--load LOAD] [--task {play,eval,train}]
                  [--files FILES [FILES ...]] [--saveGif] [--saveVideo]
                  [--logDir LOGDIR] [--name NAME] [--agents AGENTS]

    optional arguments:
      -h, --help            show this help message and exit
      --gpu GPU             comma separated list of GPU(s) to use.
      --load LOAD           load model
      --task {play,eval,train}
                            task to perform. Must load a pretrained model if task
                            is "play" or "eval"
      --files FILES [FILES ...]
                            Filepath to the text file that contains list of
                            images. Each line of this file is a full path to an
                            image scan. For (task == train or eval) there should
                            be two input files ['images', 'landmarks']
      --saveGif             save gif image of the game
      --saveVideo           save video of the game
      --logDir LOGDIR       store logs in this directory during training
      --name NAME           name of current experiment for logs
      --agents AGENTS       number of agents to be trained simultaneously
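The rules stated in the help text (a pretrained model must be loaded for `play` and `eval`; `train` and `eval` need both an image list and a landmark list) can be expressed with Python's standard `argparse`. The sketch below is a hypothetical reconstruction from the help output, not the actual `DQN.py` source: `check_args` and `parse` are illustrative helpers, no default values are assumed, and treating `--agents` as an integer is an assumption.

```python
import argparse
from typing import List, Optional


def build_parser() -> argparse.ArgumentParser:
    """Rebuild the documented command-line interface with argparse."""
    parser = argparse.ArgumentParser(prog="DQN.py")
    parser.add_argument("--gpu", help="comma separated list of GPU(s) to use.")
    parser.add_argument("--load", help="load model")
    parser.add_argument("--task", choices=["play", "eval", "train"],
                        help="task to perform")
    parser.add_argument("--files", nargs="+",
                        help="text file(s) listing image (and landmark) paths")
    parser.add_argument("--saveGif", action="store_true",
                        help="save gif image of the game")
    parser.add_argument("--saveVideo", action="store_true",
                        help="save video of the game")
    parser.add_argument("--logDir", help="store logs in this directory during training")
    parser.add_argument("--name", help="name of current experiment for logs")
    parser.add_argument("--agents", type=int,  # assumption: an integer count
                        help="number of agents to be trained simultaneously")
    return parser


def check_args(args: argparse.Namespace) -> None:
    """Hypothetical helper enforcing the rules stated in the help text."""
    if args.task in ("play", "eval") and not args.load:
        raise ValueError("--load is required when --task is play or eval")
    if args.task in ("train", "eval") and (not args.files or len(args.files) != 2):
        raise ValueError("train/eval need two --files: an image list and a landmark list")


def parse(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Parse the command line, then validate the task-specific rules."""
    args = build_parser().parse_args(argv)
    check_args(args)
    return args
```

For example, `parse(["--task", "play", "--files", "images.txt"])` raises a `ValueError` because no model was passed with `--load`.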

Train:

    python DQN.py --task train --gpu 0 --files './data/filenames/image_files.txt' './data/filenames/landmark_files.txt'

Evaluate (requires a trained model plus both the image list and the landmark list):

    python DQN.py --task eval --gpu 0 --load data/models/DQN_multiscale_brain_mri_point_pc_ROI_45_45_45/model-600000 --files './data/filenames/image_files.txt' './data/filenames/landmark_files.txt'

Play (run a trained model on a list of images, without a landmark list):

    python DQN.py --task play --gpu 0 --load data/models/DQN_multiscale_brain_mri_point_pc_ROI_45_45_45/model-600000 --files './data/filenames/image_files.txt'
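Each `--files` argument points to a plain text file with one full path per line. Here is a minimal sketch for writing and pairing such lists; the helper names are hypothetical, and it assumes that line *i* of the landmark list belongs to the image on line *i* of the image list. The landmark file format itself is not described here, so the sketch only handles paths.

```python
from pathlib import Path
from typing import Iterable, List, Tuple


def write_file_list(paths: Iterable[str], list_path: str) -> None:
    """Hypothetical helper: write one absolute path per line."""
    out = Path(list_path)
    out.parent.mkdir(parents=True, exist_ok=True)
    out.write_text("".join(str(Path(p).resolve()) + "\n" for p in paths))


def read_file_list(list_path: str) -> List[str]:
    """Return the non-empty lines of a list file (one scan path per line)."""
    lines = Path(list_path).read_text().splitlines()
    return [line.strip() for line in lines if line.strip()]


def pair_images_with_landmarks(image_list: str,
                               landmark_list: str) -> List[Tuple[str, str]]:
    """Pair line i of the image list with line i of the landmark list
    (an assumed ordering convention)."""
    images = read_file_list(image_list)
    landmarks = read_file_list(landmark_list)
    if len(images) != len(landmarks):
        raise ValueError(f"{len(images)} images but {len(landmarks)} landmark files")
    return list(zip(images, landmarks))
```

For instance, `write_file_list(sorted(glob.glob('/data/scans/*.nii.gz')), './data/filenames/image_files.txt')` would build an image list; the `/data/scans` directory and the `.nii.gz` pattern are placeholders.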

To cite this work, please use the BibTeX entry below (paper: https://arxiv.org/pdf/1907.00318.pdf).

    @article{Vlontzos2019,
      author = {Vlontzos, Athanasios and Alansary, Amir and Kamnitsas, Konstantinos and Rueckert, Daniel and Kainz, Bernhard},
      title = {Multiple Landmark Detection using Multi-Agent Reinforcement Learning},
      journal = {MICCAI},
      year = {2019}
    }