Official implementation and pre-trained models for the paper MGC: "Multi-Grained Contrast for Data-Efficient Unsupervised Representation Learning".

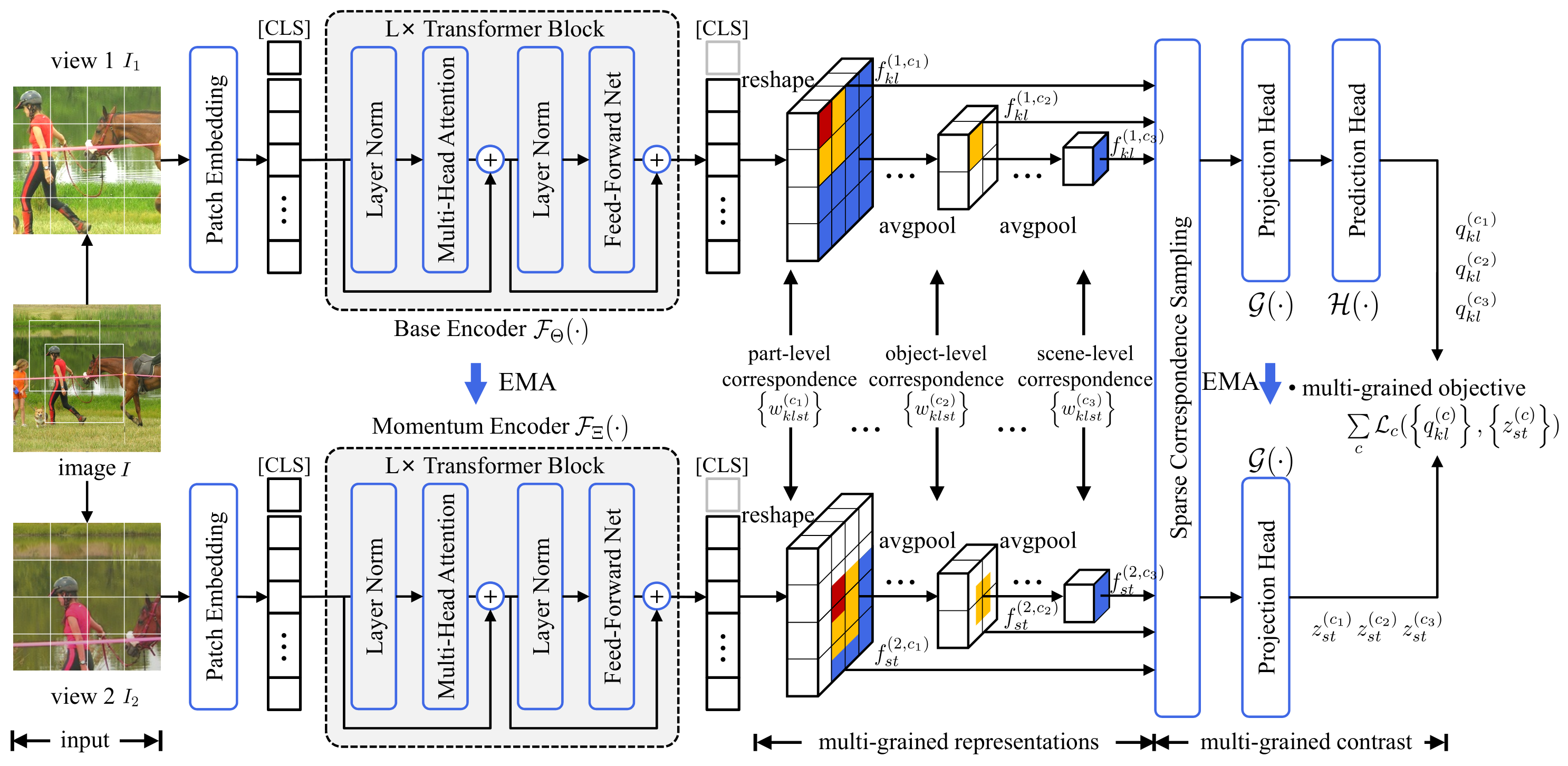

MGC is a novel multi-grained contrast method for unsupervised representation learning. MGC constructs delicate multi-grained correspondences between positive views and then conducts multi-grained contrast over these correspondences to learn more general unsupervised representations. Without pretraining on a large-scale dataset, MGC significantly outperforms existing state-of-the-art methods on a wide range of downstream tasks, including object detection, instance segmentation, scene parsing, semantic segmentation and keypoint detection.
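To make "contrast at several granularities" concrete, here is a minimal, generic sketch: a standard InfoNCE loss is computed separately at each granularity level and the results are averaged. This is not the MGC loss from this repository. How correspondences are built, the temperature `tau=0.2`, the uniform averaging and the helper names (`info_nce`, `multi_grained_loss`) are all illustrative assumptions.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def _normalize(v: Vector) -> List[float]:
    # L2-normalize so that dot products become cosine similarities.
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def info_nce(queries: Sequence[Vector], keys: Sequence[Vector], tau: float = 0.2) -> float:
    """InfoNCE where queries[i] and keys[i] form a positive pair and every
    other key in the batch acts as a negative for queries[i]."""
    q = [_normalize(v) for v in queries]
    k = [_normalize(v) for v in keys]
    total = 0.0
    for i, qi in enumerate(q):
        logits = [sum(a * b for a, b in zip(qi, kj)) / tau for kj in k]
        m = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_denom = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_denom - logits[i]  # negative log-softmax of the positive
    return total / len(q)


def multi_grained_loss(view_a: Sequence[Sequence[Vector]],
                       view_b: Sequence[Sequence[Vector]],
                       tau: float = 0.2) -> float:
    """Average InfoNCE over granularity levels (hypothetical helper).

    view_a[g][i] and view_b[g][i] are ASSUMED to be corresponding features of
    the two views at granularity level g (e.g. image, region, patch level).
    """
    losses = [info_nce(a, b, tau) for a, b in zip(view_a, view_b)]
    return sum(losses) / len(losses)


if __name__ == "__main__":
    # One granularity level with two samples of 2-D features per view.
    level = [[1.0, 0.0], [0.0, 1.0]]
    print(multi_grained_loss([level], [level]))
```

Matching features yield a loss near zero, while swapping the positives across samples drives it up, which is the signal a contrastive objective optimizes at every level.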

```shell
conda create -n mgc python=3.8
conda activate mgc
pip install -r requirements.txt
```

MGC is pretrained separately on the COCO 2017, VOC and ADE20K datasets. The data root paths are set to `./dataset/coco2017`, `./dataset/voc` and `./dataset/ade20k`, respectively.
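Before launching pre-training, it can help to confirm that the three data roots exist. The following is a small sketch; the helper name `missing_dataset_roots` is hypothetical, and it only checks that the directories are present, not their contents.

```python
from pathlib import Path
from typing import Dict, List, Union

# Data roots as listed in this README.
DATA_ROOTS: Dict[str, str] = {
    "coco2017": "./dataset/coco2017",
    "voc": "./dataset/voc",
    "ade20k": "./dataset/ade20k",
}


def missing_dataset_roots(base: Union[str, Path] = ".") -> List[str]:
    """Return the names of datasets whose root directory does not exist under `base`."""
    base = Path(base)
    return [name for name, root in DATA_ROOTS.items() if not (base / root).is_dir()]


if __name__ == "__main__":
    missing = missing_dataset_roots()
    print("Missing dataset roots: " + ", ".join(missing) if missing else "All dataset roots found.")
```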

To start MGC pre-training, run the following command, which pre-trains on COCO 2017 for 800 epochs:

```shell
python main_pretrain.py --arch='vit-small' --dataset='coco2017' --data-root='./dataset/coco2017' --nepoch=800
```

All models are trained with ViT-S/16 for 800 epochs. For the detection, segmentation and keypoint detection downstream tasks, please see `evaluation/detection`, `evaluation/segmentation` and `evaluation/pose`.
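Only the COCO command is shown, although checkpoints exist for all three datasets. The sketch below builds the analogous command for each dataset. It assumes the data root follows the `./dataset/<dataset>` layout above and that `main_pretrain.py` accepts `voc` and `ade20k` as `--dataset` values; only `coco2017` is confirmed here, so check the script's argument parser first. `pretrain_command` is a hypothetical helper.

```python
import shlex
from typing import List


def pretrain_command(dataset: str, arch: str = "vit-small", nepoch: int = 800) -> List[str]:
    """Build the argv for one pre-training run, mirroring the COCO command above.

    ASSUMPTION: the data root is ./dataset/<dataset> and `dataset` is a value
    that main_pretrain.py accepts (only "coco2017" is confirmed).
    """
    return [
        "python", "main_pretrain.py",
        f"--arch={arch}",
        f"--dataset={dataset}",
        f"--data-root=./dataset/{dataset}",
        f"--nepoch={nepoch}",
    ]


if __name__ == "__main__":
    # "voc" and "ade20k" are ASSUMED identifiers, chosen to match the data roots.
    for name in ("coco2017", "voc", "ade20k"):
        # shlex.join (Python 3.8+) quotes arguments only where a POSIX shell needs it.
        print(shlex.join(pretrain_command(name)))
```

The single quotes in the original command (`--arch='vit-small'`) are removed by the shell, so the unquoted arguments produced here are equivalent.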

Note:

- If you don't have a Microsoft Office account, you can download the trained model weights from this link.
- If you have a Microsoft Office account, you can download the trained model weights from the links in the following table.

| pretrained | checkpoint |
|---|---|
| COCO 2017 | download |
| VOC | download |
| ADE20K | download |

Our method is evaluated on the following downstream tasks: object detection, instance segmentation, semantic segmentation and keypoint detection.
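All fine-tuning commands in this README share one pattern and differ only in the toolbox and the config. The sketch below collects the configs listed here and builds the matching `tools/dist_train.sh` argument list. The task keys (such as `coco-det`) and the helper `finetune_command` are hypothetical names for illustration, and the command still has to be run from the root of the corresponding toolbox checkout.

```python
import shlex
from typing import Dict, List, Tuple

# (toolbox, config) pairs taken from this README; the task keys are hypothetical.
FINETUNE: Dict[str, Tuple[str, str]] = {
    "coco-det": ("mmdetection", "configs/mgc/mask_rcnn_vit_small_12_p16_1x_coco.py"),
    "cityscapes-det": ("mmdetection", "configs/mgc/mask_rcnn_vit_small_12_p16_1x_cityscape.py"),
    "ade20k-seg": ("mmsegmentation", "configs/mgc/vit_small_512_160k_ade20k.py"),
    "voc-seg": ("mmsegmentation", "configs/mgc/mask_rcnn_vit_small_12_p16_1x_voc.py"),
    "coco-pose": ("mmpose", "configs/mgc/td-hm_ViTPose-small_8xb64-210e_coco-256x192.py"),
}


def finetune_command(task: str, num_gpus: int, work_dir: str,
                     pretrained: str, seed: int = 0) -> List[str]:
    """Build the dist_train.sh argv used by the fine-tuning steps of this README."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    _toolbox, config = FINETUNE[task]
    return [
        "tools/dist_train.sh", config, str(num_gpus),
        "--seed", str(seed),
        "--work-dir", work_dir,
        "--options", f"model.pretrained={pretrained}",
    ]


if __name__ == "__main__":
    toolbox, _ = FINETUNE["coco-det"]
    print(f"# run inside the {toolbox} checkout")
    print(shlex.join(finetune_command("coco-det", 8, "/path/to/saving_dir", "/path/to/model_dir")))
```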

step 1. Install mmdetection

```shell
git clone https://github.com/open-mmlab/mmdetection.git
cd mmdetection
git checkout v2.26.0
```

step 2. Fine-tune on the COCO dataset

```shell
tools/dist_train.sh configs/mgc/mask_rcnn_vit_small_12_p16_1x_coco.py [number of gpu] --seed 0 --work-dir /path/to/saving_dir --options model.pretrained=/path/to/model_dir
```

step 3. Fine-tune on the Cityscapes dataset

```shell
tools/dist_train.sh configs/mgc/mask_rcnn_vit_small_12_p16_1x_cityscape.py [number of gpu] --seed 0 --work-dir /path/to/saving_dir --options model.pretrained=/path/to/model_dir
```

Model weights finetuned on COCO 2017 and Cityscapes:

| pretrained | finetune | arch | bbox mAP | mask mAP | checkpoint |
|---|---|---|---|---|---|
| COCO 2017 | COCO 2017 | ViT-S/16 + Mask R-CNN | 42.0 | 38.0 | download |
| COCO 2017 | Cityscapes | ViT-S/16 + Mask R-CNN | 33.2 | 29.4 | download |

step 1. Install mmsegmentation

```shell
git clone https://github.com/open-mmlab/mmsegmentation.git
cd mmsegmentation
git checkout v0.30.0
```

step 2. Fine-tune on the ADE20K dataset

```shell
tools/dist_train.sh configs/mgc/vit_small_512_160k_ade20k.py [number of gpu] --seed 0 --work-dir /path/to/saving_dir --options model.pretrained=/path/to/model_dir
```

step 3. Fine-tune on the VOC07+12 dataset

```shell
tools/dist_train.sh configs/mgc/mask_rcnn_vit_small_12_p16_1x_voc.py [number of gpu] --seed 0 --work-dir /path/to/saving_dir --options model.pretrained=/path/to/model_dir
```

Model weights finetuned on ADE20K and VOC:

| pretrained | finetune | arch | iterations | mIoU | checkpoint |
|---|---|---|---|---|---|
| COCO 2017 | ADE20K | ViT-S/16 + Semantic FPN | 40k | 37.7 | download |
| ADE20K | ADE20K | ViT-S/16 + Semantic FPN | 40k | 31.2 | download |
| COCO 2017 | VOC | ViT-S/16 + Semantic FPN | 40k | 64.5 | download |
| VOC | VOC | ViT-S/16 + Semantic FPN | 40k | 54.5 | download |
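Comparing rows with the same fine-tuning target: for both ADE20K and VOC, the COCO 2017 pre-trained checkpoint reaches a higher mIoU than the checkpoint pre-trained on the target dataset itself. The snippet below simply computes these differences from the numbers in the table; `coco_gain` is a hypothetical helper name.

```python
# mIoU values copied from the table above (ViT-S/16 + Semantic FPN, 40k iterations),
# keyed by (pretraining dataset, fine-tuning dataset).
MIOU = {
    ("COCO 2017", "ADE20K"): 37.7,
    ("ADE20K", "ADE20K"): 31.2,
    ("COCO 2017", "VOC"): 64.5,
    ("VOC", "VOC"): 54.5,
}


def coco_gain(target: str) -> float:
    """mIoU of COCO 2017 pre-training minus in-domain pre-training, in points."""
    return round(MIOU[("COCO 2017", target)] - MIOU[(target, target)], 1)


if __name__ == "__main__":
    for target in ("ADE20K", "VOC"):
        print(f"{target}: +{coco_gain(target)} mIoU")
```

This gives +6.5 mIoU on ADE20K and +10.0 mIoU on VOC.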

step 1. Install mmpose

```shell
git clone https://github.com/open-mmlab/mmpose.git
cd mmpose
git checkout v1.2.0
```

step 2. Fine-tune on the COCO dataset

```shell
tools/dist_train.sh configs/mgc/td-hm_ViTPose-small_8xb64-210e_coco-256x192.py [number of gpu] --seed 0 --work-dir /path/to/saving_dir --options model.pretrained=/path/to/model_dir
```

Model weights finetuned on COCO 2017:

| pretrained | finetune | arch | AP | AR | checkpoint |
|---|---|---|---|---|---|
| COCO 2017 | COCO 2017 | ViTPose | 71.6 | 76.9 | download |
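Because each toolbox is pinned to a specific tag (mmdetection v2.26.0, mmsegmentation v0.30.0, mmpose v1.2.0), a quick version check can catch a mismatched environment before a long run. This sketch reads each package's `__version__` attribute; the helper names are hypothetical, and the modules must be importable (for example from inside the corresponding checkout, or after installing it).

```python
import importlib
from typing import Callable, Dict, Optional

# Module names mapped to the tags checked out in the install steps above.
PINNED: Dict[str, str] = {"mmdet": "2.26.0", "mmseg": "0.30.0", "mmpose": "1.2.0"}


def module_version(name: str) -> Optional[str]:
    """Return `name.__version__`, or None if the module cannot be imported."""
    try:
        return getattr(importlib.import_module(name), "__version__", None)
    except (ImportError, AssertionError):
        # AssertionError covers toolboxes that assert a compatible mmcv at import time.
        return None


def version_mismatches(pins: Dict[str, str] = PINNED,
                       get_version: Callable[[str], Optional[str]] = module_version
                       ) -> Dict[str, Optional[str]]:
    """Map each module whose version differs from its pin to the version found (None if missing)."""
    result: Dict[str, Optional[str]] = {}
    for name, wanted in pins.items():
        found = get_version(name)
        if found != wanted:
            result[name] = found
    return result


if __name__ == "__main__":
    print(version_mismatches() or "All toolbox versions match.")
```

The `get_version` parameter makes the check easy to test with a stub instead of real imports.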

This code is built on the mmdetection, mmsegmentation and mmpose libraries.

This project is under the CC-BY-NC 4.0 license. See LICENSE for details.

```bibtex
@article{shen2024multi,
  author  = {Shen, Chengchao and Chen, Jianzhong and Wang, Jianxin},
  title   = {Multi-Grained Contrast for Data-Efficient Unsupervised Representation Learning},
  journal = {arXiv preprint arXiv:2407.02014},
  year    = {2024},
}
```