This is the official implementation of the paper "Inter-Instance Similarity Modeling for Contrastive Learning".

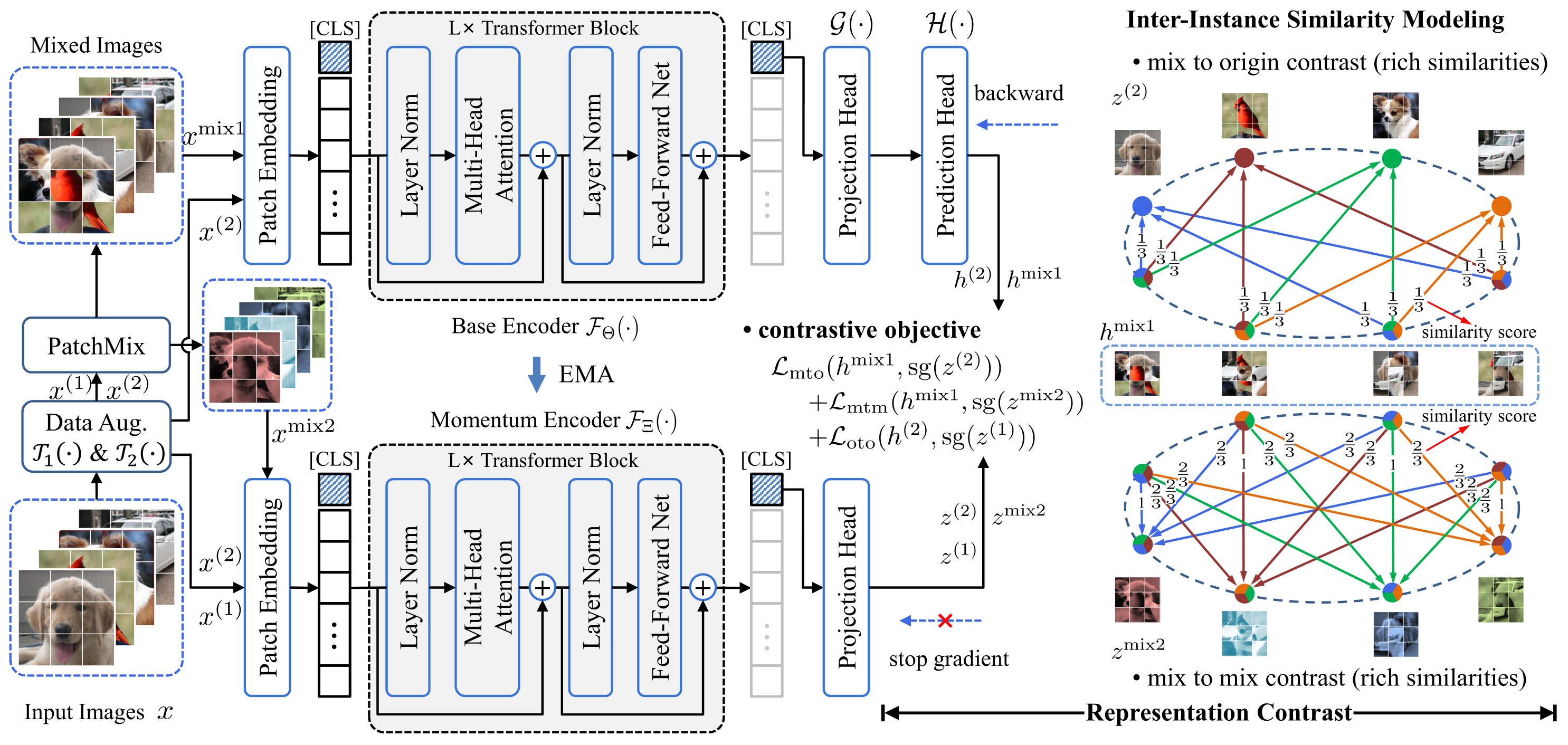

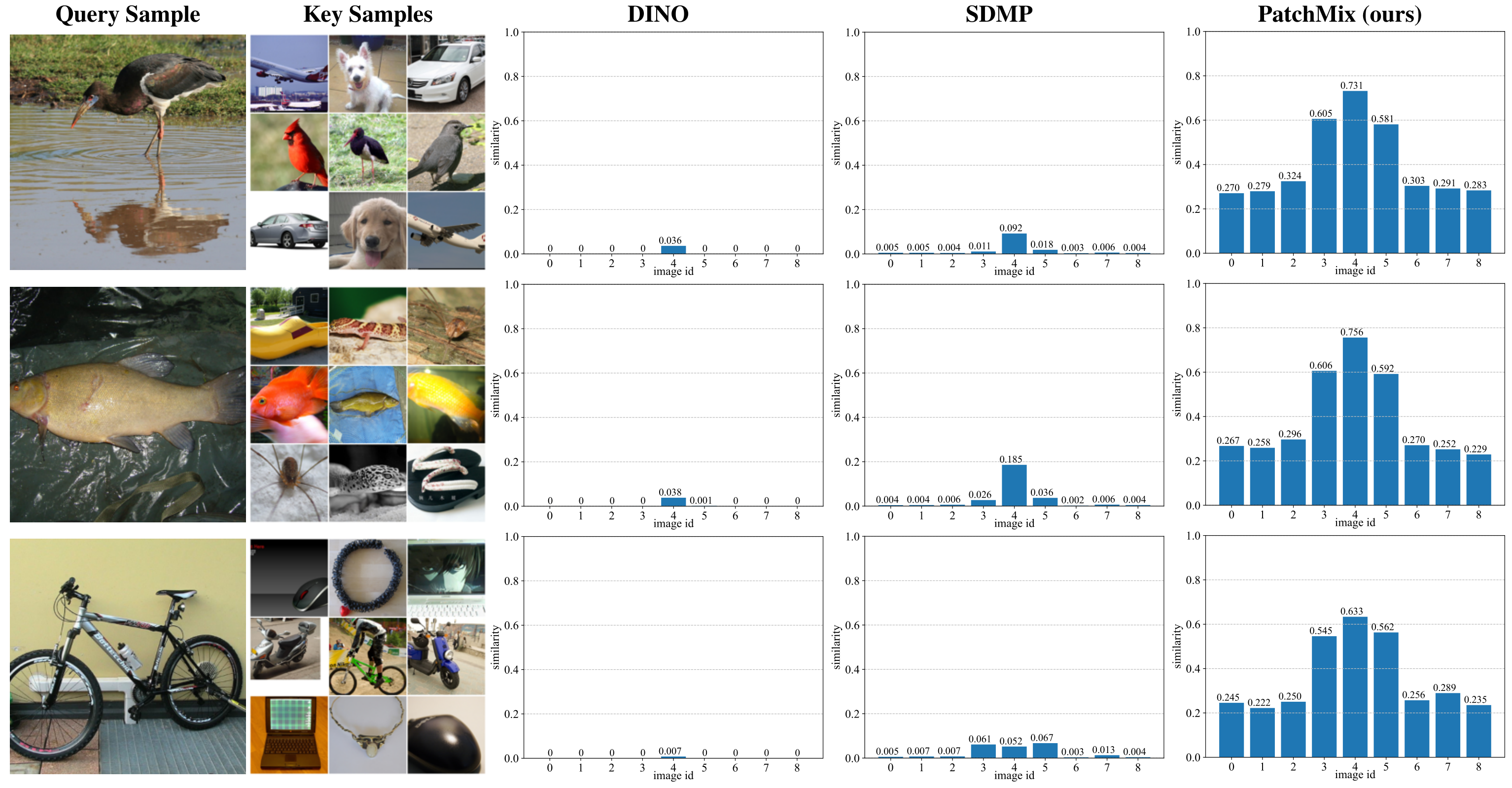

PatchMix is a novel image mixing strategy that mixes multiple images at the patch level. Each mixed image contains many local components from multiple images, which efficiently simulates the rich similarities among natural images in an unsupervised manner. To model these inter-instance similarities, the ViT model is optimized with three contrasts: mixed images against original images, mixed images against mixed images, and original images against original images. Experimental results demonstrate that our proposed method significantly outperforms the previous state of the art on both ImageNet-1K and CIFAR datasets, e.g., a 3.0% linear accuracy improvement on ImageNet-1K and an 8.7% kNN accuracy improvement on CIFAR100.
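The patch-level mixing and the soft mixed-to-original contrast can be illustrated with a short NumPy sketch. It is a minimal illustration under our own assumptions, not the repository's implementation: the names `patch_mix` and `soft_contrastive_loss`, the rule that mixed image i draws its patches from images i, i+1, ..., i+num_mix-1 of the batch, and the temperature of 0.2 are all hypothetical. The returned soft targets record which fraction of each mixed image's patches came from each original image, which is one way to turn "partial similarity" into a training signal.

```python
import numpy as np


def patch_mix(images, patch_size, num_mix, rng):
    """Hypothetical patch-level mixing sketch (not the official PatchMix code).

    images: float array of shape (N, C, H, W); H and W must be divisible by patch_size.
    Mixed image i takes every patch from one of the images i, i+1, ..., i+num_mix-1
    (indices modulo N), always at the same grid position.
    Returns the mixed batch (N, C, H, W) and an (N, N) soft-target matrix whose
    entry [i, j] is the fraction of patches of mixed image i taken from image j.
    """
    n, c, h, w = images.shape
    if h % patch_size or w % patch_size:
        raise ValueError("H and W must be divisible by patch_size")
    gh, gw = h // patch_size, w // patch_size
    # Source image index for every patch of every output image: shape (N, gh, gw).
    offsets = rng.integers(0, num_mix, size=(n, gh, gw))
    sources = (np.arange(n)[:, None, None] + offsets) % n
    # View the batch as a grid of patches: (N, gh, gw, C, p, p).
    patches = images.reshape(n, c, gh, patch_size, gw, patch_size).transpose(0, 2, 4, 1, 3, 5)
    # Pick each patch from its source image while keeping its grid position.
    mixed = patches[sources, np.arange(gh)[None, :, None], np.arange(gw)[None, None, :]]
    mixed = mixed.transpose(0, 3, 1, 4, 2, 5).reshape(n, c, h, w)
    # Soft targets: patch counts per source image, normalised so each row sums to 1.
    counts = np.stack([np.bincount(s.ravel(), minlength=n) for s in sources])
    return mixed, counts / (gh * gw)


def soft_contrastive_loss(queries, keys, targets, temperature=0.2):
    """Cross-entropy between softmax(cosine(queries, keys) / T) and soft targets.

    With targets = identity this reduces to a standard InfoNCE loss.
    """
    q = queries / np.linalg.norm(queries, axis=1, keepdims=True)
    k = keys / np.linalg.norm(keys, axis=1, keepdims=True)
    logits = q @ k.T / temperature
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-(targets * log_prob).sum(axis=1).mean())


rng = np.random.default_rng(0)
images = rng.random((4, 3, 32, 32))
mixed, targets = patch_mix(images, patch_size=8, num_mix=2, rng=rng)
print(mixed.shape, targets.sum(axis=1))  # (4, 3, 32, 32) [1. 1. 1. 1.]
```

Under these assumptions, the original-to-original contrast would use `np.eye(N)` as targets and the mixed-to-original contrast would use the matrix returned by `patch_mix`. How the official code builds targets for the mixed-to-mixed contrast is not described in this README, so the sketch leaves it out.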

```
conda create -n patchmix python=3.8
conda activate patchmix
pip install -r requirements.txt
```

Please set the dataset root paths in the `*.py` configuration files under the `./config/` directory.

The CIFAR10 and CIFAR100 datasets are provided by torchvision. In the configuration files, their root path is set to `/path/to/dataset`, and the root path of ImageNet-1K (ILSVRC2012) is set to `/path/to/ILSVRC2012`; replace both with your own paths.
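Before launching a long ImageNet-1K run, it may help to sanity-check the dataset root. The sketch below assumes, without confirmation from this README, that `/path/to/ILSVRC2012` follows the common image-folder layout with `train/` and `val/` directories, each holding one sub-directory per class; the helper name `check_imagenet_root` is hypothetical.

```python
from pathlib import Path


def check_imagenet_root(root):
    """Return a list of problems with an ASSUMED image-folder ILSVRC2012 layout.

    Assumed (hypothetical) layout:
        root/train/<class_name>/<images>
        root/val/<class_name>/<images>
    An empty list means the layout looks consistent.
    """
    root = Path(root)
    problems = []
    class_sets = {}
    for split in ("train", "val"):
        split_dir = root / split
        if not split_dir.is_dir():
            problems.append(f"missing directory: {split_dir}")
            continue
        classes = {p.name for p in split_dir.iterdir() if p.is_dir()}
        if not classes:
            problems.append(f"no class folders in: {split_dir}")
        class_sets[split] = classes
    # Both splits should cover exactly the same classes.
    if len(class_sets) == 2 and class_sets["train"] != class_sets["val"]:
        problems.append("train/ and val/ contain different class folders")
    return problems


if __name__ == "__main__":
    for problem in check_imagenet_root("/path/to/ILSVRC2012"):
        print(problem)
```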

Set the hyperparameters, dataset, and GPU IDs in `./config/pretrain/vit_small_pretrain.py` and run the following command:

```
python main_pretrain.py --arch vit-small
```

Set the hyperparameters, dataset, and GPU IDs in `./config/knn/knn.py` and run the following command:

```
python main_knn.py --arch vit-small --pretrained-weights /path/to/pretrained-weights.pth
```

Set the hyperparameters, dataset, and GPU IDs in `./config/linear/vit_small_linear.py` and run the following command:

```
python main_linear.py --arch vit-small --pretrained-weights /path/to/pretrained-weights.pth
```

Set the hyperparameters, dataset, and GPU IDs in `./config/finetuning/vit_small_finetuning.py` and run the following command:

```
python main_finetune.py --arch vit-small --pretrained-weights /path/to/pretrained-weights.pth
```

If you don't have a Microsoft Office account, you can download the trained model weights from this link.

If you have a Microsoft Office account, you can download the trained model weights from the links in the following tables.

ImageNet-1K:

| Arch | Batch size | Pre-training Epochs | Fine-tuning Accuracy | Linear Probing Accuracy | kNN Accuracy |
|---|---|---|---|---|---|
| ViT-S/16 | 1024 | 300 | 82.8% (link) | 77.4% (link) | 73.3% (link) |
| ViT-B/16 | 1024 | 300 | 84.1% (link) | 80.2% (link) | 76.2% (link) |

CIFAR10:

| Arch | Batch size | Pre-training Epochs | Fine-tuning Accuracy | Linear Probing Accuracy | kNN Accuracy |
|---|---|---|---|---|---|
| ViT-T/2 | 512 | 800 | 97.5% (link) | 94.4% (link) | 92.9% (link) |
| ViT-S/2 | 512 | 800 | 98.1% (link) | 96.0% (link) | 94.6% (link) |
| ViT-B/2 | 512 | 800 | 98.3% (link) | 96.6% (link) | 95.8% (link) |

CIFAR100:

| Arch | Batch size | Pre-training Epochs | Fine-tuning Accuracy | Linear Probing Accuracy | kNN Accuracy |
|---|---|---|---|---|---|
| ViT-T/2 | 512 | 800 | 84.9% (link) | 74.7% (link) | 68.8% (link) |
| ViT-S/2 | 512 | 800 | 86.0% (link) | 78.7% (link) | 75.4% (link) |
| ViT-B/2 | 512 | 800 | 86.0% (link) | 79.7% (link) | 75.7% (link) |
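The kNN and linear probing columns in these tables are both measured on frozen features from the pretrained encoder. As a rough illustration of the two protocols, here is a NumPy sketch under our own assumptions: a similarity-weighted kNN vote (hypothetical defaults k = 20 and temperature 0.07) and a softmax-regression probe trained with full-batch gradient descent instead of the minibatch training a real run would use. The function names and hyperparameters are hypothetical; the settings actually used are the ones in `./config/knn/knn.py` and `./config/linear/vit_small_linear.py`.

```python
import numpy as np


def knn_predict(train_feats, train_labels, test_feats, num_classes, k=20, temperature=0.07):
    """Similarity-weighted kNN vote on L2-normalised features (hypothetical settings)."""
    train = train_feats / np.linalg.norm(train_feats, axis=1, keepdims=True)
    test = test_feats / np.linalg.norm(test_feats, axis=1, keepdims=True)
    sims = test @ train.T  # cosine similarity, shape (M, N)
    k = min(k, train.shape[0])
    topk = np.argsort(-sims, axis=1)[:, :k]  # indices of the k nearest neighbours
    weights = np.exp(np.take_along_axis(sims, topk, axis=1) / temperature)
    votes = np.zeros((test.shape[0], num_classes))
    rows = np.repeat(np.arange(test.shape[0]), k)
    # Accumulate each neighbour's weight into the vote for its class.
    np.add.at(votes, (rows, train_labels[topk].ravel()), weights.ravel())
    return votes.argmax(axis=1)


def train_linear_probe(feats, labels, num_classes, lr=0.1, epochs=200):
    """Full-batch softmax regression on frozen features (simplified stand-in for SGD)."""
    n, d = feats.shape
    w = np.zeros((d, num_classes))
    b = np.zeros(num_classes)
    onehot = np.eye(num_classes)[labels]
    for _ in range(epochs):
        logits = feats @ w + b
        logits -= logits.max(axis=1, keepdims=True)
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        grad = (probs - onehot) / n  # gradient of mean cross-entropy w.r.t. logits
        w -= lr * feats.T @ grad
        b -= lr * grad.sum(axis=0)
    return w, b


def accuracy(pred, labels):
    return float((pred == labels).mean())


# Toy "frozen features": two well-separated clusters standing in for encoder outputs.
rng = np.random.default_rng(0)
feats = np.concatenate([rng.normal([4, 0], 0.5, (50, 2)), rng.normal([0, 4], 0.5, (50, 2))])
labels = np.repeat([0, 1], 50)
print("kNN:", accuracy(knn_predict(feats, labels, feats, 2), labels))
w, b = train_linear_probe(feats, labels, 2)
print("linear:", accuracy((feats @ w + b).argmax(axis=1), labels))
```

With real data, the feature arrays would be the frozen ViT outputs for the training and validation splits, and accuracy would be reported on the validation split.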

The query sample and the key sample with ID 4 belong to the same category, while the key samples with IDs 3 and 5 come from categories similar to that of the query sample.
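Comparing a query with a set of key samples, as in this visualization, amounts to ranking the keys by their similarity to the query in embedding space. A minimal sketch, assuming cosine similarity between L2-normalised embeddings and a softmax over keys with a hypothetical temperature of 0.2; the function name `rank_keys` and the embeddings below are made up for illustration and are not model outputs.

```python
import numpy as np


def rank_keys(query, keys, temperature=0.2):
    """Rank key embeddings by cosine similarity to a query embedding.

    Returns (cosine similarities, softmax probabilities over keys, key indices
    sorted from most to least similar). The temperature is a hypothetical choice.
    """
    q = query / np.linalg.norm(query)
    k = keys / np.linalg.norm(keys, axis=1, keepdims=True)
    cos = k @ q
    logits = cos / temperature
    probs = np.exp(logits - logits.max())  # subtract max for numerical stability
    probs /= probs.sum()
    return cos, probs, np.argsort(-cos)


# Made-up 3-D embeddings: key 1 is nearly parallel to the query, key 2 partly similar.
query = np.array([1.0, 0.0, 0.0])
keys = np.array([[0.0, 1.0, 0.0], [0.9, 0.1, 0.0], [0.6, 0.0, 0.8], [-0.5, 0.0, -0.8]])
cos, probs, order = rank_keys(query, keys)
print(order)  # [1 2 0 3]
```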

This project is under the CC-BY-NC 4.0 license. See LICENSE for details.

```bibtex
@article{shen2023inter,
  author  = {Shen, Chengchao and Liu, Dawei and Tang, Hao and Qu, Zhe and Wang, Jianxin},
  title   = {Inter-Instance Similarity Modeling for Contrastive Learning},
  journal = {arXiv preprint arXiv:2306.12243},
  year    = {2023},
}
```