Welcome to the official repository for our paper: Visual Haystacks: A Vision-Centric Needle-In-A-Haystack Benchmark. Explore our project page here and the benchmark toolkits here!

Authors: Tsung-Han Wu, Giscard Biamby, Jerome Quenum, Ritwik Gupta, Joseph E. Gonzalez, Trevor Darrell, David M. Chan at UC Berkeley.

Visual Haystacks (VHs) Benchmark Dataset: 🤗 tsunghanwu/visual_haystacks, 🐙 GitHub Repo

Model Checkpoint: 🤗 tsunghanwu/mirage-llama3.1-8.3B

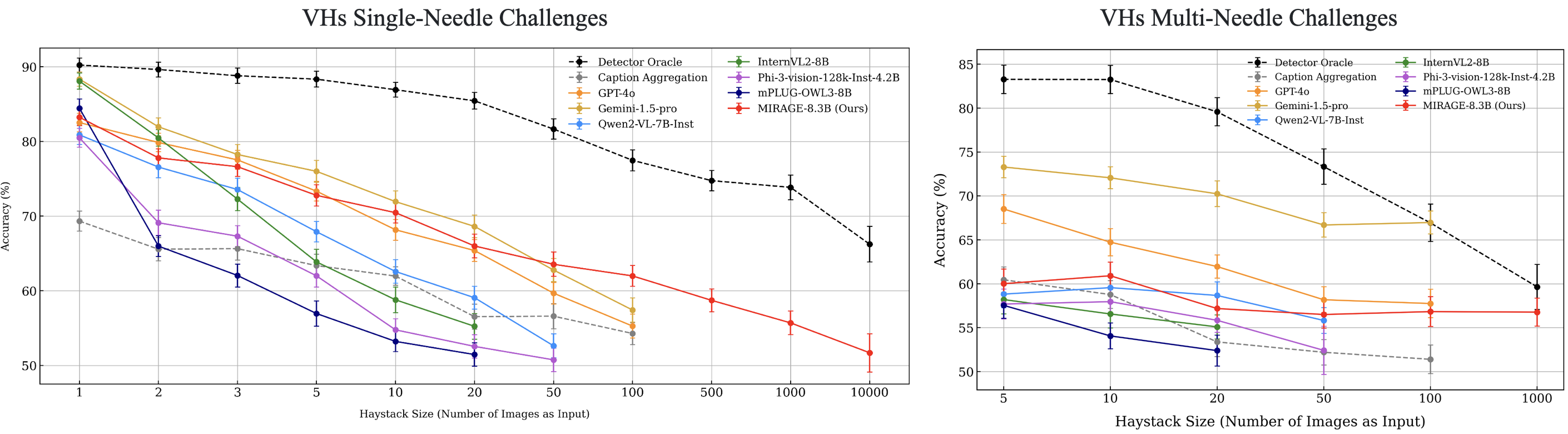

This paper addresses the challenge of answering questions across tens of thousands of images. Through extensive experiments on our Visual Haystacks (VHs) benchmark, we demonstrate that existing Large Multimodal Models (LMMs) struggle with inputs exceeding 100 images because of API limitations, context overflow, or hardware constraints on 4 A100 GPUs. These models also suffer from visual distractions, difficulty reasoning across images, and positional biases. To overcome these challenges, we developed MIRAGE (8.3B), a pioneering open-source visual-RAG baseline model built on LMMs that can handle tens of thousands of images. In brief, MIRAGE integrates a compressor module that reduces image tokens by 18x, a dynamic query-aware retriever that filters out irrelevant images, and a custom-trained LMM capable of multi-image reasoning. MIRAGE sets a new standard for open-source performance on the VHs benchmark and delivers solid results on both single- and multi-image question answering tasks.
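To make the scale argument above concrete, here is a back-of-the-envelope sketch of the visual-token budget and of query-aware filtering. It assumes 576 visual tokens per image before compression (the 24 x 24 patch grid of a ViT-L/14 encoder at 336 px, used only as an illustrative assumption), so an 18x compressor leaves 32 tokens per image. The relevance scores and the 0.5 threshold are hypothetical stand-ins for the retriever's output, not MIRAGE's actual interface.

```python
"""Back-of-the-envelope sketch: why compression plus retrieval keeps the LMM context small.

All constants and the threshold are illustrative assumptions, not values read from MIRAGE's code.
"""

RAW_TOKENS_PER_IMAGE = 576  # assumed: 24 x 24 patches from a ViT-L/14 encoder at 336 px
COMPRESSION_RATIO = 18      # the 18x reduction stated in the README
COMPRESSED_TOKENS_PER_IMAGE = RAW_TOKENS_PER_IMAGE // COMPRESSION_RATIO  # 32


def context_tokens(num_images: int, tokens_per_image: int) -> int:
    """Visual tokens the LMM would receive if every image were passed in."""
    return num_images * tokens_per_image


def filter_relevant(scores: list[float], threshold: float = 0.5) -> list[int]:
    """Indices of images whose (hypothetical) retriever score meets the threshold."""
    return [i for i, score in enumerate(scores) if score >= threshold]


if __name__ == "__main__":
    n = 10_000
    print("raw:", context_tokens(n, RAW_TOKENS_PER_IMAGE))                # raw: 5760000
    print("compressed:", context_tokens(n, COMPRESSED_TOKENS_PER_IMAGE))  # compressed: 320000
    kept = filter_relevant([0.05, 0.92, 0.40, 0.71])                      # [1, 3]
    print("after retrieval:", context_tokens(len(kept), COMPRESSED_TOKENS_PER_IMAGE))  # 64
```

Under these assumptions, compression alone shrinks 10,000 images from millions of tokens to hundreds of thousands, and the retriever is what brings the count down to something a single LMM forward pass can hold.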

- Clone this repository and navigate to the mirage folder:

git clone https://github.com/visual-haystacks/mirage.git

cd mirage

- Install the package:

conda create -n mirage python=3.10 -y

conda activate mirage

pip install --upgrade pip # enable PEP 660 support

pip install -e .

- Install additional packages for training:

pip install -e ".[train]"

pip install flash-attn --no-build-isolation --no-cache-dir
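After installation, a quick sanity check can save a failed launch later. The sketch below confirms the interpreter matches the Python 3.10 environment created above and reports which modules are importable without importing them. The module list (torch, transformers, flash_attn) is an assumption about this LLaVA-based codebase's dependencies; flash_attn only matters for training.

```python
"""Post-install sanity check (a sketch; the module names checked are assumptions)."""
import importlib.util
import sys


def check_python(required=(3, 10)) -> bool:
    """True if the running interpreter's major.minor version matches `required`."""
    return sys.version_info[:2] == tuple(required)


def check_modules(names):
    """Map each top-level module name to whether it can be found, without importing it."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    print("Python 3.10:", check_python())
    for name, ok in check_modules(["torch", "transformers", "flash_attn"]).items():
        print(f"{name:>12}: {'ok' if ok else 'MISSING'}")
```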

- Model Checkpoint: 🤗 tsunghanwu/mirage-llama3.1-8.3B

- Demo code (single test case):

CUDA_VISIBLE_DEVICES=X python3 demo.py --model-path [huggingface model id or local path] --image-folder [local image folder] --prompt [prompt path]

- Here’s a sample output from MIRAGE using some photos I took on my iPhone. (Feel free to give it a star if you think my cat is adorable! 😺✨)
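The demo command can also be driven from Python, for example when looping over several prompts. This sketch writes the prompt to disk and assembles the same `demo.py` invocation with the three flags shown above. It assumes `--prompt` expects a path to a plain-text file; `build_demo_command` is a hypothetical helper, not part of the repository.

```python
"""Hypothetical wrapper around the demo command; assumes --prompt takes a plain-text file."""
import os
import subprocess
import tempfile
from pathlib import Path


def build_demo_command(model_path, image_folder, prompt_text, workdir=None, gpu="0"):
    """Write the prompt to a file and return (argv, env) for `python3 demo.py`."""
    workdir = Path(workdir) if workdir else Path(tempfile.mkdtemp(prefix="mirage_demo_"))
    prompt_file = workdir / "prompt.txt"
    prompt_file.write_text(prompt_text, encoding="utf-8")
    argv = [
        "python3", "demo.py",
        "--model-path", str(model_path),
        "--image-folder", str(image_folder),
        "--prompt", str(prompt_file),
    ]
    # Limit the process to the chosen GPU(s), like CUDA_VISIBLE_DEVICES=X in the shell command.
    env = {**os.environ, "CUDA_VISIBLE_DEVICES": str(gpu)}
    return argv, env


if __name__ == "__main__":
    argv, env = build_demo_command(
        "tsunghanwu/mirage-llama3.1-8.3B", "my_photos", "Is there a cat in any of these images?")
    subprocess.run(argv, env=env, check=True)  # run from the repository root
```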

- For Visual Haystacks (VHs), download the data from 🤗 tsunghanwu/visual_haystacks and place it in playground/data/eval/visual_haystacks.

- For single-image QA, download all data according to LLaVA's instructions.

- For multi-image QA, download RetVQA's test set and place it in playground/data/eval/retvqa. For evaluation, refer to RetVQA's GitHub repo.
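The VHs download can be scripted with the Hugging Face Hub client. This is a sketch that assumes `huggingface_hub` is installed and that the dataset repository's top level contains the VHs_qa folder shown in the tree below; check the resulting layout after downloading. `vhs_dir` and `download_vhs` are illustrative helper names.

```python
"""Sketch: fetch the VHs QA files from the Hugging Face Hub into the expected eval folder.

Assumes `pip install huggingface_hub` and that the dataset repo's top level holds VHs_qa/.
"""
from pathlib import Path

VHS_REPO_ID = "tsunghanwu/visual_haystacks"


def vhs_dir(eval_root="playground/data/eval") -> Path:
    """Folder the evaluation scripts expect to contain coco/ and VHs_qa/."""
    return Path(eval_root) / "visual_haystacks"


def download_vhs(eval_root="playground/data/eval") -> Path:
    # Imported lazily so the path helper above works without the dependency installed.
    from huggingface_hub import snapshot_download

    target = vhs_dir(eval_root)
    target.mkdir(parents=True, exist_ok=True)
    snapshot_download(repo_id=VHS_REPO_ID, repo_type="dataset", local_dir=str(target))
    return target


if __name__ == "__main__":
    print("downloaded to", download_vhs())
```

The COCO2017 images are not part of this download; the tree below expects them to be linked in separately.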

In summary, the data structure of playground/data/eval should look like this:


playground/data/eval/

├── gqa

│ ├── answers

│ ├── data # directory

│ └── llava_gqa_testdev_balanced.jsonl

├── mmbench

│ ├── answers

│ ├── answers_upload

│ └── mmbench_dev_20230712.tsv

├── mmbench_cn

│ ├── answers

│ ├── answers_upload

│ └── mmbench_dev_cn_20231003.tsv

├── mm-vet

│ ├── answers

│ ├── images # directory

│ ├── llava-mm-vet.jsonl

│ └── results

├── pope

│ ├── answers

│ ├── coco # directory (point to COCO2014)

│ └── llava_pope_test.jsonl

├── retvqa

│ ├── answers

│ ├── vg # directory (point to Visual Genome directory)

│ └── retvqa_test_mirage.json

├── textvqa

│ ├── answers

│ ├── llava_textvqa_val_v051_ocr.jsonl

│ ├── TextVQA_0.5.1_val.json

│ └── train_images # directory (download from their website)

├── visual_haystacks

│ ├── coco # directory (point to COCO2017)

│ └── VHs_qa # directory (download from VHs' huggingface)

├── vizwiz

│ ├── answers

│ ├── answers_upload

│ ├── llava_test.jsonl

│ └── test # directory (download from their website)

└── vqav2

├── answers

├── answers_upload

├── llava_vqav2_mscoco_test2015.jsonl

├── llava_vqav2_mscoco_test-dev2015.jsonl

└── test2015 # directory (download from their website)
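Several entries in the tree "point to" datasets stored elsewhere: pope/coco (COCO2014), retvqa/vg (Visual Genome), and visual_haystacks/coco (COCO2017). One way to set these up is with symlinks, followed by a check for anything still missing. This is a sketch; `link_dataset` and `missing_entries` are hypothetical helpers, and the source paths are placeholders for wherever those datasets live on your machine.

```python
"""Sketch: create the 'point to' symlinks from the tree and report missing entries."""
import tempfile
from pathlib import Path


def link_dataset(source, link_path) -> Path:
    """Create link_path as a symlink to an existing dataset directory (no-op if present)."""
    source, link_path = Path(source).resolve(), Path(link_path)
    if not source.is_dir():
        raise FileNotFoundError(f"dataset directory not found: {source}")
    link_path.parent.mkdir(parents=True, exist_ok=True)
    if not link_path.exists() and not link_path.is_symlink():
        link_path.symlink_to(source, target_is_directory=True)
    return link_path


def missing_entries(root, relative_paths):
    """Expected relative paths that do not exist under root."""
    root = Path(root)
    return [p for p in relative_paths if not (root / p).exists()]


if __name__ == "__main__":
    # Demonstrated on a throwaway directory; substitute your real dataset paths.
    tmp = Path(tempfile.mkdtemp())
    (tmp / "coco2017").mkdir()
    eval_root = tmp / "eval"
    link_dataset(tmp / "coco2017", eval_root / "visual_haystacks" / "coco")
    print(missing_entries(eval_root, ["visual_haystacks/coco", "visual_haystacks/VHs_qa"]))
```

In a real setup you would call, for example, `link_dataset("/datasets/coco2017", "playground/data/eval/visual_haystacks/coco")` and then pass the entries of the tree to `missing_entries`.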

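Run each evaluation script separately: the braces below list the alternatives. Typed literally, a brace pattern in bash runs only the first script and passes the others to it as arguments.

# Visual Haystacks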

CUDA_VISIBLE_DEVICES=0 bash scripts/eval/{vhs_single,vhs_multi}.sh

# VQAv2, GQA, RetVQA

CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7 bash scripts/eval/{vqav2,gqa,retvqa}.sh

# Vizwiz, TextVQA, POPE, MMBench, MMBench-CN, MM-Vet

CUDA_VISIBLE_DEVICES=0 bash scripts/eval/{vizwiz,textvqa,pope,mmbench,mmbench_cn,mmvet}.sh
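A small launcher can run every evaluation script one at a time with the GPU visibility used in the commands above. This is a sketch: the script names come from those commands, while grouping them by device string is only an illustrative choice. It defaults to a dry run that prints each command; run it from the repository root.

```python
"""Sketch: run each evaluation script separately with its GPU set (dry run by default)."""
import os
import subprocess

# Device string -> script names under scripts/eval/, taken from the commands above.
EVAL_GROUPS = {
    "0": ["vhs_single", "vhs_multi",
          "vizwiz", "textvqa", "pope", "mmbench", "mmbench_cn", "mmvet"],
    "0,1,2,3,4,5,6,7": ["vqav2", "gqa", "retvqa"],
}


def build_eval_commands(groups):
    """Yield one (argv, env) pair per script instead of a single brace-expanded command."""
    for devices, names in groups.items():
        env = {**os.environ, "CUDA_VISIBLE_DEVICES": devices}
        for name in names:
            yield ["bash", f"scripts/eval/{name}.sh"], env


def run_all(groups, dry_run=True):
    for argv, env in build_eval_commands(groups):
        print(f"CUDA_VISIBLE_DEVICES={env['CUDA_VISIBLE_DEVICES']} {' '.join(argv)}")
        if not dry_run:
            subprocess.run(argv, env=env, check=True)  # stop at the first failing script


if __name__ == "__main__":
    run_all(EVAL_GROUPS, dry_run=True)
```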

| Checkpoint | VQAv2 | GQA | VizWiz | TextVQA | POPE | MMBench | MMBench-CN | MM-Vet |
|---|---|---|---|---|---|---|---|---|
| 🤗 tsunghanwu/mirage-llama3.1-8.3B | 76.56 | 59.13 | 40.52 | 56.24 | 85.40 | 69.24 | 66.92 | 33.4 |

- Please download the dataset from 🤗 tsunghanwu/MIRAGE-training-set.

- For stage-1 pre-training (training the Q-Former and MLP projector), download datasets such as CC-12M, LAION-400M, and COCO.

- For stage-2 and stage-3 pre-training, which train the Q-Former/MLP projector on high-quality captions and train the downstream retriever module on augmented LLaVA data, download SAM, VG, COCO, TextVQA, OCR_VQA, and GQA to playground/data.

- For instruction tuning, download SAM, VG, COCO, TextVQA, OCR_VQA, GQA, SlideVQA, and WebQA to playground/data.

Below is the expected data structure for playground/data:


playground/data/

├── coco

│ ├── annotations

│ ├── test2017

│ ├── train2017

│ └── val2017

├── gqa

│ └── images

├── ocr_vqa

│ └── images

├── sam

│ └── images

├── share_textvqa

│ └── images

├── slidevqa

│ └── images (download from https://drive.google.com/file/d/11bsX48cPpzCfPBnYJgSesvT7rWc84LpH/view)

├── textvqa

│ └── train_images

├── vg

│ ├── VG_100K

│ └── VG_100K_2

└── webqa

└── webqa_images (download from https://drive.google.com/drive/folders/1ApfD-RzvJ79b-sLeBx1OaiPNUYauZdAZ and convert them to .jpg format)
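The WebQA images need converting to .jpg. The sketch below assumes the download yields one image per file in other formats (PNG, GIF, and so on) and that Pillow is installed; if your download arrives packed differently (for example, as an archive), extract it first. `convert_all` and its helpers are hypothetical names, and the JPEG quality of 95 is an arbitrary choice.

```python
"""Sketch: convert downloaded WebQA images to .jpg (assumes Pillow, one image per file)."""
import sys
from pathlib import Path

# Extensions that get re-encoded; files already ending in .jpg are left alone.
IMAGE_SUFFIXES = {".png", ".gif", ".bmp", ".webp", ".tif", ".tiff", ".jpeg"}


def jpg_path(src, dst_dir) -> Path:
    """Output path: same file stem, .jpg extension, inside dst_dir."""
    return Path(dst_dir) / (Path(src).stem + ".jpg")


def files_to_convert(src_dir):
    """Image files in src_dir that are not already .jpg, sorted for reproducibility."""
    return sorted(p for p in Path(src_dir).iterdir()
                  if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES)


def convert_all(src_dir, dst_dir):
    from PIL import Image  # lazy import: only needed when actually converting

    dst_dir = Path(dst_dir)
    dst_dir.mkdir(parents=True, exist_ok=True)
    for src in files_to_convert(src_dir):
        with Image.open(src) as im:
            # JPEG has no alpha channel or palette mode, so normalize to RGB first.
            im.convert("RGB").save(jpg_path(src, dst_dir), "JPEG", quality=95)


if __name__ == "__main__":
    # Usage: python convert_webqa.py <downloaded_dir> playground/data/webqa/webqa_images
    convert_all(sys.argv[1], sys.argv[2])
```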

Run the following scripts with minor modifications as needed. Note: during finetuning, we found that freezing the downstream retriever and updating only the Q-Former/LLM gives better performance with Llama-3.1-8B, whereas unfreezing the retriever gives better results with Vicuna-v1.5-7B.
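To make the freezing choice in this note concrete, it can be expressed as a rule over parameter names. This is a sketch: the prefixes "retriever.", "qformer.", and "llm." are hypothetical, so inspect `model.named_parameters()` on the real model to find the actual module names. It also treats everything else (for example, the vision encoder) as frozen, which is an assumption. With LoRA finetuning, the trainable LLM parameters would in practice be the LoRA adapters.

```python
"""Sketch of the retriever-freezing choice as a rule over (hypothetical) parameter names."""

# Per-backbone choice taken from the note above.
FREEZE_RETRIEVER = {"llama-3.1-8b": True, "vicuna-v1.5-7b": False}


def is_trainable(param_name: str, freeze_retriever: bool) -> bool:
    """Q-Former and LLM parameters train; retriever parameters train only when not frozen."""
    if param_name.startswith("retriever."):
        return not freeze_retriever
    return param_name.startswith(("qformer.", "llm."))


def split_parameters(names, freeze_retriever):
    """Partition parameter names into (trainable, frozen), preserving order."""
    trainable = [n for n in names if is_trainable(n, freeze_retriever)]
    frozen = [n for n in names if not is_trainable(n, freeze_retriever)]
    return trainable, frozen
```

In PyTorch, you would apply the rule with `for name, p in model.named_parameters(): p.requires_grad_(is_trainable(name, freeze))`.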

# Stage 1-3 Pretraining

bash scripts/pretrain_stage{1,2,3}.sh

# Instruction Finetuning

bash scripts/finetune_qformer_lora.sh

Please merge the LoRA weights back into the original checkpoint using the following command:

python scripts/merge_lora_weights.py \
--model-path checkpoints/mirage_qformer_ft \
--model-base meta-llama/Meta-Llama-3.1-8B-Instruct \
--save-model-path your_output_path

We are grateful for the foundational code provided by LLaVA and LLaVA-More. Using their resources implies agreement with their respective licenses. Our project benefits greatly from these contributions, and we acknowledge their significant impact on our work.

If you use our work or the implementation in this repo, or find them helpful, please consider citing our paper:

@article{wu2024visual,

title={Visual Haystacks: A Vision-Centric Needle-In-A-Haystack Benchmark},

author={Wu, Tsung-Han and Biamby, Giscard and Quenum, Jerome and Gupta, Ritwik and Gonzalez, Joseph E and Darrell, Trevor and Chan, David M},

journal={arXiv preprint arXiv:2407.13766},

year={2024}

}