Terraform is an open source tool developed by HashiCorp that allows you to codify your infrastructure. This means that you can write configuration files instead of clicking around in the console of AWS or any other cloud provider. The files are plain text, so they can be shared, versioned, and peer-reviewed just like any other code. In short, Terraform helps you achieve Infrastructure as Code (IaC). The orchestration space is still young, but I think Terraform is the standout option.

This repository contains a Terraform module for provisioning EC2 Spot Instances on AWS, aimed specifically at Deep Learning workloads on Amazon's GPU instances. It relies on automation and declarative configuration rather than manual setup.

Development and testing were done on macOS High Sierra (version 10.13.3).

- Terraform (tested on v0.11.7)

- Amazon Web Services CLI (aws-cli)

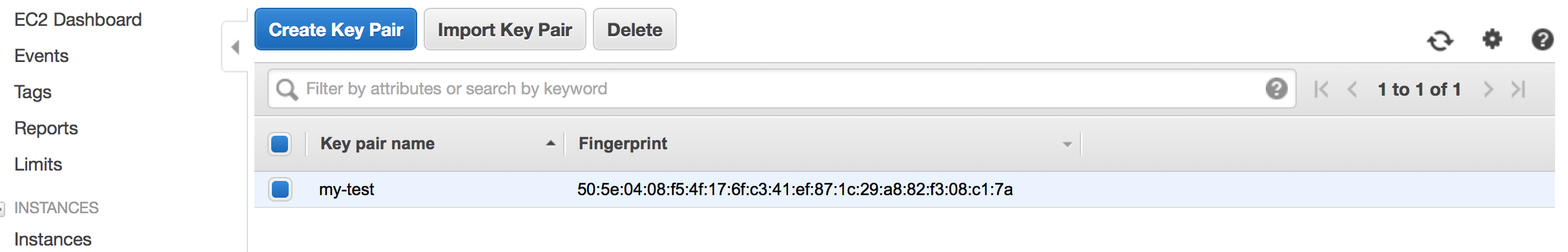

- AWS Key Pair

Note: Terraform and aws-cli can be installed with brew install on Mac.

The instructions for creating an AWS Key Pair are

here.

This key needs be created in the corresponding AWS region you are working in.

The name of the key pair has to be the same as the one listed in the AWS

console. You will need to specify this in the my_key_pair_name variable (see

Section Variables).

AWS Key Pair in the AWS Management Console.

This demo Terraform script makes a Spot Instance request for a p2.xlarge in AWS and allows you to connect to a Jupyter notebook running on the server. The script could be more generic, but for now it has only been tested on my own AWS setup, so I'm open to contributions to the repo :)
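To make the idea concrete, here is a minimal sketch of what a Spot Instance request can look like in Terraform 0.11-style syntax. It is not copied from this module's source: the resource name dl_spot is hypothetical, and the mapping of variables to arguments is an assumption based on the variable names documented in this README. How the module uses ebs_volume_size is not stated here, so storage settings are left out.

```hcl
# Illustrative sketch only; the module's actual configuration may differ.
provider "aws" {
  region = "${var.my_region}"
}

# "dl_spot" is a hypothetical resource name chosen for this example.
resource "aws_spot_instance_request" "dl_spot" {
  # Number of Spot requests to create.
  count = "${var.num_instances}"

  ami               = "${var.ami_id}"
  instance_type     = "${var.instance_type}"
  availability_zone = "${var.avail_zone}"
  key_name          = "${var.my_key_pair_name}"

  # Maximum hourly price you are willing to pay.
  spot_price = "${var.spot_price}"

  # Wait for the request to be fulfilled; Terraform reports an error
  # if fulfillment does not happen before the timeout.
  wait_for_fulfillment = true
}
```

Because the request is declared as a resource, terraform plan shows it as a pending addition and terraform destroy cancels it along with anything else the configuration created.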

In the variables.tf file, some of the variables you can configure for your setup are:

* my_region (default = us-east-1) # N. Virginia

* avail_zone (default = us-east-1a)

* my_key_pair_name (default = my-test)

* instance_type (default = p2.xlarge)

* num_instances (default = 1)

* spot_price (default = 0.30)

* ebs_volume_size (default = 1)

* ami_id (default = ami-dff741a0) # AWS Deep Learning AMI (Ubuntu)

Note: The spot_price you set must be at least the current price listed under AWS EC2 Spot Instances Pricing; otherwise your request will not be fulfilled because the price is too low.
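As a rough illustration, the variables above could be declared in variables.tf along these lines. The names and defaults are taken from the list; the comments are my own annotations, and the real file may declare additional variables or descriptions.

```hcl
# Sketch of variables.tf using the defaults listed in this README.
variable "my_region" {
  default = "us-east-1" # N. Virginia
}

variable "avail_zone" {
  default = "us-east-1a"
}

variable "my_key_pair_name" {
  default = "my-test" # must match the key pair name in the AWS console
}

variable "instance_type" {
  default = "p2.xlarge"
}

variable "num_instances" {
  default = 1
}

variable "spot_price" {
  default = "0.30" # maximum hourly price for the Spot request
}

variable "ebs_volume_size" {
  default = 1
}

variable "ami_id" {
  default = "ami-dff741a0" # AWS Deep Learning AMI (Ubuntu)
}
```

Any default can be overridden without editing the file, for example by passing -var 'spot_price=0.35' to terraform plan or terraform apply. Keep in mind that AMI IDs are region-specific, so changing my_region generally means changing ami_id as well.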

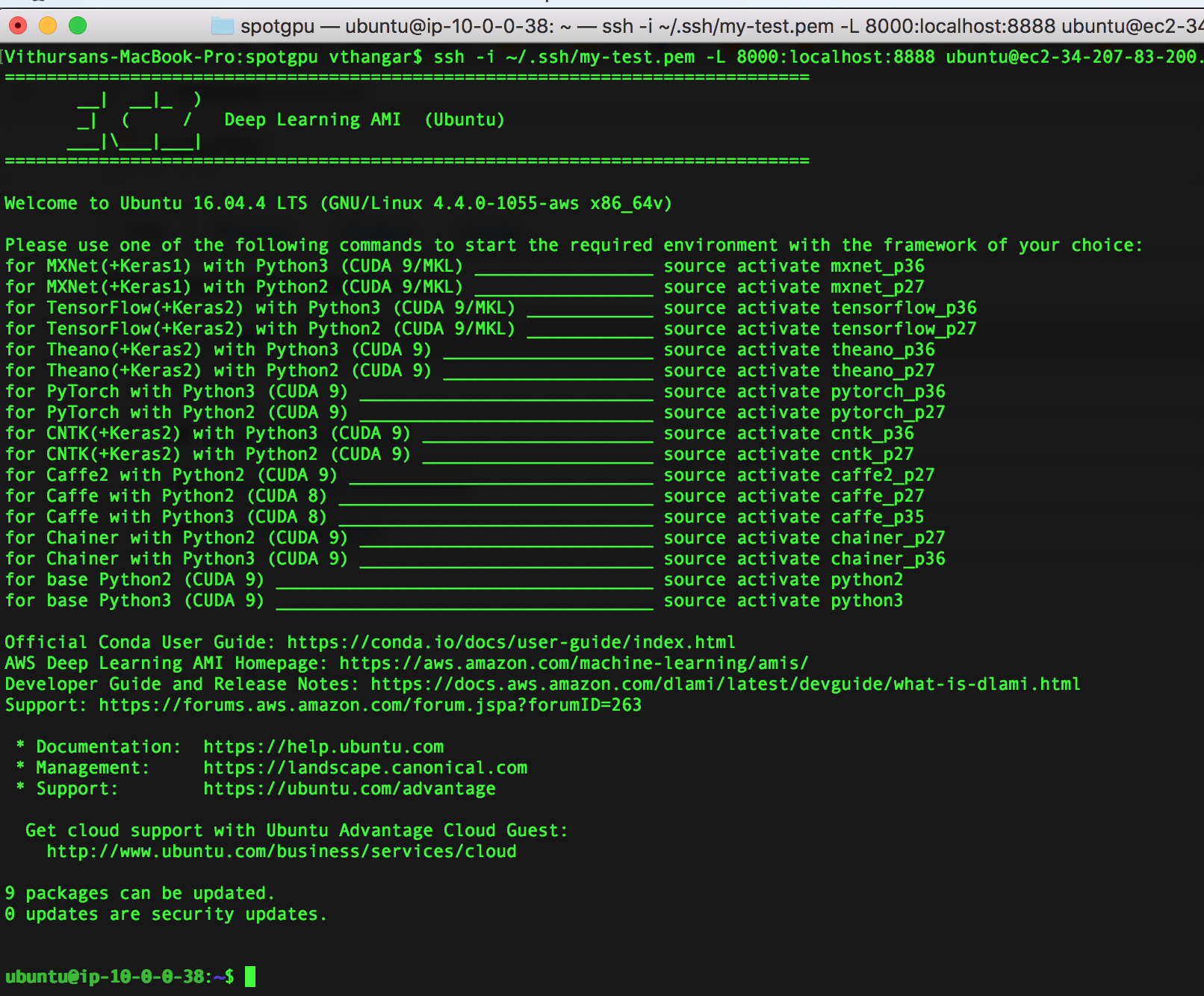

In this demo, I am using the AWS Deep Learning

AMI, because its free and

provides you with Anaconda environments for most of the popular DL frameworks

(see image below). Also, the software cost is $0.00/hr, and you don't have

to worry about installing the NVIDIA drivers and DL software (i.e. TensorFlow,

PyTorch, MXNet, Caffe, Caffe2, etc) manually.

AWS Deep Learning AMI (Ubuntu) - a list of conda environments for deep learning frameworks optimized for CUDA/MKL.

- Configure your

AWS Access Key,AWS Secret Access Key, andregion name:

$ aws configure

AWS Access Key ID [None]: ********

AWS Secret Access Key [None]: ********

Default region name [None]: us-east-1

Default output format [None]: - Check to see if Terraform is installed properly:

$ terraform- Initalize the working directory containing the Terraform configuration files:

$ terraform init- Validate the syntax of the terraform files:

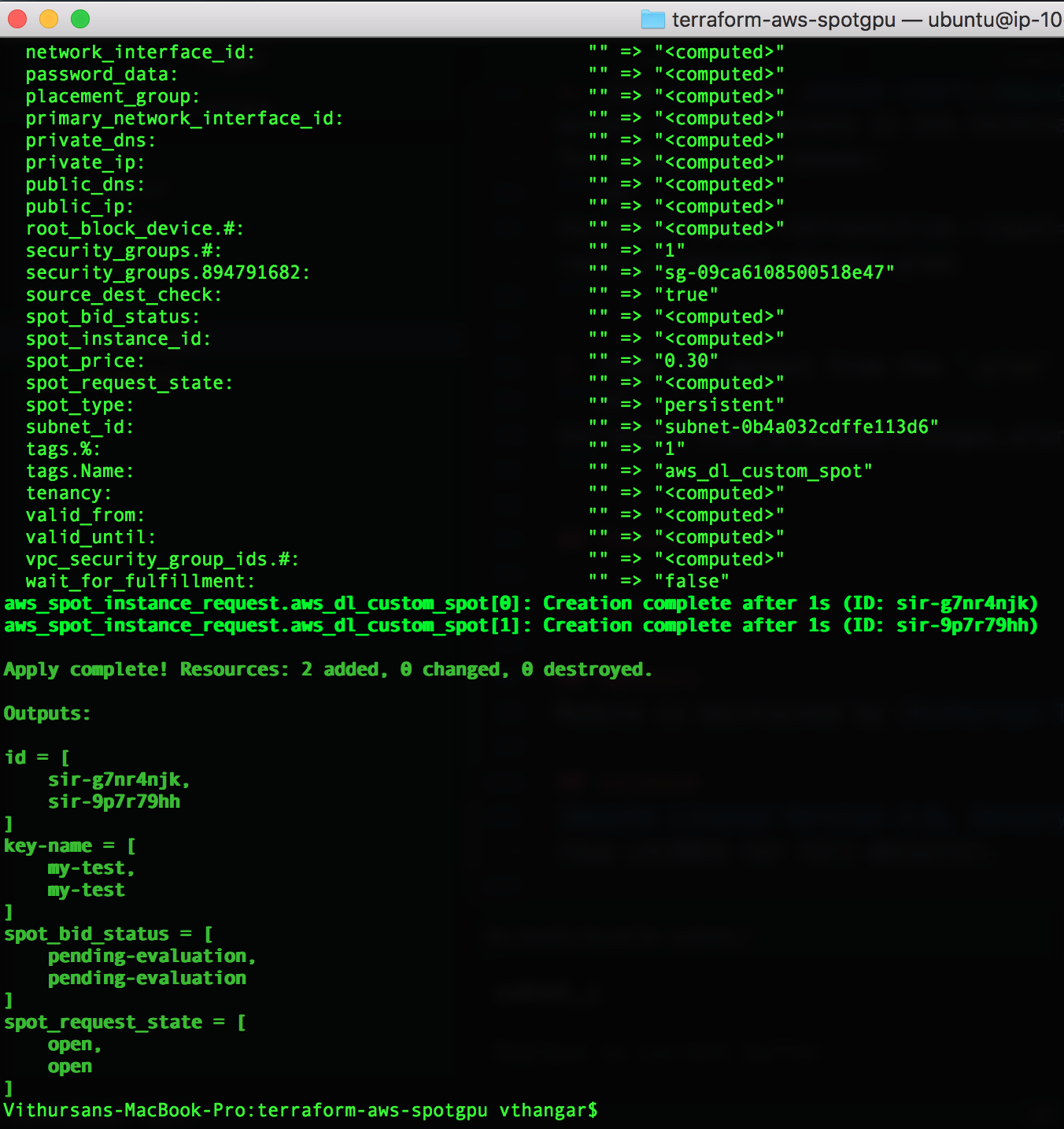

$ terraform validate- Create the terraform execution plan, which is an easy way to check what actions are needed to be taken to get the desired state:

$ terraform plan- Provision the instance(s) by applying the changes to get the desired state based on the plan:

$ terraform applySample output showing requests for two p2.xlarge AWS EC2 Spot instances.

- Log in to your EC2 Management Console and you should see your Spot Instance Request. You should also see all of the instances and volumes that were provisioned.

- Once done with the infrastructure, you can destroy it:

$ terraform destroy

- Step 3 in the Quick Start section allows you to view the output configurations in the terminal, but you can also save the execution plan for debugging purposes:

$ terraform plan -refresh=true -input=false -lock=true -out=./proposed-changes.plan

- View the output from the .plan file in human-readable format:

$ terraform show proposed-changes.plan

- Terraform module for provisioning AWS On-Demand instances

- Terraform module for setting up an AWS Elastic Container Service (ECS) cluster and running a service on the cluster

- Keras with GPU on Amazon EC2 – a step-by-step instruction

- Benchmarking Tensorflow Performance and Cost Across Different GPU Options

Module is maintained by Vithursan Thangarasa

Apache License Version 2.0, January 2004 (See LICENSE for full details).