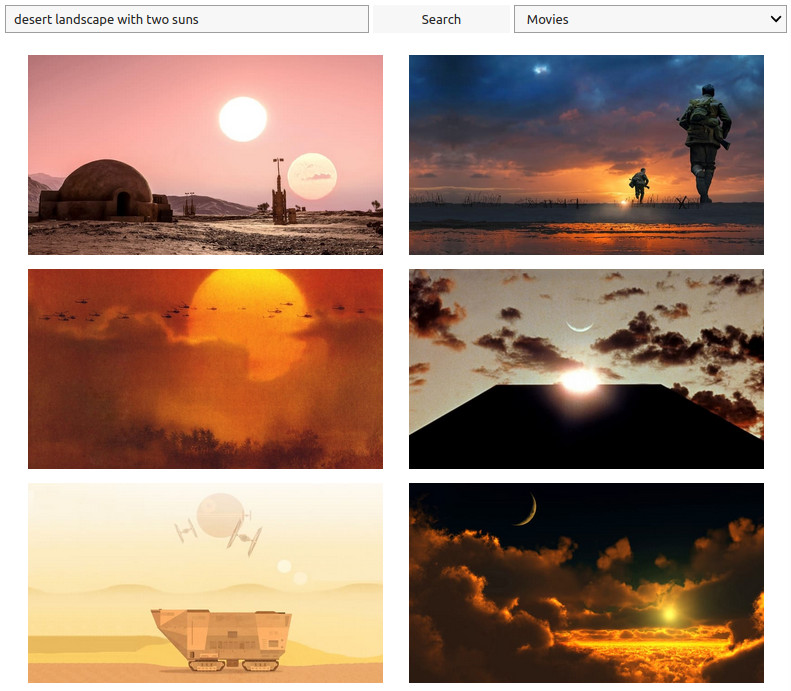

OpenAI's CLIP is a deep learning model that estimates how well an image matches a piece of text. This lets you search images with a natural language query even when your image corpus has no titles, descriptions, or keywords.
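To illustrate the idea, here is a minimal sketch of the ranking step, assuming image and text embeddings have already been computed (for example with the `get_image_features` and `get_text_features` methods of `CLIPModel` in the transformers library). The function names, file names, and three-dimensional vectors below are hypothetical toy values, not the demo's actual code or data; real CLIP embeddings have hundreds of dimensions.

```python
import math


def normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors of equal length."""
    a, b = normalize(a), normalize(b)
    return sum(x * y for x, y in zip(a, b))


def search(query_embedding, image_embeddings, top_k=3):
    """Return the top_k (image_id, score) pairs, best match first."""
    scored = [
        (image_id, cosine_similarity(query_embedding, embedding))
        for image_id, embedding in image_embeddings.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical toy embeddings standing in for precomputed CLIP image vectors.
image_embeddings = {
    "beach.jpg": [0.9, 0.1, 0.0],
    "forest.jpg": [0.1, 0.9, 0.1],
    "city.jpg": [0.0, 0.2, 0.9],
}

# Hypothetical toy vector standing in for the CLIP embedding of a text query.
query_embedding = [0.8, 0.2, 0.1]

results = search(query_embedding, image_embeddings, top_k=2)
for image_id, score in results:
    print(f"{image_id}: {score:.3f}")
```

Because the image embeddings do not depend on the query, they can be computed once and stored; only the query text needs to be embedded at search time.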

This repository includes a simple demo built on 25,000 Unsplash images and 7,685 movie images.

Click here to try the included notebook in Google Colab. Connect with your Google account and execute the first (and only) code cell.

Alternatively, an equivalent demo is available as a Hugging Face Space, which works without an account.

Thanks to:

- OpenAI for sharing CLIP

- Hugging Face for the transformers library

- Unsplash and The Movie Database (TMDB) for allowing hotlinking to their images

I was inspired by Unsplash Image Search by Vladimir Haltakov and Alph, The Sacred River by Travis Hoppe.