This repository contains the PyTorch implementation of the paper:

Single View Point Cloud Generation via Unified 3D Prototype.

Yu Lin, Yigong Wang, Yifan Li, Yang Gao, Zhuoyi Wang, Latifur Khan

In AAAI 2021

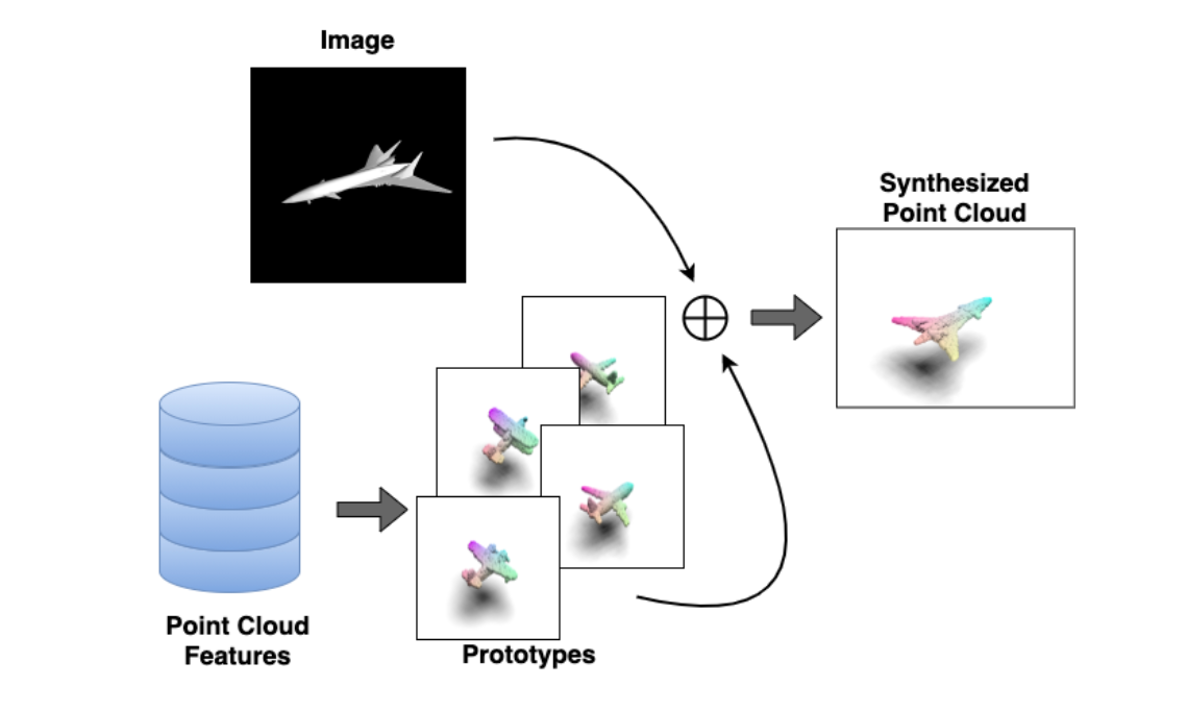

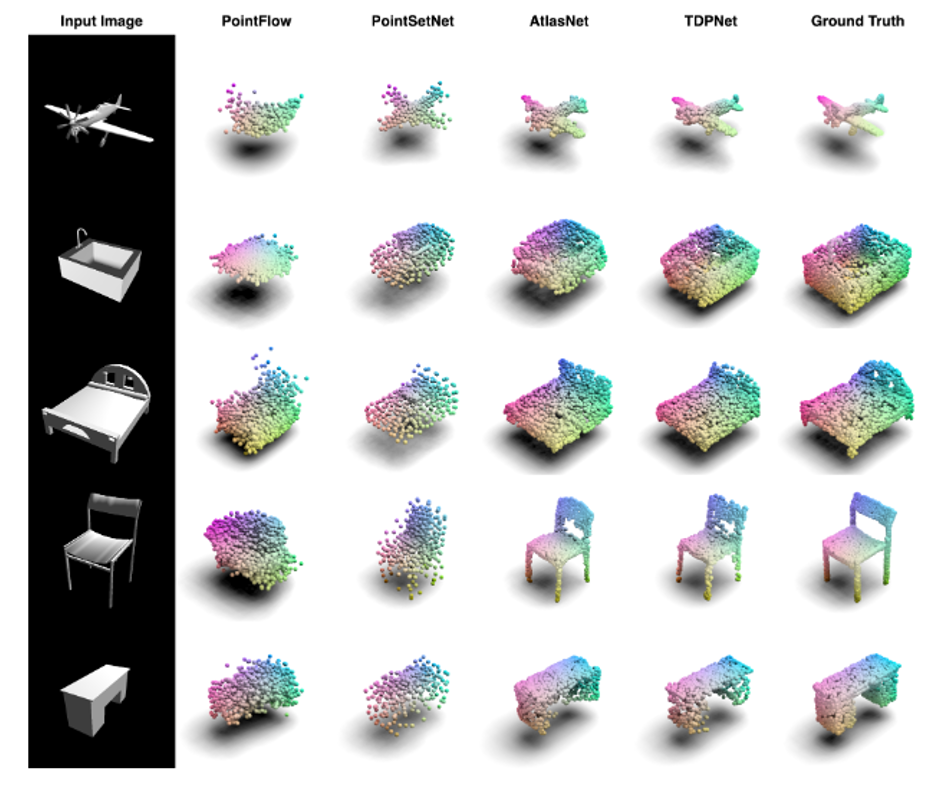

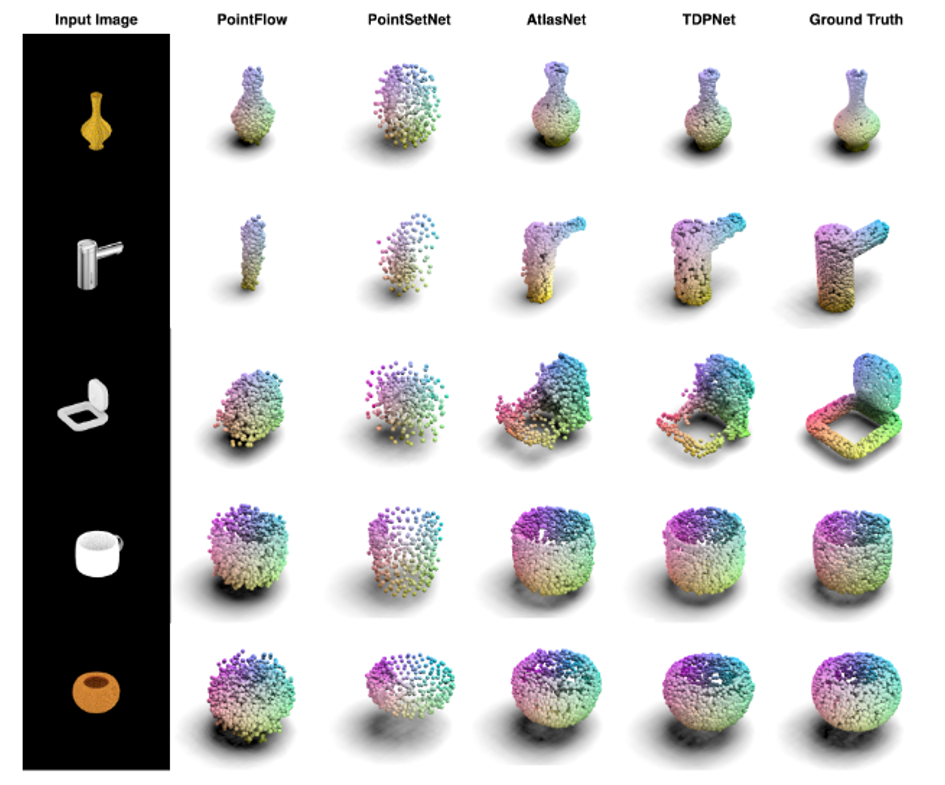

This project focuses on reconstructing a point cloud from a single image using prior 3D shape information, which we call 3D prototypes. Previous methods usually consider 2D information only, or treat 2D and 3D information equally. However, 3D information is more informative and should be exploited during reconstruction. Our solution is to pre-compute a set of 3D prototype features from a point cloud dataset and fuse them with the incoming image features. We also design a hierarchical point cloud decoder that treats each prototype separately. Empirically, we show that TDPNet achieves state-of-the-art performance in single-view point cloud reconstruction. We additionally find that good quantitative results do not guarantee good visual results.

- Clone this repo:

git clone https://github.com/voidstrike/TDPNet.git

- Install the dependencies:

- Python 3.6

- CUDA 11.0

- G++ or GCC5

- PyTorch. The code was tested with version 1.2.0

- Scikit-learn 0.24.0

- Pymesh 1.0.2

- Compile CUDA kernel for CD/EMD loss

cd src/metrics/pytorch_structural_losses/

make clean

make
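For readers who want to sanity-check the compiled CD kernel, here is a minimal pure-Python sketch of the Chamfer distance. It assumes one common convention (the mean squared nearest-neighbor distance, summed over both directions); the convention used by the compiled kernel in this repository may differ in scaling or in whether distances are squared. The function name `chamfer_distance` is illustrative and is not part of this codebase.

```python
def chamfer_distance(a, b):
    """Symmetric Chamfer distance between two point sets.

    `a` and `b` are non-empty sequences of equal-dimension points
    (e.g. lists of (x, y, z) tuples).
    Assumed convention: mean squared nearest-neighbor distance,
    summed over both directions (a -> b and b -> a).
    """
    if not a or not b:
        raise ValueError("both point sets must be non-empty")

    def sq_dist(p, q):
        # Squared Euclidean distance between two points.
        return sum((pi - qi) ** 2 for pi, qi in zip(p, q))

    def one_way(src, dst):
        # For each source point, take the squared distance to its
        # nearest neighbor in dst, then average over the source set.
        return sum(min(sq_dist(p, q) for q in dst) for p in src) / len(src)

    return one_way(a, b) + one_way(b, a)


if __name__ == "__main__":
    pred = [(0.0, 0.0, 0.0)]
    target = [(1.0, 0.0, 0.0), (0.0, 2.0, 0.0)]
    print(chamfer_distance(pred, target))
```

This brute-force version costs O(|a| * |b|) and is only practical for small clouds; it is meant as a reference to compare against a GPU implementation on a handful of points, not as a training loss.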

The ModelNet dataset can be downloaded from ModelNet_views and ModelNet_pcs.

The 2D projections of ModelNet are from MVCNN.

For the ShapeNet dataset, please download the dataset from their website and render it via Blender. We provide src/view_generator.py and src/train_test_split.py for the image generation and TRAIN/TEST split, respectively.

- Train a model

# General

CUDA_VISIBLE_DEVICES=X python3 trainTDP.py --root IMG_ROOT --proot PC_ROOT --cat TGT_CATEGORY --from_scratch --reclustering

# Concrete

CUDA_VISIBLE_DEVICES=0 python3 trainTDP.py --root ~/Desktop/modelnet_views/ --proot ~/Desktop/modelnet_pcs/ --cat airplane --from_scratch --reclustering

- More hyperparameter options are available; please refer to the source code for details.

# Modify the number of prototypes and the number of MLP slaves -- An example

CUDA_VISIBLE_DEVICES=X python3 trainTDP.py --root IMG_ROOT --proot PC_ROOT --cat TGT_CATEGORY --from_scratch --reclustering --num_prototypes 1 --num_slaves 1

- Please remember to modify the CUDA device number X, IMG_ROOT, PC_ROOT and TGT_CATEGORY accordingly.
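Because the provided code targets a single category per run, the commands above can be assembled programmatically when training several categories in turn. The following is a small sketch, not part of this repository: the helper names `build_train_command` and `build_env` are hypothetical, the paths and the `chair` category are placeholders, and only the flags shown in the commands above are used.

```python
import os
import shlex


def build_train_command(img_root, pc_root, category,
                        num_prototypes=None, num_slaves=None):
    """Build the argument list for one trainTDP.py run.

    Uses only flags that appear in this README; the optional
    arguments are appended only when explicitly given.
    """
    cmd = [
        "python3", "trainTDP.py",
        "--root", os.path.expanduser(img_root),
        "--proot", os.path.expanduser(pc_root),
        "--cat", category,
        "--from_scratch", "--reclustering",
    ]
    if num_prototypes is not None:
        cmd += ["--num_prototypes", str(num_prototypes)]
    if num_slaves is not None:
        cmd += ["--num_slaves", str(num_slaves)]
    return cmd


def build_env(gpu_id):
    """Copy the current environment and pin the visible CUDA device."""
    env = dict(os.environ)
    env["CUDA_VISIBLE_DEVICES"] = str(gpu_id)
    return env


if __name__ == "__main__":
    # Placeholder categories and paths; replace with your own.
    for category in ["airplane", "chair"]:
        cmd = build_train_command("/path/to/modelnet_views",
                                  "/path/to/modelnet_pcs", category)
        # Print the command instead of running it, so the sketch is safe to try.
        print(" ".join(shlex.quote(part) for part in cmd))
```

To actually launch a run, pass the list to `subprocess.run(cmd, env=build_env(0), check=True)` from the directory that contains `trainTDP.py`.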

- Evaluate a model (the evaluation code is also executed automatically at the end of training)

CUDA_VISIBLE_DEVICES=X python3 evaluate.py --root IMG_ROOT --proot PC_ROOT --cat TGT_CATEGORY

We provide some qualitative results of our model.

We provide the code for the single-category experiment.

UPDATE 2021 June: The compiled CD & EMD kernels seem outdated; please try the Kaolin repo and update the code accordingly.

UPDATE 2021 Sep: "Phong.py" for image rendering has been uploaded for reference.

If you use this code for your research, please consider citing our paper:

@inproceedings{lin2021single,

title={Single View Point Cloud Generation via Unified 3D Prototype},

author={Lin, Yu and Wang, Yigong and Li, Yi-Fan and Wang, Zhuoyi and Gao, Yang and Khan, Latifur},

booktitle={Proceedings of the AAAI Conference on Artificial Intelligence},

volume={35},

number={3},

pages={2064--2072},

year={2021}

}