Training Word Embeddings and using them to perform Sentiment Analysis

Word embeddings are vector representations of the words of a language, trained over a large collection of raw text. Here, we have trained word embeddings using the approach of the Neural Probabilistic Language Model (NPLM). We take a large corpus of English text, drawn from a collection of novels from the 1880s, and use it to create a large number of context-target pair examples, in which the words preceding a position form the context and the word at that position is the target to predict. The embeddings are 64-dimensional and are built over a vocabulary of the 25,000 most frequent words in the corpus.
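The data preparation described above (a frequency-limited vocabulary plus NPLM-style context-target pairs) can be sketched as follows. This is a minimal illustration, not the code used for this project: it assumes whitespace tokenization, a context of 3 preceding words, and hypothetical reserved ids `<pad>` = 0 and `<unk>` = 1 for padding and out-of-vocabulary words.

```python
from collections import Counter

def build_vocab(tokens, vocab_size=25000):
    """Map the vocab_size most frequent tokens to integer ids.
    Assumption: ids 0 and 1 are reserved for hypothetical <pad> and <unk> tokens."""
    vocab = {"<pad>": 0, "<unk>": 1}
    for word, _ in Counter(tokens).most_common(vocab_size):
        vocab[word] = len(vocab)
    return vocab

def context_target_pairs(tokens, vocab, context_size=3):
    """NPLM-style examples: the previous `context_size` word ids predict the next word id.
    Out-of-vocabulary words are mapped to the <unk> id."""
    ids = [vocab.get(t, vocab["<unk>"]) for t in tokens]
    return [(tuple(ids[i - context_size:i]), ids[i])
            for i in range(context_size, len(ids))]

tokens = "the cat sat on the mat the cat ran".split()
vocab = build_vocab(tokens, vocab_size=2)      # keep only the 2 most frequent words
pairs = context_target_pairs(tokens, vocab)
print(vocab)
print(pairs[:2])
```

Each pair is one training example: an embedding lookup over the context ids, followed by the NPLM's hidden and softmax layers, is trained to predict the target id, and the learned lookup table is the word embedding matrix.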

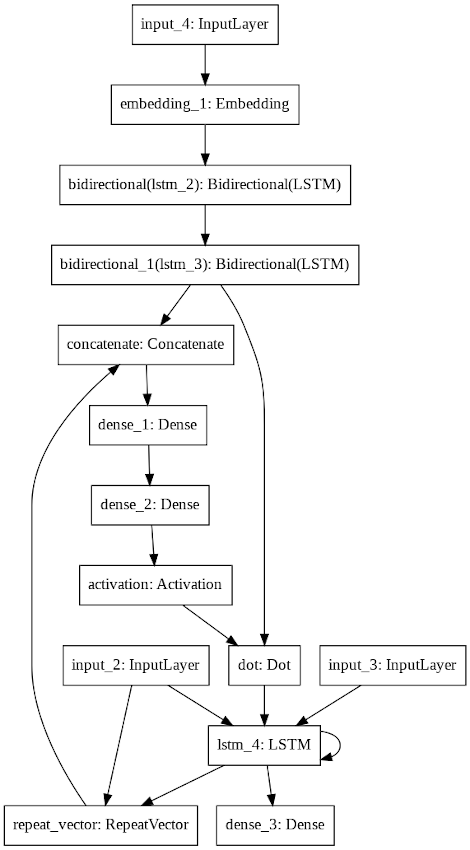

The trained embeddings are evaluated on a set of simple analogical reasoning tasks and then mapped to 2-D with the t-SNE algorithm for visualization. They are then used to perform sentiment analysis on the IMDB dataset of movie reviews, using an attention-based LSTM model with a maximum input sequence length of 300. Its architecture is shown below:
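Analogical reasoning tasks of the form "man is to king as woman is to ?" are commonly answered with vector arithmetic: compute `king - man + woman` and return the vocabulary word whose embedding has the highest cosine similarity to the result, excluding the three query words. The sketch below shows that common formulation; the exact evaluation procedure used here is not specified, and the 2-D vectors are toy values, not the trained 64-dimensional embeddings.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def analogy(a, b, c, emb):
    """Solve 'a is to b as c is to ?' by finding the word closest to emb[b] - emb[a] + emb[c]."""
    target = [eb - ea + ec for ea, eb, ec in zip(emb[a], emb[b], emb[c])]
    candidates = (w for w in emb if w not in (a, b, c))  # exclude the query words
    return max(candidates, key=lambda w: cosine(emb[w], target))

# Toy embeddings (hypothetical): dimension 0 ~ "royalty", dimension 1 ~ "gender".
emb = {
    "man":   [0.0, 1.0],
    "woman": [0.0, -1.0],
    "king":  [1.0, 1.0],
    "queen": [1.0, -1.0],
    "apple": [-1.0, 0.2],
}
print(analogy("man", "king", "woman", emb))
```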

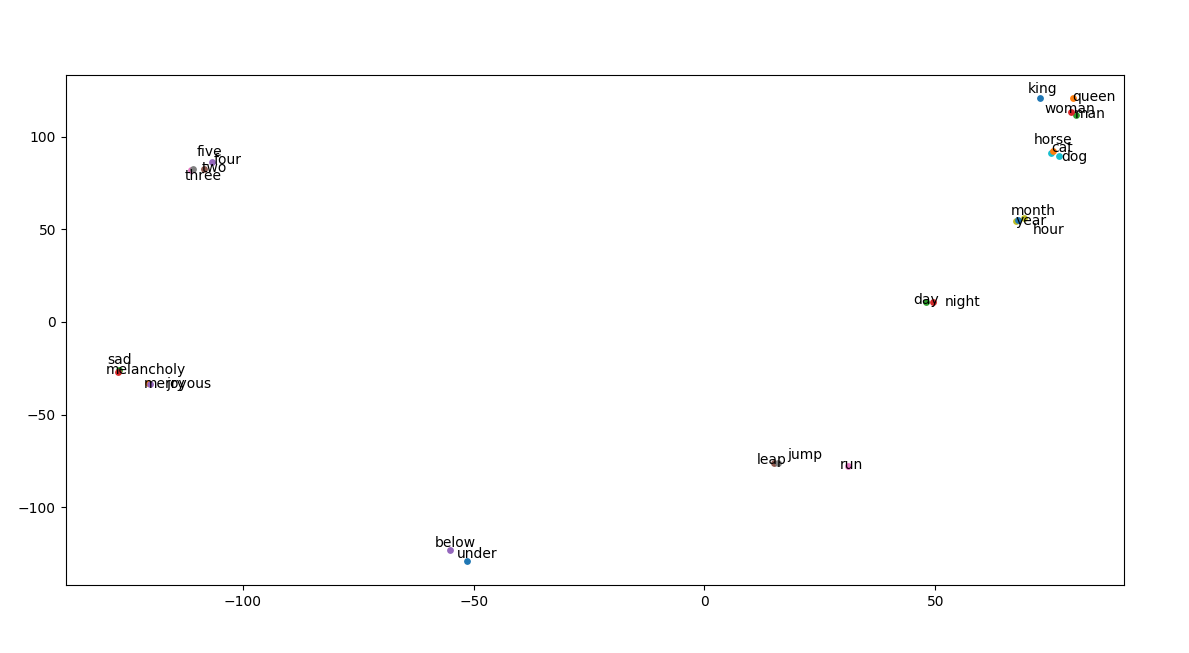
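Two pieces of the sentiment model can be sketched without a deep-learning framework: fixing every review to the maximum length of 300 tokens, and attention pooling over the LSTM's per-step outputs. The exact attention formulation is only given in the architecture diagram, so this sketch assumes simple dot-product scoring against a learned vector `w` (hypothetical), a softmax restricted to non-padding positions, and a weighted sum that yields one context vector for the classifier. The LSTM itself is omitted, and the padding id 0 is an assumption.

```python
import math

MAX_LEN = 300  # maximum input sequence length stated above
PAD_ID = 0     # assumption: id 0 is reserved for padding

def pad_or_truncate(ids, max_len=MAX_LEN, pad_id=PAD_ID):
    """Return exactly max_len ids and a mask with 1 for real tokens, 0 for padding."""
    ids = ids[:max_len]
    n_pad = max_len - len(ids)
    return ids + [pad_id] * n_pad, [1] * len(ids) + [0] * n_pad

def attention_pool(hidden_states, mask, w):
    """Dot-product attention over LSTM outputs (assumed formulation):
    score_t = h_t . w, softmax over unmasked steps, context = sum_t alpha_t * h_t.
    Assumes at least one unmasked step."""
    scores = [sum(h_i * w_i for h_i, w_i in zip(h, w)) if m else -math.inf
              for h, m in zip(hidden_states, mask)]
    top = max(scores)                               # subtract max for numerical stability
    exps = [math.exp(s - top) for s in scores]      # exp(-inf) == 0.0, so padding gets no weight
    total = sum(exps)
    alphas = [e / total for e in exps]
    dim = len(hidden_states[0])
    context = [sum(a * h[d] for a, h in zip(alphas, hidden_states)) for d in range(dim)]
    return context, alphas

ids, mask = pad_or_truncate([5, 6, 7], max_len=5)
print(ids, mask)
context, alphas = attention_pool([[1.0, 0.0], [0.0, 1.0], [9.0, 9.0]], [1, 1, 0], w=[1.0, 0.0])
print(context, alphas)
```

Masking matters because most reviews are shorter than 300 tokens; without it, the padded steps would receive attention weight and dilute the context vector.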

Here is a 2-D plot of the embeddings of some words (the high-dimensional embeddings are reduced to 2-D with the t-SNE algorithm).

As you can see, words conveying emotions, such as "sad", "melancholy", "happy" and "merry", are grouped together. Number words such as "two", "three", "four" and "five" are also grouped together. Words with similar meanings, such as "under" and "beneath", or "run", "jump" and "leap", form groups as well. Animals such as "cat", "dog" and "horse" are grouped together, as are words with temporal meanings such as "month", "year" and "hour". Finally, words such as "man", "woman", "king" and "queen" are grouped together too.
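Because t-SNE preserves local neighborhoods but can distort larger distances, the groupings seen in the plot can also be checked directly in the original embedding space by listing each word's nearest neighbors under cosine similarity. The sketch below assumes a simple dictionary from words to vectors; the 3-D vectors are toy values for illustration, not the trained embeddings.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def nearest_neighbors(word, emb, k=3):
    """Return the k words whose embeddings are most cosine-similar to emb[word]."""
    query = emb[word]
    others = [w for w in emb if w != word]
    return sorted(others, key=lambda w: cosine(emb[w], query), reverse=True)[:k]

# Toy embeddings (hypothetical): animals share one direction, time words another.
emb = {
    "cat":   [1.0, 0.1, 0.0],
    "dog":   [0.9, 0.2, 0.0],
    "horse": [0.8, 0.0, 0.1],
    "year":  [0.0, 1.0, 0.1],
    "month": [0.1, 0.9, 0.0],
}
print(nearest_neighbors("cat", emb, k=2))
print(nearest_neighbors("year", emb, k=1))
```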