IEEE Transactions on Robotics (TRO) 2023 [Paper]

Fukang Liu1,2, Fuchun Sun2, Bin Fang2, Xiang Li2, Songyu Sun3, Huaping Liu2

1Carnegie Mellon University

2Tsinghua University

3University of California, Los Angeles

If you have any questions, please let me know: Fukang Liu fukangl[at]andrew[dot]cmu[dot]edu

The implementation requires the following dependencies:

- The soft multimodal gripper builds on our previous work. For more details, see "Multimode Grasping Soft Gripper Achieved by Layer Jamming Structure and Tendon-Driven Mechanism".

- Check out this repository and download the datasets. Unzip the archive and place the unzipped contents in the /code directory.

- Run CoppeliaSim (navigate to your CoppeliaSim directory and run ./coppeliaSim.sh). From the main menu, select File > Open scene..., and open the file code/simulation/simulation-lc.ttt (lightly cluttered) or simulation-hc.ttt (highly cluttered) from this repository. Choose the Vortex physics engine for simulation. CoppeliaSim also supports other physics engines (e.g., Bullet, ODE), but Vortex works best for the simulation model of the SMG.

- In another terminal window, run the following example:

python main.py --is_sim --method 'reinforcement' --is_ets --is_pe --is_oo --explore_rate_decay

To train a Reactive Enveloping and Sucking policy (E+S Reactive) in simulation in a lightly cluttered environment, run the following:

python main.py --is_sim --method 'reactive' --is_pe --is_oo --explore_rate_decay

To train a Reactive Enveloping, Sucking, and Enveloping_then_Sucking policy (E+S+ES Reactive) in simulation in a lightly cluttered environment, run the following:

python main.py --is_sim --method 'reactive' --is_ets --is_pe --is_oo --explore_rate_decay

To train a DRL Enveloping and Sucking policy (E+S DRL) in simulation in a lightly cluttered environment, run the following:

python main.py --is_sim --method 'reinforcement' --is_pe --is_oo --explore_rate_decay

To train a DRL multimodal grasping policy (E+S+ES DRL(PE+OO)) in simulation in a lightly cluttered environment, run the following:

python main.py --is_sim --method 'reinforcement' --is_ets --is_pe --is_oo --explore_rate_decay

To train policies in a highly cluttered environment, add --is_cluttered. For example, to train a DRL multimodal grasping policy (E+S+ES DRL(PE+OO)) in simulation in a highly cluttered environment, run the following:

python main.py --is_sim --is_cluttered --method 'reinforcement' --is_ets --is_pe --is_oo --explore_rate_decay

To test your own pre-trained model, set --snapshot_file to its location. For example, to test the pre-trained E+S+ES DRL(PE+OO) model in simulation in a lightly cluttered environment, run the following:

python main.py --is_sim --method 'reinforcement' --is_ets --is_pe --is_oo --explore_rate_decay \
    --is_testing \
    --load_snapshot --snapshot_file 'YOUR-SNAPSHOT-FILE-HERE'

To test the three ablation baselines, remove --is_pe, --is_oo, or both.
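The commands in this README differ only in the --method value and in which of --is_ets, --is_pe, --is_oo, and --is_cluttered are present. As a minimal sketch, the combinations can be assembled programmatically. The VARIANTS table and the build_command helper below are hypothetical and not part of the repository; only the flags themselves are taken from the commands shown in this README.

```python
import shlex

# Policy label -> (--method value, optional flags), transcribed from the
# commands in this README. The table itself is a hypothetical convenience.
VARIANTS = {
    "E+S Reactive": ("reactive", ["--is_pe", "--is_oo"]),
    "E+S+ES Reactive": ("reactive", ["--is_ets", "--is_pe", "--is_oo"]),
    "E+S DRL": ("reinforcement", ["--is_pe", "--is_oo"]),
    "E+S+ES DRL(PE+OO)": ("reinforcement", ["--is_ets", "--is_pe", "--is_oo"]),
    "E+S+ES DRL(PE)": ("reinforcement", ["--is_ets", "--is_pe"]),
    "E+S+ES DRL(OO)": ("reinforcement", ["--is_ets", "--is_oo"]),
    "E+S+ES DRL": ("reinforcement", ["--is_ets"]),
}


def build_command(variant, cluttered=False, snapshot_file=None):
    """Return the argument list for one policy variant (hypothetical helper).

    cluttered=True adds --is_cluttered (highly cluttered scene).
    Passing snapshot_file adds the testing flags used in this README.
    """
    method, flags = VARIANTS[variant]
    args = ["python", "main.py", "--is_sim"]
    if cluttered:
        args.append("--is_cluttered")
    args += ["--method", method, *flags, "--explore_rate_decay"]
    if snapshot_file is not None:
        args += ["--is_testing", "--load_snapshot",
                 "--snapshot_file", snapshot_file]
    return args


if __name__ == "__main__":
    # Render the highly cluttered training command for display.
    print(shlex.join(build_command("E+S+ES DRL(PE+OO)", cluttered=True)))
```

The returned list can be passed to subprocess.run from the directory that contains main.py. shlex.join is used only to render the command for display, so the method name appears without the quotes used in this README; the shell treats both forms identically.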

To test a DRL multimodal grasping policy that executes actions without preenveloping or orientation optimization (E+S+ES DRL) in simulation in a lightly cluttered environment, remove both --is_pe and --is_oo:

python main.py --is_sim --method 'reinforcement' --is_ets --explore_rate_decay \
    --is_testing \
    --load_snapshot --snapshot_file 'YOUR-SNAPSHOT-FILE-HERE'

To test a DRL multimodal grasping policy that executes actions with only preenveloping (E+S+ES DRL(PE)) in simulation in a lightly cluttered environment, remove --is_oo:

python main.py --is_sim --method 'reinforcement' --is_ets --is_pe --explore_rate_decay \
    --is_testing \
    --load_snapshot --snapshot_file 'YOUR-SNAPSHOT-FILE-HERE'

To test a DRL multimodal grasping policy that executes actions with only orientation optimization (E+S+ES DRL(OO)) in simulation in a lightly cluttered environment, remove --is_pe:

python main.py --is_sim --method 'reinforcement' --is_ets --is_oo --explore_rate_decay \
    --is_testing \
    --load_snapshot --snapshot_file 'YOUR-SNAPSHOT-FILE-HERE'

If you find the code or gripper design useful, please cite:

@article{liu2023hybrid,

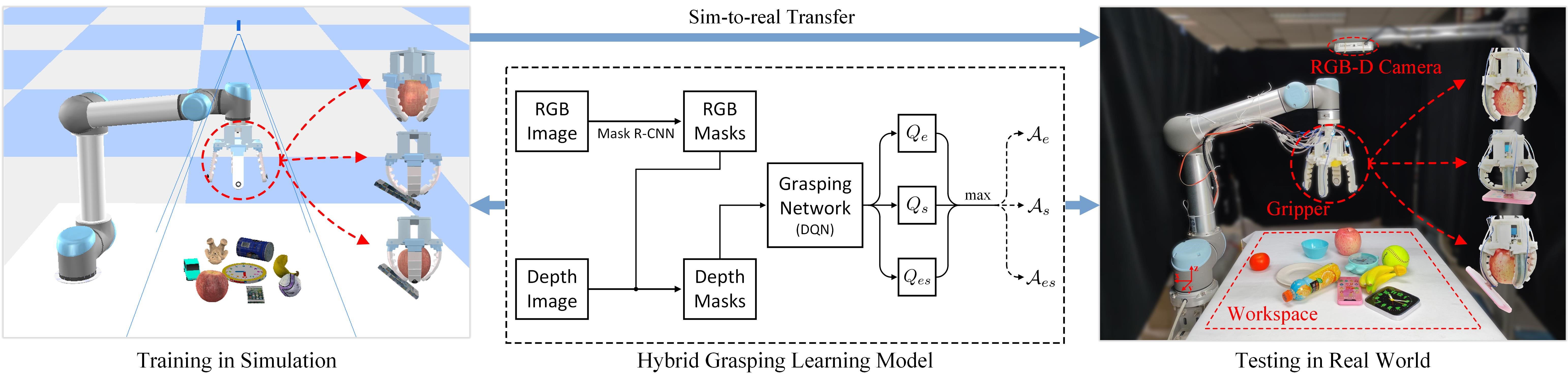

title={Hybrid Robotic Grasping with a Soft Multimodal Gripper and a Deep Multistage Learning Scheme},

author={Liu, Fukang and Fang, Bin and Sun, Fuchun and

Li, Xiang and Sun, Songyu and Liu, Huaping},

journal={IEEE Transactions on Robotics},

year={2023},

publisher={IEEE}

}

and

@article{fang2022multimode,

title={Multimode grasping soft gripper achieved by layer jamming structure and tendon-driven mechanism},

author={Fang, Bin and Sun, Fuchun and Wu, Linyuan and Liu, Fukang and

Wang, Xiangxiang and Huang, Haiming and Huang, Wenbing and Liu, Huaping and Wen, Li},

journal={Soft Robotics},

volume={9},

number={2},

pages={233--249},

year={2022}

}

This code was developed using visual-pushing-grasping.