This repository contains the source code to run PyDNet on mobile devices (Android and iOS).

If you use this code in your projects, please cite our paper:

    @inproceedings{pydnet18,
      title     = {Towards real-time unsupervised monocular depth estimation on CPU},
      author    = {Poggi, Matteo and
                   Aleotti, Filippo and
                   Tosi, Fabio and
                   Mattoccia, Stefano},
      booktitle = {IEEE/RSJ Conference on Intelligent Robots and Systems (IROS)},
      year      = {2018}
    }

More info about the work can be found at these links:

The network has been trained on the MatterPort dataset for 1.2M steps, using the BerHu loss with the Microsoft Kinect depth labels provided by the dataset as supervision.
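The BerHu (reverse Huber) loss behaves like L1 for small residuals and like a scaled L2 for large ones: B(x) = |x| if |x| ≤ c, and (x² + c²) / (2c) otherwise. The following is a minimal, framework-free sketch of that loss, not the code used to train this model. It assumes that the threshold c is 20% of the largest absolute residual in the batch (a common choice in the literature, not confirmed for PyDNet) and that non-positive ground-truth depths mark missing Kinect readings. The function name `berhu_loss` and its `threshold_ratio` parameter are hypothetical.

```python
def berhu_loss(predictions, targets, threshold_ratio=0.2):
    """Mean BerHu (reverse Huber) loss between predicted and ground-truth depths.

    Illustrative sketch, not the PyDNet training code. Assumptions:
    - the threshold c is threshold_ratio times the largest absolute residual
      among valid pixels (0.2 is a common choice, assumed here);
    - ground-truth depths <= 0 are missing sensor readings and are ignored.
    """
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    if threshold_ratio <= 0:
        raise ValueError("threshold_ratio must be positive")

    # Absolute residuals on pixels with a valid (positive) depth label only.
    residuals = [abs(p - t) for p, t in zip(predictions, targets) if t > 0]
    if not residuals:
        raise ValueError("no valid ground-truth depth values")

    # Adaptive threshold: a fraction of the largest residual in the batch.
    c = threshold_ratio * max(residuals)

    total = 0.0
    for r in residuals:
        if r <= c:
            total += r  # L1 branch: small residuals
        else:
            total += (r * r + c * c) / (2.0 * c)  # scaled L2 branch: large residuals
    return total / len(residuals)


# Usage: a perfect prediction gives zero loss.
print(berhu_loss([1.0, 2.0, 3.0], [1.0, 2.0, 3.0]))  # 0.0
```

Both branches evaluate to c at |x| = c, so the loss is continuous. The L1 part keeps small errors from vanishing in the gradient, while the L2 part penalises large depth errors more strongly.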

The Android code is based on Google's Android examples.

The Android target SDK version is 26 (Android 8.0), while the minimum SDK version is 21 (Android 5.0). Android Studio is required.

Currently, we use tensorflow-android instead of TensorFlow Lite, and GPU optimisation is not supported.

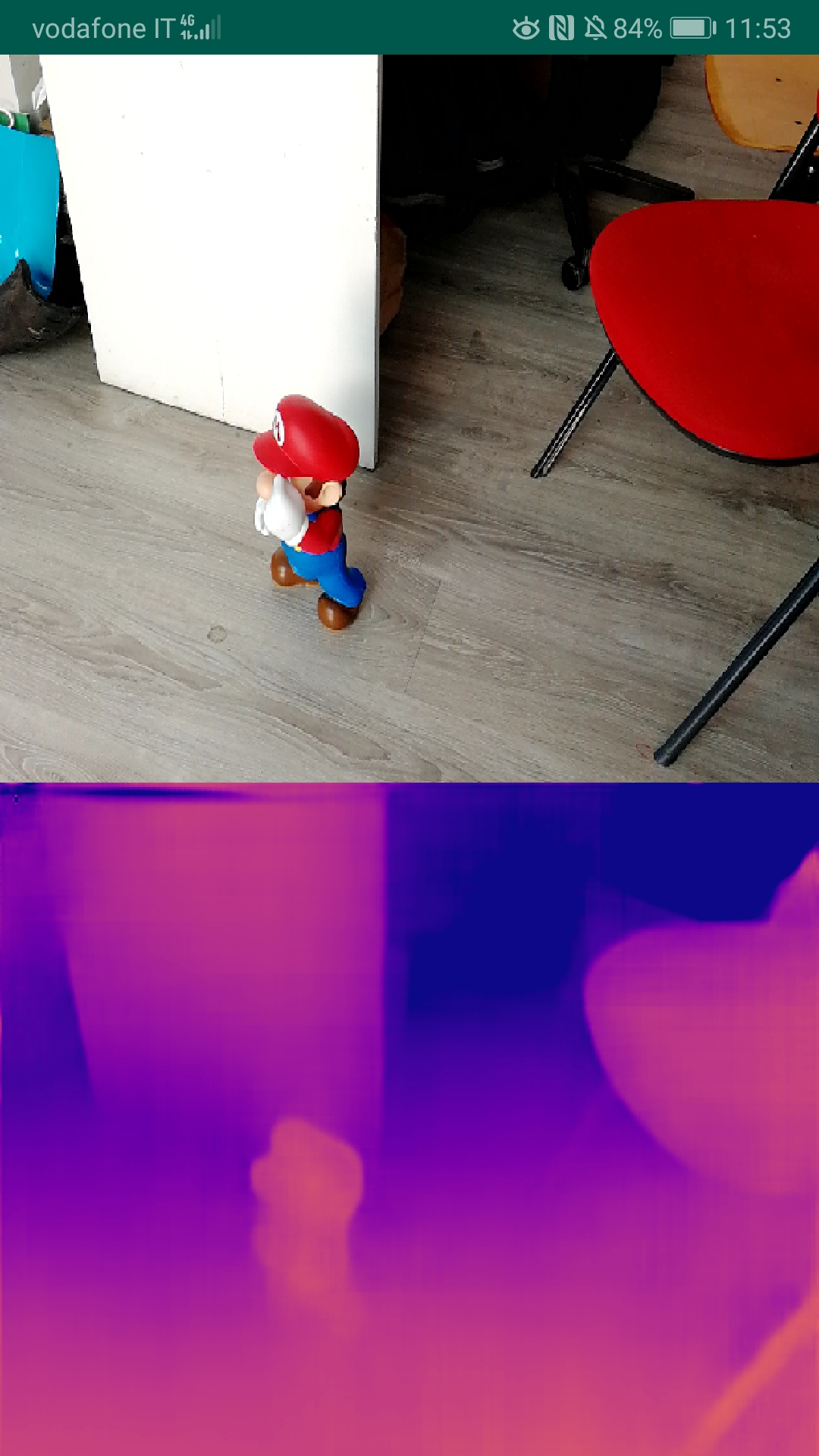

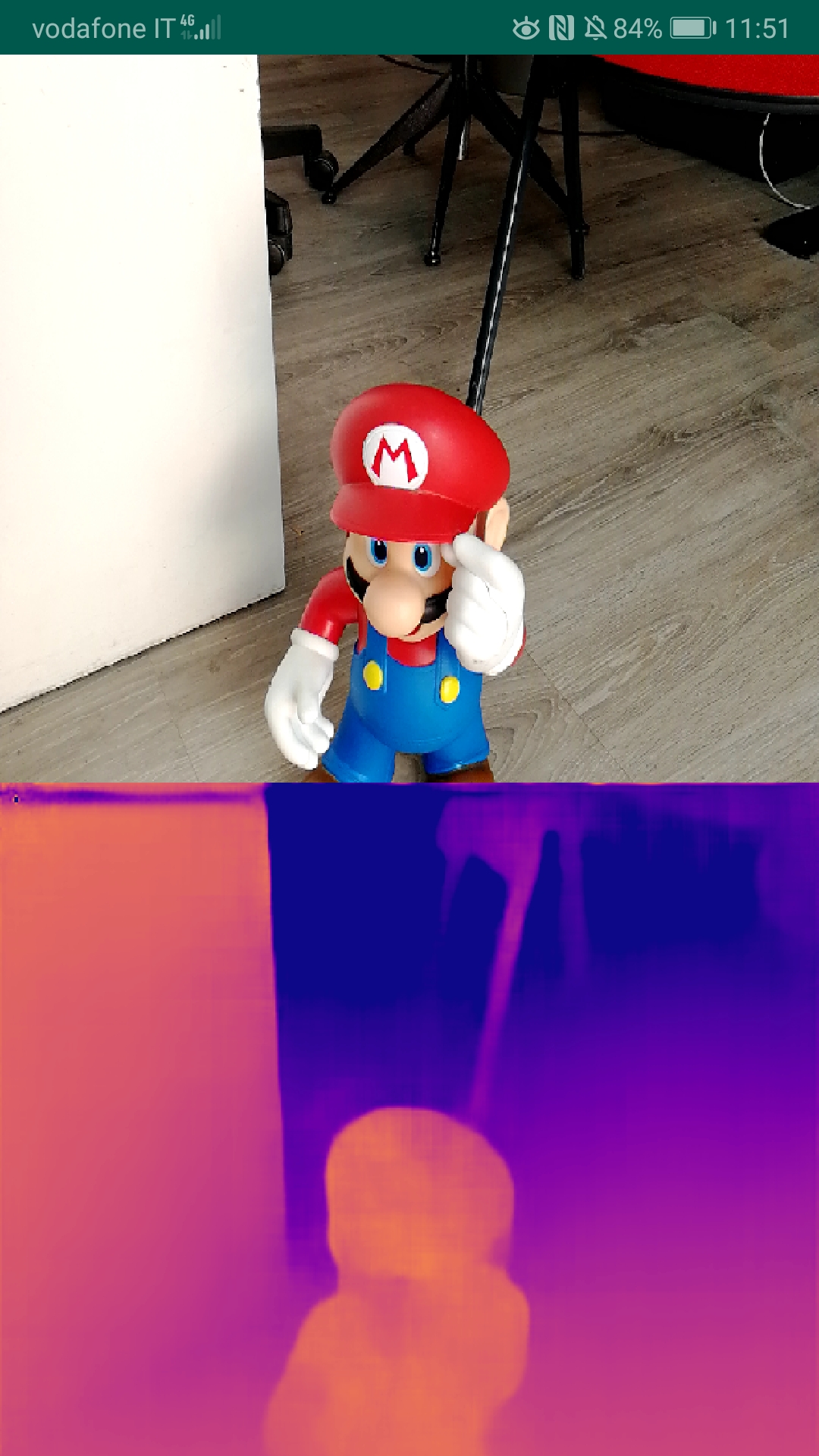

Mode: portrait

The demo on iOS has been developed by Giulio Zaccaroni.

Xcode is required to build the app. You also need to sign in with your Apple ID and trust yourself as a certified developer.

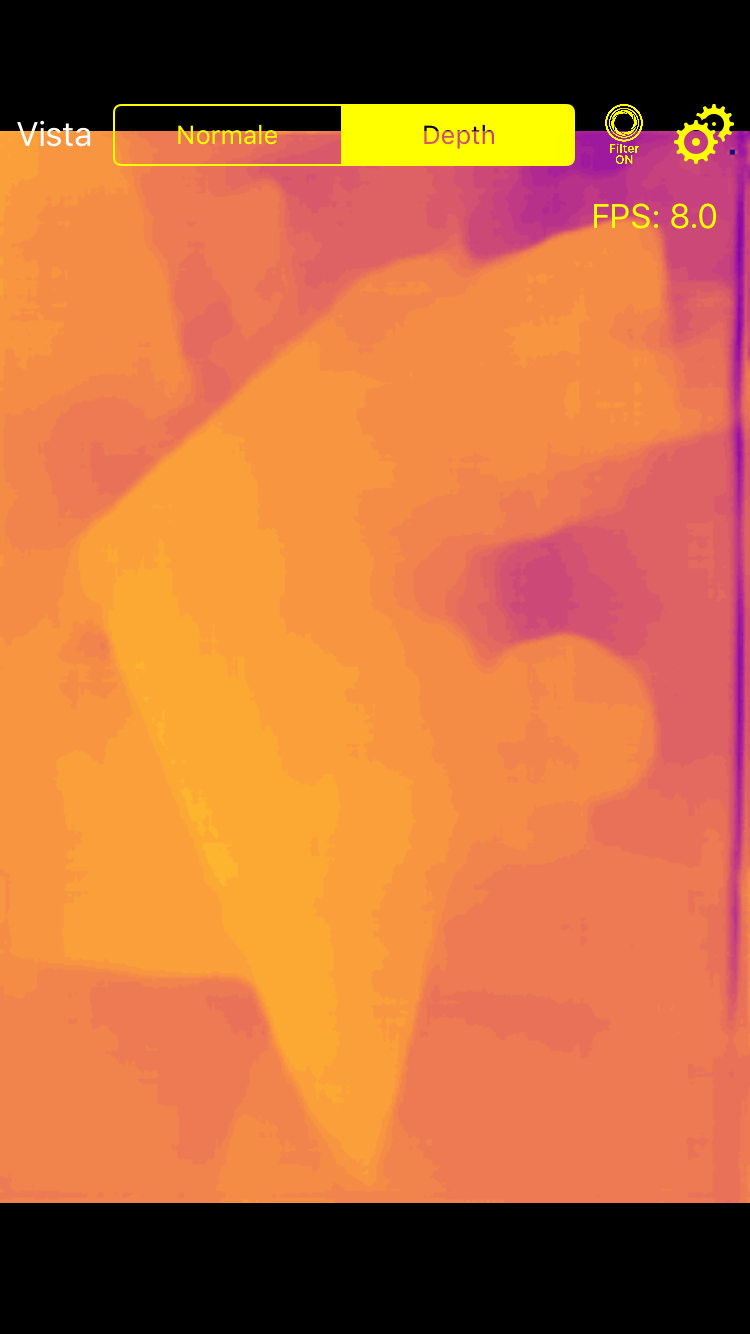

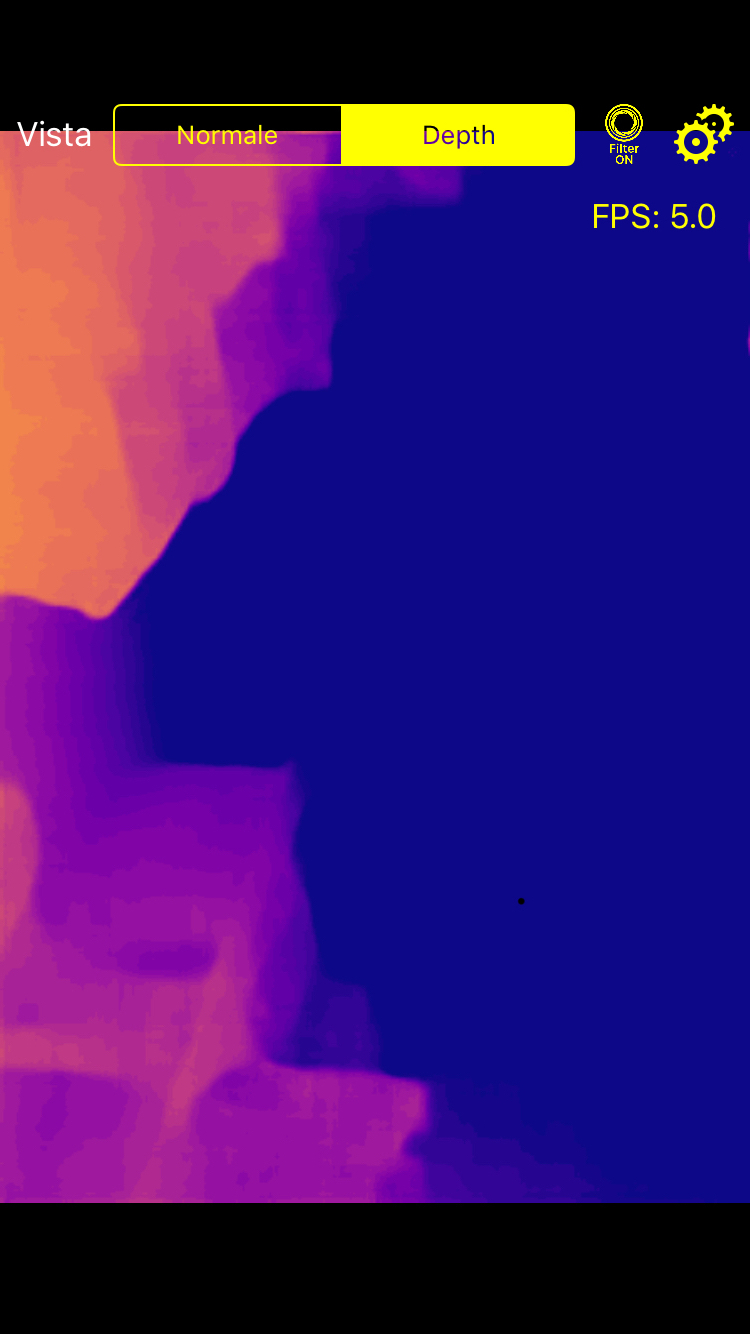

Mode: landscape

The code provided in this repository is for demonstrative and academic purposes only. Even though we released this code under the Apache License v2, models pre-trained on specific datasets may not be used for purposes not covered by those datasets' licenses.

- MatterPort’s license