Implementations of several reinforcement learning algorithms, tested on OpenAI Gym environments.

These algorithms are implemented in this repo:

They are tested on the following environments:

- CartPole

- Pendulum

- Acrobot

- Lunar Lander Continuous

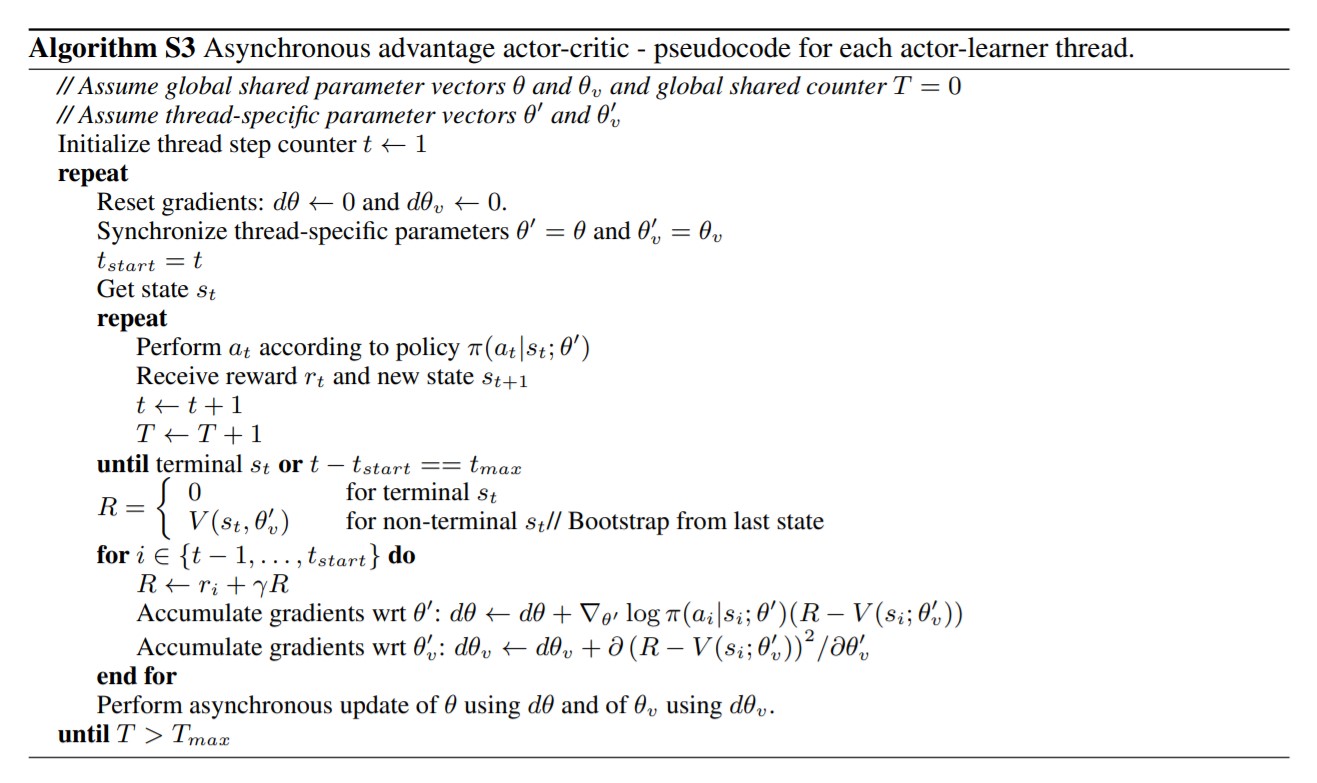

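A2C (Advantage Actor-Critic) is an on-policy, model-free reinforcement learning algorithm. Here is the pseudocode for A3C, which is nearly identical to A2C: A2C is the synchronous variant, waiting for all workers to finish before each update instead of applying their updates asynchronously.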
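The quantities A2C computes from one rollout can be sketched in a few lines. This is a minimal sketch, assuming a single worker and that the log-probabilities, value estimates and entropies have already been produced by the actor and critic networks; the function names and the `value_coef`/`entropy_coef` weights are illustrative assumptions, not this repo's code. Plain Python floats stand in for framework tensors so the arithmetic is easy to follow.

```python
from typing import List, Tuple


def discounted_returns(rewards: List[float], dones: List[bool],
                       bootstrap_value: float, gamma: float = 0.99) -> List[float]:
    """n-step returns R_t = r_t + gamma * R_{t+1}, bootstrapped from V(s_n).

    dones[t] is True if the episode ended after step t; the running return is
    then reset so nothing leaks across episode boundaries.
    """
    returns = [0.0] * len(rewards)
    running = bootstrap_value
    for t in reversed(range(len(rewards))):
        if dones[t]:
            running = 0.0
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


def a2c_losses(log_probs: List[float], values: List[float], returns: List[float],
               entropies: List[float], value_coef: float = 0.5,
               entropy_coef: float = 0.01) -> Tuple[float, float, float]:
    """Return (actor_loss, critic_loss, total_loss), averaged over the rollout.

    Hypothetical helper: the coefficient defaults are illustrative only.
    """
    n = len(returns)
    # Advantage A_t = R_t - V(s_t); in a real framework it is detached for the
    # actor term so the policy-gradient loss does not train the critic.
    advantages = [r - v for r, v in zip(returns, values)]
    # Policy-gradient term: minimize -log pi(a_t | s_t) * A_t.
    actor_loss = -sum(lp * adv for lp, adv in zip(log_probs, advantages)) / n
    # Critic: mean squared error between V(s_t) and the n-step return.
    critic_loss = sum(adv ** 2 for adv in advantages) / n
    # Entropy bonus is subtracted, so a more random policy lowers the total loss.
    mean_entropy = sum(entropies) / n
    total = actor_loss + value_coef * critic_loss - entropy_coef * mean_entropy
    return actor_loss, critic_loss, total
```

For example, rewards `[1.0, 1.0, 1.0]` with no terminal step, a bootstrap value of `0.0` and `gamma=0.5` give returns `[1.75, 1.5, 1.0]`.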

DDPG (Deep Deterministic Policy Gradient) is an off-policy, model-free reinforcement learning algorithm for continuous action spaces. Here is the pseudocode for DDPG:

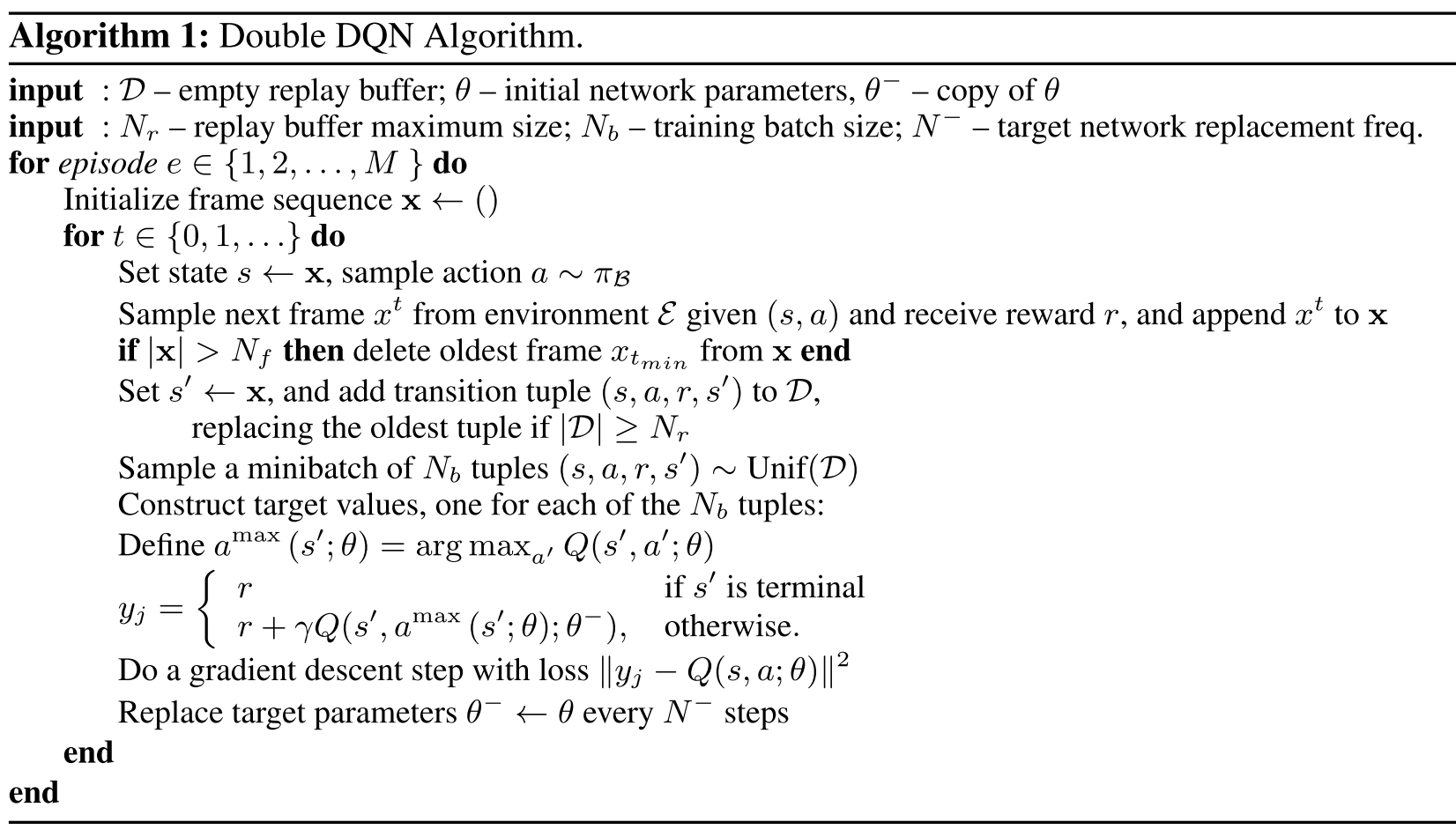
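Two steps of DDPG are easy to get subtly wrong: the critic's bootstrapped target and the soft (Polyak) update of the target networks. A minimal sketch of both, assuming the target actor μ′ and target critic Q′ are passed in as plain callables and that network weights are represented as flat lists of floats (a simplification for illustration); the `gamma` and `tau` defaults are common choices, not necessarily this repo's settings.

```python
from typing import Callable, List, Sequence

State = Sequence[float]
Action = Sequence[float]


def ddpg_critic_targets(rewards: List[float], next_states: List[State], dones: List[bool],
                        target_actor: Callable[[State], Action],
                        target_critic: Callable[[State, Action], float],
                        gamma: float = 0.99) -> List[float]:
    """y_i = r_i + gamma * (1 - d_i) * Q'(s'_i, mu'(s'_i))."""
    targets = []
    for r, s_next, done in zip(rewards, next_states, dones):
        # The deterministic target policy supplies the next action,
        # so no max over actions is needed (unlike DQN).
        q_next = target_critic(s_next, target_actor(s_next))
        targets.append(r + gamma * (0.0 if done else q_next))
    return targets


def soft_update(target_weights: List[float], online_weights: List[float],
                tau: float = 0.005) -> List[float]:
    """theta' <- tau * theta + (1 - tau) * theta' (Polyak averaging).

    Assumption: weights are flattened into plain lists for this sketch.
    """
    return [tau * w + (1.0 - tau) * tw for tw, w in zip(target_weights, online_weights)]
```

A small `tau` makes the target networks trail the online networks slowly, which keeps the critic's regression target from shifting on every step.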

Double DQN is an off-policy, model-free reinforcement learning algorithm. Here is the pseudocode for Double DQN:

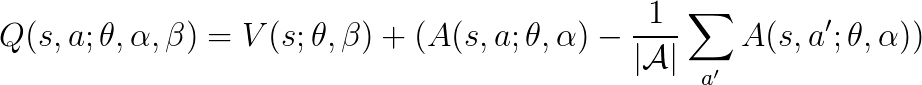
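What Double DQN changes relative to DQN is the target: the online network selects the greedy next action and the target network evaluates it, which reduces the overestimation that comes from taking a max over noisy estimates. A minimal sketch, assuming the Q-values for each next state are already available as lists (one entry per discrete action) from the online and target networks; the function name is hypothetical.

```python
from typing import List


def double_dqn_targets(rewards: List[float], dones: List[bool],
                       q_online_next: List[List[float]],
                       q_target_next: List[List[float]],
                       gamma: float = 0.99) -> List[float]:
    """y = r + gamma * (1 - done) * Q_target(s', argmax_a Q_online(s', a))."""
    targets = []
    for r, done, q_on, q_tg in zip(rewards, dones, q_online_next, q_target_next):
        # Action selection uses the online network...
        best_action = max(range(len(q_on)), key=lambda a: q_on[a])
        # ...while evaluation of that action uses the target network.
        bootstrap = 0.0 if done else q_tg[best_action]
        targets.append(r + gamma * bootstrap)
    return targets
```

With `q_online_next=[[0.0, 5.0, 1.0]]` and `q_target_next=[[10.0, 2.0, 7.0]]`, the bootstrap value is `2.0` (the target network's estimate for action 1), whereas a plain DQN max over the target network would use `10.0`.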

Dueling DQN builds on DDQN (Wang et al. train it with the Double DQN target) but changes the network architecture: it contains two separate estimators, one for the state value function and one for the state-dependent action advantage function.

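The Q-value is recovered from the two streams by subtracting the mean advantage, where $|\mathcal{A}|$ is the number of actions:

$$Q(s, a; \theta, \alpha, \beta) = V(s; \theta, \beta) + \left( A(s, a; \theta, \alpha) - \frac{1}{|\mathcal{A}|} \sum_{a'} A(s, a'; \theta, \alpha) \right)$$

where: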

- θ denotes the parameters of the layers shared by both streams.

- α parameterizes the output stream for the advantage function A.

- β parameterizes the output stream for the value function V.
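The aggregation step of the dueling head is only a few lines. A minimal sketch of the mean-subtracted aggregation used by Wang et al., assuming the two streams have already produced a scalar V(s) and a list of advantages A(s, ·) for a single state; in a real network this would be a layer applied to batched tensors, and the function name is hypothetical.

```python
from typing import List


def dueling_q_values(value: float, advantages: List[float]) -> List[float]:
    """Q(s, a) = V(s) + (A(s, a) - mean_a' A(s, a')).

    Without the mean subtraction, V(s) + c and A(s, .) - c would produce the
    same Q for any constant c, so the two streams would not be identifiable.
    """
    mean_adv = sum(advantages) / len(advantages)
    return [value + (a - mean_adv) for a in advantages]
```

Shifting every advantage by the same constant leaves the output unchanged: both `[1.0, 2.0, 3.0]` and `[11.0, 12.0, 13.0]` with `V(s) = 10.0` yield Q-values `[9.0, 10.0, 11.0]`.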

TD3 (Twin Delayed DDPG) is an off-policy, model-free reinforcement learning algorithm that extends DDPG. Here is the pseudocode for TD3:
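TD3 changes DDPG in three ways: clipped double-Q learning (the target uses the smaller of two target critics), target policy smoothing (clipped Gaussian noise added to the target action), and delayed policy updates. A minimal sketch of the target for one transition, assuming a scalar state and a one-dimensional action bounded in `[-max_action, max_action]`, with the target networks passed in as callables; the noise defaults (0.2, clipped at 0.5) and delay of 2 follow the TD3 paper, but treat them as illustrative rather than this repo's settings.

```python
import random
from typing import Callable, Optional


def td3_target(reward: float, next_state: float, done: bool,
               target_actor: Callable[[float], float],
               target_critic_1: Callable[[float, float], float],
               target_critic_2: Callable[[float, float], float],
               gamma: float = 0.99, policy_noise: float = 0.2,
               noise_clip: float = 0.5, max_action: float = 1.0,
               rng: Optional[random.Random] = None) -> float:
    """y = r + gamma * (1 - d) * min_i Q'_i(s', clip(mu'(s') + clip(eps, -c, c))).

    Assumption: scalar state and action to keep the sketch readable.
    """
    if rng is None:
        rng = random.Random()
    # Target policy smoothing: Gaussian noise, clipped, then the action is clipped to its bounds.
    noise = max(-noise_clip, min(noise_clip, rng.gauss(0.0, policy_noise)))
    next_action = max(-max_action, min(max_action, target_actor(next_state) + noise))
    # Clipped double-Q: take the smaller of the two target critics' estimates.
    q_next = min(target_critic_1(next_state, next_action),
                 target_critic_2(next_state, next_action))
    return reward + gamma * (0.0 if done else q_next)


def should_update_actor(step: int, policy_delay: int = 2) -> bool:
    """Delayed policy updates: actor and target networks update every `policy_delay` critic steps."""
    return step % policy_delay == 0
```

Taking the minimum of two critics counters the overestimation bias that DDPG's single critic is prone to, while the smoothing noise keeps the target from exploiting narrow peaks in the critic.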

- Reinforcement Learning - Goal Oriented Intelligence

- Reinforcement Learning by Sentdex

- OpenAI's Spinning Up Docs

- Wang et al., Dueling Network Architectures for Deep Reinforcement Learning

- Dueling Deep Q Networks

- Deriving Policy Gradients and Implementing REINFORCE

- Understanding Actor Critic Methods and A2C

- Keras DDPG Example