A startup called Sparkify wants to analyze the data they’ve been collecting on songs and user activity on their new music streaming app. The analytics team is particularly interested in understanding what songs users are listening to.

Currently, they don’t have an easy way to query their data, which resides in a directory of JSON logs on user activity on the app and a directory with JSON metadata on the songs in their app. They’d like to create a Postgres database with tables designed to optimize queries on song play analysis.

In this project, I will:

- Create a star schema: define fact and dimension tables for analytic focus.

- Write an ETL pipeline: transfer data from files in two local directories into tables in Postgres.

- Test the database and ETL pipeline.

- Do analyses on the song plays.

This repo consists of seven files and two directories:

- data directory contains two sub-directories:

- song_data is a subset of real data from the Million Song Dataset. Each file is in JSON format and contains metadata about a song and the artist of that song.

- log_data consists of log files in JSON format generated by event simulator simulating activity logs from Sparkify music streaming app.

- create_table.py drops and creates the database

sparkifydbwith its tables. This file is used to reset the database before running ETL scripts. - etl.py reads and processes files from

datadirectory and loads them into tables. - sql_queries.py contains all SQL queries and is imported to

create_table.py,etl.py, andetl.ipynb. - etl.ipynb reads and processes a single file from

song_dataandlog_dataand loads the data into tables. - test.ipynb displays the first few rows of each table to check the database.

- dashboard.ipynb generates statistics and analytic graphs on the database.

- images directory contains all images generated from

dashboard.ipynb - README.md (this file) gives a summary of the project and an explanation of designing the schema and programming. It also provides analyses on song plays.

Designing the database sparkifydb in PostgreSQL, the Sparkify team has two objectives:

- Load data from JSON files into tables to ease query data.

- Tables designed to optimize queries on song play analysis.

Each file in song_data contains metadata about the song and artist of that song. This data, therefore, is stored in two separate tables:

- artists (artist_id, name, location, latitude, longitude):

- artist_id is the primary key of this table as it's unique to each artist.

- Since the value of artist_id in JSON file is text, its data type is VARCHAR.

- songs (song_id, artist_id, title, year, duration):

- song_id is the primary key as it's unique to each song.

- artist_id is foreign key is to link to the table artists.

Each file in log_data contains data about the user and the song, the time, the location, the browser, etc., when he uses the app. This data, therefore, is stored in two separate tables:

- users (user_id, first_name, last_name, gender, level):

- user_id is the primary key, it's unique to each user.

- Since the value of user_id in JSON file is a number, its data type is INT

- songplays (songplay_id, user_id, start_time, song_id, session_id, location, user_agent):

- songplay_id is the primary key. It doesn't exists in JSON file, so its data type is set to SERIAL to ease inserting data.

- user_id, song_id are foreign keys to link to tables users, songs

This design of four tables satisfies 3NF, but it limits flexibility and doesn't optimize song play analysis queries.

For example, to answer the question "which is the most favorite artist in the app?" we need to join three tables songplays, songs, and artists. Another example, to answer "which type of user is more active?" we need to join two tables songplays and users.

Therefore, to optimize queries on song play analysis, we do denormalization.

- add two more columns level and artist_id to the table songplays.

- break down start_time into specific units: day, month, year, hour, week, weekday. They are stored in a new table time (start_time, day, month, year, hour, week, weekday).

Finally, we have a database schema optimized on queries on song play in the figure below.

Foreign keys are required NOT NULL. Those are fields:

- artist_id of the table songs

- start_time, user_id, song_id, artist_id of the table songplays. However, to ease the tests, I let song_id and artist_id are IS NULL.

The user level indicates the type of user, free or paid, hence the column level of the table users and table songplays are also NOT NULL.

Since the data is loaded in from files, there may be a conflict at tables songs, artists, and time on its primary key if that user, song, artist, and time have been added earlier. We need, therefore, to set do nothing if having conflict when inserting data. For example,

song_table_insert = ("""

INSERT INTO songs(song_id, title, artist_id, year, duration)

VALUES(%s, %s, %s, %s, %s)

ON CONFLICT(song_id)

DO NOTHING;

""")Since users might change from free to paid and vice versa, we update the column level for users table for the existing records.

user_table_insert = ("""

INSERT INTO users(user_id, first_name, last_name, gender, level)

VALUES(%s, %s, %s, %s, %s)

ON CONFLICT(user_id)

DO UPDATE

SET level = EXCLUDED.level;

""")The primary key of table songplays is an auto-increment field, so there's no conflict when inserting data. But I may have duplicate data. We need to remove duplicates when inserting data.

From my perspective, two records are duplicated if they have the same values in all fields except songplay_id.

# insert songplay records

songplay_data = list()

for index, row in df.iterrows():

# get songid and artistid from song and artist tables

cur.execute(song_select, (row.song, row.artist, row.length))

results = cur.fetchone()

if results:

songid, artistid = results

else:

songid, artistid = None, None

songplay_data.append((

pd.to_datetime(row.ts, unit='ms'), row.userId,

row.level, songid, artistid, row.sessionId,

row.location, row.userAgent

))

# remove duplicates

songplay_data = list(set(songplay_data))

cur.executemany(songplay_table_insert, songplay_data)The scripts will connect to PostgreSQL at the address 127.0.0.1 by using the username student and password student. The user student has to have permission to create a database. You also must have a database named studentdb on your system.

Following these steps below to test the program:

- Create the database

sparkifydb: run the scriptcreate_tables.py. Attention: This script will drop your databasesparkifydbif it exists. - Import data from the directory

datainto the database:- Run the script

etl.pyif you want to load data from all JSON files into tables. - Run the notebook

etl.ipynbif you want to load data from one JSON file insong_dataand one JSON file inlog_datainto tables.

- Run the script

- After creating the database and importing data, you're free to run notebooks:

- The notebook

dashboard.ipynbprovides a general statistic on tables and gives some analytic graphs on song plays. - The notebook

test.ipynbdisplays 5 rows of each table.

- The notebook

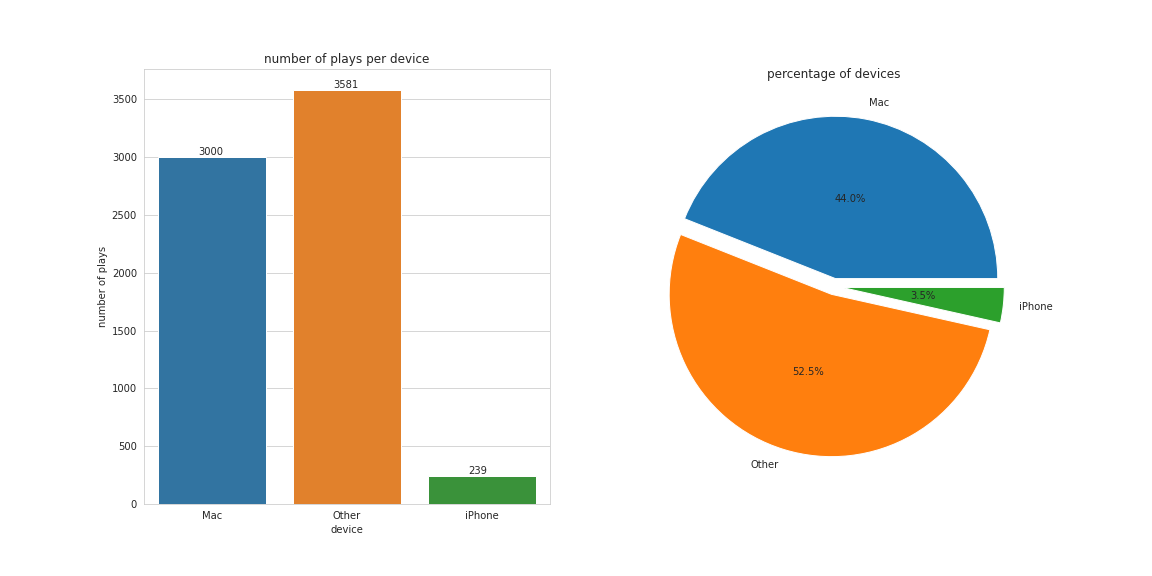

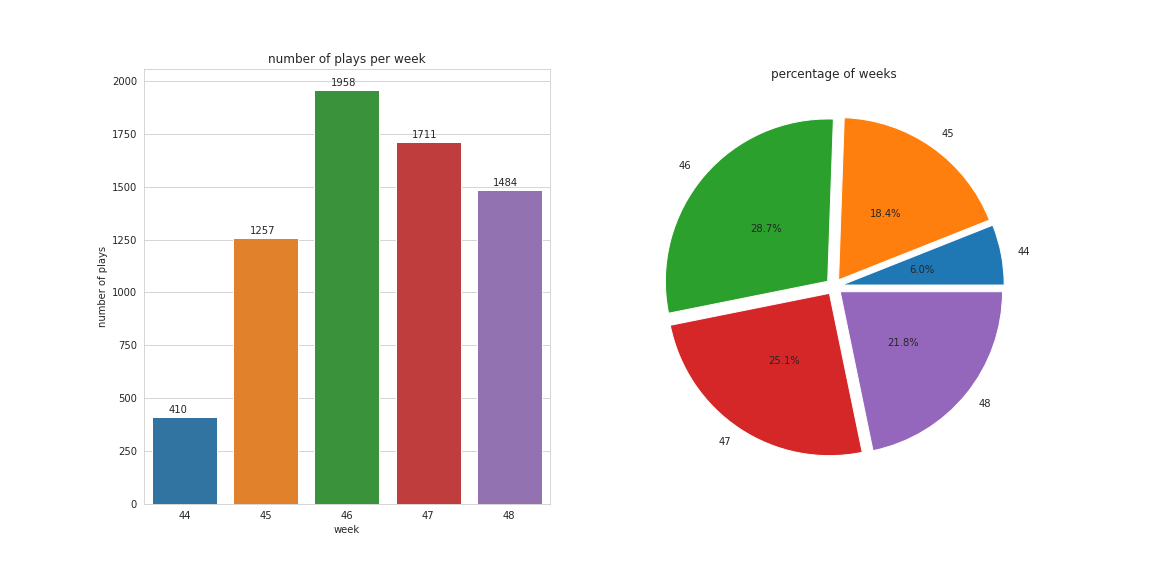

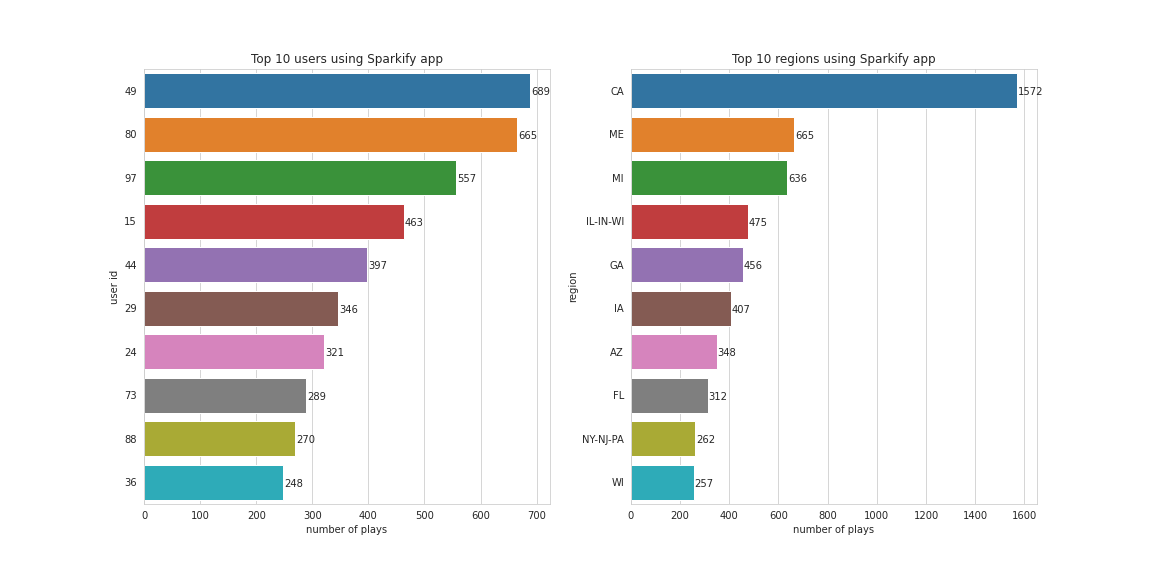

These statistics are realized after loading all files in data into the database.

- Total songplays = 6820

- Total users = 96

- Total artists = 69

- Total songs = 71

These analyses are realised after loading all files in data into the database.

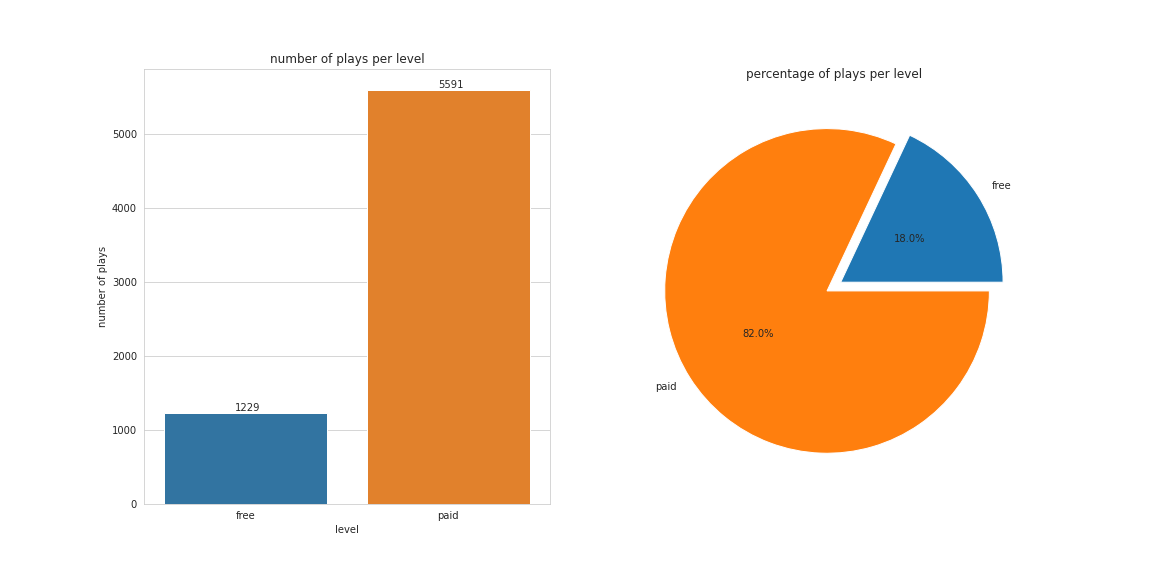

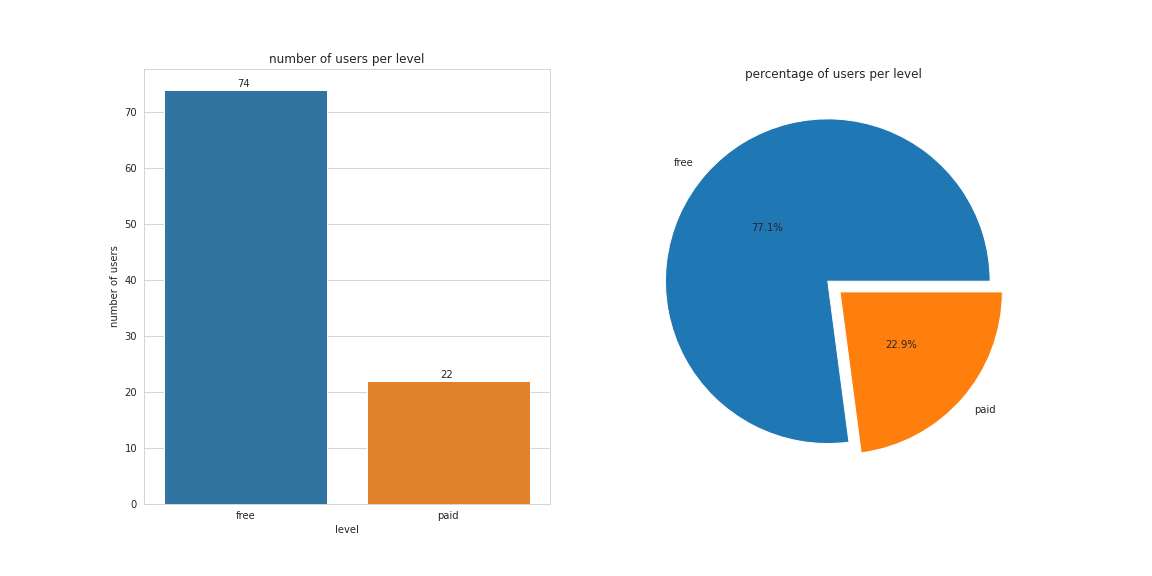

SELECT level, count(songplay_id)

FROM songplays

GROUP BY level;SELECT level, count(user_id)

FROM users

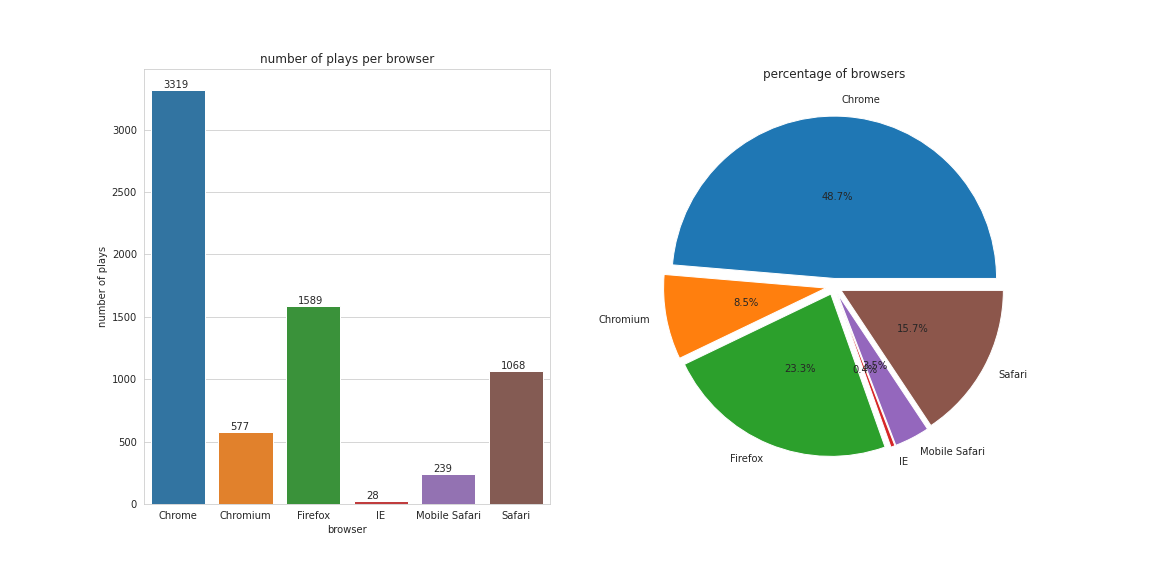

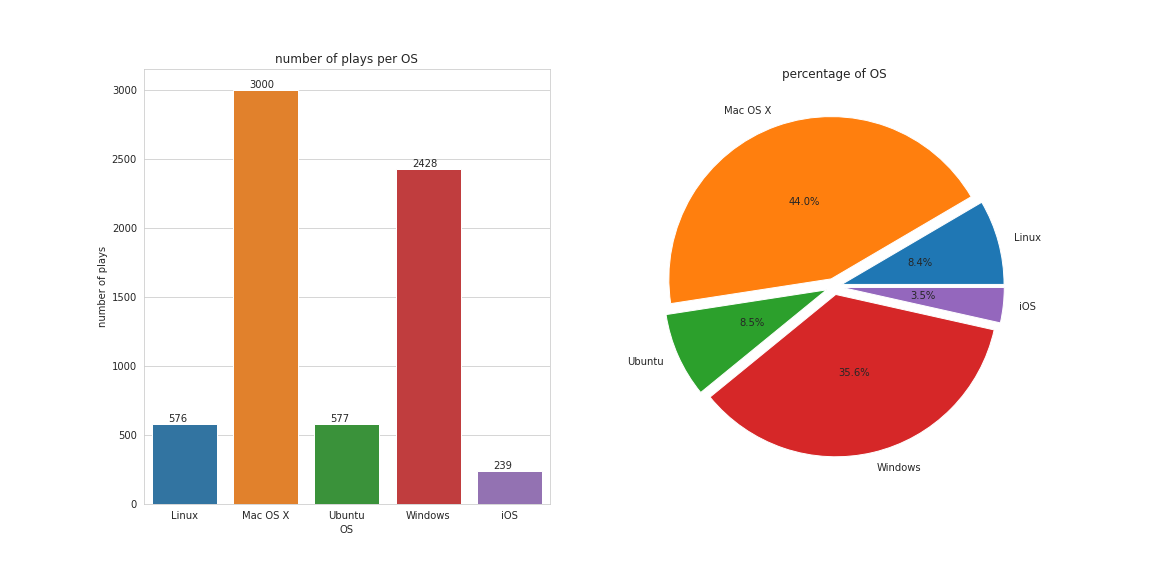

GROUP BY level;SELECT user_agent, count(songplay_id)

FROM songplays

GROUP BY user_agent;SELECT week, count(songplay_id)

FROM songplays

JOIN time ON songplays.start_time = time.start_time

GROUP BY week

ORDER BY week;SELECT user_id, count(songplay_id) AS plays

FROM songplays

GROUP BY user_id

ORDER BY plays DESC

LIMIT 10;SELECT REVERSE(TRIM(SPLIT_PART(REVERSE(location), ',', 1))) AS region, count(songplay_id) AS plays

FROM songplays

GROUP BY region

ORDER BY plays DESC

LIMIT 10;