Entity-oriented PPO Demo

This is a demo of "entity-oriented" deep reinforcement learning (name is inspired by "object-oriented programming").

Frustrated that you can't describe your game's observation and action space using gym.spaces.Discrete for RL libraries? Entity-oriented RL comes to the rescue: it gives game practitioners a much more intuitive observation and action space API. Specifically, entity-oriented RL lets game practitioners describe the entities in the game using JSON-like syntax, as follows:

entity-ppo-demo/mine_sweeper_demo.py, lines 87 to 116 in 25d7f6e
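As a rough illustration of what a JSON-like entity description can look like, here is a minimal, self-contained sketch. It does not use the entity-gym API: EntityType, OBS_SPACE, validate_observation, and the entity and feature names are all hypothetical stand-ins. The point it shows is that each entity type declares a fixed list of features, while the number of entities of each type can vary from one observation to the next.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class EntityType:
    """Hypothetical stand-in for an entity definition: a list of named features."""
    features: List[str]


# Hypothetical observation space for a minesweeper-like game.
# Each entity type lists the features every entity of that type carries.
OBS_SPACE: Dict[str, EntityType] = {
    "Mine": EntityType(features=["x_pos", "y_pos"]),
    "Robot": EntityType(features=["x_pos", "y_pos"]),
}


def validate_observation(
    obs: Dict[str, List[List[float]]],
    obs_space: Dict[str, EntityType],
) -> Dict[str, int]:
    """Check every entity against its declared features; return entity counts per type."""
    for name, rows in obs.items():
        if name not in obs_space:
            raise KeyError(f"unknown entity type: {name}")
        expected = len(obs_space[name].features)
        for row in rows:
            if len(row) != expected:
                raise ValueError(
                    f"{name} expects {expected} features, got {len(row)}"
                )
    return {name: len(rows) for name, rows in obs.items()}


# One observation: three mines and one robot. Another step could hold
# a different number of mines without changing the space definition.
observation = {
    "Mine": [[0, 1], [3, 2], [4, 4]],
    "Robot": [[2, 2]],
}
counts = validate_observation(observation, OBS_SPACE)
print(counts)
```

For the actual classes and their signatures, refer to the entity-gym documentation linked under "More info".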

mine_sweeper_demo.py contains an end-to-end example. Follow the steps below to get started.

Colab

To help you get started, we have prepared a Colab notebook: https://colab.research.google.com/drive/1WM23R9TA-C6rmDTFAy4hbnB87C1hcmM2?usp=sharing#scrollTo=HGzNOrnSiaHF

Local Setup

poetry install

poetry run pip install torch==1.12.1+cu113 -f https://download.pytorch.org/whl/cu113/torch_stable.html

poetry run pip install torch-scatter -f https://data.pyg.org/whl/torch-1.12.1+cu113.html

Run an experiment

poetry run python mine_sweeper_demo.py env.id=MineSweeper total_timesteps=1000000

xvfb-run -a poetry run python microrts_demo.py env.id=GymMicrorts rollout.num_envs=16 total_timesteps=1000000 rollout.steps=256 eval.capture_videos=True eval.interval=300000 eval.steps=2000 eval.num_envs=1 eval.processes=1

The schema and documentation of configuration flags (such as total_timesteps) can be found here
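The flags in the commands above use a dotted key=value form (for example rollout.num_envs=16), which reads naturally as a nested configuration. The sketch below shows one way such overrides could be turned into a nested dictionary. It is only an illustration: parse_value and parse_overrides are hypothetical helpers, not the parser these demo scripts actually use, and the type-guessing rules are an assumption.

```python
from typing import Any, Dict, List


def parse_value(raw: str) -> Any:
    """Guess a Python type for a raw flag value (assumed rules: bool, int, float, str)."""
    lowered = raw.lower()
    if lowered in ("true", "false"):
        return lowered == "true"
    for cast in (int, float):
        try:
            return cast(raw)
        except ValueError:
            pass
    return raw


def parse_overrides(args: List[str]) -> Dict[str, Any]:
    """Turn ['a.b=1', 'c=x'] into {'a': {'b': 1}, 'c': 'x'}."""
    config: Dict[str, Any] = {}
    for arg in args:
        key, _, raw = arg.partition("=")
        *parents, leaf = key.split(".")
        node = config
        # Walk (and create) the nested dictionaries named by the dotted prefix.
        for part in parents:
            node = node.setdefault(part, {})
        node[leaf] = parse_value(raw)
    return config


overrides = parse_overrides([
    "env.id=GymMicrorts",
    "rollout.num_envs=16",
    "rollout.steps=256",
    "total_timesteps=1000000",
    "eval.capture_videos=True",
])
print(overrides)
```

Grouping flags this way keeps related settings (rollout.*, eval.*) together, which is why the commands above can set several evaluation options without ambiguity.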

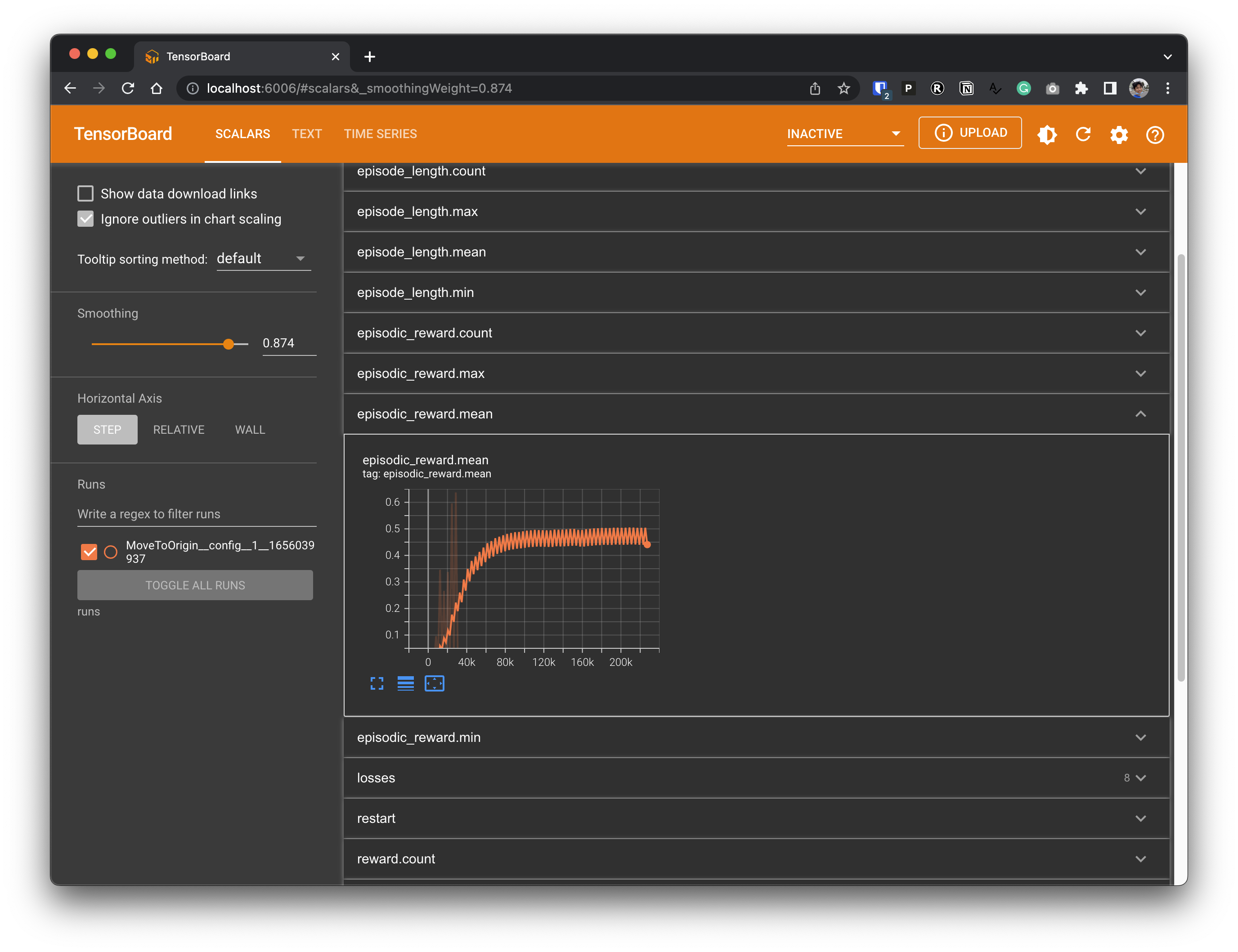

Visualize metrics

tensorboard --logdir runs

Experiment tracking

poetry run python mine_sweeper_demo.py total_timesteps=1000000 track=true

xvfb-run -a poetry run python microrts_demo.py env.id=GymMicrorts rollout.num_envs=16 total_timesteps=1000000 rollout.steps=256 eval.capture_videos=True eval.interval=300000 eval.steps=2000 eval.num_envs=1 eval.processes=1 track=true

Experiments can be tracked with Weights & Biases. To do so, set track=true in the command line arguments, as in the commands above. See the following tracked experiments:

More info

To learn more about how to formulate observation and action spaces with entity-gym, see https://entity-gym.readthedocs.io/en/latest/quick-start-guide.html.

For more advanced training examples with more complex games, see https://github.com/entity-neural-network/enn-zoo