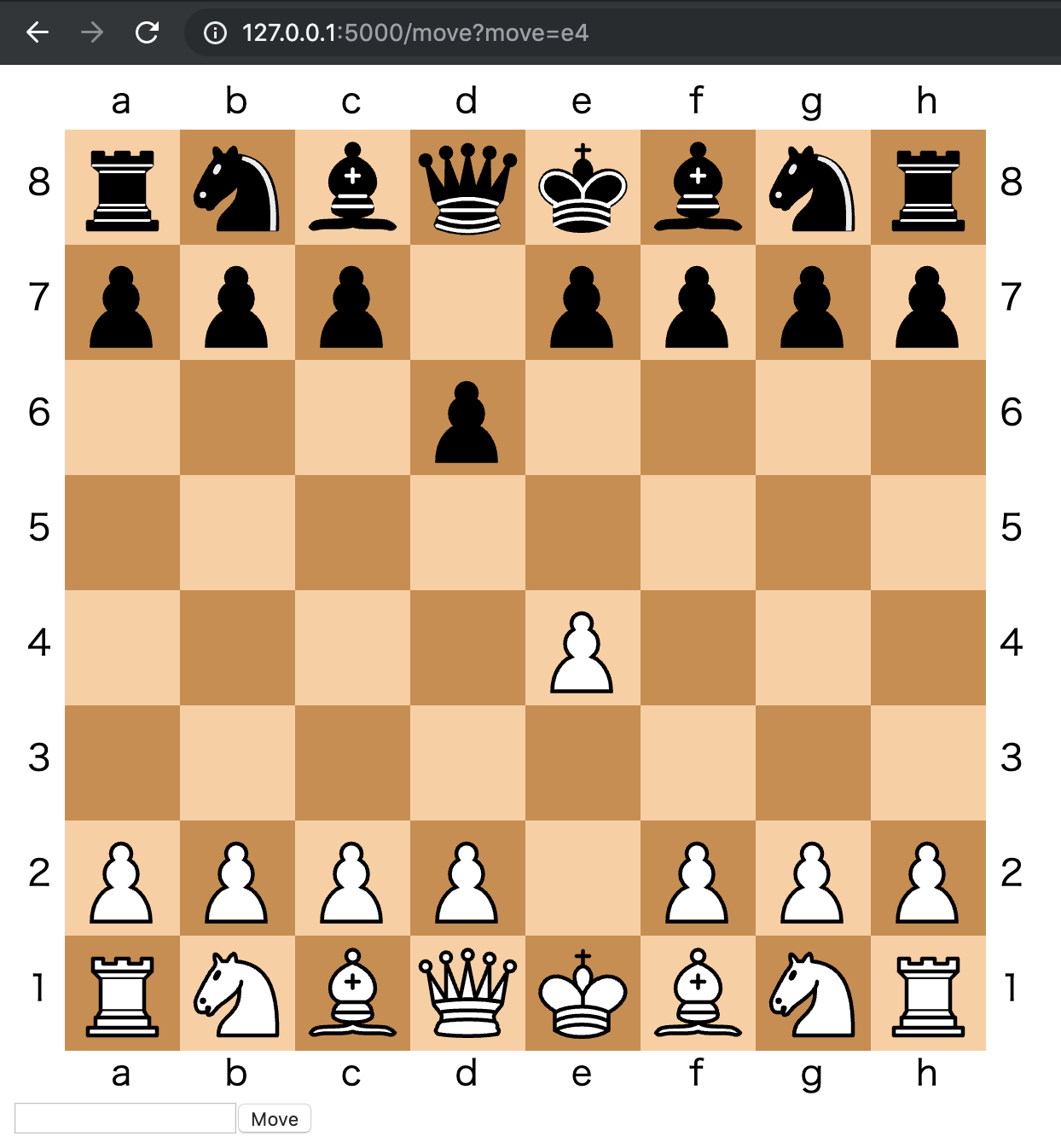

A toy implementation of neural network chess written while livestreaming.

https://www.twitch.tv/tomcr00s3

$ pip install -r requirements.txt

$ python play.py # runs web server on http://localhost:5000

- Roll out search beyond 1-ply

- Make trainer multi-GPU

- Train on more data

- Add RL self-play learning support

twitchess is a simple one-ply look-ahead search driven by a neural network value function. The trained net is nets/value.pth. It takes in a serialized board and outputs a value in the range -1 to 1: -1 means black wins, 1 means white wins.
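A one-ply look-ahead of this kind can be sketched as follows. This is a minimal illustration, not the code in play.py: `pick_move`, `legal_moves`, `apply_move`, and `value` are hypothetical names, and the toy integer "game" only stands in for a chess board and the trained net.

```python
from typing import Callable, Iterable, List, TypeVar

S = TypeVar("S")  # board state
M = TypeVar("M")  # move


def pick_move(
    state: S,
    white_to_move: bool,
    legal_moves: Callable[[S], Iterable[M]],
    apply_move: Callable[[S, M], S],
    value: Callable[[S], float],
) -> M:
    """Return the move whose resulting position scores best for the side to move.

    `value` is assumed to return a number in [-1, 1] where 1 favours white
    and -1 favours black, so white maximises and black minimises.
    """
    scored = [(value(apply_move(state, m)), m) for m in legal_moves(state)]
    if not scored:
        raise ValueError("no legal moves")
    chooser = max if white_to_move else min
    return chooser(scored, key=lambda pair: pair[0])[1]


# Toy stand-in for chess: the state is an integer, a move adds to it,
# and the "network" squashes the state into [-1, 1].
def toy_moves(state: int) -> List[int]:
    return [-2, 1, 3]


def toy_apply(state: int, move: int) -> int:
    return state + move


def toy_value(state: int) -> float:
    return max(-1.0, min(1.0, state / 10))


white_choice = pick_move(0, True, toy_moves, toy_apply, toy_value)
black_choice = pick_move(0, False, toy_moves, toy_apply, toy_value)
```

In the toy game white picks the move that raises the score the most and black picks the one that lowers it the most; with a real board the three callables would wrap move generation and the value net.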

We serialize the board into an 8x8x5 bitvector. See state.py for how.

The value network is trained on 10M board positions from http://www.kingbase-chess.net/

- Establish the search tree

- Use a neural network to prune the search tree
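One way to combine these two steps is a depth-limited minimax that only expands the few moves the value function rates highest at each node. The sketch below is an assumption about how that could look, not the repository's code: `pruned_search`, `depth`, `width`, and the callable parameters are hypothetical, and a toy integer game stands in for chess.

```python
from typing import Callable, Iterable, List, TypeVar

S = TypeVar("S")  # board state
M = TypeVar("M")  # move


def pruned_search(
    state: S,
    white_to_move: bool,
    depth: int,
    width: int,
    legal_moves: Callable[[S], Iterable[M]],
    apply_move: Callable[[S, M], S],
    value: Callable[[S], float],
) -> float:
    """Depth-limited minimax that keeps only the `width` most promising children.

    Children are ranked by the value function from the mover's point of view
    (white prefers high values, black prefers low) and the rest are pruned.
    """
    if depth == 0:
        return value(state)
    children = [apply_move(state, m) for m in legal_moves(state)]
    if not children:
        return value(state)
    # Best first for the side to move: descending for white, ascending for black.
    children.sort(key=value, reverse=white_to_move)
    results = [
        pruned_search(child, not white_to_move, depth - 1, width,
                      legal_moves, apply_move, value)
        for child in children[:width]
    ]
    return max(results) if white_to_move else min(results)


# Toy stand-in for chess: integer state, three moves, clamped linear "network".
def toy_moves(state: int) -> List[int]:
    return [-2, 1, 3]


def toy_apply(state: int, move: int) -> int:
    return state + move


def toy_value(state: int) -> float:
    return max(-1.0, min(1.0, state / 10))


score = pruned_search(0, True, 2, 2, toy_moves, toy_apply, toy_value)
leaf = pruned_search(0, True, 0, 2, toy_moves, toy_apply, toy_value)
```

A small `width` keeps the tree cheap but risks discarding a move the value function misjudges, so the two parameters trade speed against reliability.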

Definition: Value network V = f(state)

What is V?

- V = -1: black wins from this board state

- V = 0: this board state is a draw

- V = 1: white wins from this board state

Should we fix the value of the initial board state?

What's the value of "about to lose"?

Simpler:

- All positions from games that white won = 1

- All positions from drawn games = 0

- All positions from games that black won = -1
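This labeling rule can be sketched in a few lines. The example assumes the game result arrives as a PGN result string ("1-0", "0-1", "1/2-1/2", or "*" for an unfinished game); `RESULT_TO_VALUE` and `label_positions` are hypothetical names, and the positions are opaque placeholders rather than real boards.

```python
# PGN result string -> training target for every position of that game.
RESULT_TO_VALUE = {"1-0": 1, "1/2-1/2": 0, "0-1": -1}


def label_positions(positions, result):
    """Pair every position of one game with that game's final outcome.

    Games without a decided result (PGN "*") carry no label and are skipped.
    """
    if result not in RESULT_TO_VALUE:
        return []
    target = RESULT_TO_VALUE[result]
    return [(position, target) for position in positions]


black_win = label_positions(["pos_a", "pos_b"], "0-1")
unfinished = label_positions(["pos_a"], "*")
```

Every position inherits the same target regardless of how far it is from the end of the game, which is what makes this scheme simpler than assigning a value to "about to lose".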

State(Board):

Pieces(2+7*2 = 16):

- Universal

  - Blank

  - Blank (En passant)

- Pieces

  - Pawn

  - Bishop

  - Knight

  - Rook

  - Rook (can castle)

  - Queen

  - King

Extra state:

- To move

8x8x4 + 1 = 257 bits (vectors of 0 or 1)
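The arithmetic can be checked with a small encoder: 16 possible square states fit in 4 bits, 64 squares give 256 bits, and the side to move adds one more. This is a sketch only. The code assignment in `STATES` and the names `CODE` and `encode` are hypothetical, and state.py remains the reference for the real layout. An 8x8x5 shape would follow if the to-move bit were repeated across a full 8x8 plane, which is also an assumption here.

```python
# Hypothetical code assignment: any one-to-one mapping onto 0..15 would do.
UNIVERSAL = ["blank", "blank_en_passant"]
KINDS = ["pawn", "bishop", "knight", "rook", "rook_can_castle", "queen", "king"]
STATES = UNIVERSAL + [f"{color}_{kind}" for color in ("white", "black") for kind in KINDS]
CODE = {name: index for index, name in enumerate(STATES)}


def encode(squares, white_to_move):
    """Flatten 64 square states plus the side to move into a list of 0/1 bits."""
    if len(squares) != 64:
        raise ValueError("expected 64 squares")
    bits = []
    for name in squares:
        code = CODE[name]
        # Most significant bit first: 4 bits cover the 16 possible states.
        bits.extend((code >> shift) & 1 for shift in (3, 2, 1, 0))
    bits.append(1 if white_to_move else 0)
    return bits


board = ["blank"] * 64
board[4] = "white_king"
bits = encode(board, white_to_move=True)
```

Folding en passant and castling rights into the square states is what keeps the extra state down to a single to-move bit.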

$ wget http://kingbase-chess.net/download/599 -O data/kb2018.zip