Ecco is a Python library for exploring and explaining Natural Language Processing models using interactive visualizations.

Ecco provides multiple interfaces that help explain Transformer-based language models and build intuition about how they work. Read: Interfaces for Explaining Transformer Language Models.

Ecco runs inside Jupyter notebooks. It is built on top of PyTorch and Transformers.

Ecco is not concerned with training or fine-tuning models, only with exploring and understanding existing pre-trained models. The library is currently an alpha release of a research project and is not production ready. You're welcome to contribute to make it better!

Documentation: ecco.readthedocs.io

- Interfaces for Explaining Transformer Language Models

- Finding the Words to Say: Hidden State Visualizations for Language Models

The API reference and the architecture page explain Ecco's components and how they work together.

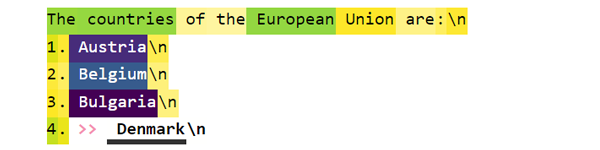

Predicted Tokens: View the model's prediction for the next token (with probability scores). See how the predictions evolved through the model's layers. [Notebook] [Colab]
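
To make the idea of per-layer predictions with probability scores concrete, here is a minimal sketch in plain Python. It is not Ecco's implementation or API: the vocabulary, the `layer_logits` values, and the helper names `softmax` and `top_predictions` are all hypothetical, and it assumes each layer's hidden state has already been projected to vocabulary-sized scores.

```python
import math

def softmax(logits):
    """Convert raw scores to probabilities (numerically stable)."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def top_predictions(logits, vocab, k=3):
    """Return the k most probable (token, probability) pairs."""
    probs = softmax(logits)
    ranked = sorted(zip(vocab, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]

# Hypothetical toy vocabulary and per-layer scores for one output position.
vocab = ["the", "cat", "sat", "mat"]
layer_logits = [
    [1.0, 0.5, 0.2, 0.1],  # early layer
    [0.5, 1.5, 0.3, 0.2],  # middle layer
    [0.1, 0.4, 0.2, 3.0],  # final layer
]

# Show how the top candidates change from layer to layer.
for layer, logits in enumerate(layer_logits):
    print(layer, top_predictions(logits, vocab, k=2))
```

In this toy data the leading candidate changes at each layer, which is the kind of evolution the visualization is meant to surface.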

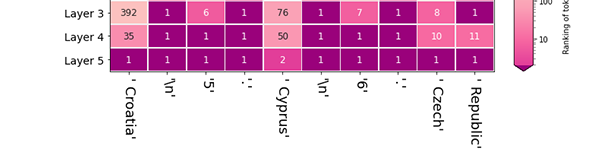

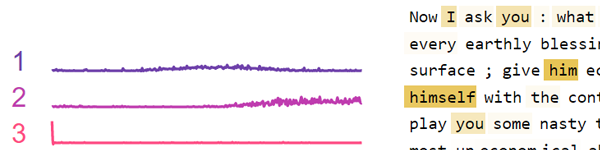

Rankings across layers: After the model picks an output token, look back at how each layer ranked that token. [Notebook] [Colab]
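
The ranking idea can be sketched as follows. This is an illustrative example under stated assumptions, not Ecco's code: `layer_scores` is a made-up list of per-layer scores over a four-token vocabulary, and `rank_of` and `ranking_across_layers` are hypothetical helper names.

```python
def rank_of(token_id, scores):
    """1-based rank of token_id when scores are sorted from highest to lowest."""
    target = scores[token_id]
    return 1 + sum(1 for s in scores if s > target)

def ranking_across_layers(token_id, layer_scores):
    """Rank of the same token in every layer's score vector."""
    return [rank_of(token_id, scores) for scores in layer_scores]

# Hypothetical per-layer scores over a four-token vocabulary.
layer_scores = [
    [1.0, 0.5, 0.2, 0.1],  # early layer
    [0.5, 1.5, 0.3, 0.9],  # middle layer
    [0.1, 0.4, 0.2, 3.0],  # final layer
]

# The output token is the highest-scoring token at the final layer.
final = layer_scores[-1]
chosen = max(range(len(final)), key=final.__getitem__)

ranks = ranking_across_layers(chosen, layer_scores)
print(chosen, ranks)
```

Calling `ranking_across_layers` for several token ids gives a side-by-side comparison of how different candidates were ranked at each layer.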

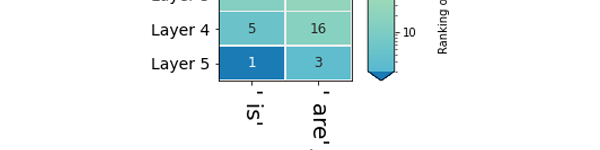

Layer Predictions: Compare the rankings of multiple tokens as candidates for a certain position in the sequence. [Notebook] [Colab]

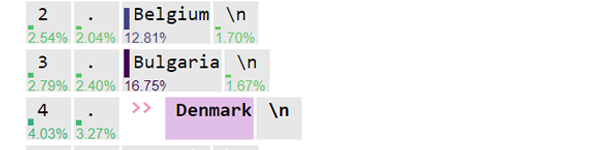

Input Saliency: How much did each input token contribute to producing the output token? [Notebook] [Colab]

Detailed Saliency: See more precise input saliency values using the detailed view. [Notebook] [Colab]
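
One common way to estimate how much each input token contributed is gradient × input attribution. The sketch below illustrates that technique on a toy scoring function. The text above does not say which attribution method Ecco uses, so treat this as an assumption: the `toy_score` model, its weights, and the embeddings are all hypothetical, and gradients are approximated with finite differences rather than automatic differentiation.

```python
import math

def numerical_gradient(f, embeddings, eps=1e-5):
    """Central-difference gradient of scalar f with respect to every embedding value."""
    grads = []
    for i, vec in enumerate(embeddings):
        row = []
        for j in range(len(vec)):
            up = [list(v) for v in embeddings]
            down = [list(v) for v in embeddings]
            up[i][j] += eps
            down[i][j] -= eps
            row.append((f(up) - f(down)) / (2 * eps))
        grads.append(row)
    return grads

def gradient_x_input(f, embeddings):
    """Per-token saliency: |gradient . embedding|, normalized to sum to 1."""
    grads = numerical_gradient(f, embeddings)
    raw = [abs(sum(g * x for g, x in zip(grad_row, vec)))
           for grad_row, vec in zip(grads, embeddings)]
    total = sum(raw) or 1.0
    return [r / total for r in raw]

# Hypothetical toy model: score of the output token given the input embeddings.
WEIGHTS = [1.0, -0.5]

def toy_score(embeddings):
    return math.tanh(sum(w * x for vec in embeddings for w, x in zip(WEIGHTS, vec)))

# Hypothetical embeddings for a three-token input.
embeddings = [[0.1, 0.0], [0.9, 0.1], [0.0, 0.2]]
saliency = gradient_x_input(toy_score, embeddings)
print([round(s, 3) for s in saliency])
```

The normalized values correspond to the per-token percentages a detailed saliency view would display. With a real model the gradients would come from backpropagation instead of finite differences.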

Neuron Activation Analysis: Examine underlying patterns in neuron activations using non-negative matrix factorization. [Notebook] [Colab]
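
As a rough illustration of non-negative matrix factorization, the sketch below factorizes a small neurons × tokens activation matrix into two non-negative factors using the classic multiplicative update rules. It is a minimal pure-Python example, not Ecco's implementation: the `activations` matrix is made up, and `nmf`, `matmul`, `transpose`, and `frobenius_error` are hypothetical helper names.

```python
import random

def matmul(a, b):
    """Plain-Python matrix product of two lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def frobenius_error(v, w, h):
    """Frobenius norm of v - w @ h."""
    approx = matmul(w, h)
    return sum((x - y) ** 2 for vr, ar in zip(v, approx) for x, y in zip(vr, ar)) ** 0.5

def nmf(v, n_components, n_iter=200, seed=0, eps=1e-9):
    """Factorize non-negative v (neurons x tokens) as w @ h via multiplicative updates."""
    rng = random.Random(seed)
    n_rows, n_cols = len(v), len(v[0])
    w = [[rng.random() + 0.1 for _ in range(n_components)] for _ in range(n_rows)]
    h = [[rng.random() + 0.1 for _ in range(n_cols)] for _ in range(n_components)]
    for _ in range(n_iter):
        # Update h: h <- h * (w^T v) / (w^T w h)
        wt = transpose(w)
        numer, denom = matmul(wt, v), matmul(matmul(wt, w), h)
        h = [[h[i][j] * numer[i][j] / (denom[i][j] + eps) for j in range(n_cols)]
             for i in range(n_components)]
        # Update w: w <- w * (v h^T) / (w h h^T)
        ht = transpose(h)
        numer, denom = matmul(v, ht), matmul(w, matmul(h, ht))
        w = [[w[i][j] * numer[i][j] / (denom[i][j] + eps) for j in range(n_components)]
             for i in range(n_rows)]
    return w, h

# Hypothetical activations: 4 neurons (rows) x 4 token positions (columns).
activations = [
    [1.0, 0.9, 0.0, 0.1],
    [0.8, 1.0, 0.1, 0.0],
    [0.0, 0.1, 1.0, 0.9],
    [0.1, 0.0, 0.9, 1.0],
]

w, h = nmf(activations, n_components=2)
print(round(frobenius_error(activations, w, h), 3))
```

Each row of `h` describes how strongly one factor fires at each token position, and each column of `w` describes which neurons belong to that factor. Grouping neurons this way is what lets a small number of factors summarize many individual activations.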

Having trouble?

- The Discussion board might have some relevant information. If not, you can post your questions there.

- Report bugs at Ecco's issue tracker