<sup>2</sup>Nankai University

Our code has been tested in the following environment:

python==3.10.14

pytorch==1.13.1

pytorch-lightning==1.9.5

numpy==1.26.4

scipy==1.14.0

mmcv-full==1.7.0

matplotlib==3.9.0
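To confirm that a local environment matches these pins, a small check along the following lines may help. This is a minimal sketch and not part of the released code: the helper name `find_mismatches` is hypothetical, and the keys are PyPI distribution names, which is why `pytorch` is looked up as `torch`.

```python
from importlib.metadata import PackageNotFoundError, version

# Pins copied from the list above. Keys are PyPI distribution names,
# so the "pytorch" entry is looked up as "torch".
PINNED = {
    "torch": "1.13.1",
    "pytorch-lightning": "1.9.5",
    "numpy": "1.26.4",
    "scipy": "1.14.0",
    "mmcv-full": "1.7.0",
    "matplotlib": "3.9.0",
}


def find_mismatches(pins):
    """Return (name, expected, installed) for each package that differs from its pin.

    `installed` is None when the package is not installed at all.
    """
    mismatches = []
    for name, expected in pins.items():
        try:
            installed = version(name)
        except PackageNotFoundError:
            installed = None
        # Ignore local build tags such as "+cu117" when comparing.
        if installed is None or installed.split("+")[0] != expected:
            mismatches.append((name, expected, installed))
    return mismatches
```

Calling `find_mismatches(PINNED)` returns an empty list when every package matches. The Python version itself (3.10.14) is not checked here.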

Prepare the KITTI and NYU-Depth-V2 datasets by following the instructions here, and download the TOFDC dataset from here. Then set the dataset path in the corresponding configuration file so that the data loads correctly. The train/val/test split files are provided in the `data_splits` folder.

The pretrained weights of the Swin-Transformer backbone can be downloaded from here. To correctly load them, specify the file path of the downloaded weights in the model/pretrain path of the corresponding configuration file. For training on the KITTI Official dataset, download the pretrained weights from here and configure them in the same manner.

Use the following command to start the training process:

python train.py CONFIG_FILE_NAME --gpus NUMBER_OF_GPUS

For example, to launch training on the NYU-Depth-V2 dataset with 4 GPUs, use `python train.py dct_nyu_pff --gpus 4`. Training relies on the Distributed Data Parallel (DDP) support provided by PyTorch and PyTorch Lightning.

Use the following command to launch the evaluation process:

python test.py CONFIG_FILE_NAME CHECKPOINT_PATH --vis

For example, to evaluate the model trained on the NYU-Depth-V2 dataset, use `python test.py dct_nyu_pff checkpoints/dcdepth_nyu.pth`. Add the `--vis` option to save the visualization results.
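When evaluating several checkpoints from a script, the command above can be assembled programmatically. The sketch below is illustrative only: `build_eval_command` and `run_eval` are hypothetical helpers, not part of this repository, and they use only the arguments documented above (`CONFIG_FILE_NAME`, `CHECKPOINT_PATH`, and `--vis`).

```python
import subprocess


def build_eval_command(config_name, checkpoint_path, vis=False):
    """Assemble the documented `test.py` invocation as an argument list."""
    command = ["python", "test.py", config_name, checkpoint_path]
    if vis:
        # Optional flag that saves the visualization results.
        command.append("--vis")
    return command


def run_eval(config_name, checkpoint_path, vis=False):
    """Run the evaluation from the repository root; raises if the process fails."""
    subprocess.run(build_eval_command(config_name, checkpoint_path, vis), check=True)
```

For instance, `run_eval("dct_nyu_pff", "checkpoints/dcdepth_nyu.pth", vis=True)` is equivalent to running the example command with `--vis` appended.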

We release the model checkpoints that can reproduce the results reported in our paper. The first three error metrics of TOFDC are multiplied by 10 for presentation.

| Model | Abs Rel | Sq Rel | RMSE | $\delta < 1.25$ | $\delta < 1.25^2$ |
|---|---|---|---|---|---|
| NYU-Depth-V2 | 0.085 | 0.039 | 0.304 | 0.940 | 0.992 |
| TOFDC | 0.188 | 0.027 | 0.565 | 0.995 | 0.999 |
| KITTI Eigen | 0.051 | 0.145 | 2.044 | 0.977 | 0.997 |
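For reference, the error metrics in the table (Abs Rel, Sq Rel, RMSE) have standard definitions in the monocular depth estimation literature. The sketch below computes them in plain Python, together with the threshold accuracies $\delta < 1.25$ and $\delta < 1.25^2$, which we assume correspond to the last two columns. It is not the evaluation code of this repository (the function name `depth_metrics` is hypothetical), and it omits steps such as valid-pixel masking, depth capping, and cropping that real benchmarks apply.

```python
import math


def depth_metrics(pred, gt):
    """Compute common depth metrics over paired, strictly positive depth values."""
    if len(pred) != len(gt) or len(gt) == 0:
        raise ValueError("pred and gt must be non-empty and of equal length")
    n = len(gt)
    pairs = list(zip(pred, gt))
    # Mean absolute error relative to the ground-truth depth.
    abs_rel = sum(abs(p - g) / g for p, g in pairs) / n
    # Mean squared error relative to the ground-truth depth.
    sq_rel = sum((p - g) ** 2 / g for p, g in pairs) / n
    # Root of the mean squared error.
    rmse = math.sqrt(sum((p - g) ** 2 for p, g in pairs) / n)
    # Fraction of values whose ratio max(pred/gt, gt/pred) is under a threshold.
    ratios = [max(p / g, g / p) for p, g in pairs]
    delta1 = sum(r < 1.25 for r in ratios) / n
    delta2 = sum(r < 1.25 ** 2 for r in ratios) / n
    return {
        "abs_rel": abs_rel,
        "sq_rel": sq_rel,
        "rmse": rmse,
        "delta1": delta1,
        "delta2": delta2,
    }
```

Lower is better for the three error metrics, and higher is better for the two threshold accuracies.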

If you find our work useful in your research, please consider citing our paper:

@article{wang2024dcdepth,

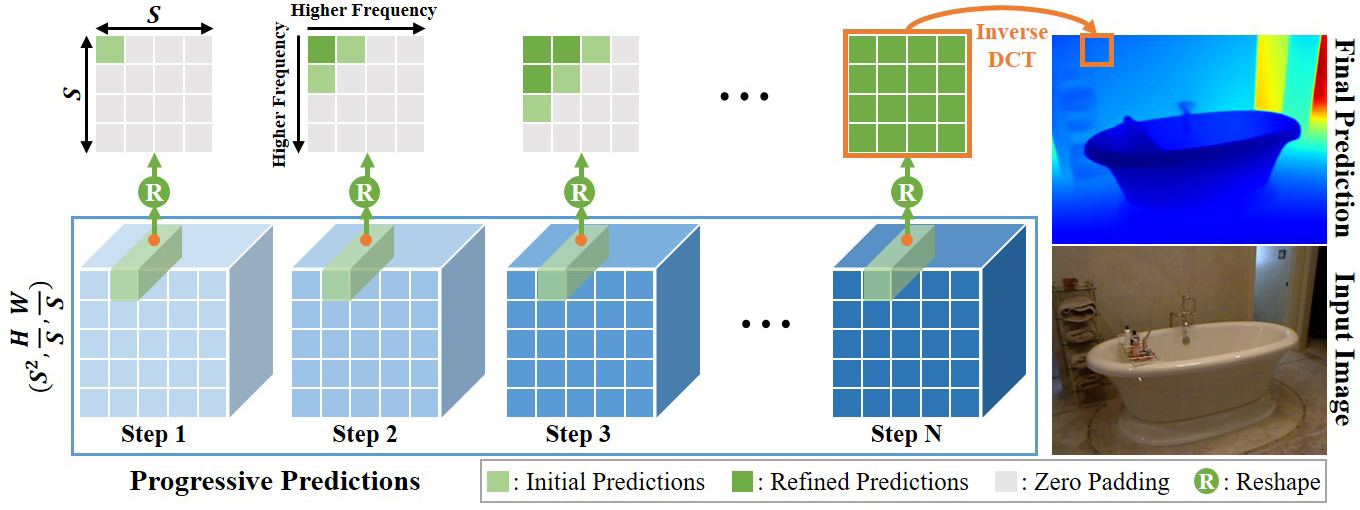

title={DCDepth: Progressive Monocular Depth Estimation in Discrete Cosine Domain},

author={Wang, Kun and Yan, Zhiqiang and Fan, Junkai and Zhu, Wanlu and Li, Xiang and Li, Jun and Yang, Jian},

journal={arXiv preprint arXiv:2410.14980},

year={2024}

}

If you have any questions, please feel free to contact kunwang@njust.edu.cn

Our code implementation is partially based on several previous projects: IEBins, NeWCRFs and BTS. We thank them for their excellent work!