The aim of this project is to simulate and control one or more cars in V-REP using ROS. It is part of the Open Source Self-Driving Car Initiative (see https://github.com/OSSDC/ on GitHub).

The mission of this initiative and open organization is to bring together the best research in the self-driving car space and to create a full stack of open-source software and hardware components that allows anyone to build and test self-driving cars, from toy-sized to full-size.

We are evaluating several solutions, such as Unity3D, a PS3/PS4 simulator and V-REP, to compare their advantages and drawbacks.

V-REP is a general-purpose 3D robot simulator with an integrated development environment, developed by Coppelia Robotics. Sensors, mechanisms, robots and whole systems can be modeled and simulated in various ways.

There are several ROS interfaces available for V-REP: see here. You could use the RosInterface, but in that case you would have to program in Lua to apply the commands coming from ROS (you can do this directly in V-REP, in the embedded script that can be attached to any object in the scene).

Here I used the V-REP ROS Bridge instead, mainly because the functionality I needed is already implemented in it.

To test the scene, follow this tutorial, which walks you through installing ROS, V-REP and the V-REP ROS Bridge.

Remember to run roscore in another terminal before launching V-REP.

Now you can run V-REP and open the scene roads_car_ros.

When you start the simulation (Play button), the plugin will:

- publish the images generated by the vision sensor placed on the white car (topic /vrep/CameraTop)
- subscribe to a velocity (twist) command that moves the red car (topic /vrep/car0/SetTwist)
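
To test the subscriber without a joystick, a small rospy node can publish a constant command on /vrep/car0/SetTwist. This is a minimal sketch, not part of the original setup. It assumes the bridge expects geometry_msgs/TwistStamped on that topic (run rostopic info /vrep/car0/SetTwist and switch to geometry_msgs/Twist if that is what it reports); the node name, default velocities and limits are hypothetical.

```python
# Minimal sketch (assumptions, not from the original project):
# - /vrep/car0/SetTwist takes geometry_msgs/TwistStamped (verify with rostopic info)
# - node name, default velocities and limits are hypothetical
TOPIC = "/vrep/car0/SetTwist"
MAX_VX = 2.0  # m/s, hypothetical safety limit
MAX_WZ = 1.0  # rad/s, hypothetical safety limit


def clamp(value, limit):
    """Saturate value to the interval [-limit, limit]."""
    return max(-limit, min(limit, value))


def main(vx=0.5, wz=0.2, rate_hz=10.0):
    try:
        import rospy
        from geometry_msgs.msg import TwistStamped
    except ImportError:
        print("rospy not found: source your ROS setup.bash first")
        return 1

    rospy.init_node("constant_twist_publisher")
    pub = rospy.Publisher(TOPIC, TwistStamped, queue_size=1)
    rate = rospy.Rate(rate_hz)
    try:
        while not rospy.is_shutdown():
            msg = TwistStamped()
            msg.header.stamp = rospy.Time.now()
            msg.twist.linear.x = clamp(vx, MAX_VX)   # forward velocity
            msg.twist.angular.z = clamp(wz, MAX_WZ)  # yaw rate
            pub.publish(msg)
            rate.sleep()
    except rospy.ROSInterruptException:
        pass  # Ctrl+C pressed during rate.sleep()
    return 0


if __name__ == "__main__":
    main()
```

Run it after sourcing your ROS setup and pressing Play in V-REP; the red car should start moving. Clamping the command is a cheap guard against typing a velocity that throws the car off the road.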

If you type rostopic list in a terminal you should see:

    jokla@Dell-PC:~$ rostopic list
    /rosout
    /rosout_agg
    /tf
    /vrep/CameraTop
    /vrep/CameraTop/compressed
    /vrep/CameraTop/compressed/parameter_descriptions
    /vrep/CameraTop/compressed/parameter_updates
    /vrep/CameraTop/compressedDepth
    /vrep/CameraTop/compressedDepth/parameter_descriptions
    /vrep/CameraTop/compressedDepth/parameter_updates
    /vrep/CameraTop/theora
    /vrep/CameraTop/theora/parameter_descriptions
    /vrep/CameraTop/theora/parameter_updates
    /vrep/camera_info
    /vrep/car/SetTwist
    /vrep/car0/SetTwist
    /vrep/info
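
The same check can be scripted. The sketch below (hypothetical helper names, checking only an assumed subset of the topics listed above) asks the ROS master for every topic that has a publisher or a subscriber, which is what rostopic list prints, and reports which bridge topics are missing.

```python
# Sketch: check that the V-REP bridge topics are registered with the ROS master.
# EXPECTED is an assumed subset of the rostopic list output shown above.
EXPECTED = ["/vrep/CameraTop", "/vrep/camera_info", "/vrep/car0/SetTwist"]


def missing_topics(registered, expected=EXPECTED):
    """Return the expected topics that are not in `registered`, in order."""
    present = set(registered)
    return [topic for topic in expected if topic not in present]


def topics_from_master():
    """Topics with a publisher or a subscriber, like rostopic list prints."""
    from rosgraph.masterapi import Master
    publishers, subscribers, _services = Master("/bridge_check").getSystemState()
    return [topic for topic, _nodes in list(publishers) + list(subscribers)]


if __name__ == "__main__":
    try:
        topics = topics_from_master()
    except ImportError:
        print("rosgraph not found: source your ROS setup.bash first")
    except Exception as err:  # e.g. roscore is not running
        print("cannot query the ROS master:", err)
    else:
        missing = missing_topics(topics)
        print(("missing topics: %s" % missing) if missing else "all bridge topics found")
```

The script uses the master's system state rather than rospy.get_published_topics(), because the latter only lists topics with a publisher: /vrep/car0/SetTwist has only the bridge as a subscriber until a command node is running.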

You can find the model of the car here, and the model of the roads here.

Now we want to generate the velocity command with a joystick. We will use the node https://github.com/jokla/teleop_twist_joy and a PS4 joystick (but you can use any joystick that works under Ubuntu).

- Clone the teleop_twist_joy package into the src folder of your catkin_ws:

  $ git clone git@github.com:jokla/teleop_twist_joy.git

- Run catkin_make.

To check whether the joystick works under Ubuntu, you can install jstest-gtk:

$ sudo apt-get install jstest-gtk

You can also check this tutorial: ConfiguringALinuxJoystick
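
jstest-gtk reads the joystick device file, and you can read the same device from Python. As a minimal sketch, assuming the joystick appears as /dev/input/js0, the snippet below decodes the kernel's js_event records (a 32-bit timestamp in ms, a signed 16-bit value, an 8-bit type and an 8-bit axis/button number) and prints each one; moving a stick or pressing L1 should produce a stream of events.

```python
# Sketch: print raw joystick events, the same data jstest-gtk displays.
# Assumption: the joystick is /dev/input/js0 (Linux joydev interface).
import os
import struct

# struct js_event: u32 time (ms), s16 value, u8 type, u8 number -> 8 bytes
JS_EVENT_FORMAT = "IhBB"
JS_EVENT_SIZE = struct.calcsize(JS_EVENT_FORMAT)
JS_EVENT_BUTTON = 0x01
JS_EVENT_AXIS = 0x02
JS_EVENT_INIT = 0x80  # set on the synthetic events reporting the initial state


def decode_event(raw):
    """Return (kind, number, value, is_init) for one 8-byte js_event."""
    _time_ms, value, ev_type, number = struct.unpack(JS_EVENT_FORMAT, raw)
    base_type = ev_type & ~JS_EVENT_INIT
    kind = {JS_EVENT_BUTTON: "button", JS_EVENT_AXIS: "axis"}.get(base_type, "unknown")
    return kind, number, value, bool(ev_type & JS_EVENT_INIT)


def main(device="/dev/input/js0"):
    if not os.path.exists(device):
        print("no joystick found at", device)
        return
    with open(device, "rb", buffering=0) as js:
        while True:  # blocks until the next event; stop with Ctrl+C
            raw = js.read(JS_EVENT_SIZE)
            if not raw or len(raw) < JS_EVENT_SIZE:
                break  # device unplugged
            print(decode_event(raw))


if __name__ == "__main__":
    main()
```

The first events after opening the device carry the JS_EVENT_INIT flag: the kernel sends them to report the initial state of every button and axis.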

Now you can launch the node:

roslaunch teleop_twist_joy teleop-ps4.launch

This node publishes on two topics:

/joy

/vrep/car0/SetTwist

If you run the scene in V-REP, you should now be able to move the red car: keep L1 pressed, then use the thumbsticks to apply a linear velocity vx (right thumbstick, up and down) and an angular velocity wz (right thumbstick, left and right).

You can change the button and axis assignments by modifying this configuration file.
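
The joystick behaviour described above boils down to a small mapping from the joystick message to (vx, wz), with L1 acting as a deadman button. The sketch below shows that logic in plain Python on the axes and buttons lists of a sensor_msgs/Joy message. It is an illustration, not the code of teleop_twist_joy, and the button/axis indices and scale factors are hypothetical placeholders for the values in the configuration file.

```python
# Sketch of a deadman-button joystick-to-twist mapping.
# All indices and scales below are hypothetical placeholders.
ENABLE_BUTTON = 4    # hypothetical index of L1
AXIS_LINEAR = 5      # hypothetical: right thumbstick, up/down
AXIS_ANGULAR = 2     # hypothetical: right thumbstick, left/right
SCALE_LINEAR = 1.0   # m/s at full stick deflection (assumed)
SCALE_ANGULAR = 0.8  # rad/s at full stick deflection (assumed)


def joy_to_twist(axes, buttons):
    """Map sensor_msgs/Joy-style axes/buttons lists to (vx, wz).

    The command is zero whenever the enable (deadman) button is not held.
    Axis values are expected in [-1, 1], as in sensor_msgs/Joy.
    """
    if not buttons[ENABLE_BUTTON]:
        return 0.0, 0.0
    vx = SCALE_LINEAR * axes[AXIS_LINEAR]
    wz = SCALE_ANGULAR * axes[AXIS_ANGULAR]
    return vx, wz
```

In a rospy node you would call joy_to_twist(msg.axes, msg.buttons) in the /joy callback and publish the result on /vrep/car0/SetTwist.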

## Test OpenTLD

TLD stands for Tracking-Learning-Detection (reference: "Robust Object Tracking Based on Tracking-Learning-Detection").

You will need to clone the following two packages into your catkin_ws:

$ git clone https://github.com/pandora-auth-ros-pkg/open_tld

$ git clone https://github.com/pandora-auth-ros-pkg/pandora_tld

Then run catkin_make and open the file /config/predator_topics.yaml.

You will need to set input_image_topic to /vrep/CameraTop.
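
If you prefer to script this change, a regex substitution is enough. This is a sketch that assumes input_image_topic is written as a single "key: value" line in predator_topics.yaml; pass the path of the file in your checkout as the only argument.

```python
# Sketch: set input_image_topic to the V-REP camera topic in a YAML file.
# Assumption: the key is written on one line as "input_image_topic: <value>".
import re
import sys

# [ \t]* instead of \s* so the match never spills onto the next line
KEY_PATTERN = re.compile(r"^([ \t]*input_image_topic[ \t]*:[ \t]*).*$", re.MULTILINE)


def set_input_topic(yaml_text, topic="/vrep/CameraTop"):
    """Return yaml_text with every input_image_topic value replaced by topic."""
    new_text, count = KEY_PATTERN.subn(lambda m: m.group(1) + topic, yaml_text)
    if count == 0:
        raise ValueError("input_image_topic not found")
    return new_text


if __name__ == "__main__" and len(sys.argv) == 2:
    path = sys.argv[1]
    with open(path) as f:
        text = f.read()
    with open(path, "w") as f:
        f.write(set_input_topic(text))
```

A line-based edit, unlike loading and dumping the YAML, keeps the file's comments and key order intact.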

To launch the node:

roslaunch pandora_tld predator_node.launch

An image window should appear; read here for more information about the keyboard shortcuts.

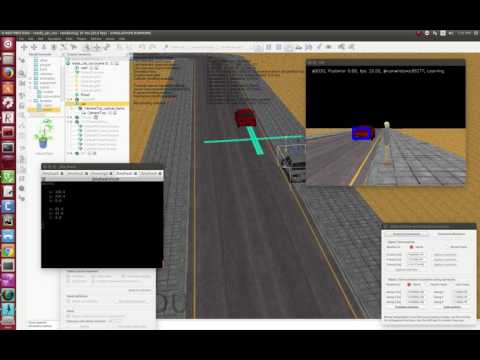

This is the result:

Possible next steps:

- Simulate the Ackermann steering mechanism. Right now the car is just a static object. There is a simple model in V-REP under the folder example.
- Add other sensors, like a lidar.
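
For the first item, one piece of the steering model is the conversion from the (vx, wz) twist command to front-wheel angles. The sketch below uses the standard Ackermann geometry: the rear-axle centre turns on a radius R = vx / wz, and each front wheel is steered so that its axis points at the same instantaneous centre of rotation. The wheelbase and track values are hypothetical, not measured from the car model in the scene.

```python
# Sketch: twist command (vx, wz) -> Ackermann front-wheel steering angles.
# WHEELBASE and TRACK are hypothetical values, not taken from the scene.
import math

WHEELBASE = 2.5  # m, front axle to rear axle (assumed)
TRACK = 1.5      # m, between the left and right front wheels (assumed)


def ackermann_angles(vx, wz, wheelbase=WHEELBASE, track=TRACK):
    """Return (left, right) steering angles in radians; positive = turn left."""
    if wz == 0.0:
        return 0.0, 0.0  # driving straight
    if vx == 0.0:
        raise ValueError("an Ackermann car cannot turn in place (vx == 0)")
    radius = vx / wz  # signed turning radius of the rear-axle centre
    if abs(radius) <= track / 2.0:
        raise ValueError("turning radius smaller than half the track")
    # Each wheel sits track/2 closer to (left) or farther from (right) the
    # centre of rotation on a left turn; the signs flip on a right turn.
    left = math.atan(wheelbase / (radius - track / 2.0))
    right = math.atan(wheelbase / (radius + track / 2.0))
    return left, right
```

The inner wheel always steers more than the single bicycle-model angle atan(wheelbase * wz / vx) and the outer wheel less: with the default 2.5 m wheelbase and 1.5 m track, vx = 5 m/s and wz = 1 rad/s give about 0.53 rad on the left wheel and 0.41 rad on the right.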