Code review is a crucial aspect of the software development process, ensuring that code changes are thoroughly examined for quality, security, and adherence to coding standards. However, the code review process can be time-consuming, and human reviewers may overlook certain issues.

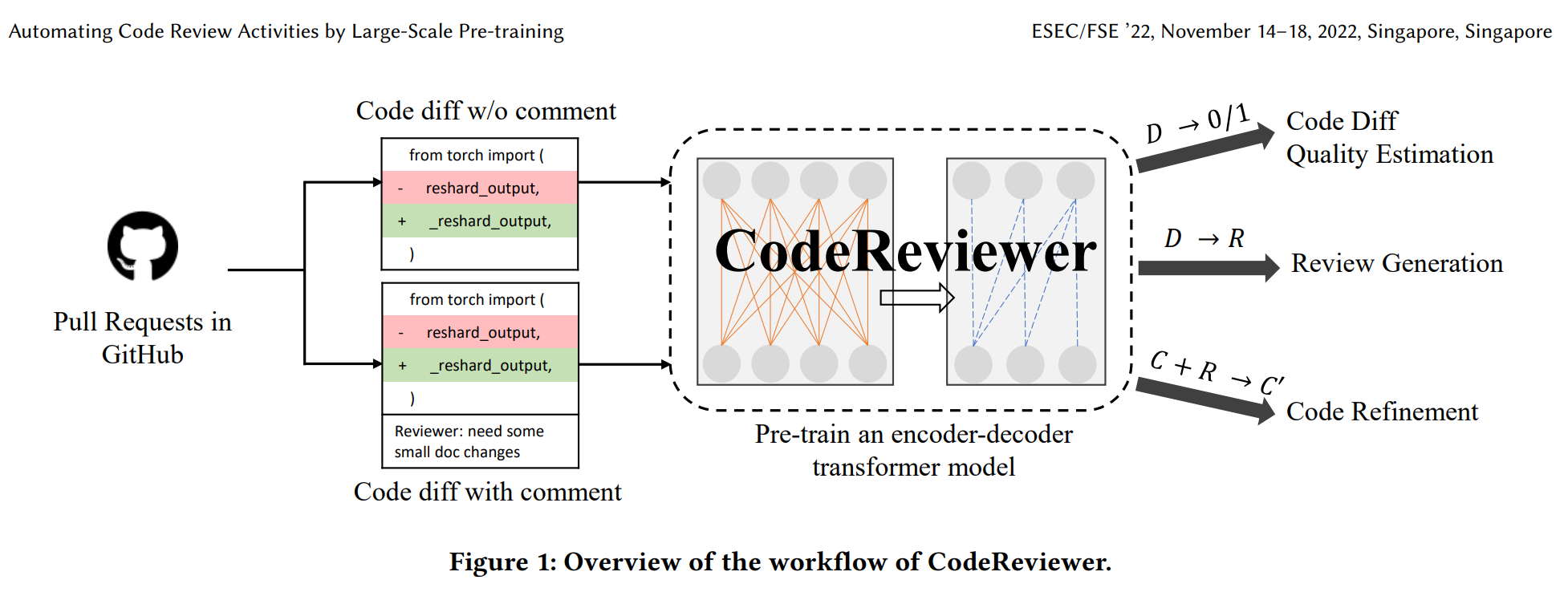

In this series of Jupyter notebooks, we collect code review data from GitHub repositories and generate code review predictions with CodeReviewer, a language model from Microsoft Research. Our primary goal is to explore the model's ability to generate code reviews and to evaluate its performance.

First, we collect the code review data from popular GitHub repositories. This data includes code changes and associated human-authored code reviews. By leveraging this data, the model learns to generate contextually relevant code reviews.
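As a rough illustration of this step, the sketch below pulls pull request review comments through the GitHub REST API and turns them into (diff hunk, review) pairs. It is not necessarily how the notebooks do it: the function names `fetch_review_comments` and `to_examples`, the output keys, and the choice to skip reply comments are all assumptions made for this example.

```python
import json
import urllib.request


def fetch_review_comments(owner, repo, page=1, token=None):
    """Fetch one page of pull request review comments for a repository."""
    url = (
        f"https://api.github.com/repos/{owner}/{repo}/pulls/comments"
        f"?per_page=100&page={page}"
    )
    headers = {"Accept": "application/vnd.github+json"}
    if token:
        # A personal access token raises the API rate limit.
        headers["Authorization"] = f"Bearer {token}"
    request = urllib.request.Request(url, headers=headers)
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def to_examples(comments):
    """Convert raw review comments into code-change / review pairs."""
    examples = []
    for comment in comments:
        # Assumption: replies in a thread are discussion, not reviews of the diff.
        if comment.get("in_reply_to_id") is not None:
            continue
        hunk = comment.get("diff_hunk")
        body = (comment.get("body") or "").strip()
        # Keep only comments that have both a diff hunk and non-empty text.
        if hunk and body:
            examples.append(
                {"path": comment.get("path"), "diff_hunk": hunk, "review": body}
            )
    return examples
```

Calling `to_examples(fetch_review_comments("waleko", "Code-Review-Automation-LM"))` would return the pairs for one page of comments. A full collection run would also need pagination and rate-limit handling.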

We take the pre-trained checkpoint and fine-tune the model on code review datasets. Fine-tuning lets the model specialize in generating code reviews, making it more effective at this task.

Once the model is trained, it can generate code reviews for new code changes. These generated reviews can highlight potential issues, suggest improvements, and provide feedback to developers.

We use the BLEU-4 score to assess the quality of generated code reviews. This metric measures the n-gram overlap (up to 4-grams) between model-generated reviews and target human reviews.
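To make the metric concrete, here is a minimal sentence-level BLEU-4 sketch in plain Python. It assumes whitespace tokenization, a single reference, and add-one smoothing for 2-grams and above. The function name `bleu4` and these choices are illustrative, and the evaluation notebooks may use a different BLEU variant or tokenizer.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count all n-grams of length n in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu4(candidate, reference):
    """Sentence-level BLEU-4 with add-one smoothing for n >= 2 (assumed variant)."""
    cand = candidate.split()
    ref = reference.split()
    if not cand:
        return 0.0
    log_precision_sum = 0.0
    for n in range(1, 5):
        cand_counts = ngrams(cand, n)
        ref_counts = ngrams(ref, n)
        # Clipped overlap: each candidate n-gram counts at most as often
        # as it appears in the reference.
        overlap = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        total = sum(cand_counts.values())
        if n == 1:
            if overlap == 0:
                return 0.0  # no shared words at all
            log_precision_sum += math.log(overlap / total)
        else:
            log_precision_sum += math.log((overlap + 1) / (total + 1))
    # The brevity penalty discourages candidates shorter than the reference.
    if len(cand) >= len(ref):
        brevity_penalty = 1.0
    else:
        brevity_penalty = math.exp(1 - len(ref) / len(cand))
    return brevity_penalty * math.exp(log_precision_sum / 4)
```

An identical candidate and reference score 1.0, a candidate sharing no words with the reference scores 0.0, and partial matches fall in between.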

To get started with our work, follow these steps:

- Clone this repository to your local machine:

      git clone https://github.com/waleko/Code-Review-Automation-LM.git
      cd Code-Review-Automation-LM

- Set up the required dependencies from `requirements.txt`. E.g., using `pip`:

      pip install -r requirements.txt

- Run the provided notebooks to explore data collection, model inference, and evaluation.

Many thanks to the authors of CodeReviewer for their scientific contributions. This work is essentially an in-depth exploration of their research, and I am grateful for their efforts.

This project is licensed under the Apache 2.0 License - see the LICENSE file for details.

For any questions or inquiries, please contact inbox@alexkovrigin.me.