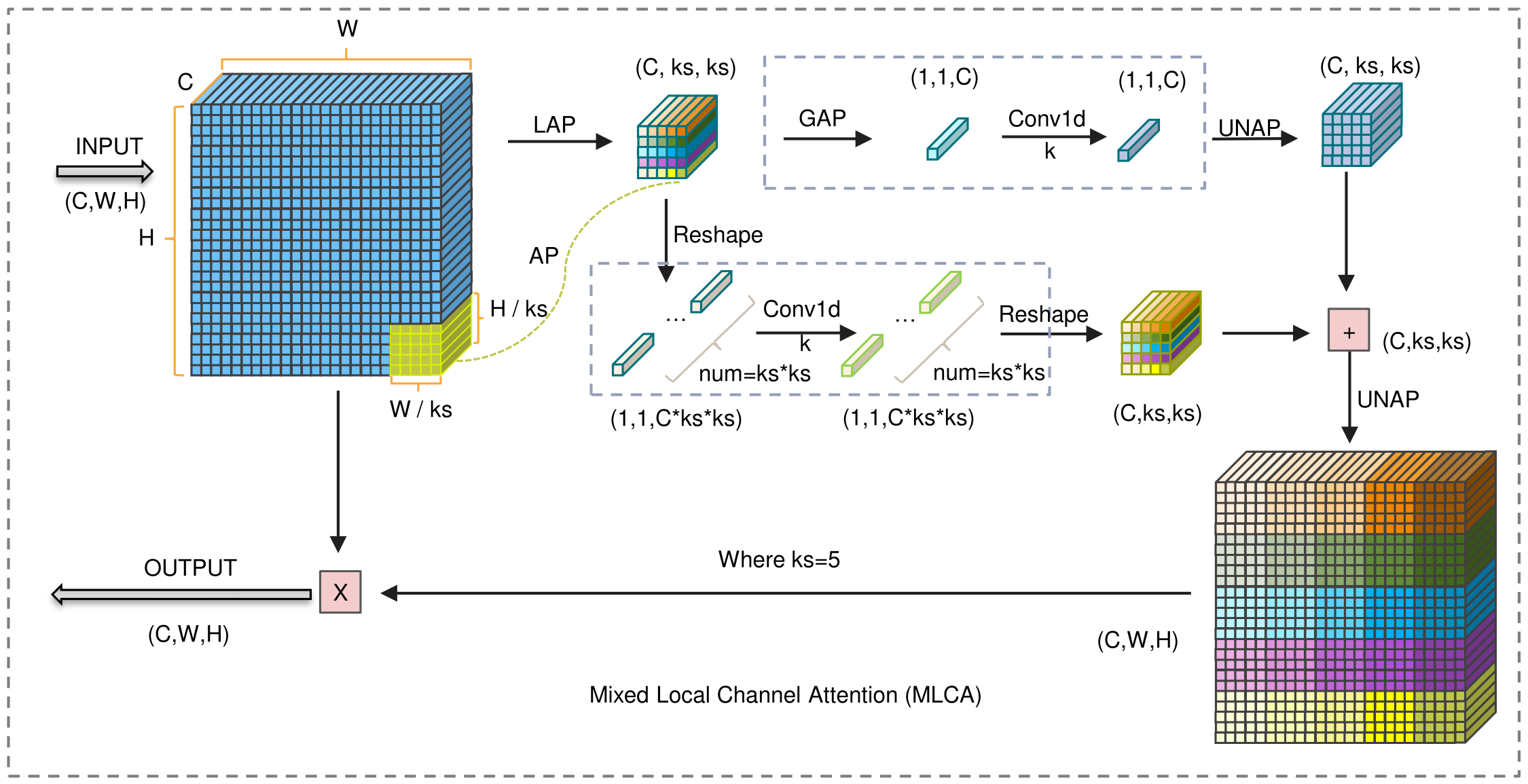

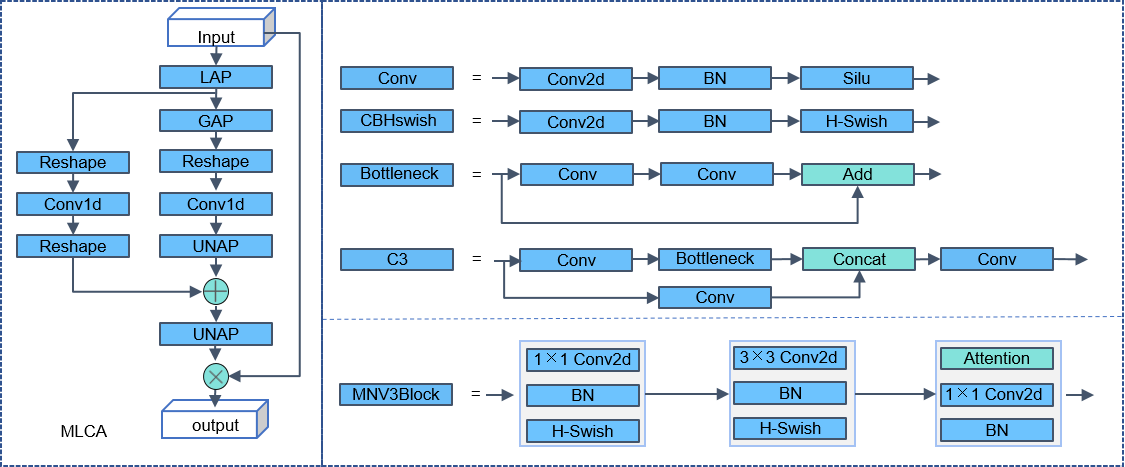

This project introduces a lightweight Mixed Local Channel Attention (MLCA) module that uses both channel-wise and spatial-wise information, combining local and global context to strengthen the network's feature representation. Building on this module, we propose the MobileNet-Attention-YOLO (MAY) algorithm as a common framework for comparing attention modules. On the PASCAL VOC and SIMD datasets, MLCA achieves a better balance between representation effectiveness, detection performance, and model complexity than the other attention techniques tested. Compared with Squeeze-and-Excitation (SE) attention on PASCAL VOC and Coordinate Attention (CA) on SIMD, MLCA improves mAP by 1.0% and 1.5%, respectively.
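To make the idea of mixing local and global context more concrete, here is a minimal PyTorch sketch. It is not the authors' implementation (see the repository source for that). The class name `MixedLocalChannelAttentionSketch`, the fixed 1D kernel size, and the default values of `local_size` and `local_weight` are assumptions chosen for illustration. The local branch is also simplified: it applies an ECA-style 1D convolution across channels separately for each pooled patch.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class MixedLocalChannelAttentionSketch(nn.Module):
    """Illustrative sketch: blends patch-level (local) and global channel attention."""

    def __init__(self, local_size: int = 5, kernel_size: int = 3, local_weight: float = 0.5):
        super().__init__()
        self.local_size = local_size      # side length of the pooled patch grid (assumed default)
        self.local_weight = local_weight  # blend factor between local and global (assumed default)
        pad = kernel_size // 2
        # ECA-style 1D convolutions that mix neighbouring channels.
        self.conv_local = nn.Conv1d(1, 1, kernel_size, padding=pad, bias=False)
        self.conv_global = nn.Conv1d(1, 1, kernel_size, padding=pad, bias=False)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        b, c, h, w = x.shape
        s = self.local_size

        # Local context: average-pool the feature map to an s x s grid of patches.
        local = F.adaptive_avg_pool2d(x, s)        # (b, c, s, s)
        # Global context: a single descriptor per channel.
        glob = F.adaptive_avg_pool2d(local, 1)     # (b, c, 1, 1)

        # Local branch: 1D conv along the channel axis, once per patch.
        loc = local.permute(0, 2, 3, 1).reshape(b * s * s, 1, c)
        loc = self.conv_local(loc).reshape(b, s, s, c).permute(0, 3, 1, 2)  # (b, c, s, s)

        # Global branch: 1D conv along the channel axis of the global descriptor.
        glo = self.conv_global(glob.reshape(b, 1, c)).reshape(b, c, 1, 1)

        # Blend the two attention maps; the global map broadcasts over the patch grid.
        att = self.local_weight * torch.sigmoid(loc) + (1 - self.local_weight) * torch.sigmoid(glo)

        # Resize the attention map to the input resolution and reweight the features.
        att = F.adaptive_avg_pool2d(att, (h, w))
        return x * att


x = torch.randn(2, 16, 20, 20)
y = MixedLocalChannelAttentionSketch()(x)
print(y.shape)  # torch.Size([2, 16, 20, 20])
```

The output has the same shape as the input, so a module of this kind can be placed after a convolutional block without changing the surrounding layers.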

- [Chinese Interpretation of Mixed Local Channel Attention](Chinese Interpretation Link) [TODO: Will be written and updated if necessary]

If this project and paper have been helpful to you, please cite the following paper:

@article{WAN2023106442,
  title = {Mixed local channel attention for object detection},
  journal = {Engineering Applications of Artificial Intelligence},
  volume = {123},
  pages = {106442},
  year = {2023},
  issn = {0952-1976},
  doi = {https://doi.org/10.1016/j.engappai.2023.106442},
  url = {https://www.sciencedirect.com/science/article/pii/S0952197623006267},
  author = {Dahang Wan and Rongsheng Lu and Siyuan Shen and Ting Xu and Xianli Lang and Zhijie Ren},
}

As a formatted citation, for example:

D. Wan, R. Lu, S. Shen, T. Xu, X. Lang, Z. Ren. (2023). Mixed local channel attention for object detection. Engineering Applications of Artificial Intelligence, 123, 106442.

- Codebase using YOLOv5 framework

- References for GradCAM visualization and partial modules

- ECA implementation

- SqueezeNet repository

- Video tutorial for GradCAM visualization (No need to modify source code) (YOLOv5, YOLOv7, YOLOv8)

- Explanation video for GradCAM principle

Thank you for your interest in and support of this project. The author strives to provide the best quality and service, but there is always room for improvement. This project is currently maintained by a single individual and may contain errors, so if you find any issues or have any suggestions, please let us know.

Other open-source projects will be organized and released gradually. Please check the author's homepage for future downloads.

- README.md file addition (completed)

- Heatmap visualization section addition, yolo-gradcam (completed). Adapted from the objectdetection_script open-source project; detailed tutorials are in the link. Place yolov5_headmap.py in the project root directory to use it; the YOLOv7 and YOLOv8 scripts work the same way.

- Project environment configuration (the MLCA module is plug-and-play; the entire project is based on YOLOv5-6.1; see README-YOLOv5.md and requirements.txt for configuration)

- Folder correspondence explanation (folder layout is consistent with YOLOv5-6.1; no hyperparameter changes) (TODO: Detailed explanation)

- Innovation points summary and code implementation (TODO)

- Paper figures (due to journal copyright issues, no PPT source files are provided, apologies):
  - Diagrams, network structure visuals, flowcharts: PPT (personal choice, can also use Visio, Edraw, AI, etc.)
  - Experimental comparisons: Origin (Matlab, Python, R, Excel all applicable)