Source code repository for the paper Zero-Shot Information Extraction as a Unified Text-to-Triple Translation (EMNLP 2021).

git clone --recursive git@github.com:cgraywang/deepex.git

cd ./deepex

conda create --name deepex python=3.7 -y

conda activate deepex

pip install -r requirements.txt

pip install -e .

Requires PyTorch 1.5.1 or later with CUDA support; PyTorch 1.7.1 with CUDA 10.1 has been tested. See https://pytorch.org/get-started/locally/ for PyTorch installation instructions.
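Before running any script, it can help to confirm that the installed PyTorch meets the requirement above. The following is a minimal sketch, not part of the repository: the helper names (parse_version, meets_requirement, report) are hypothetical, and the check compares only the numeric major.minor.patch prefix, ignoring local suffixes such as +cu101.

```python
import re
from typing import Tuple

# Minimum PyTorch version stated in this README.
MIN_TORCH: Tuple[int, int, int] = (1, 5, 1)


def parse_version(version: str) -> Tuple[int, int, int]:
    """Parse the leading major.minor[.patch] of a string like '1.7.1+cu101'."""
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    major, minor, patch = match.groups()
    return int(major), int(minor), int(patch or 0)


def meets_requirement(version: str, minimum: Tuple[int, int, int] = MIN_TORCH) -> bool:
    """Tuple comparison, so '1.10.0' correctly counts as newer than '1.5.1'."""
    return parse_version(version) >= minimum


def report() -> str:
    """Summarize the local PyTorch install without raising if it is missing."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"
    return (
        f"torch {torch.__version__} "
        f"(meets >= 1.5.1: {meets_requirement(torch.__version__)}), "
        f"CUDA build: {torch.version.cuda}, "
        f"CUDA available: {torch.cuda.is_available()}, "
        f"devices: {torch.cuda.device_count()}"
    )


if __name__ == "__main__":
    print(report())
```

Run it inside the deepex environment. On a machine set up for the default setting described below, the reported device count should be at least 8.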

Add the --prepare-rc-dataset argument when running the scripts in this section to have them prepare the FewRel dataset automatically.

Alternatively, download and prepare the FewRel dataset manually with the following script:

bash scripts/rc/prep_FewRel.sh

The processed data will be stored at data/FewRel/data.jsonl.

TACRED is distributed under license by the LDC, so first download the TACRED dataset from the LDC. Name the downloaded file tacred_LDC2018T24.tgz.
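A misnamed or incomplete download can be caught before preparation with a quick check. This is an illustration only: check_tacred_archive is a hypothetical helper, and because the README does not say where the archive must be placed, the caller passes the path explicitly. It relies only on Python's standard tarfile module.

```python
import tarfile
from pathlib import Path

# File name required by this README.
EXPECTED_NAME = "tacred_LDC2018T24.tgz"


def check_tacred_archive(path: str) -> str:
    """Return "ok" or a short message describing what is wrong with `path`.

    Hypothetical helper: the expected location of the archive is not stated
    in the README, so the caller supplies the full path.
    """
    p = Path(path)
    if p.name != EXPECTED_NAME:
        return f"rename {p.name!r} to {EXPECTED_NAME!r}"
    if not p.is_file():
        return f"{p} does not exist"
    # is_tarfile tries every supported compression, including gzip.
    if not tarfile.is_tarfile(str(p)):
        return f"{p} is not a readable tar archive"
    return "ok"


if __name__ == "__main__":
    import sys

    print(check_tacred_archive(sys.argv[1] if len(sys.argv) > 1 else EXPECTED_NAME))
```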

After downloading the file and naming it correctly, add the --prepare-rc-dataset argument when running the scripts in this section to have them prepare the TACRED dataset automatically.

Alternatively, prepare the TACRED dataset manually with the following script:

bash scripts/rc/prep_TACRED.sh

The processed data will be stored at data/TACRED/data.jsonl.
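Once either preparation script has finished, a lightweight sanity check can confirm that the output file is well-formed. This sketch assumes only that data.jsonl follows the JSON Lines convention (one JSON value per line) and that it is run from the repository root; it does not inspect the record schema, and count_jsonl_records is a hypothetical helper name.

```python
import json
from pathlib import Path

# Output locations stated in this README (relative to the repository root).
PROCESSED_FILES = {
    "FewRel": Path("data/FewRel/data.jsonl"),
    "TACRED": Path("data/TACRED/data.jsonl"),
}


def count_jsonl_records(path: Path) -> int:
    """Count non-empty lines, raising ValueError on the first line that is not JSON."""
    count = 0
    with path.open(encoding="utf-8") as f:
        for lineno, line in enumerate(f, start=1):
            if not line.strip():
                continue  # tolerate blank lines, e.g. a trailing newline
            try:
                json.loads(line)
            except json.JSONDecodeError as err:
                raise ValueError(f"{path}:{lineno}: invalid JSON ({err})") from err
            count += 1
    return count


if __name__ == "__main__":
    for name, path in PROCESSED_FILES.items():
        if path.is_file():
            print(f"{name}: {count_jsonl_records(path)} records in {path}")
        else:
            print(f"{name}: {path} not found (run the preparation script first)")
```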

This section contains the scripts for running each task with the default settings (e.g., the bert-large-cased model on 8 CUDA devices with a per-device batch size of 4).

To modify the settings, please check out this section.

bash tasks/OIE_2016.sh
bash tasks/PENN.sh
bash tasks/WEB.sh
bash tasks/NYT.sh
bash tasks/FewRel.sh
bash tasks/TACRED.sh

General script:

python scripts/manager.py --task=<task_name> <other_args>

The default setting is:

python scripts/manager.py --task=<task_name> --model="bert-large-cased" --beam-size=6 \
    --max-distance=2048 --batch-size-per-device=4 --stage=0 \
    --cuda=0,1,2,3,4,5,6,7

All tasks are already implemented as the .sh files in tasks/ listed above, using the default arguments.
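The default setting can also be driven from Python, for example to sweep beam sizes or to resume a run from a later stage. The sketch below is hypothetical glue code, not part of the repository: build_command and global_batch_size are illustrative names, the defaults are copied from the command above, and --prepare-rc-dataset is assumed to be a bare flag. It also makes the default arithmetic explicit: 8 devices with 4 examples each give 32 examples per step, assuming each listed device processes its own batch.

```python
import shlex
from typing import Dict, List, Sequence

# Default arguments copied from the default-setting command in this README.
DEFAULTS: Dict[str, str] = {
    "model": "bert-large-cased",
    "beam-size": "6",
    "max-distance": "2048",
    "batch-size-per-device": "4",
    "stage": "0",
    "cuda": "0,1,2,3,4,5,6,7",
}


def build_command(task: str, extra_flags: Sequence[str] = (), **overrides: object) -> List[str]:
    """Build an argv list for scripts/manager.py.

    Keyword names use '_' in place of '-' (e.g. beam_size=4 -> --beam-size=4);
    extra_flags are appended verbatim (e.g. "--prepare-rc-dataset").
    """
    args = dict(DEFAULTS)
    for key, value in overrides.items():
        args[key.replace("_", "-")] = str(value)
    argv = ["python", "scripts/manager.py", f"--task={task}"]
    argv += [f"--{key}={value}" for key, value in args.items()]
    argv += list(extra_flags)
    return argv


def global_batch_size(cuda: str, per_device: int) -> int:
    """Examples processed per step, assuming one batch per listed device."""
    return len(cuda.split(",")) * per_device


if __name__ == "__main__":
    # Resume NYT from the ranking stage (--stage=2).
    print(" ".join(shlex.quote(part) for part in build_command("NYT", stage=2)))
    # First TACRED run, letting the script prepare the dataset.
    print(" ".join(shlex.quote(part) for part in
                   build_command("TACRED", extra_flags=["--prepare-rc-dataset"])))
    print(global_batch_size(DEFAULTS["cuda"], int(DEFAULTS["batch-size-per-device"])))
```

To launch a run, pass the returned list to subprocess.run(cmd, check=True) from the repository root. Building an argv list rather than a single string avoids shell quoting issues.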

The following are the most important command-line arguments for the scripts/manager.py script:

- --task: The task to run. Supported tasks are OIE_2016, WEB, NYT, PENN, FewRel and TACRED.
- --model: The pre-trained model used to generate the attention matrices on which beam search is performed. Supported models are bert-base-cased and bert-large-cased.
- --beam-size: The beam size used during beam search.
- --batch-size-per-device: The batch size on a single device.
- --stage: Run the task starting from an intermediate stage:
  - --stage=0: data preparation and beam search
  - --stage=1: post-processing
  - --stage=2: ranking
  - --stage=3: evaluation
- --prepare-rc-dataset: If true, automatically run the relation classification dataset preparation scripts. Turn this argument on only for relation classification tasks (i.e., FewRel and TACRED).
- --cuda: The CUDA GPU devices to use.
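The constraints above can be checked before launching a long run. This is a hypothetical pre-flight check, not the argument parsing in scripts/manager.py (which may accept other values or enforce rules differently): the supported values are copied from the argument descriptions, and stages_to_run assumes that starting at a stage also runs every later stage.

```python
from typing import List

# Values listed in the argument descriptions of this README.
SUPPORTED_TASKS = {"OIE_2016", "WEB", "NYT", "PENN", "FewRel", "TACRED"}
SUPPORTED_MODELS = {"bert-base-cased", "bert-large-cased"}
RC_TASKS = {"FewRel", "TACRED"}  # relation classification tasks
STAGES = ["data preparation and beam search", "post-processing", "ranking", "evaluation"]


def validate(task: str, model: str = "bert-large-cased", stage: int = 0,
             prepare_rc_dataset: bool = False, cuda: str = "0") -> List[str]:
    """Return a list of problems with an argument combination (empty if none)."""
    errors = []
    if task not in SUPPORTED_TASKS:
        errors.append(f"unsupported task {task!r}")
    if model not in SUPPORTED_MODELS:
        errors.append(f"unsupported model {model!r}")
    if not 0 <= stage < len(STAGES):
        errors.append(f"stage must be between 0 and {len(STAGES) - 1}, got {stage}")
    if prepare_rc_dataset and task not in RC_TASKS:
        errors.append("--prepare-rc-dataset is only meant for FewRel and TACRED")
    if not all(part.strip().isdigit() for part in cuda.split(",")):
        errors.append(f"--cuda should be comma-separated device ids, got {cuda!r}")
    return errors


def stages_to_run(start: int) -> List[str]:
    """Stages executed when starting at `start`, assuming later stages follow."""
    return STAGES[start:]


if __name__ == "__main__":
    print(validate("NYT", prepare_rc_dataset=True))
    print(stages_to_run(2))
```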

Run python scripts/manager.py -h for the full list.

NOTE

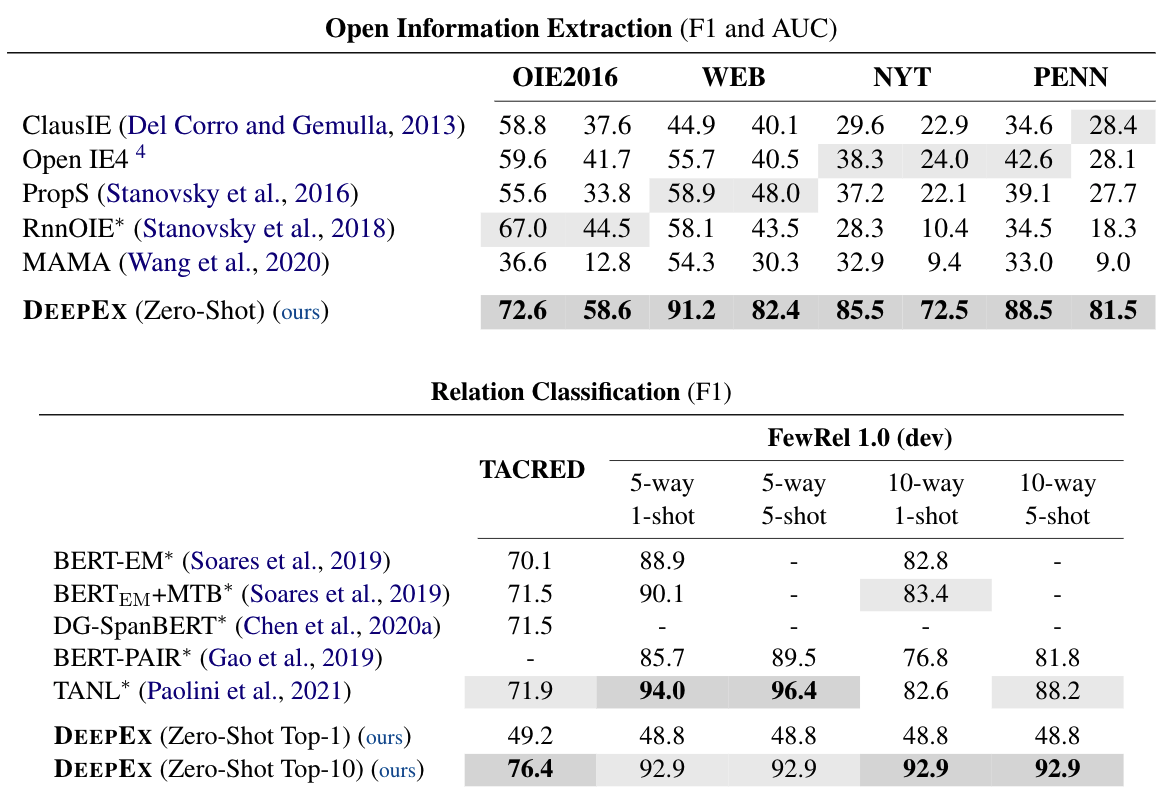

We obtain results that are the same as or better than those reported in the paper. We will release the code and datasets for the factual probe soon!

src/deepex/model/kgm.py implements an extended version of the beam search algorithm proposed in Language Models are Open Knowledge Graphs.
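For readers unfamiliar with the underlying idea, the sketch below shows a simplified, generic form of attention-guided beam search between a head token and a tail token. Starting from the head, each partial path is extended to a later token, scored by summing attention weights, and pruned to the top beam_size candidates; the tokens between head and tail become the candidate relation. This is an illustration under stated assumptions, not the code in src/deepex/model/kgm.py: it omits the extensions implemented there, treats attn as a plain score matrix rather than an actual BERT attention tensor, and the function names and toy values are hypothetical.

```python
import heapq
from typing import List, Sequence, Tuple

Candidate = Tuple[float, Tuple[int, ...]]  # (accumulated score, token positions)


def attention_beam_search(attn: Sequence[Sequence[float]], head: int, tail: int,
                          beam_size: int) -> List[Candidate]:
    """Find high-scoring token paths from `head` to `tail`.

    attn[i][j] is read as the score for stepping from token i to token j;
    paths only move rightwards, and a path's score is the sum of its steps.
    """
    if not 0 <= head < tail < len(attn):
        raise ValueError("expected 0 <= head < tail < number of tokens")
    beam: List[Candidate] = [(0.0, (head,))]
    finished: List[Candidate] = []
    while beam:
        expanded: List[Candidate] = []
        for score, path in beam:
            last = path[-1]
            for nxt in range(last + 1, tail + 1):
                cand = (score + attn[last][nxt], path + (nxt,))
                if nxt == tail:
                    finished.append(cand)  # reaching the tail completes a path
                else:
                    expanded.append(cand)  # otherwise keep extending it
        # Keep only the top `beam_size` partial paths for the next step.
        beam = heapq.nlargest(beam_size, expanded, key=lambda c: c[0])
    return heapq.nlargest(beam_size, finished, key=lambda c: c[0])


def relation_tokens(path: Tuple[int, ...], tokens: Sequence[str]) -> str:
    """Tokens strictly between head and tail form the candidate relation."""
    return " ".join(tokens[i] for i in path[1:-1])


if __name__ == "__main__":
    tokens = ["Dylan", "is", "a", "songwriter"]
    toy_attn = [  # made-up scores, for illustration only
        [0.0, 0.5, 0.125, 0.25],
        [0.0, 0.0, 0.5, 0.125],
        [0.0, 0.0, 0.0, 0.75],
        [0.0, 0.0, 0.0, 0.0],
    ]
    for score, path in attention_beam_search(toy_attn, head=0, tail=3, beam_size=2):
        print(score, relation_tokens(path, tokens))
```

Restricting steps to later positions guarantees termination, because every extension moves strictly closer to the tail.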

@inproceedings{wang-etal-2021-deepex,
    title = "Zero-Shot Information Extraction as a Unified Text-to-Triple Translation",
    author = "Chenguang Wang and Xiao Liu and Zui Chen and Haoyun Hong and Jie Tang and Dawn Song",
    booktitle = "Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing",
    year = "2021",
    publisher = "Association for Computational Linguistics"
}

@article{wang-etal-2020-language,
    title = "Language Models are Open Knowledge Graphs",
    author = "Chenguang Wang and Xiao Liu and Dawn Song",
    journal = "arXiv preprint arXiv:2010.11967",
    year = "2020"
}