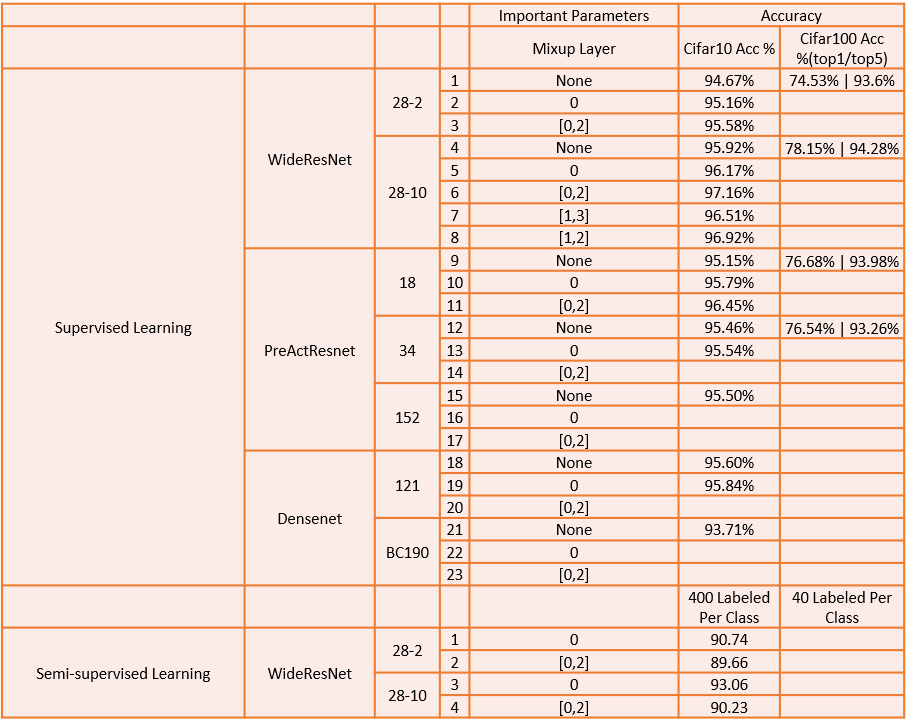

In this project, we implement the mixup [1] and manifold mixup [2] algorithms for supervised and semi-supervised tasks on three datasets (CIFAR-10, CIFAR-100 and SVHN) with three network architectures: WideResNet [3], PreActResNet [4] and DenseNet [5].
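The core idea of both algorithms can be sketched without a deep-learning framework. The NumPy sketch below is only an illustration, not the project's PyTorch code. The function names (`sample_lambda`, `mix`, `forward_with_mixup`, `soft_cross_entropy`) and the toy two-block network are hypothetical. Input mixup forms a convex combination of two training examples and of their one-hot labels, with a weight λ drawn from Beta(α, α). Manifold mixup applies the same combination to the hidden representation at a randomly chosen block; mixing at block 0 reduces to input mixup.

```python
import numpy as np


def sample_lambda(alpha, rng):
    # mixup draws the mixing weight from Beta(alpha, alpha); alpha <= 0 turns mixing off
    return float(rng.beta(alpha, alpha)) if alpha > 0 else 1.0


def mix(h, y_onehot, lam, perm):
    # Convex combination of every example with a shuffled partner,
    # applied identically to the features and to the one-hot labels
    h_mixed = lam * h + (1.0 - lam) * h[perm]
    y_mixed = lam * y_onehot + (1.0 - lam) * y_onehot[perm]
    return h_mixed, y_mixed


def forward_with_mixup(layers, x, y_onehot, alpha, rng, mix_layer=0):
    """Apply `layers` in order, mixing the batch right before block `mix_layer`.

    mix_layer == 0 mixes the raw inputs (input mixup); mix_layer == k > 0 mixes
    the hidden representation produced by the first k blocks (manifold mixup).
    """
    if not 0 <= mix_layer < len(layers):
        raise ValueError("mix_layer must index one of the layers")
    lam = sample_lambda(alpha, rng)
    perm = rng.permutation(x.shape[0])
    h, y_mixed = x, y_onehot
    for k, layer in enumerate(layers):
        if k == mix_layer:
            h, y_mixed = mix(h, y_onehot, lam, perm)
        h = layer(h)
    return h, y_mixed, lam


def soft_cross_entropy(logits, y_soft):
    # Cross-entropy against mixed (soft) targets; because cross-entropy is linear
    # in the target, this equals lam * CE(y_i) + (1 - lam) * CE(y_j)
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-(y_soft * log_probs).sum(axis=1).mean())


# Toy two-block network with fixed random weights, only for illustration
rng = np.random.default_rng(0)
W1 = rng.normal(size=(4, 8))
W2 = rng.normal(size=(8, 3))
layers = [lambda h: np.maximum(h @ W1, 0.0), lambda h: h @ W2]

x = rng.normal(size=(5, 4))
y = np.eye(3)[[0, 1, 2, 0, 1]]
k = int(rng.integers(0, len(layers)))  # manifold mixup: pick a random block per batch
logits, y_mix, lam = forward_with_mixup(layers, x, y, alpha=2.0, rng=rng, mix_layer=k)
loss = soft_cross_entropy(logits, y_mix)
```

In a real network, the same permutation and λ are applied to a mini-batch inside the model's forward pass, and the loss is back-propagated as usual. Setting `alpha=0` recovers ordinary empirical risk minimization.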

The implementation nearly reproduces the results reported in the original papers, and the main results are listed below. Parameter details and some tricks that need attention are discussed in the remaining sections.

Requirements:

- python >= 3.5
- PyTorch >= 1.1.0
- torchvision >= 0.2.1
- pillow
- numpy
- scikit-learn

The CIFAR-10 and CIFAR-100 datasets should be stored in the `cifar` folder with the root

`path.join(basepath, "dataset", "cifar")`

The SVHN dataset should be stored in the `svhn` folder with the root

`path.join(basepath, "dataset", "svhn")`

Here `basepath` is an arbitrary path under which you store the datasets.
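The folder layout can be expressed as a small path helper. This is a sketch under the assumption that the dataset names match the values accepted by the `dataset` parameter (`Cifar10`, `Cifar100`, `SVHN`); `dataset_root` and `DATASET_FOLDERS` are hypothetical names, not part of the project.

```python
import os

# Hypothetical mapping from dataset name to its folder:
# CIFAR-10 and CIFAR-100 share "cifar", SVHN lives in "svhn"
DATASET_FOLDERS = {"Cifar10": "cifar", "Cifar100": "cifar", "SVHN": "svhn"}


def dataset_root(basepath, dataset):
    """Return the directory where `dataset` is expected under `basepath`."""
    if dataset not in DATASET_FOLDERS:
        raise ValueError("unknown dataset %r; expected one of %s"
                         % (dataset, sorted(DATASET_FOLDERS)))
    return os.path.join(basepath, "dataset", DATASET_FOLDERS[dataset])


print(dataset_root("/data", "Cifar100"))  # /data/dataset/cifar on POSIX
```

`%`-formatting is used instead of f-strings so that the sketch also runs on Python 3.5.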

The hyper-parameters and the corresponding usage commands are listed in detail in the `TrainDetail.xlsx` file.

Three parameters need particular attention:

- `basepath`: the arbitrary path under which you store the datasets. Training logs are also stored under `basepath`. For example, the supervised training record of Cifar10 (written with TensorBoard) is stored under `path.join(basepath, "Cifar10")`, while the semi-supervised training record is stored under `path.join(basepath, "Cifar10-SSL")`.
- `dataset`: must be one of `Cifar10`, `Cifar100` and `SVHN`.
- `device_id`: the IDs of the available GPUs (e.g., `"0,1"`).
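A sketch of how these three parameters might be consumed follows. It assumes two things not stated above. First, the `<dataset>` / `<dataset>-SSL` log naming shown for Cifar10 applies to every dataset. Second, `device_id` is passed to CUDA through the `CUDA_VISIBLE_DEVICES` environment variable. The helpers `log_dir` and `select_gpus` are hypothetical.

```python
import os

VALID_DATASETS = ("Cifar10", "Cifar100", "SVHN")


def log_dir(basepath, dataset, semi_supervised=False):
    # Supervised runs log to <basepath>/<dataset>, semi-supervised runs to
    # <basepath>/<dataset>-SSL (pattern assumed to hold for every dataset)
    if dataset not in VALID_DATASETS:
        raise ValueError("dataset must be one of %s" % (VALID_DATASETS,))
    return os.path.join(basepath, dataset + ("-SSL" if semi_supervised else ""))


def select_gpus(device_id):
    # Assumption: device_id is handed to CUDA through CUDA_VISIBLE_DEVICES,
    # which only takes effect if set before CUDA is initialised in the process
    os.environ["CUDA_VISIBLE_DEVICES"] = device_id
    return [int(i) for i in device_id.split(",") if i.strip()]


print(log_dir("/runs", "Cifar10", semi_supervised=True))
print(select_gpus("0,1"))  # [0, 1]
```

The returned log path could then be passed as `log_dir` to a TensorBoard writer such as `torch.utils.tensorboard.SummaryWriter`.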

Complete the experiments and fill in the blanks in `TrainDetail.xlsx`.

[1] Zhang, Hongyi, et al. "mixup: Beyond empirical risk minimization." arXiv preprint arXiv:1710.09412 (2017).

[2] Verma, Vikas, et al. "Manifold mixup: Encouraging meaningful on-manifold interpolation as a regularizer." arXiv preprint arXiv:1806.05236 (2018).

[3] Zagoruyko, Sergey, and Nikos Komodakis. "Wide residual networks." arXiv preprint arXiv:1605.07146 (2016).

[4] He, Kaiming, et al. "Identity mappings in deep residual networks." European Conference on Computer Vision. Springer, Cham, 2016.

[5] Huang, Gao, et al. "Densely connected convolutional networks." Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2017.