The repo contains Kubernetes deployment files and documentation for deploying cloud-native foundation model (FM) systems, including the BLOOM inference server and the Stable Diffusion web UI, and for evaluating their energy consumption.

Prerequisites:

- Kubernetes v1.27 clusters on GPU servers, with root access to the GPU nodes.

- NVIDIA GPU driver installed.

- NVIDIA GPU Operator installed.

- kube-prometheus deployed.

- NVIDIA DCGM Exporter deployed.

- kubectl access to the clusters from the load-testing server.

- Python 3.9 installed on the load-testing server.
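The Python and tool requirements above can be checked on the load-testing server before starting. The following is a minimal sketch that is not part of this repo: check_prerequisites is a hypothetical helper, and the tool list (kubectl and screen, both used in the steps below) is an assumption you may want to extend.

```python
import shutil
import sys

# Assumption: the command-line tools this walkthrough runs on the load-testing server.
REQUIRED_TOOLS = ("kubectl", "screen")
# Minimum Python version listed in the prerequisites.
MIN_PYTHON = (3, 9)


def check_prerequisites(tools=REQUIRED_TOOLS, min_python=MIN_PYTHON):
    """Hypothetical helper: return a list of problems; an empty list means every check passed."""
    problems = []
    if sys.version_info[:2] < tuple(min_python):
        found = f"{sys.version_info.major}.{sys.version_info.minor}"
        problems.append(f"Python {min_python[0]}.{min_python[1]}+ required, found {found}")
    for tool in tools:
        # shutil.which returns None when the executable is not on PATH.
        if shutil.which(tool) is None:
            problems.append(f"'{tool}' not found on PATH")
    return problems
```

For example, print(check_prerequisites() or "all prerequisites found") lists anything that is missing.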

- We use two existing open-source foundation model servers for deployment.

- The first is the BLOOM inference server. BLOOM (BigScience Large Open-science Open-access Multilingual Language Model) was developed by the BigScience Workshop. Its architecture is essentially similar to GPT-3 (an autoregressive model for next-token prediction). BLOOM was trained on 46 natural languages and 13 programming languages.

- The second is the Stable Diffusion server. Stable Diffusion, developed by Ommer Lab, is a latent text-to-image diffusion model trained on 512x512 images from a subset of the LAION-5B database. It generates images from text prompts.

- Build the container image for the BLOOM inference server.

a) Clone the open-source BLOOM inference server code.

> git clone https://github.com/huggingface/transformers-bloom-inference

> cd transformers-bloom-inference

b) Build your own Docker image for the BLOOM inference server and push it to your own image repository. Replace <img_repo> with your own repository.

> docker build -f Dockerfile -t <img_repo>/bloom:latest .

> docker push <img_repo>/bloom:latest

- Build the container image for the Stable Diffusion server.

a) Similarly, clone the open-source Docker setup for the Stable Diffusion web UI.

> git clone https://github.com/AbdBarho/stable-diffusion-webui-docker.git

b) Modify the provided Dockerfile to enable API access to the Stable Diffusion server.

> cd stable-diffusion-webui-docker

> vi services/AUTOMATIC1111/Dockerfile

Add the environment variable COMMANDLINE_ARGS=--api to enable API serving, and modify the CMD so that it also passes ${COMMANDLINE_ARGS} to webui.py:

ENV COMMANDLINE_ARGS=--api

EXPOSE 7860

ENTRYPOINT ["/docker/entrypoint.sh"]

CMD python -u webui.py --listen --port 7860 ${CLI_ARGS} ${COMMANDLINE_ARGS}

c) Build and push your own Stable Diffusion server image. Replace <img_repo> with your own repository.

> docker build -f services/AUTOMATIC1111/Dockerfile -t <img_repo>/stable-diffusion:latest services/AUTOMATIC1111

> docker push <img_repo>/stable-diffusion:latest

- Clone this repo.

> git clone https://github.com/wangchen615/OSSNA23Demo.git

> cd OSSNA23Demo

- Deploy the manifests for both the BLOOM and Stable Diffusion servers; each server is allocated one GPU.

> kubectl create -f manifests/bloom-server.yaml

> kubectl create -f manifests/stable-diffusion.yaml

- Forward the ports of both FM services, the Prometheus endpoint, and Grafana to localhost on the load-testing server for load testing. Run each port-forward in its own screen session, then detach from the session with Ctrl+A followed by D.

a) BLOOM Server

> screen -S bloom-server

> kubectl port-forward svc/bloom-service 5001

b) Stable Diffusion server

> screen -S stable-diffusion

> kubectl port-forward svc/stable-diffusion 7860

c) Prometheus endpoint

> screen -S prometheus

> kubectl --namespace monitoring port-forward svc/prometheus-k8s 9090

d) Grafana endpoint

> screen -S grafana

> kubectl --namespace monitoring port-forward svc/grafana 3000

- Import the NVIDIA DCGM Exporter dashboard into Grafana.
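Once the four port-forwards above are running, a quick reachability check can confirm that each local port accepts connections before any load is generated. This is a minimal sketch using only the Python standard library; check_ports is a hypothetical helper, and the port map simply mirrors the kubectl port-forward commands above.

```python
import socket

# Local ports opened by the kubectl port-forward commands above.
FORWARDED_PORTS = {"bloom": 5001, "stable-diffusion": 7860, "prometheus": 9090, "grafana": 3000}


def check_ports(ports=FORWARDED_PORTS, host="localhost", timeout=1.0):
    """Return {name: True/False} depending on whether a TCP connection to each port succeeds."""
    status = {}
    for name, port in ports.items():
        try:
            # create_connection raises OSError (e.g. ConnectionRefusedError) if nothing listens.
            with socket.create_connection((host, port), timeout=timeout):
                status[name] = True
        except OSError:
            status[name] = False
    return status
```

A False entry usually means the corresponding port-forward has exited; reattach to its session (for example, screen -r grafana) to see the error.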

- Open the FM service UIs for text or image generation.

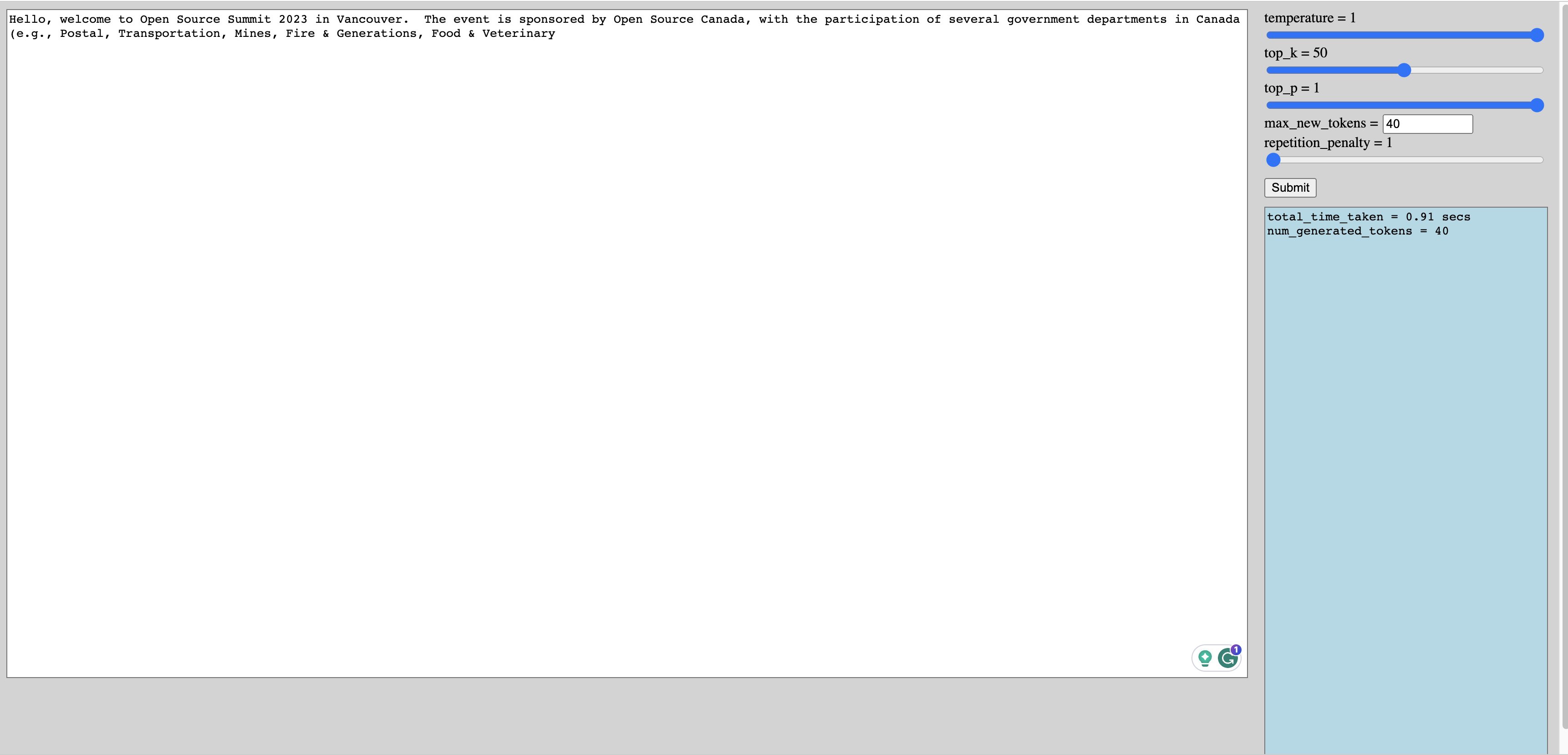

a) On the load-testing server, open http://localhost:5001 in your browser to try BLOOM text generation. Try the following prompt:

Hello, welcome to Open Source Summit 2023 in Vancouver.

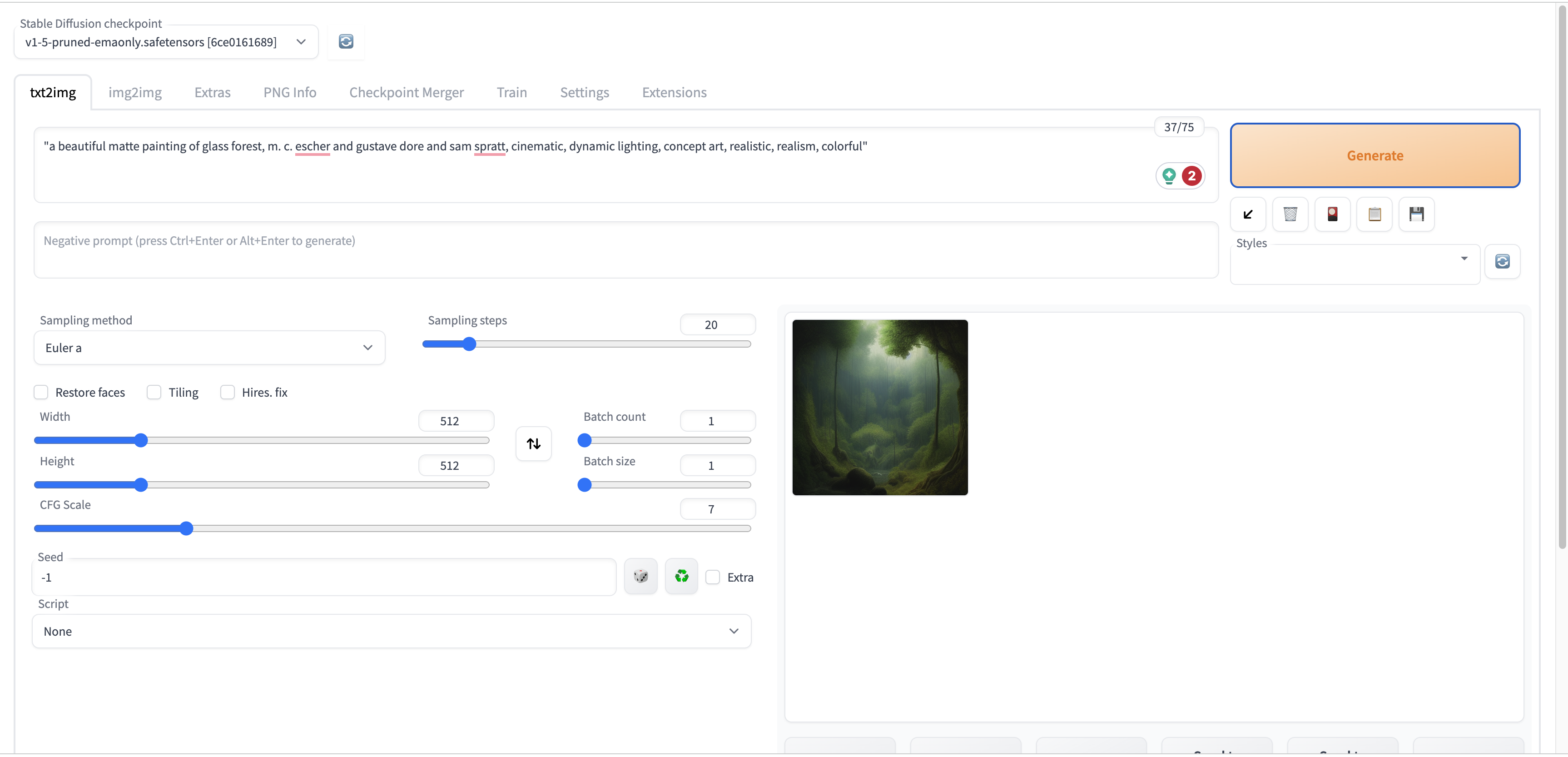

b) On the load-testing server, open http://localhost:7860 in your browser to try Stable Diffusion image generation. Try the following prompt:

"a beautiful matte painting of glass forest, m. c. escher and gustave dore and sam spratt, cinematic, dynamic lighting, concept art, realistic, realism, colorful"

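Because the Dockerfile change above starts the web UI with --api, the same prompt can also be sent programmatically. The sketch below assumes the AUTOMATIC1111 web UI txt2img endpoint (POST /sdapi/v1/txt2img, which returns base64-encoded images in an "images" list) is reachable through the port-forward on localhost:7860. The helper names, the default steps and image size, and the 600-second timeout are illustrative choices, not taken from this repo's load-testing scripts.

```python
import base64
import json
import urllib.request

PROMPT = ("a beautiful matte painting of glass forest, m. c. escher and gustave dore "
          "and sam spratt, cinematic, dynamic lighting, concept art, realistic, realism, colorful")


def build_txt2img_request(prompt, host="localhost", port=7860, steps=20, width=512, height=512):
    """Build a POST request for the web UI txt2img endpoint (requires the --api flag)."""
    payload = {"prompt": prompt, "steps": steps, "width": width, "height": height}
    return urllib.request.Request(
        f"http://{host}:{port}/sdapi/v1/txt2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def decode_images(response_body):
    """Decode the base64 images of a txt2img JSON response into raw bytes."""
    images = json.loads(response_body)["images"]
    # Tolerate an optional "data:image/png;base64," prefix by keeping only the text after a comma.
    return [base64.b64decode(img.split(",", 1)[-1]) for img in images]


def txt2img(prompt, **kwargs):
    """Send one generation request and return the decoded images."""
    request = build_txt2img_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=600) as response:
        return decode_images(response.read())
```

For example, images = txt2img(PROMPT) returns the generated images as bytes, and images[0] can be written to a .png file.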
- Run the FM load-generator scripts to generate load on both FM services.

a) Create and activate a Python virtual environment for load testing.

> cd OSSNA23Demo

> python -m venv /path/to/new/virtual/bloom-load-generator

> source /path/to/new/virtual/bloom-load-generator/bin/activate

> pip install -r requirements.txt

b) Run the load-testing script for Stable Diffusion queries.

> screen -S stable-diffusion-load

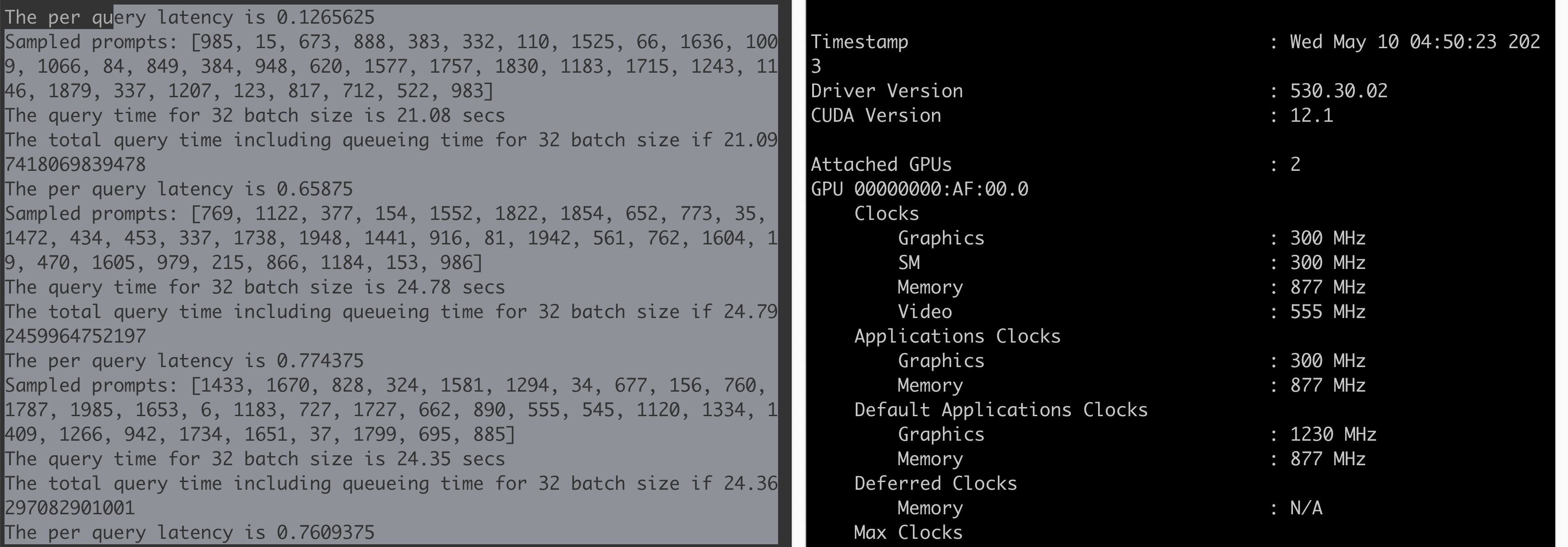

> python3 stablediffusion-generate-query.py --host localhost --port 7860 --exp-name "oss-demo-SD" --metric-endpoint http://localhost:9090 --num-tests 20

Then run the load-testing script for BLOOM queries.

> screen -S bloom-load

> python3 bloom-generate-fixed-token-query.py --host localhost --port 5001 --exp-name "oss-demo-bloom" --metric-endpoint http://localhost:9090 --num-tests 20

c) Observe the changes in GPU energy consumption, GPU temperature, and GPU frequency on the NVIDIA DCGM Exporter dashboard in Grafana.
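The energy consumption shown on the dashboard can also be computed directly from Prometheus. This is a minimal sketch, assuming the DCGM exporter's DCGM_FI_DEV_POWER_USAGE metric (instantaneous power in watts, labelled per GPU with gpu) is scraped by the Prometheus instance forwarded to localhost:9090. It pulls samples with the Prometheus HTTP API (/api/v1/query_range) and integrates power over time with the trapezoidal rule; this is not necessarily how this repo's load-testing scripts compute energy. GPU temperature (DCGM_FI_DEV_GPU_TEMP) and SM clock (DCGM_FI_DEV_SM_CLOCK) can be fetched with the same query_range call.

```python
import json
import time
import urllib.parse
import urllib.request

PROMETHEUS_URL = "http://localhost:9090"  # port-forwarded Prometheus endpoint


def query_range(promql, start, end, step="15s", base_url=PROMETHEUS_URL):
    """Run a Prometheus range query and return the decoded JSON response."""
    params = urllib.parse.urlencode({"query": promql, "start": start, "end": end, "step": step})
    with urllib.request.urlopen(f"{base_url}/api/v1/query_range?{params}", timeout=30) as resp:
        return json.loads(resp.read())


def energy_per_gpu(response):
    """Integrate power samples (watts) over time with the trapezoidal rule.

    Returns {gpu_label: energy_in_joules} for every series in a query_range response.
    Series are keyed by the gpu label only, so on multi-node clusters add the host label too.
    """
    energy = {}
    for series in response["data"]["result"]:
        gpu = series["metric"].get("gpu", "unknown")
        samples = [(float(ts), float(watts)) for ts, watts in series["values"]]
        joules = 0.0
        for (t0, p0), (t1, p1) in zip(samples, samples[1:]):
            joules += (p0 + p1) / 2.0 * (t1 - t0)
        energy[gpu] = joules
    return energy


def recent_gpu_energy(minutes=10, step="15s"):
    """Energy per GPU (joules) over the last `minutes`, e.g. the duration of a load test."""
    end = time.time()
    response = query_range("DCGM_FI_DEV_POWER_USAGE", end - minutes * 60, end, step)
    return energy_per_gpu(response)
```

Dividing joules by 3600 gives watt-hours: a GPU drawing a steady 100 W for 10 minutes uses 60,000 J, about 16.7 Wh.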

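- Tune NVIDIA GPU power capping. Run the nvidia-smi commands in this step and the next one on the GPU node, which requires root access.

a) Show the supported range of GPU power limits.

> nvidia-smi -q -d POWER

b) Configure power capping for the GPU used by the Stable Diffusion server. First find the ID of that GPU, then set the new power limit in watts.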

> sudo nvidia-smi -i GPU_ID -pl NEW_POWER_LIMIT

- Tune NVIDIA GPU graphics clock frequencies.

a) Show the supported memory and graphics clock frequencies.

> nvidia-smi -q -d SUPPORTED_CLOCKS

b) Change the application clock setting for the BLOOM server GPU.

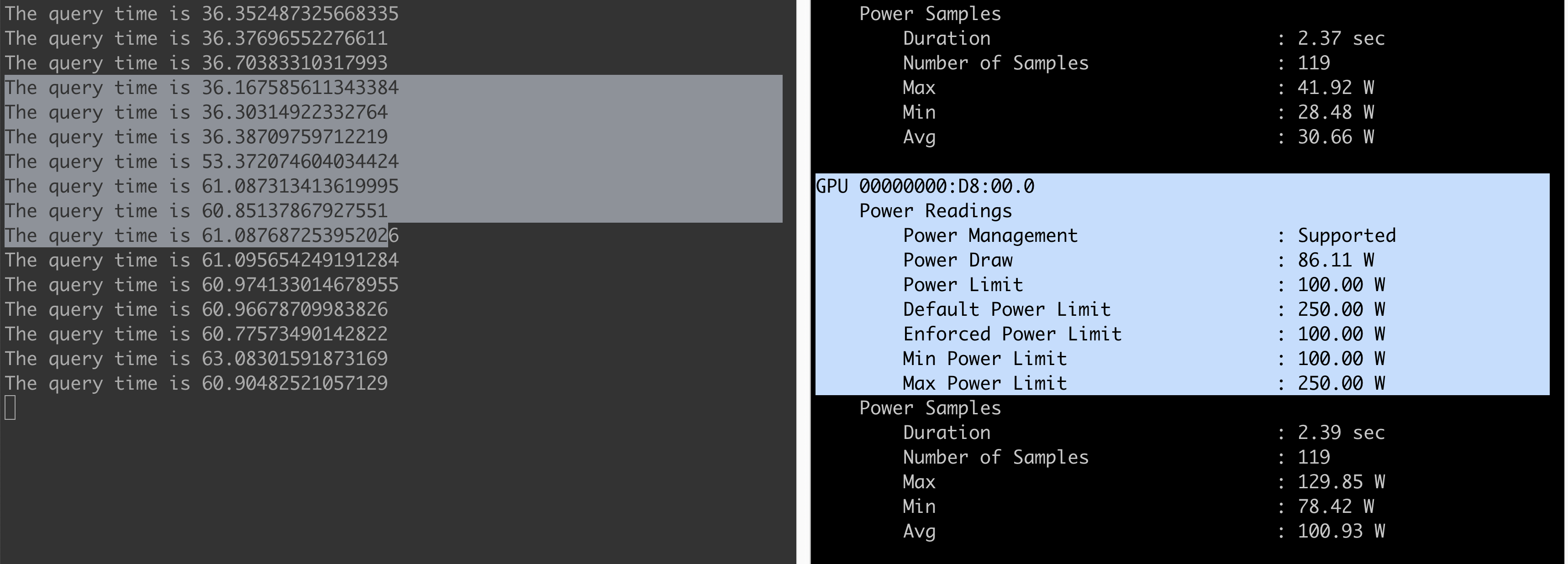

> sudo nvidia-smi -i GPU_ID -ac MEMORY_CLOCK,GRAPHICS_CLOCK

- Take the Stable Diffusion server running on GPU 1 as an example: if we set the power cap to 100 W, the performance of the Stable Diffusion query doubles, as shown in the following screenshot.

- The BLOOM server runs on GPU 0. When we set its graphics clock frequency to 300 MHz, the query response time for a batch size of 32 increases significantly, as shown below.

- From the DCGM dashboard, we can see that the power consumption of GPU 1 is around 100 W and the graphics clock frequency of GPU 0 is around 300 MHz.
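The two tuning steps above can be scripted so that a requested power cap or clock is checked against what the GPU reports before it is applied. The sketch below is illustrative: the helper names are hypothetical, and it assumes nvidia-smi's CSV query interface (--query-gpu=index,power.limit,power.min_limit,power.max_limit and --query-supported-clocks=mem,gr, both with --format=csv,noheader,nounits). Like the commands above, the generated commands need root on the GPU node, and application clocks cannot be changed on every GPU model.

```python
import subprocess


def run_nvidia_smi(args):
    """Run nvidia-smi with the given arguments and return its stdout."""
    return subprocess.run(["nvidia-smi", *args], check=True, capture_output=True, text=True).stdout


def parse_power_limits(csv_line):
    """Parse one line of --query-gpu=index,power.limit,power.min_limit,power.max_limit output.

    Returns (index, current_w, min_w, max_w). GPUs that report "[N/A]" will raise ValueError.
    """
    index, current, low, high = (field.strip() for field in csv_line.split(","))
    return int(index), float(current), float(low), float(high)


def power_limit_command(gpu_index, watts, min_w, max_w):
    """Build the nvidia-smi -pl command, refusing limits outside the supported range."""
    if not min_w <= watts <= max_w:
        raise ValueError(f"{watts} W is outside the supported range [{min_w}, {max_w}] W")
    return ["sudo", "nvidia-smi", "-i", str(gpu_index), "-pl", f"{watts:g}"]


def parse_supported_clocks(csv_text):
    """Parse --query-supported-clocks=mem,gr output into (memory_mhz, graphics_mhz) pairs."""
    pairs = []
    for line in csv_text.strip().splitlines():
        if not line.strip():
            continue
        mem, gr = (int(field) for field in line.split(","))
        pairs.append((mem, gr))
    return pairs


def app_clock_command(gpu_index, supported, memory_mhz, target_graphics_mhz):
    """Pick the supported graphics clock closest to the target for the given memory clock
    and build the nvidia-smi -ac command (memory clock first, then graphics clock)."""
    candidates = [gr for mem, gr in supported if mem == memory_mhz]
    if not candidates:
        raise ValueError(f"memory clock {memory_mhz} MHz is not supported")
    graphics = min(candidates, key=lambda gr: abs(gr - target_graphics_mhz))
    return ["sudo", "nvidia-smi", "-i", str(gpu_index), "-ac", f"{memory_mhz},{graphics}"]
```

After the experiment, sudo nvidia-smi -i GPU_ID -rac resets the application clocks to their defaults.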

- The BLOOM prompts are generated from a Reddit dataset.

- The Stable Diffusion prompts are from the Gustavosta/Stable-Diffusion-Prompts dataset.

- The full demo video is here.

- The slides for the talk are here.