
RLLTE (Long-Term Evolution Project of Reinforcement Learning) is inspired by the Long-Term Evolution (LTE) standard project in telecommunications. It aims to track the latest research progress in reinforcement learning (RL) and to provide stable and efficient baselines. rllte covers the main stages of an RL workflow, including training, evaluation, and deployment.

If you use rllte in your research, please cite this project like this:

```bibtex
@software{rllte,
  author = {Mingqi Yuan, Zequn Zhang, Yang Xu, Shihao Luo, Bo Li, Xin Jin, and Wenjun Zeng},
  title = {RLLTE: Long-Term Evolution Project of Reinforcement Learning},
  url = {https://github.com/RLE-Foundation/rllte},
  year = {2023},
}
```

- Contents
  - Overview
  - Quick Start
  - Implemented Modules
  - Benchmark
  - API Documentation
  - How To Contribute
  - Acknowledgment

For the project tenet, please read Evolution Tenet.

The highlight features of rllte:

- 👨‍✈️ Large language model-empowered copilot;

- ⏱️ Latest algorithms and tricks;

- 📕 Standard and sophisticated modules for redevelopment;

- 🧱 Highly modularized design for complete decoupling of RL algorithms;

- 🚀 Optimized workflow for full hardware acceleration;

- ⚙️ Support custom environments and modules;

- 🖥️ Support multiple computing devices like GPU and NPU;

- 🛠️ Support RL model engineering deployment (TensorRT, CANN, ...);

- 💾 Large number of reusable benchmarks (See rllte-hub);

See the project structure below:

- Agent: RL agents implemented with rllte building blocks.
- Common: Base classes and auxiliary modules.
- Xploit: Modules that focus on exploitation in RL.
  - Encoder: Neural network-based encoders for processing observations.
  - Policy: Policies for interaction and learning.
  - Storage: Storage for collected experiences.
- Xplore: Modules that focus on exploration in RL.
  - Augmentation: PyTorch.nn-like modules for observation augmentation.
  - Distribution: Distributions for sampling actions.
  - Reward: Intrinsic reward modules for enhancing exploration.
- Hub: Fast training API and reusable benchmarks.
- Env: Packaged environments (e.g., Atari games) for fast invocation.
- Evaluation: Reasonable and reliable metrics for algorithm evaluation.
- Pre-training: Methods of pre-training in RL.
- Deployment: Methods of model deployment in RL.

For more detailed descriptions of these modules, see https://docs.rllte.dev/api

- Prerequisites

We currently recommend Python>=3.8. You can create a virtual environment with:

```sh
conda create -n rllte python=3.8
```

- with pip (recommended)

Open up a terminal and install rllte with pip:

```sh
pip install rllte-core # basic installation
pip install rllte-core[envs] # for pre-defined environments
```

- with git

Open up a terminal and clone the repository from GitHub with git:

```sh
git clone https://github.com/RLE-Foundation/rllte.git
```

After that, run the following commands from the repository root to install the package and its dependencies:

```sh
pip install -e . # basic installation
pip install -e .[envs] # for pre-defined environments
```

For more detailed installation instructions, see https://docs.rllte.dev/getting_started.
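After either installation route, a quick way to confirm that the package is visible to the current interpreter is to look it up with the standard library. The sketch below assumes the import name is `rllte`, as used in the examples in this README; the helper `is_installed` is a hypothetical name for illustration and is not part of rllte.

```python
import importlib.util


def is_installed(package: str) -> bool:
    """Return True if a top-level `package` can be found by this interpreter."""
    return importlib.util.find_spec(package) is not None


if __name__ == "__main__":
    # `rllte` is the import name used by the examples in this README.
    status = "found" if is_installed("rllte") else "missing"
    print(f"rllte: {status}")
```

If the check reports `missing`, the usual cause is that pip installed into a different environment than the one running the script.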

For example, to solve a DeepMind Control Suite task with DrQ-v2, it suffices to write a train.py like this:

# import `env` and `agent` api

from rllte.env import make_dmc_env

from rllte.agent import DrQv2

if __name__ == "__main__":

device = "cuda:0"

# create env, `eval_env` is optional

env = make_dmc_env(env_id="cartpole_balance", device=device)

eval_env = make_dmc_env(env_id="cartpole_balance", device=device)

# create agent

agent = DrQv2(env=env,

eval_env=eval_env,

device='cuda',

tag="drqv2_dmc_pixel")

# start training

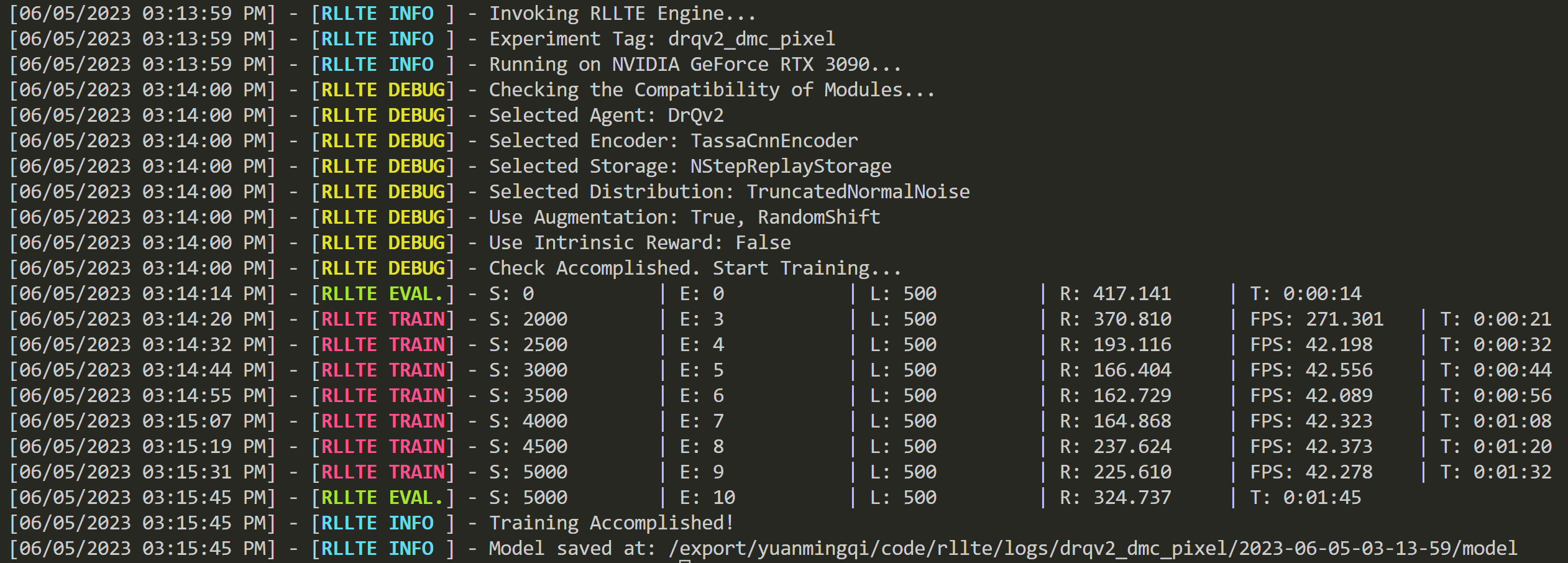

agent.train(num_train_steps=5000)Run train.py and you will see the following output:

Similarly, to train an agent on a HUAWEI NPU, it suffices to set the device to npu:0 instead of cuda:0:

# import `env` and `agent` api

from rllte.env import make_dmc_env

from rllte.agent import DrQv2

if __name__ == "__main__":

device = "npu:0"

# create env, `eval_env` is optional

env = make_dmc_env(env_id="cartpole_balance", device=device)

eval_env = make_dmc_env(env_id="cartpole_balance", device=device)

# create agent

agent = DrQv2(env=env,

eval_env=eval_env,

device='cuda',

tag="drqv2_dmc_pixel")

# start training

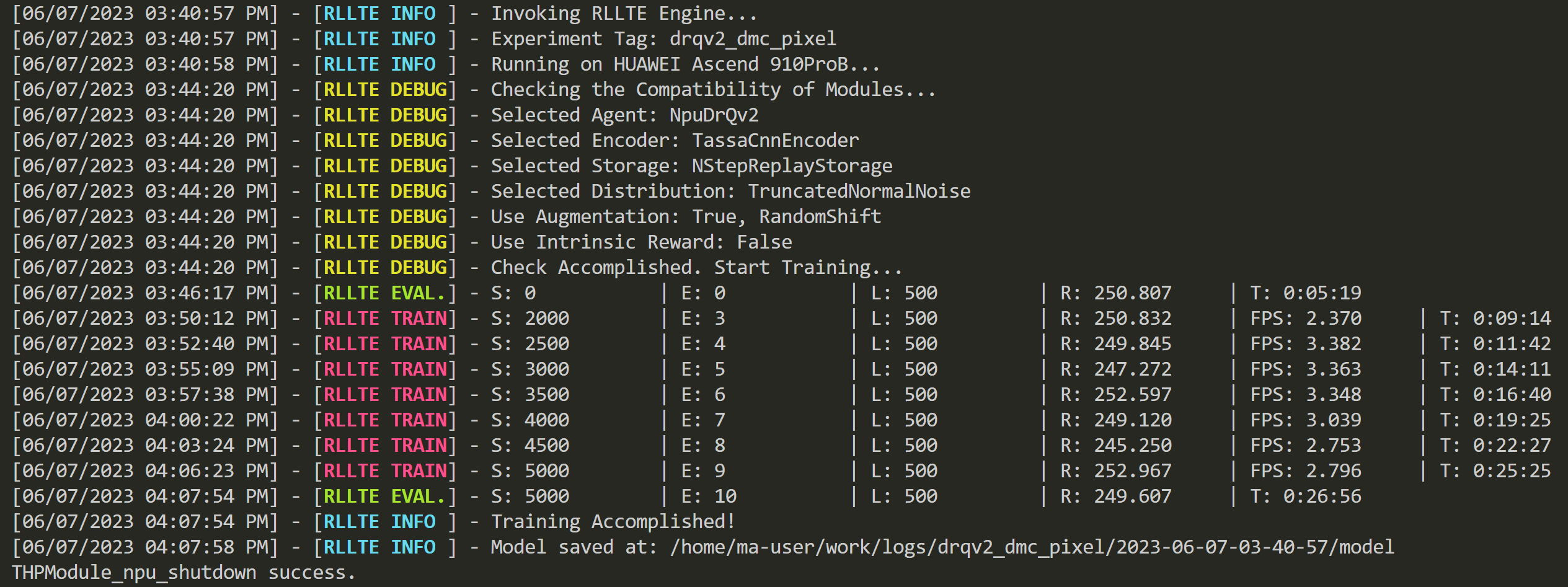

agent.train(num_train_steps=5000)Then you will see the following output:

Please refer to Implemented Modules for NPU compatibility.

For more detailed tutorials, see https://docs.rllte.dev/tutorials.

| Type | Module | Recurrent | Box | Discrete | MultiBinary | Multi Processing | NPU | Paper | Citations |
|---|---|---|---|---|---|---|---|---|---|
| Original | SAC | ❌ | ✔️ | ❌ | ❌ | ❌ | ✔️ | Link | 5077⭐ |
| Original | DDPG | ❌ | ✔️ | ❌ | ❌ | ❌ | ✔️ | Link | 11819⭐ |
| Original | PPO | ❌ | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | Link | 11155⭐ |
| Original | DAAC | ❌ | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | Link | 56⭐ |
| Original | IMPALA | ✔️ | ✔️ | ✔️ | ❌ | ✔️ | ❌ | Link | 1219⭐ |
| Augmented | DrQ-v2 | ❌ | ✔️ | ❌ | ❌ | ❌ | ✔️ | Link | 100⭐ |
| Augmented | DrQ | ❌ | ✔️ | ❌ | ❌ | ❌ | ✔️ | Link | 433⭐ |
| Augmented | DrAC | ❌ | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | Link | 29⭐ |

- DrQ = SAC + Augmentation, DDPG = DrQ-v2 - Augmentation, DrAC = PPO + Augmentation.

- 🐌: Developing.

- NPU: Supports neural-network processing units.
- Recurrent: Supports recurrent neural networks.
- Box: An N-dimensional box that contains every point in the action space.
- Discrete: A list of possible actions, where only one action can be used at each timestep.
- MultiBinary: A list of possible actions, where any combination of actions can be used at each timestep.
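To make the Box, Discrete, and MultiBinary action-space types concrete, here is a minimal stdlib-only sketch that draws one random action of each kind. The function names and bounds are illustrative assumptions; these helpers are hypothetical and are not part of rllte or of any environment library.

```python
import random
from typing import List


def sample_box(low: List[float], high: List[float]) -> List[float]:
    """Box: one real value per dimension, each within [low[i], high[i]]."""
    return [random.uniform(lo, hi) for lo, hi in zip(low, high)]


def sample_discrete(n: int) -> int:
    """Discrete: exactly one of n possible actions per timestep."""
    return random.randrange(n)


def sample_multi_binary(n: int) -> List[int]:
    """MultiBinary: each of n actions is independently on (1) or off (0)."""
    return [random.randint(0, 1) for _ in range(n)]


if __name__ == "__main__":
    random.seed(0)  # fixed seed so repeated runs draw the same actions
    print("Box:        ", sample_box([-1.0, -1.0], [1.0, 1.0]))
    print("Discrete:   ", sample_discrete(4))
    print("MultiBinary:", sample_multi_binary(4))
```

The distinction matters when choosing an algorithm from the table: a module marked only for Box expects continuous action vectors like the first sample, not an index or a bit vector.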

| Module | Remark | Repr. | Visual | Reference |
|---|---|---|---|---|
| PseudoCounts | Count-based exploration | ✔️ | ✔️ | Never Give Up: Learning Directed Exploration Strategies |
| ICM | Curiosity-driven exploration | ✔️ | ✔️ | Curiosity-Driven Exploration by Self-Supervised Prediction |
| RND | Count-based exploration | ❌ | ✔️ | Exploration by Random Network Distillation |
| GIRM | Curiosity-driven exploration | ✔️ | ✔️ | Intrinsic Reward Driven Imitation Learning via Generative Model |
| NGU | Memory-based exploration | ✔️ | ✔️ | Never Give Up: Learning Directed Exploration Strategies |
| RIDE | Procedurally-generated environment | ✔️ | ✔️ | RIDE: Rewarding Impact-Driven Exploration for Procedurally-Generated Environments |
| RE3 | Entropy maximization | ❌ | ✔️ | State Entropy Maximization with Random Encoders for Efficient Exploration |
| RISE | Entropy maximization | ❌ | ✔️ | Rényi State Entropy Maximization for Exploration Acceleration in Reinforcement Learning |
| REVD | Divergence maximization | ❌ | ✔️ | Rewarding Episodic Visitation Discrepancy for Exploration in Reinforcement Learning |

- 🐌: Developing.

- Repr.: The method involves representation learning.
- Visual: The method works well in visual RL.

See Tutorials: Use Intrinsic Reward and Observation Augmentation for usage examples.
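As a rough illustration of what a count-based intrinsic reward does, the sketch below pays a bonus of beta / sqrt(N(s)) on each visit to a state s, so rarely seen states earn more. This is a generic tabular form with hypothetical names (`CountBonus`, `beta`); it is not rllte's PseudoCounts implementation, which, per the table, involves representation learning.

```python
import math
from collections import defaultdict
from typing import Hashable


class CountBonus:
    """Tabular count-based bonus: beta / sqrt(N(s)) for hashable states."""

    def __init__(self, beta: float = 1.0) -> None:
        self.beta = beta
        self.counts = defaultdict(int)  # visit count per state

    def __call__(self, state: Hashable) -> float:
        # Count the visit first, so the first bonus is beta / sqrt(1).
        self.counts[state] += 1
        return self.beta / math.sqrt(self.counts[state])


if __name__ == "__main__":
    bonus = CountBonus(beta=0.5)
    extrinsic = 0.0  # stands in for a sparse environment reward
    for state in ["s0", "s0", "s1", "s0"]:
        total = extrinsic + bonus(state)
        print(f"{state}: total reward = {total:.3f}")
```

The bonus for a state shrinks as its visit count grows, which is the property that pushes an agent toward unvisited states when the environment reward is sparse.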

rllte provides a large number of reusable benchmarks; see https://hub.rllte.dev/ and https://docs.rllte.dev/benchmarks/

View our well-designed documentation: https://docs.rllte.dev/

Contributions to this project are welcome! Before you begin writing code, please read CONTRIBUTING.md for guidance.

This project is supported by The Hong Kong Polytechnic University, Eastern Institute for Advanced Study, and FLW-Foundation. EIAS HPC provides a GPU computing platform, and Ascend Community provides an NPU computing platform for our testing. Some code in this project is borrowed from or inspired by several excellent projects, and we highly appreciate them. See ACKNOWLEDGMENT.md.